Lung segmentation on a chest X-ray dataset

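Introduction

In this assignment you will develop a system that automatically detects bounding boxes around the lungs in chest X-ray images.

For this task we will use a network called [YOLO](https://arxiv.org/pdf/1506.02640.pdf). For details on YOLO (and the more recent YOLOv2 / YOLO9000), see the following papers:

- You Only Look Once: Unified, Real-Time Object Detection (https://arxiv.org/abs/1506.02640)

- YOLO9000: Better, Faster, Stronger (https://arxiv.org/abs/1612.08242)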

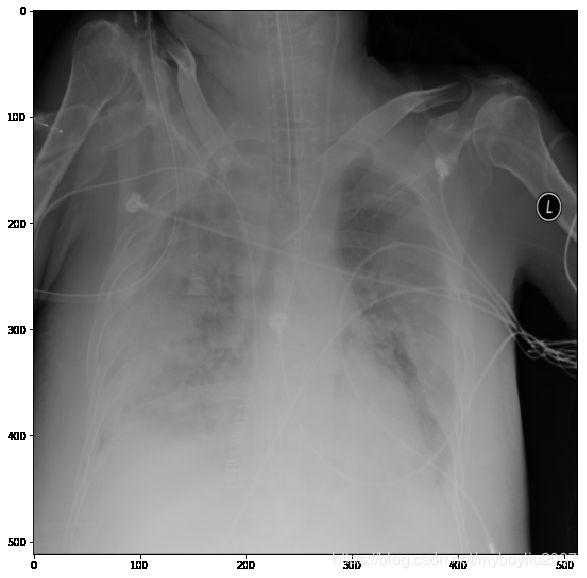

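The chest X-ray is the most common medical imaging exam. It uses a very small dose of X-rays to produce an image of the chest. It is used to evaluate the lungs, heart and chest wall, and can help diagnose shortness of breath, persistent cough, fever, chest pain or injury. It can also help diagnose and monitor the treatment of lung conditions such as pneumonia, emphysema and cancer. Because a chest X-ray is fast and easy to acquire, it is particularly useful in emergency diagnosis and treatment.

Because of the differences in density between air, soft tissue and bone, the lungs appear darker than their surroundings. Brighter regions inside the lungs may indicate pathology.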

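Goals

The goal of this assignment is to become familiar with the YOLO architecture, its loss function and its training procedure, as well as with the kind of output it produces and how to convert that output into actual bounding-box predictions. You will also become familiar with the problem of detecting lungs in chest X-rays, so that in the future you can retrain the same architecture to detect more anatomical structures in chest X-ray images.

To get started with the YOLO architecture, we provide a pre-trained YOLO network that detects only the right lung. You will first run it on some images and then retrain it to detect both the left and the right lung.

The two main tasks of this assignment are:

- Task 1: decode the network output to display the predicted bounding box of the right lung

- Task 2: retrain the network on both lungs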

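Data

The data used in this assignment come from the ChestX-ray14 dataset, which is publicly available at https://nihcc.app.box.com/v/ChestXray-NIHCC. The dataset, released by the NIH, contains 112,120 frontal-view X-ray images of 30,805 unique patients, annotated with up to 14 different thoracic pathology labels extracted from the radiology reports with NLP methods.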

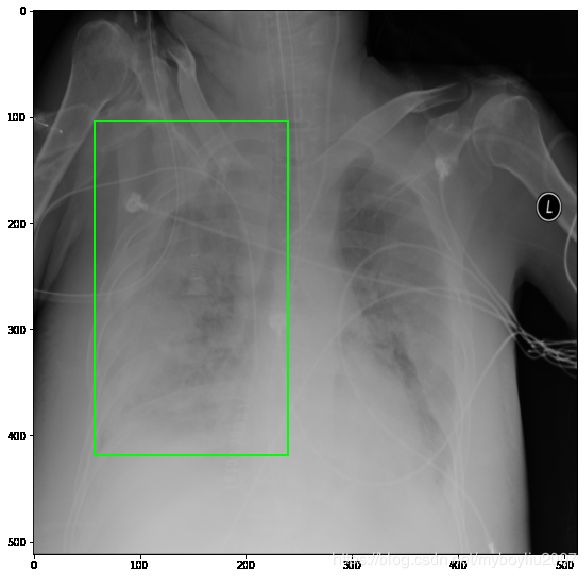

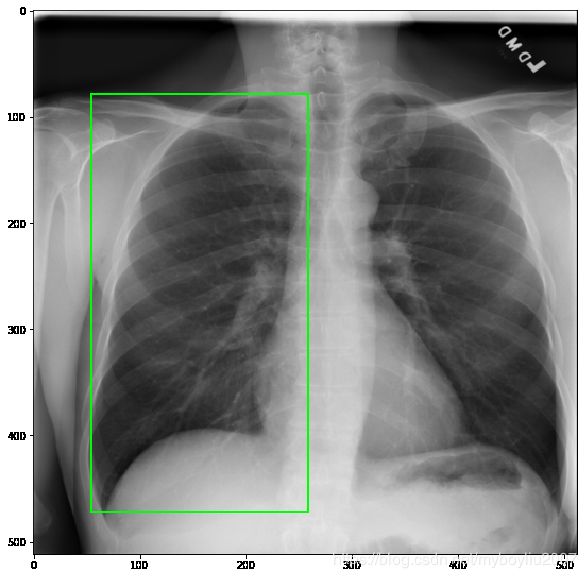

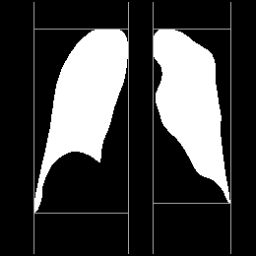

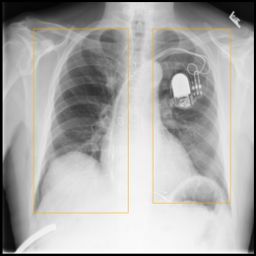

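For this assignment we selected 13,331 chest X-ray images from ChestX-ray14 and generated bounding boxes for the left and right lungs. The box coordinates were extracted from lung segmentations previously obtained in our research group (ChestX-ray14 itself does not include lung segmentations), as shown in the following example:

| chest x-ray image | lung segmentation | bounding box |
|---|---|---|
| | | |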

Bounding-box data are stored in XML format, which is the format read by the YOLO training script used in this assignment (more details on this in the following cells).

An example of the XML file content with the right-lung and left-lung bounding boxes for image "00000004_000" is shown below:

<annotation verified="no">
  <folder>Lungs</folder>
  <filename>00000004_000</filename>
  <source>
    <database>Unknown</database>
  </source>
  <size>
    <width>512</width>
    <height>512</height>
    <depth>1</depth>
  </size>
  <segmented>0</segmented>
  <object>
    <name>RL</name>
    <pose>Unspecified</pose>
    <truncated>0</truncated>
    <difficult>0</difficult>
    <bndbox>
      <xmin>64</xmin>
      <ymin>62</ymin>
      <xmax>256</xmax>
      <ymax>490</ymax>
    </bndbox>
  </object>
  <object>
    <name>LL</name>
    <pose>Unspecified</pose>
    <truncated>0</truncated>
    <difficult>0</difficult>
    <bndbox>
      <xmin>298</xmin>
      <ymin>78</ymin>
      <xmax>450</xmax>
      <ymax>502</ymax>
    </bndbox>
  </object>
</annotation>

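Of all the parameters found in this file, the most important are:

- (width, height): the image size

- (RL, LL): the labels of the right lung and the left lung

- (xmin, ymin, xmax, ymax): the coordinates of the top-left and bottom-right corners of the bounding box

Make sure you understand these parameters now, because they are used throughout this assignment.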
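As a minimal sketch of how these fields can be read, the snippet below parses an annotation with the same layout as the example above using Python's standard `xml.etree.ElementTree`. The helper name `read_boxes` and the shortened inline XML string are illustrative assumptions, not part of the assignment code.

```python
import xml.etree.ElementTree as ET

# Inline annotation with the same layout as the example above (shortened).
EXAMPLE_XML = """
<annotation verified="no">
  <size><width>512</width><height>512</height><depth>1</depth></size>
  <object>
    <name>RL</name>
    <bndbox><xmin>64</xmin><ymin>62</ymin><xmax>256</xmax><ymax>490</ymax></bndbox>
  </object>
  <object>
    <name>LL</name>
    <bndbox><xmin>298</xmin><ymin>78</ymin><xmax>450</xmax><ymax>502</ymax></bndbox>
  </object>
</annotation>
"""

def read_boxes(xml_text):
    """Hypothetical helper: return (width, height) and a dict label -> corner box."""
    root = ET.fromstring(xml_text.strip())
    size = root.find("size")
    width = int(size.find("width").text)
    height = int(size.find("height").text)
    boxes = {}
    for obj in root.findall("object"):
        label = obj.find("name").text
        bnd = obj.find("bndbox")
        # store corners as (xmin, ymin, xmax, ymax) in pixels
        boxes[label] = tuple(int(bnd.find(k).text)
                             for k in ("xmin", "ymin", "xmax", "ymax"))
    return (width, height), boxes

size, boxes = read_boxes(EXAMPLE_XML)
print(size)   # image size in pixels
print(boxes)  # one corner-format box per lung label
```

Keying the boxes by label makes it easy to pick only `RL` for the first task and both labels for the second one.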

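Let's get the pre-trained network weights and the data needed for this assignment. If you are working on your own computer, you have to download the data and the weights.

First, let's import the required libraries. The code in this notebook is based on this GitHub repository: https://github.com/experiencor/basic-yolo-keras. For compatibility, we use the same Python libraries as that code. This is why we use the OpenCV library to handle images, which we did not do in previous assignments. Although this week's lecture covered the basic idea of YOLO, this assignment uses code for YOLOv2. Don't worry: the main idea of the method is the same, and the only differences from the original YOLO that you will notice in this assignment are:

- the architecture contains shortcut connections (as in ResNet)

- an exponential function is used when predicting the width and height of bounding boxes

- the width and height of the anchor boxes are normalized by the grid-cell size rather than by the image size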
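The last two points can be illustrated with a small sketch. It assumes the parameterization used later in `decode_netout`, where a raw network output `t_w` becomes a width of `anchor_w * exp(t_w)` in grid-cell units. The function name `decode_size` and the chosen values of `t_w` are illustrative only.

```python
import math

def decode_size(t, anchor, grid):
    """Map a raw output t to a size expressed as a fraction of the image.

    anchor is given in grid-cell units, so anchor * exp(t) is also in
    grid cells; dividing by the number of cells converts it to image units.
    """
    return anchor * math.exp(t) / grid

GRID_W = 16          # cells per image row, as configured later in this notebook
anchor_w = 3.33843   # one anchor width from the ANCHORS list, in grid cells

for t_w in (-1.0, 0.0, 1.0):
    w = decode_size(t_w, anchor_w, GRID_W)
    print("t_w=%+.1f -> width = %.3f of the image" % (t_w, w))
```

The exponential keeps the predicted size positive for any raw output, and a raw output of 0 reproduces the anchor size exactly.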

# tensorflow as base library for neural networks

import tensorflow as tf

# keras as a layer on top of tensorflow

from keras.models import Sequential, Model

from keras.layers import Reshape, Activation, Conv2D, Input, MaxPooling2D, BatchNormalization, Flatten, Dense, Lambda

from keras.layers.advanced_activations import LeakyReLU

from keras.callbacks import EarlyStopping, ModelCheckpoint, TensorBoard

from keras.optimizers import SGD, Adam, RMSprop

from keras.layers.merge import concatenate

import keras.backend as K

# h5py is needed to store and load Keras models

import h5py

# matplotlib is needed to plot bounding boxes

import matplotlib.pyplot as plt

import matplotlib.patches as patches

%matplotlib inline

import imgaug as ia # library for image augmentation, used in the repo code

from imgaug import augmenters as iaa

from tqdm import tqdm_notebook

import tqdm

import numpy as np

import json

import pickle

import os, cv2, time, random

import xml.etree.ElementTree as ET # needed to read bounding boxes in xml format

import ntpath

# notebook-specific libraries, provided by the repo code

from preprocessing import parse_annotation, BatchGenerator, normalize

import utils

workdir = './'

Check that the workdir folder has the following structure:

train                        # directory of training data
    images                   # training images
    xml                      # bounding boxes of right and left lungs
    xml_right                # bounding boxes of only right lung
test_images                  # test images
weights_right_lung.h5        # weights of pre-trained YOLO (only right lung detection)
pretrained_yolo_weights.h5   # weights of pre-trained YOLO (VOC dataset)

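Task 1: Understanding the data and the YOLO network

In this section you will:

- visualize chest X-ray images from the training set

- read bounding-box data from the right-lung annotations

- draw the bounding boxes

After that, you will:

- run a YOLO network, pre-trained to detect the right lung, on test images

- decode the output tensor to extract the bounding-box information

- visualize the predicted bounding boxes

Loading chest X-ray data from the training set

To get to know the data you will work with, first load an example chest X-ray from the training set and visualize it below.

- Note: you can run the cell below several times to see the variability in the data! As you will notice, all images are 512x512 pixels.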

# define directory of training images

train_dir_images = os.path.join(workdir, 'train', 'images')

# pick random training image

case = os.path.join(train_dir_images, random.choice(os.listdir(train_dir_images)))

case_filename = os.path.splitext(ntpath.basename(case))[0] + '.xml'

# open image with opencv and visualize it

image = cv2.imread(case)

plt.figure(figsize=(10,10))

plt.imshow(image)

plt.show()

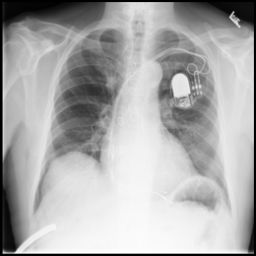

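Q: You have seen variability across the training samples. What could be causing it?

A: Several kinds of variability are present. First, the size of the torso differs between patients, which may depend on age (child/adult), sex and general growth. In addition, the image intensity varies, and not every image gives a clear view of the region of interest, such as the lungs. This can depend on the patient's body-fat percentage, since fat can cover the region of interest and make it harder to see through. Finally, implants such as pacemakers make some images look clearly different from those of "normal" patients.

All images in the training data come with an annotation of the bounding box around the right lung. Each annotation is a separate XML file whose coordinates define the edges of the box (xmin, xmax, ymin, ymax).

Below you will find the code that defines the class BoundingBox, which stores the bounding-box information in a slightly different format: it uses (x, y) as the coordinates of the center of the box and (h, w) as its height and width. It also defines other variables related to class, score and label, which are explained later in this notebook.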

class BoundingBox:
    def __init__(self, x, y, w, h, c = None, classes = None):
        self.x = x
        self.y = y
        self.w = w
        self.h = h
        self.c = c
        self.classes = classes
        self.label = -1
        self.score = -1

    def get_label(self):
        if self.label == -1:
            self.label = np.argmax(self.classes)
        return self.label

    def get_score(self):
        if self.score == -1:
            self.score = self.classes[self.get_label()]
        return self.score

Now we define a function to read annotations in XML format and convert them into parameters to pass to the BoundingBox class. Most of the code of this function is provided, but you will have to implement the last part of it (replace the None values).

NOTE: as for many variables in the YOLO framework, the (x, y, h, w) values used in the BoundingBox class are normalized by the image height and width, meaning that they have values in the range [0.0, 1.0]. Take this into account in your implementation!

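As a worked example of this conversion, the sketch below turns the right-lung box from the XML example shown earlier (xmin=64, ymin=62, xmax=256, ymax=490 in a 512x512 image) into normalized center format. The function name `corners_to_center` is an assumption made for illustration; it is one possible way to write the conversion, not the only one.

```python
def corners_to_center(xmin, ymin, xmax, ymax, width, height):
    """Convert pixel corner coordinates to normalized (x, y, w, h)."""
    x = (xmin + xmax) / 2.0 / width    # box center, fraction of image width
    y = (ymin + ymax) / 2.0 / height   # box center, fraction of image height
    w = (xmax - xmin) / float(width)   # box width, fraction of image width
    h = (ymax - ymin) / float(height)  # box height, fraction of image height
    return x, y, w, h

print(corners_to_center(64, 62, 256, 490, 512, 512))
# -> (0.3125, 0.5390625, 0.375, 0.8359375)
```

Dividing by the width and height read from the annotation, rather than by a fixed number, keeps the conversion valid for images of any size.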

def xml2bbox(xml_file):
    # parse structure of XML file
    tree = ET.parse(xml_file)
    for elem in tree.iter():
        if 'width' in elem.tag:
            width = int(elem.text)  # image width
        if 'height' in elem.tag:
            height = int(elem.text)  # image height
        for attr in list(elem):
            if 'bndbox' in attr.tag:
                for dim in list(attr):
                    if 'xmin' in dim.tag:
                        xmin = int(round(float(dim.text)))  # xmin bounding box
                    if 'ymin' in dim.tag:
                        ymin = int(round(float(dim.text)))  # ymin bounding box
                    if 'xmax' in dim.tag:
                        xmax = int(round(float(dim.text)))  # xmax bounding box
                    if 'ymax' in dim.tag:
                        ymax = int(round(float(dim.text)))  # ymax bounding box
    ### Replace None with your code ###
    # normalize by the image size read from the XML file (512x512 in this dataset)
    x = ((xmin + xmax) / 2.0) / width
    y = ((ymin + ymax) / 2.0) / height
    w = (xmax - xmin) / float(width)
    h = (ymax - ymin) / float(height)
    print(x, y, w, h)
    return BoundingBox(x, y, w, h)

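Now that the BoundingBox class and the xml2bbox function are defined, we can get the bounding box corresponding to the XML annotation of the selected training image: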

anno = os.path.join(workdir, 'train', 'xml_right', case_filename)

bbox = xml2bbox(anno)

(0.291015625, 0.509765625, 0.35546875, 0.61328125)

Next, to make drawing bounding boxes more convenient, we define a plotting function based on matplotlib. We will use the Rectangle class. The function below takes a list of BoundingBox objects and returns a list of the corresponding Rectangle objects.

def get_matplotlib_boxes(boxes, img_shape):
    plt_boxes = []
    for box in boxes:
        # convert normalized center format to pixel corner coordinates
        xmin = int((box.x - box.w/2) * img_shape[1])
        xmax = int((box.x + box.w/2) * img_shape[1])
        ymin = int((box.y - box.h/2) * img_shape[0])
        ymax = int((box.y + box.h/2) * img_shape[0])
        plt_boxes.append(patches.Rectangle((xmin, ymin), xmax-xmin, ymax-ymin, fill=False, color='#00FF00', linewidth=2))
    return plt_boxes

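We can now use the code written so far to read, process and visualize the bounding box of the right lung on top of the chest X-ray image shown above: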

# get bounding boxes in matplotlib format

plt_boxes = get_matplotlib_boxes([bbox],image.shape)

# visualize image and bounding box

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(111, aspect='equal')

plt.imshow(image.squeeze(), cmap='gray')

for plt_box in plt_boxes:
    ax.add_patch(plt_box)

plt.show()

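Q: Why do we talk about the right lung when the bounding box is actually on the left side of the image?

A: Left and right are defined from the patient's point of view. What we see in the image is the bounding box of the patient's right lung, although from the viewer's perspective it lies on the left side of the image. The correct description is therefore that the bounding box is placed on the right lung, as seen from the patient.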

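Loading the pre-trained YOLO model to run on the test set

We have trained a YOLO network to detect the right lung in chest X-ray images. The weights of the pre-trained network are available in workdir. We will now use this weights file for testing.

YOLO parameters

The YOLO method has several parameters that must be configured in order to detect objects. All of them are presented and explained in the YOLO and YOLO9000 (YOLOv2) papers. In particular, YOLO divides the input image into a grid of cells, whose size must be defined by the user.

The list of parameters and their short description is the following: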

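| Variable | Description |
|---|---|
| LABELS | List of labels in the training set (RL = right lung, LL = left lung) |
| IMAGE_H, IMAGE_W | Height and width of the input image |
| GRID_H, GRID_W | Number of grid cells along each image dimension. The grid-cell size is (IMAGE_H/GRID_H, IMAGE_W/GRID_W) |
| BOX | Number of boxes that a single grid cell can predict (i.e. the number of anchors) |
| CLASS | Number of classes |
| CLASS_WEIGHTS | Array to define the importance of each class |
| OBJ_THRESHOLD | Threshold used in final object detection |
| NMS_THRESHOLD | Threshold used in non-maximal suppression |
| ANCHORS | List of anchor sizes, expressed as multiples of the grid-cell size and read in pairs: (ANCHORS[0], ANCHORS[1]) are the width and height of the first anchor, (ANCHORS[2], ANCHORS[3]) those of the second anchor, and so on. Unlike in the original YOLO paper, the anchors here are normalized by the grid-cell size rather than by the image size, so w >= 1 and h >= 1 are possible |
| NO_OBJECT_SCALE | Part of the loss function (lambda noobj) |
| OBJECT_SCALE | Part of the loss function (lambda obj) |
| COORD_SCALE | Part of the loss function |
| CLASS_SCALE | Part of the loss function |
| BATCH_SIZE | Mini-batch size |
| WARM_UP_BATCHES | Number of mini-batches used at the beginning of training to do warm-up (a different loss is applied) |
| TRUE_BOX_BUFFER | Used to create the list of ground-truth boxes, which is needed for the Keras implementation |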

All these parameters are set in the next cell.

# set the parameters for the detection of the right lung

LABELS = ['RL']

IMAGE_H, IMAGE_W = 512, 512

GRID_H, GRID_W = 16 , 16

BOX = 5

CLASS = len(LABELS)

CLASS_WEIGHTS = np.ones(CLASS, dtype='float32')

OBJ_THRESHOLD = 0.3

NMS_THRESHOLD = 0.3

ANCHORS = [0.57273, 0.677385, 1.87446, 2.06253, 3.33843, 5.47434, 7.88282, 3.52778, 9.77052, 9.16828]

NO_OBJECT_SCALE = 1.0 # lambda noobj

OBJECT_SCALE = 5.0 # lambda obj

COORD_SCALE = 1.0 # don't touch this

CLASS_SCALE = 1.0 # don't touch this

BATCH_SIZE = 8

WARM_UP_BATCHES = 100

TRUE_BOX_BUFFER = 50
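To get a feel for these settings, the sketch below derives the grid-cell size in pixels, the anchor sizes in pixels, and the shape of the network output for one image. It only restates the values set above; the variable names `cell_h`, `cell_w`, `anchor_pairs` and `output_shape` are introduced here for illustration.

```python
IMAGE_H, IMAGE_W = 512, 512
GRID_H, GRID_W = 16, 16
BOX, CLASS = 5, 1
ANCHORS = [0.57273, 0.677385, 1.87446, 2.06253, 3.33843, 5.47434,
           7.88282, 3.52778, 9.77052, 9.16828]

# Size of one grid cell in pixels.
cell_h, cell_w = IMAGE_H / GRID_H, IMAGE_W / GRID_W
print("grid cell: %.0f x %.0f pixels" % (cell_w, cell_h))

# ANCHORS is a flat list read in (width, height) pairs, in grid-cell units.
anchor_pairs = list(zip(ANCHORS[0::2], ANCHORS[1::2]))
for i, (aw, ah) in enumerate(anchor_pairs):
    print("anchor %d: %.1f x %.1f pixels" % (i, aw * cell_w, ah * cell_h))

# Each cell predicts BOX boxes, each with 4 coordinates, 1 confidence
# value and CLASS class scores.
output_shape = (GRID_H, GRID_W, BOX, 4 + 1 + CLASS)
print("output tensor shape per image:", output_shape)
```

With these defaults each cell covers 32x32 pixels, so an anchor such as (3.33843, 5.47434) corresponds to a box of roughly 107x175 pixels.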

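YOLO network architecture

We now define the architecture of the YOLO network as it comes with the code in the repo. It is very close to the one in the YOLO paper and includes some features from the YOLO9000 paper, such as a kind of skip connection (similar to those in ResNet).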

def space_to_depth_x2(x):
    # Re-arranges blocks of spatial data into depth.
    # Outputs a copy of the input tensor where the values from the height and width
    # are moved to depth dimensions
    return tf.space_to_depth(x, block_size=2)

input_image = Input(shape=(IMAGE_H, IMAGE_W, 3))
true_boxes = Input(shape=(1, 1, 1, TRUE_BOX_BUFFER , 4))

def YOLO_network(input_img,true_bxs,CLASS):
    # Layer 1
    x = Conv2D(32, (3,3), strides=(1,1), padding='same', name='conv_1', use_bias=False)(input_img)
    x = BatchNormalization(name='norm_1')(x)
    x = LeakyReLU(alpha=0.1)(x)
    x = MaxPooling2D(pool_size=(2, 2))(x)

    # Layer 2
    x = Conv2D(64, (3,3), strides=(1,1), padding='same', name='conv_2', use_bias=False)(x)
    x = BatchNormalization(name='norm_2')(x)
    x = LeakyReLU(alpha=0.1)(x)
    x = MaxPooling2D(pool_size=(2, 2))(x)

    # Layer 3
    x = Conv2D(128, (3,3), strides=(1,1), padding='same', name='conv_3', use_bias=False)(x)
    x = BatchNormalization(name='norm_3')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 4
    x = Conv2D(64, (1,1), strides=(1,1), padding='same', name='conv_4', use_bias=False)(x)
    x = BatchNormalization(name='norm_4')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 5
    x = Conv2D(128, (3,3), strides=(1,1), padding='same', name='conv_5', use_bias=False)(x)
    x = BatchNormalization(name='norm_5')(x)
    x = LeakyReLU(alpha=0.1)(x)
    x = MaxPooling2D(pool_size=(2, 2))(x)

    # Layer 6
    x = Conv2D(256, (3,3), strides=(1,1), padding='same', name='conv_6', use_bias=False)(x)
    x = BatchNormalization(name='norm_6')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 7
    x = Conv2D(128, (1,1), strides=(1,1), padding='same', name='conv_7', use_bias=False)(x)
    x = BatchNormalization(name='norm_7')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 8
    x = Conv2D(256, (3,3), strides=(1,1), padding='same', name='conv_8', use_bias=False, input_shape=(512,512,3))(x)
    x = BatchNormalization(name='norm_8')(x)
    x = LeakyReLU(alpha=0.1)(x)
    x = MaxPooling2D(pool_size=(2, 2))(x)

    # Layer 9
    x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_9', use_bias=False)(x)
    x = BatchNormalization(name='norm_9')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 10
    x = Conv2D(256, (1,1), strides=(1,1), padding='same', name='conv_10', use_bias=False)(x)
    x = BatchNormalization(name='norm_10')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 11
    x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_11', use_bias=False)(x)
    x = BatchNormalization(name='norm_11')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 12
    x = Conv2D(256, (1,1), strides=(1,1), padding='same', name='conv_12', use_bias=False)(x)
    x = BatchNormalization(name='norm_12')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 13
    x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_13', use_bias=False)(x)
    x = BatchNormalization(name='norm_13')(x)
    x = LeakyReLU(alpha=0.1)(x)

    skip_connection = x

    x = MaxPooling2D(pool_size=(2, 2))(x)

    # Layer 14
    x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_14', use_bias=False)(x)
    x = BatchNormalization(name='norm_14')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 15
    x = Conv2D(512, (1,1), strides=(1,1), padding='same', name='conv_15', use_bias=False)(x)
    x = BatchNormalization(name='norm_15')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 16
    x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_16', use_bias=False)(x)
    x = BatchNormalization(name='norm_16')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 17
    x = Conv2D(512, (1,1), strides=(1,1), padding='same', name='conv_17', use_bias=False)(x)
    x = BatchNormalization(name='norm_17')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 18
    x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_18', use_bias=False)(x)
    x = BatchNormalization(name='norm_18')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 19
    x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_19', use_bias=False)(x)
    x = BatchNormalization(name='norm_19')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 20
    x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_20', use_bias=False)(x)
    x = BatchNormalization(name='norm_20')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 21
    skip_connection = Conv2D(64, (1,1), strides=(1,1), padding='same', name='conv_21', use_bias=False)(skip_connection)
    skip_connection = BatchNormalization(name='norm_21')(skip_connection)
    skip_connection = LeakyReLU(alpha=0.1)(skip_connection)
    skip_connection = Lambda(space_to_depth_x2)(skip_connection)

    x = concatenate([skip_connection, x])

    # Layer 22
    x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_22', use_bias=False)(x)
    x = BatchNormalization(name='norm_22')(x)
    x = LeakyReLU(alpha=0.1)(x)

    # Layer 23
    x = Conv2D(BOX * (4 + 1 + CLASS), (1,1), strides=(1,1), padding='same', name='conv_23')(x)
    output = Reshape((GRID_H, GRID_W, BOX, 4 + 1 + CLASS))(x)

    # small hack to allow true_boxes to be registered when Keras build the model
    # for more information: https://github.com/fchollet/keras/issues/2790
    output = Lambda(lambda args: args[0])([output, true_bxs])

    model = Model([input_img, true_bxs], output)
    model.summary()
    return model

Now we can make an instance of the YOLO network by calling the function:

model = YOLO_network(input_image, true_boxes, CLASS)

____________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

====================================================================================================

input_1 (InputLayer) (None, 512, 512, 3) 0

____________________________________________________________________________________________________

conv_1 (Conv2D) (None, 512, 512, 32) 864 input_1[0][0]

____________________________________________________________________________________________________

norm_1 (BatchNormalization) (None, 512, 512, 32) 128 conv_1[0][0]

____________________________________________________________________________________________________

leaky_re_lu_23 (LeakyReLU) (None, 512, 512, 32) 0 norm_1[0][0]

____________________________________________________________________________________________________

max_pooling2d_6 (MaxPooling2D) (None, 256, 256, 32) 0 leaky_re_lu_23[0][0]

____________________________________________________________________________________________________

conv_2 (Conv2D) (None, 256, 256, 64) 18432 max_pooling2d_6[0][0]

____________________________________________________________________________________________________

norm_2 (BatchNormalization) (None, 256, 256, 64) 256 conv_2[0][0]

____________________________________________________________________________________________________

leaky_re_lu_24 (LeakyReLU) (None, 256, 256, 64) 0 norm_2[0][0]

____________________________________________________________________________________________________

max_pooling2d_7 (MaxPooling2D) (None, 128, 128, 64) 0 leaky_re_lu_24[0][0]

____________________________________________________________________________________________________

conv_3 (Conv2D) (None, 128, 128, 128) 73728 max_pooling2d_7[0][0]

____________________________________________________________________________________________________

norm_3 (BatchNormalization) (None, 128, 128, 128) 512 conv_3[0][0]

____________________________________________________________________________________________________

leaky_re_lu_25 (LeakyReLU) (None, 128, 128, 128) 0 norm_3[0][0]

____________________________________________________________________________________________________

conv_4 (Conv2D) (None, 128, 128, 64) 8192 leaky_re_lu_25[0][0]

____________________________________________________________________________________________________

norm_4 (BatchNormalization) (None, 128, 128, 64) 256 conv_4[0][0]

____________________________________________________________________________________________________

leaky_re_lu_26 (LeakyReLU) (None, 128, 128, 64) 0 norm_4[0][0]

____________________________________________________________________________________________________

conv_5 (Conv2D) (None, 128, 128, 128) 73728 leaky_re_lu_26[0][0]

____________________________________________________________________________________________________

norm_5 (BatchNormalization) (None, 128, 128, 128) 512 conv_5[0][0]

____________________________________________________________________________________________________

leaky_re_lu_27 (LeakyReLU) (None, 128, 128, 128) 0 norm_5[0][0]

____________________________________________________________________________________________________

max_pooling2d_8 (MaxPooling2D) (None, 64, 64, 128) 0 leaky_re_lu_27[0][0]

____________________________________________________________________________________________________

conv_6 (Conv2D) (None, 64, 64, 256) 294912 max_pooling2d_8[0][0]

____________________________________________________________________________________________________

norm_6 (BatchNormalization) (None, 64, 64, 256) 1024 conv_6[0][0]

____________________________________________________________________________________________________

leaky_re_lu_28 (LeakyReLU) (None, 64, 64, 256) 0 norm_6[0][0]

____________________________________________________________________________________________________

conv_7 (Conv2D) (None, 64, 64, 128) 32768 leaky_re_lu_28[0][0]

____________________________________________________________________________________________________

norm_7 (BatchNormalization) (None, 64, 64, 128) 512 conv_7[0][0]

____________________________________________________________________________________________________

leaky_re_lu_29 (LeakyReLU) (None, 64, 64, 128) 0 norm_7[0][0]

____________________________________________________________________________________________________

conv_8 (Conv2D) (None, 64, 64, 256) 294912 leaky_re_lu_29[0][0]

____________________________________________________________________________________________________

norm_8 (BatchNormalization) (None, 64, 64, 256) 1024 conv_8[0][0]

____________________________________________________________________________________________________

leaky_re_lu_30 (LeakyReLU) (None, 64, 64, 256) 0 norm_8[0][0]

____________________________________________________________________________________________________

max_pooling2d_9 (MaxPooling2D) (None, 32, 32, 256) 0 leaky_re_lu_30[0][0]

____________________________________________________________________________________________________

conv_9 (Conv2D) (None, 32, 32, 512) 1179648 max_pooling2d_9[0][0]

____________________________________________________________________________________________________

norm_9 (BatchNormalization) (None, 32, 32, 512) 2048 conv_9[0][0]

____________________________________________________________________________________________________

leaky_re_lu_31 (LeakyReLU) (None, 32, 32, 512) 0 norm_9[0][0]

____________________________________________________________________________________________________

conv_10 (Conv2D) (None, 32, 32, 256) 131072 leaky_re_lu_31[0][0]

____________________________________________________________________________________________________

norm_10 (BatchNormalization) (None, 32, 32, 256) 1024 conv_10[0][0]

____________________________________________________________________________________________________

leaky_re_lu_32 (LeakyReLU) (None, 32, 32, 256) 0 norm_10[0][0]

____________________________________________________________________________________________________

conv_11 (Conv2D) (None, 32, 32, 512) 1179648 leaky_re_lu_32[0][0]

____________________________________________________________________________________________________

norm_11 (BatchNormalization) (None, 32, 32, 512) 2048 conv_11[0][0]

____________________________________________________________________________________________________

leaky_re_lu_33 (LeakyReLU) (None, 32, 32, 512) 0 norm_11[0][0]

____________________________________________________________________________________________________

conv_12 (Conv2D) (None, 32, 32, 256) 131072 leaky_re_lu_33[0][0]

____________________________________________________________________________________________________

norm_12 (BatchNormalization) (None, 32, 32, 256) 1024 conv_12[0][0]

____________________________________________________________________________________________________

leaky_re_lu_34 (LeakyReLU) (None, 32, 32, 256) 0 norm_12[0][0]

____________________________________________________________________________________________________

conv_13 (Conv2D) (None, 32, 32, 512) 1179648 leaky_re_lu_34[0][0]

____________________________________________________________________________________________________

norm_13 (BatchNormalization) (None, 32, 32, 512) 2048 conv_13[0][0]

____________________________________________________________________________________________________

leaky_re_lu_35 (LeakyReLU) (None, 32, 32, 512) 0 norm_13[0][0]

____________________________________________________________________________________________________

max_pooling2d_10 (MaxPooling2D) (None, 16, 16, 512) 0 leaky_re_lu_35[0][0]

____________________________________________________________________________________________________

conv_14 (Conv2D) (None, 16, 16, 1024) 4718592 max_pooling2d_10[0][0]

____________________________________________________________________________________________________

norm_14 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_14[0][0]

____________________________________________________________________________________________________

leaky_re_lu_36 (LeakyReLU) (None, 16, 16, 1024) 0 norm_14[0][0]

____________________________________________________________________________________________________

conv_15 (Conv2D) (None, 16, 16, 512) 524288 leaky_re_lu_36[0][0]

____________________________________________________________________________________________________

norm_15 (BatchNormalization) (None, 16, 16, 512) 2048 conv_15[0][0]

____________________________________________________________________________________________________

leaky_re_lu_37 (LeakyReLU) (None, 16, 16, 512) 0 norm_15[0][0]

____________________________________________________________________________________________________

conv_16 (Conv2D) (None, 16, 16, 1024) 4718592 leaky_re_lu_37[0][0]

____________________________________________________________________________________________________

norm_16 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_16[0][0]

____________________________________________________________________________________________________

leaky_re_lu_38 (LeakyReLU) (None, 16, 16, 1024) 0 norm_16[0][0]

____________________________________________________________________________________________________

conv_17 (Conv2D) (None, 16, 16, 512) 524288 leaky_re_lu_38[0][0]

____________________________________________________________________________________________________

norm_17 (BatchNormalization) (None, 16, 16, 512) 2048 conv_17[0][0]

____________________________________________________________________________________________________

leaky_re_lu_39 (LeakyReLU) (None, 16, 16, 512) 0 norm_17[0][0]

____________________________________________________________________________________________________

conv_18 (Conv2D) (None, 16, 16, 1024) 4718592 leaky_re_lu_39[0][0]

____________________________________________________________________________________________________

norm_18 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_18[0][0]

____________________________________________________________________________________________________

leaky_re_lu_40 (LeakyReLU) (None, 16, 16, 1024) 0 norm_18[0][0]

____________________________________________________________________________________________________

conv_19 (Conv2D) (None, 16, 16, 1024) 9437184 leaky_re_lu_40[0][0]

____________________________________________________________________________________________________

norm_19 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_19[0][0]

____________________________________________________________________________________________________

conv_21 (Conv2D) (None, 32, 32, 64) 32768 leaky_re_lu_35[0][0]

____________________________________________________________________________________________________

leaky_re_lu_41 (LeakyReLU) (None, 16, 16, 1024) 0 norm_19[0][0]

____________________________________________________________________________________________________

norm_21 (BatchNormalization) (None, 32, 32, 64) 256 conv_21[0][0]

____________________________________________________________________________________________________

conv_20 (Conv2D) (None, 16, 16, 1024) 9437184 leaky_re_lu_41[0][0]

____________________________________________________________________________________________________

leaky_re_lu_43 (LeakyReLU) (None, 32, 32, 64) 0 norm_21[0][0]

____________________________________________________________________________________________________

norm_20 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_20[0][0]

____________________________________________________________________________________________________

lambda_3 (Lambda) (None, 16, 16, 256) 0 leaky_re_lu_43[0][0]

____________________________________________________________________________________________________

leaky_re_lu_42 (LeakyReLU) (None, 16, 16, 1024) 0 norm_20[0][0]

____________________________________________________________________________________________________

concatenate_2 (Concatenate) (None, 16, 16, 1280) 0 lambda_3[0][0]

leaky_re_lu_42[0][0]

____________________________________________________________________________________________________

conv_22 (Conv2D) (None, 16, 16, 1024) 11796480 concatenate_2[0][0]

____________________________________________________________________________________________________

norm_22 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_22[0][0]

____________________________________________________________________________________________________

leaky_re_lu_44 (LeakyReLU) (None, 16, 16, 1024) 0 norm_22[0][0]

____________________________________________________________________________________________________

conv_23 (Conv2D) (None, 16, 16, 30) 30750 leaky_re_lu_44[0][0]

____________________________________________________________________________________________________

reshape_2 (Reshape) (None, 16, 16, 5, 6) 0 conv_23[0][0]

____________________________________________________________________________________________________

input_2 (InputLayer) (None, 1, 1, 1, 50, 4 0

____________________________________________________________________________________________________

lambda_4 (Lambda) (None, 16, 16, 5, 6) 0 reshape_2[0][0]

input_2[0][0]

====================================================================================================

Total params: 50,578,686

Trainable params: 50,558,014

Non-trainable params: 20,672

____________________________________________________________________________________________________
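The summary above shows the skip branch going from (32, 32, 64) to (16, 16, 256) in the Lambda layer before it is concatenated with the (16, 16, 1024) main branch. The sketch below reproduces that shape bookkeeping with plain NumPy. The function `space_to_depth_np` is a stand-in written for illustration, under the assumption that each 2x2 spatial block is stacked along the channel axis; it is not the TensorFlow implementation.

```python
import numpy as np

def space_to_depth_np(x, block=2):
    """NumPy stand-in for space-to-depth on one (H, W, C) feature map."""
    h, w, c = x.shape
    x = x.reshape(h // block, block, w // block, block, c)
    x = x.transpose(0, 2, 1, 3, 4)   # gather the pixels of each block together
    return x.reshape(h // block, w // block, block * block * c)

skip = np.zeros((32, 32, 64), dtype=np.float32)    # shape after conv_21
main = np.zeros((16, 16, 1024), dtype=np.float32)  # shape after conv_20

skip_rearranged = space_to_depth_np(skip)
merged = np.concatenate([skip_rearranged, main], axis=-1)
print(skip_rearranged.shape)  # (16, 16, 256)
print(merged.shape)           # (16, 16, 1280)
```

Halving the spatial resolution this way keeps every value of the higher-resolution feature map, which is why the channel count grows by a factor of four.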

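Loading the pre-trained weights for right-lung detection

Running the cell below loads the weights that were already trained for right-lung detection.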

wt_path = os.path.join(workdir, 'weights_right_lung.h5')

model.load_weights(wt_path)

print("Weights loaded from disk")

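We can now run YOLO on one image from the test set and inspect the format of the output. Because the images were scaled to the range 0.0 to 1.0 during training, we apply the same normalization at test time.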

# define directory of test images

test_dir = os.path.join(workdir,'test_images')

# pick a random test file

test_img = os.path.join(test_dir, random.choice(os.listdir(test_dir)))

# process image

t_start = time.time()

print("Processing", test_img)

image = cv2.imread(test_img)

input_image = image / 255. # rescale intensity to [0, 1]

input_image = input_image[:,:,::-1]

img_shape = image.shape

input_image = np.expand_dims(input_image, 0)

# define variable needed to process input image

dummy_array = np.zeros((1,1,1,1,TRUE_BOX_BUFFER,4))

# get output from network

netout = model.predict([input_image, dummy_array])

print('processing one chest x-ray took {} seconds'.format(time.time() - t_start))

('Processing', './test_images/00014389_023.png')

processing one chest x-ray took 0.626572132111 seconds

Let's print the shape of the tensor netout and try to understand what kind of information it contains.

print(netout.shape)

(1, 16, 16, 5, 6)

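If you did not change the default YOLO parameters, you will see something like this:

(1, 16, 16, 5, 6)

These values represent:

(mini_batch_size, grid_h, grid_w, n_bbox, ???)

To understand the meaning of the fifth dimension (marked as ???), we ask you to do two things:

- read and try to understand the function decode_netout() below

- use what this notebook explains about object detection and YOLO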

def decode_netout(netout, obj_threshold, nms_threshold, anchors, nb_class):
    """
    Decode output tensor of YOLO network and return list of BoundingBox objects.
    """
    grid_h, grid_w, nb_box = netout.shape[:3]
    boxes = []

    # decode the output by the network
    netout[..., 4] = utils.sigmoid(netout[..., 4])
    netout[..., 5:] = netout[..., 4][..., np.newaxis] * utils.softmax(netout[..., 5:])
    netout[..., 5:] *= netout[..., 5:] > obj_threshold

    for row in range(grid_h):
        for col in range(grid_w):
            for b in range(nb_box):
                # elements from index 5 onwards are the class scores
                classes = netout[row,col,b,5:]
                if classes.any():
                    # first 4 elements are x, y, w, and h
                    x, y, w, h = netout[row,col,b,:4]
                    x = (col + utils.sigmoid(x)) / grid_w # center position, unit: image width
                    y = (row + utils.sigmoid(y)) / grid_h # center position, unit: image height
                    w = anchors[2 * b + 0] * np.exp(w) / grid_w # unit: image width
                    h = anchors[2 * b + 1] * np.exp(h) / grid_h # unit: image height
                    confidence = netout[row,col,b,4]
                    box = BoundingBox(x, y, w, h, confidence, classes)
                    boxes.append(box)

    # suppress non-maximal boxes
    for c in range(nb_class):
        sorted_indices = list(reversed(np.argsort([box.classes[c] for box in boxes])))
        for i in range(len(sorted_indices)):
            index_i = sorted_indices[i]
            if boxes[index_i].classes[c] == 0:
                continue
            else:
                for j in range(i+1, len(sorted_indices)):
                    index_j = sorted_indices[j]
                    if utils.bbox_iou(boxes[index_i], boxes[index_j]) >= nms_threshold:
                        boxes[index_j].classes[c] = 0

    # remove the boxes which are less likely than a obj_threshold
    boxes = [box for box in boxes if box.get_score() > obj_threshold]
    return boxes

Q: What are the six values along the fifth dimension of the variable netout?

A:

- value #1: x

- value #2: y

- value #3: w

- value #4: h

- value #5: confidence

- value #6: classes
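
To make the meaning of these six values concrete, the following minimal sketch decodes the raw values of a single grid cell using the same formulas as decode_netout(). It uses only the Python standard library; the function name decode_cell, the raw input values and the anchor size (4.0, 8.0) are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(v):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def decode_cell(raw, row, col, grid_h, grid_w, anchor_w, anchor_h):
    """Decode one raw prediction [tx, ty, tw, th, tc, class_logit] (single class)."""
    tx, ty, tw, th, tc = raw[:5]
    x = (col + sigmoid(tx)) / grid_w       # box center, as a fraction of image width
    y = (row + sigmoid(ty)) / grid_h       # box center, as a fraction of image height
    w = anchor_w * math.exp(tw) / grid_w   # box width, as a fraction of image width
    h = anchor_h * math.exp(th) / grid_h   # box height, as a fraction of image height
    confidence = sigmoid(tc)
    # With one class, the softmax over a single logit is 1,
    # so the class score equals the confidence.
    class_score = confidence * 1.0
    return x, y, w, h, confidence, class_score

# Hypothetical raw output of the cell at row 8, column 4 of a 16 x 16 grid
print(decode_cell([0.0, 0.0, 0.0, 0.0, 0.0, 0.0], 8, 4, 16, 16, 4.0, 8.0))
```

With all raw values at zero, the box center falls in the middle of the cell and the box has exactly the size of the anchor, which is a convenient sanity check.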

Now we can put all of this together and create a function that returns the predicted bounding boxes for a test image:

def predict_bounding_box(img, model, obj_threshold, nms_threshold, anchors, nb_class):
    """
    Predict bounding boxes for a given image.
    """
    image = cv2.imread(img)
    input_image = image / 255. # rescale intensity to [0, 1]
    input_image = input_image[:,:,::-1]
    img_shape = image.shape
    input_image = np.expand_dims(input_image, 0)

    # define variable needed to process input image
    dummy_array = np.zeros((1,1,1,1,TRUE_BOX_BUFFER,4))

    # get output from network
    netout = model.predict([input_image, dummy_array])

    return decode_netout(netout[0], obj_threshold, nms_threshold, anchors, nb_class)

We use the function we just defined to process our test image and visualize the result:

# define a threshold to apply to predictions

obj_threshold=0.2

boxes = predict_bounding_box(test_img, model, obj_threshold, NMS_THRESHOLD, ANCHORS, CLASS)

# get matplotlib bbox objects

plt_boxes = get_matplotlib_boxes(boxes, img_shape)

# visualize result

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(111, aspect='equal')

plt.imshow(cv2.imread(test_img), cmap='gray')

for plt_box in plt_boxes:
    ax.add_patch(plt_box)

plt.show()
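
The boxes returned by decode_netout() store the center position and size as fractions of the image width and height, so they have to be scaled to pixels before drawing. The sketch below shows one way to do this conversion. Whether get_matplotlib_boxes works exactly like this is not shown in this notebook, so the helper box_to_pixel_corners is a hypothetical illustration.

```python
def box_to_pixel_corners(x, y, w, h, img_w, img_h):
    """Convert a normalized center-format box to pixel corners (xmin, ymin, xmax, ymax)."""
    xmin = int(round((x - w / 2.0) * img_w))
    ymin = int(round((y - h / 2.0) * img_h))
    xmax = int(round((x + w / 2.0) * img_w))
    ymax = int(round((y + h / 2.0) * img_h))
    # clip to the image borders
    xmin, xmax = max(0, xmin), min(img_w, xmax)
    ymin, ymax = max(0, ymin), min(img_h, ymax)
    return xmin, ymin, xmax, ymax

# Normalized version of the right-lung box of the annotation example (512 x 512 image)
print(box_to_pixel_corners(0.3125, 0.5390625, 0.375, 0.8359375, 512, 512))
```

The example values reproduce the right-lung corners (64, 62, 256, 490) of the xml annotation shown earlier.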

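Q: For the first test we used the threshold obj_threshold = 0.2, which made the model produce a meaningful bounding box for the right lung. Try to see what happens if you choose a much lower threshold, for example obj_threshold = 0.0 or obj_threshold = 0.001, and explain what you see.

A: Lowering the threshold to 0.0 effectively means that there is no object threshold. A bounding box is displayed only if its score is greater than the configured obj_threshold, so with a threshold of 0.0 every candidate bounding box that survives non-maximum suppression is shown. Setting the threshold to 0.001 removes some boxes (only those with a score below 0.001), but the visualization is just as cluttered as with 0.0.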
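
The interplay between the score threshold and non-maximum suppression can be sketched with a simplified, single-class variant of the filtering done in decode_netout(). The box values and the NMS threshold of 0.3 below are hypothetical, and the helper names iou and filter_boxes are not part of the notebook.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_center, y_center, w, h, ...)."""
    ax1, ax2 = a[0] - a[2] / 2.0, a[0] + a[2] / 2.0
    ay1, ay2 = a[1] - a[3] / 2.0, a[1] + a[3] / 2.0
    bx1, bx2 = b[0] - b[2] / 2.0, b[0] + b[2] / 2.0
    by1, by2 = b[1] - b[3] / 2.0, b[1] + b[3] / 2.0
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def filter_boxes(boxes, obj_threshold, nms_threshold):
    """boxes: list of (x, y, w, h, score). Returns the kept boxes, highest score first."""
    candidates = sorted((b for b in boxes if b[4] > obj_threshold),
                        key=lambda b: b[4], reverse=True)
    kept = []
    for box in candidates:
        # keep a box only if it does not overlap too much with a better box
        if all(iou(box, k) < nms_threshold for k in kept):
            kept.append(box)
    return kept

boxes = [
    (0.30, 0.5, 0.3, 0.6, 0.90),  # confident right-lung box
    (0.31, 0.5, 0.3, 0.6, 0.40),  # near-duplicate, removed by NMS
    (0.70, 0.5, 0.3, 0.6, 0.05),  # low-score box elsewhere in the image
]
print(len(filter_boxes(boxes, 0.2, 0.3)))  # only the confident box remains
print(len(filter_boxes(boxes, 0.0, 0.3)))  # the low-score box is shown as well
```

Non-maximum suppression only removes boxes that overlap a better one, so with a threshold of 0.0 the low-score boxes in other parts of the image are still displayed.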

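It is now a good idea to check the performance of YOLO on the whole test set. Since the test set is too large to be processed this way, if the output of the cell above looks correct, run the cell below to process the first images of the test set and visualize the output (the cell processes three images; increase the range, for example to 50, to see more). Change obj_threshold to allow detection of bounding boxes with higher or lower confidence values.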

out_dir = './outputs'
if not os.path.exists(out_dir):
    os.makedirs(out_dir)

test_dir = os.path.join(workdir,'test_images')
file_list = os.listdir(test_dir)
obj_threshold=0.2

for t in range(3):
    test_img = os.path.join(test_dir,file_list[t])
    print('processing {}'.format(file_list[t]))
    boxes = predict_bounding_box(test_img, model, obj_threshold, NMS_THRESHOLD, ANCHORS, CLASS)

    # read the image once and use its own shape instead of a stale img_shape
    image = cv2.imread(test_img)

    # get matplotlib bbox objects
    plt_boxes = get_matplotlib_boxes(boxes, image.shape)

    # visualize results
    fig1 = plt.figure(figsize=(10, 10))
    ax1 = fig1.add_subplot(111, aspect='equal')
    plt.imshow(image, cmap='gray')
    for plt_box in plt_boxes:
        ax1.add_patch(plt_box)
    plt.show()

processing 00028774_012.png

processing 00017038_000.png

processing 00005252_000.png

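Task 2. Retrain the YOLO model to detect both lungs!

Now that we are familiar with the data, the network architecture and the output of its predictions, we can start retraining YOLO to detect both lungs.

Initialize the training set with BatchGenerator

Before training the model, we initialize a BatchGenerator, which samples data from the training and validation sets during training, similar to what was done in previous assignments. In this case we use the class BatchGenerator defined in the GitHub repository. This class needs two input objects:

- all_imgs: a list of images

- generator_config: a dictionary of configuration parameters

We first define generator_config in the next cell (we reuse all the variables used before, appending the suffix "2" where needed to indicate that this time they refer to two lungs):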

# define configuration parameters for batch generator

LABELS2 = ['RL','LL'] # RL = right lung, LL = left lung

CLASS2 = len(LABELS2)

CLASS_WEIGHTS2 = np.ones(CLASS2, dtype='float32')

generator_config = {

'IMAGE_H' : IMAGE_H,

'IMAGE_W' : IMAGE_W,

'GRID_H' : GRID_H,

'GRID_W' : GRID_W,

'BOX' : BOX,

'LABELS' : LABELS2,

'CLASS' : len(LABELS2),

'ANCHORS' : ANCHORS,

'BATCH_SIZE' : BATCH_SIZE,

'TRUE_BOX_BUFFER' : 50,

}

input_image_2 = Input(shape=(IMAGE_H, IMAGE_W, 3))

true_boxes_2 = Input(shape=(1, 1, 1, TRUE_BOX_BUFFER , 4))

model_2 = YOLO_network(input_image_2, true_boxes_2, CLASS2)

____________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

====================================================================================================

input_3 (InputLayer) (None, 512, 512, 3) 0

____________________________________________________________________________________________________

conv_1 (Conv2D) (None, 512, 512, 32) 864 input_3[0][0]

____________________________________________________________________________________________________

norm_1 (BatchNormalization) (None, 512, 512, 32) 128 conv_1[0][0]

____________________________________________________________________________________________________

leaky_re_lu_45 (LeakyReLU) (None, 512, 512, 32) 0 norm_1[0][0]

____________________________________________________________________________________________________

max_pooling2d_11 (MaxPooling2D) (None, 256, 256, 32) 0 leaky_re_lu_45[0][0]

____________________________________________________________________________________________________

conv_2 (Conv2D) (None, 256, 256, 64) 18432 max_pooling2d_11[0][0]

____________________________________________________________________________________________________

norm_2 (BatchNormalization) (None, 256, 256, 64) 256 conv_2[0][0]

____________________________________________________________________________________________________

leaky_re_lu_46 (LeakyReLU) (None, 256, 256, 64) 0 norm_2[0][0]

____________________________________________________________________________________________________

max_pooling2d_12 (MaxPooling2D) (None, 128, 128, 64) 0 leaky_re_lu_46[0][0]

____________________________________________________________________________________________________

conv_3 (Conv2D) (None, 128, 128, 128) 73728 max_pooling2d_12[0][0]

____________________________________________________________________________________________________

norm_3 (BatchNormalization) (None, 128, 128, 128) 512 conv_3[0][0]

____________________________________________________________________________________________________

leaky_re_lu_47 (LeakyReLU) (None, 128, 128, 128) 0 norm_3[0][0]

____________________________________________________________________________________________________

conv_4 (Conv2D) (None, 128, 128, 64) 8192 leaky_re_lu_47[0][0]

____________________________________________________________________________________________________

norm_4 (BatchNormalization) (None, 128, 128, 64) 256 conv_4[0][0]

____________________________________________________________________________________________________

leaky_re_lu_48 (LeakyReLU) (None, 128, 128, 64) 0 norm_4[0][0]

____________________________________________________________________________________________________

conv_5 (Conv2D) (None, 128, 128, 128) 73728 leaky_re_lu_48[0][0]

____________________________________________________________________________________________________

norm_5 (BatchNormalization) (None, 128, 128, 128) 512 conv_5[0][0]

____________________________________________________________________________________________________

leaky_re_lu_49 (LeakyReLU) (None, 128, 128, 128) 0 norm_5[0][0]

____________________________________________________________________________________________________

max_pooling2d_13 (MaxPooling2D) (None, 64, 64, 128) 0 leaky_re_lu_49[0][0]

____________________________________________________________________________________________________

conv_6 (Conv2D) (None, 64, 64, 256) 294912 max_pooling2d_13[0][0]

____________________________________________________________________________________________________

norm_6 (BatchNormalization) (None, 64, 64, 256) 1024 conv_6[0][0]

____________________________________________________________________________________________________

leaky_re_lu_50 (LeakyReLU) (None, 64, 64, 256) 0 norm_6[0][0]

____________________________________________________________________________________________________

conv_7 (Conv2D) (None, 64, 64, 128) 32768 leaky_re_lu_50[0][0]

____________________________________________________________________________________________________

norm_7 (BatchNormalization) (None, 64, 64, 128) 512 conv_7[0][0]

____________________________________________________________________________________________________

leaky_re_lu_51 (LeakyReLU) (None, 64, 64, 128) 0 norm_7[0][0]

____________________________________________________________________________________________________

conv_8 (Conv2D) (None, 64, 64, 256) 294912 leaky_re_lu_51[0][0]

____________________________________________________________________________________________________

norm_8 (BatchNormalization) (None, 64, 64, 256) 1024 conv_8[0][0]

____________________________________________________________________________________________________

leaky_re_lu_52 (LeakyReLU) (None, 64, 64, 256) 0 norm_8[0][0]

____________________________________________________________________________________________________

max_pooling2d_14 (MaxPooling2D) (None, 32, 32, 256) 0 leaky_re_lu_52[0][0]

____________________________________________________________________________________________________

conv_9 (Conv2D) (None, 32, 32, 512) 1179648 max_pooling2d_14[0][0]

____________________________________________________________________________________________________

norm_9 (BatchNormalization) (None, 32, 32, 512) 2048 conv_9[0][0]

____________________________________________________________________________________________________

leaky_re_lu_53 (LeakyReLU) (None, 32, 32, 512) 0 norm_9[0][0]

____________________________________________________________________________________________________

conv_10 (Conv2D) (None, 32, 32, 256) 131072 leaky_re_lu_53[0][0]

____________________________________________________________________________________________________

norm_10 (BatchNormalization) (None, 32, 32, 256) 1024 conv_10[0][0]

____________________________________________________________________________________________________

leaky_re_lu_54 (LeakyReLU) (None, 32, 32, 256) 0 norm_10[0][0]

____________________________________________________________________________________________________

conv_11 (Conv2D) (None, 32, 32, 512) 1179648 leaky_re_lu_54[0][0]

____________________________________________________________________________________________________

norm_11 (BatchNormalization) (None, 32, 32, 512) 2048 conv_11[0][0]

____________________________________________________________________________________________________

leaky_re_lu_55 (LeakyReLU) (None, 32, 32, 512) 0 norm_11[0][0]

____________________________________________________________________________________________________

conv_12 (Conv2D) (None, 32, 32, 256) 131072 leaky_re_lu_55[0][0]

____________________________________________________________________________________________________

norm_12 (BatchNormalization) (None, 32, 32, 256) 1024 conv_12[0][0]

____________________________________________________________________________________________________

leaky_re_lu_56 (LeakyReLU) (None, 32, 32, 256) 0 norm_12[0][0]

____________________________________________________________________________________________________

conv_13 (Conv2D) (None, 32, 32, 512) 1179648 leaky_re_lu_56[0][0]

____________________________________________________________________________________________________

norm_13 (BatchNormalization) (None, 32, 32, 512) 2048 conv_13[0][0]

____________________________________________________________________________________________________

leaky_re_lu_57 (LeakyReLU) (None, 32, 32, 512) 0 norm_13[0][0]

____________________________________________________________________________________________________

max_pooling2d_15 (MaxPooling2D) (None, 16, 16, 512) 0 leaky_re_lu_57[0][0]

____________________________________________________________________________________________________

conv_14 (Conv2D) (None, 16, 16, 1024) 4718592 max_pooling2d_15[0][0]

____________________________________________________________________________________________________

norm_14 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_14[0][0]

____________________________________________________________________________________________________

leaky_re_lu_58 (LeakyReLU) (None, 16, 16, 1024) 0 norm_14[0][0]

____________________________________________________________________________________________________

conv_15 (Conv2D) (None, 16, 16, 512) 524288 leaky_re_lu_58[0][0]

____________________________________________________________________________________________________

norm_15 (BatchNormalization) (None, 16, 16, 512) 2048 conv_15[0][0]

____________________________________________________________________________________________________

leaky_re_lu_59 (LeakyReLU) (None, 16, 16, 512) 0 norm_15[0][0]

____________________________________________________________________________________________________

conv_16 (Conv2D) (None, 16, 16, 1024) 4718592 leaky_re_lu_59[0][0]

____________________________________________________________________________________________________

norm_16 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_16[0][0]

____________________________________________________________________________________________________

leaky_re_lu_60 (LeakyReLU) (None, 16, 16, 1024) 0 norm_16[0][0]

____________________________________________________________________________________________________

conv_17 (Conv2D) (None, 16, 16, 512) 524288 leaky_re_lu_60[0][0]

____________________________________________________________________________________________________

norm_17 (BatchNormalization) (None, 16, 16, 512) 2048 conv_17[0][0]

____________________________________________________________________________________________________

leaky_re_lu_61 (LeakyReLU) (None, 16, 16, 512) 0 norm_17[0][0]

____________________________________________________________________________________________________

conv_18 (Conv2D) (None, 16, 16, 1024) 4718592 leaky_re_lu_61[0][0]

____________________________________________________________________________________________________

norm_18 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_18[0][0]

____________________________________________________________________________________________________

leaky_re_lu_62 (LeakyReLU) (None, 16, 16, 1024) 0 norm_18[0][0]

____________________________________________________________________________________________________

conv_19 (Conv2D) (None, 16, 16, 1024) 9437184 leaky_re_lu_62[0][0]

____________________________________________________________________________________________________

norm_19 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_19[0][0]

____________________________________________________________________________________________________

conv_21 (Conv2D) (None, 32, 32, 64) 32768 leaky_re_lu_57[0][0]

____________________________________________________________________________________________________

leaky_re_lu_63 (LeakyReLU) (None, 16, 16, 1024) 0 norm_19[0][0]

____________________________________________________________________________________________________

norm_21 (BatchNormalization) (None, 32, 32, 64) 256 conv_21[0][0]

____________________________________________________________________________________________________

conv_20 (Conv2D) (None, 16, 16, 1024) 9437184 leaky_re_lu_63[0][0]

____________________________________________________________________________________________________

leaky_re_lu_65 (LeakyReLU) (None, 32, 32, 64) 0 norm_21[0][0]

____________________________________________________________________________________________________

norm_20 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_20[0][0]

____________________________________________________________________________________________________

lambda_5 (Lambda) (None, 16, 16, 256) 0 leaky_re_lu_65[0][0]

____________________________________________________________________________________________________

leaky_re_lu_64 (LeakyReLU) (None, 16, 16, 1024) 0 norm_20[0][0]

____________________________________________________________________________________________________

concatenate_3 (Concatenate) (None, 16, 16, 1280) 0 lambda_5[0][0]

leaky_re_lu_64[0][0]

____________________________________________________________________________________________________

conv_22 (Conv2D) (None, 16, 16, 1024) 11796480 concatenate_3[0][0]

____________________________________________________________________________________________________

norm_22 (BatchNormalization) (None, 16, 16, 1024) 4096 conv_22[0][0]

____________________________________________________________________________________________________

leaky_re_lu_66 (LeakyReLU) (None, 16, 16, 1024) 0 norm_22[0][0]

____________________________________________________________________________________________________

conv_23 (Conv2D) (None, 16, 16, 35) 35875 leaky_re_lu_66[0][0]

____________________________________________________________________________________________________

reshape_3 (Reshape) (None, 16, 16, 5, 7) 0 conv_23[0][0]

____________________________________________________________________________________________________

input_4 (InputLayer) (None, 1, 1, 1, 50, 4 0

____________________________________________________________________________________________________

lambda_6 (Lambda) (None, 16, 16, 5, 7) 0 reshape_3[0][0]

input_4[0][0]

====================================================================================================

Total params: 50,583,811

Trainable params: 50,563,139

Non-trainable params: 20,672

____________________________________________________________________________________________________
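
The last rows of the summary can be cross-checked by hand. The sketch below recomputes the shape of the output tensor and the number of filters and parameters of the final convolution, assuming that this layer is a 1x1 convolution with bias on 1024 input channels and that the five max-pooling layers each halve the resolution, which is consistent with the summary above. The function names are hypothetical.

```python
def yolo_output_layout(image_h, image_w, n_box, n_class, n_pool=5):
    """Return the per-image output shape and the number of filters of the last convolution."""
    stride = 2 ** n_pool                      # each max-pooling layer halves the resolution
    grid_h, grid_w = image_h // stride, image_w // stride
    values_per_box = 4 + 1 + n_class          # x, y, w, h + confidence + class scores
    n_filters = n_box * values_per_box
    return (grid_h, grid_w, n_box, values_per_box), n_filters

def conv1x1_params(in_channels, n_filters):
    """Number of weights and biases of a 1x1 convolution."""
    return in_channels * n_filters + n_filters

shape, n_filters = yolo_output_layout(512, 512, n_box=5, n_class=2)
print(shape, n_filters, conv1x1_params(1024, n_filters))
```

For two classes this gives a (16, 16, 5, 7) output, 35 filters and 35875 parameters, matching the conv_23 and reshape_3 rows; with one class the last dimension is 6, as in the right-lung model.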

Here we define the list of images all_imgs by reading all training images and the corresponding annotations. This may take a while, because we have 10,000 training images!

# extract list of images and corresponding annotations

image_path = os.path.join(workdir, 'train', 'images/')

annot_path = os.path.join(workdir, 'train', 'xml/')

all_imgs, seen_labels = parse_annotation(annot_path, image_path)
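
To give an idea of what reading one annotation involves, here is a minimal sketch that parses a single xml file in the format described earlier, using the standard library. It is not the implementation of parse_annotation from the repository: the function parse_one_annotation and the layout of the returned dictionary are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Shortened, well-formed version of the annotation of image 00000004_000
SAMPLE_XML = """<annotation verified="no">
  <filename>00000004_000</filename>
  <size><width>512</width><height>512</height><depth>1</depth></size>
  <object>
    <name>RL</name>
    <bndbox><xmin>64</xmin><ymin>62</ymin><xmax>256</xmax><ymax>490</ymax></bndbox>
  </object>
  <object>
    <name>LL</name>
    <bndbox><xmin>298</xmin><ymin>78</ymin><xmax>450</xmax><ymax>502</ymax></bndbox>
  </object>
</annotation>"""

def parse_one_annotation(xml_text):
    """Extract image size and labelled bounding boxes from one annotation."""
    root = ET.fromstring(xml_text)
    size = root.find('size')
    record = {
        'filename': root.findtext('filename'),
        'width': int(size.findtext('width')),
        'height': int(size.findtext('height')),
        'object': [],
    }
    for obj in root.iter('object'):
        bndbox = obj.find('bndbox')
        box = {'name': obj.findtext('name')}
        for key in ('xmin', 'ymin', 'xmax', 'ymax'):
            box[key] = int(bndbox.findtext(key))
        record['object'].append(box)
    return record

print(parse_one_annotation(SAMPLE_XML))
```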

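Initialize a BatchGenerator for the training and validation sets. To do this, we have to define the percentage of data we want to use for training and for validation.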

# define percentage of data to use for training

training_data_percentage = 0.8 # set a number between 0 and 1

# define number of training images

train_valid_split = int(training_data_percentage * len(all_imgs))

# initialize training and validation batch generators

train_batch = BatchGenerator(all_imgs[:train_valid_split], generator_config, norm=utils.normalize)

valid_batch = BatchGenerator(all_imgs[train_valid_split:], generator_config, norm=utils.normalize)
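
The split above takes the first 80% of all_imgs for training and the rest for validation, in the order in which the annotations were read. The sketch below reproduces this on a plain list. The optional seeded shuffle is an addition that the notebook does not use, shown only as something one might consider when the file order is not random; the function name is hypothetical.

```python
import random

def split_train_valid(items, train_fraction, seed=None):
    """Split a list into a training part and a validation part."""
    items = list(items)
    if seed is not None:
        # optional: shuffle reproducibly before splitting (not done in the notebook)
        random.Random(seed).shuffle(items)
    n_train = int(train_fraction * len(items))
    return items[:n_train], items[n_train:]

train_items, valid_items = split_train_valid(range(10000), 0.8)
print(len(train_items), len(valid_items))
```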

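Loss function

We have learned that the YOLO network has a loss function made of several terms. Here we report the formula of the loss function together with the implementation provided with the code in the repo. As you will see, it is a fairly long and complex piece of code. We show the formula and the code here for completeness; you do not have to worry too much about them now, just use them!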

$$
\begin{aligned}
L &= \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} L_{ij}^{\text{obj}} \left[ \left( x_i - \hat{x}_i \right)^2 + \left( y_i - \hat{y}_i \right)^2 \right] \\
&+ \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} L_{ij}^{\text{obj}} \left[ \left( \sqrt{w_i} - \sqrt{\hat{w}_i} \right)^2 + \left( \sqrt{h_i} - \sqrt{\hat{h}_i} \right)^2 \right] \\
&+ \sum_{i=0}^{S^2} \sum_{j=0}^{B} L_{ij}^{\text{obj}} \left( C_i - \hat{C}_i \right)^2 \\
&+ \lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} L_{ij}^{\text{noobj}} \left( C_i - \hat{C}_i \right)^2 \\
&+ \sum_{i=0}^{S^2} L_{i}^{\text{obj}} \sum_{c \in \text{classes}} \left( p_i(c) - \hat{p}_i(c) \right)^2
\end{aligned}
$$

def loss(y_true, y_pred):
    """
    A custom loss is defined for YOLO, which is not implemented in Keras.
    """
    mask_shape = tf.shape(y_true)[:4]

    cell_x = tf.to_float(tf.reshape(tf.tile(tf.range(GRID_W), [GRID_H]), (1, GRID_H, GRID_W, 1, 1)))
    cell_y = tf.transpose(cell_x, (0,2,1,3,4))

    cell_grid = tf.tile(tf.concat([cell_x,cell_y], -1), [BATCH_SIZE, 1, 1, BOX, 1])

    coord_mask = tf.zeros(mask_shape)
    conf_mask = tf.zeros(mask_shape)
    class_mask = tf.zeros(mask_shape)

    seen = tf.Variable(0.)
    total_recall = tf.Variable(0.)

    """
    Adjust prediction
    """
    ### adjust x and y
    pred_box_xy = tf.sigmoid(y_pred[..., :2]) + cell_grid

    ### adjust w and h
    pred_box_wh = tf.exp(y_pred[..., 2:4]) * np.reshape(ANCHORS, [1,1,1,BOX,2])

    ### adjust confidence
    pred_box_conf = tf.sigmoid(y_pred[..., 4])

    ### adjust class probabilities
    pred_box_class = y_pred[..., 5:]

    """
    Adjust ground truth
    """
    ### adjust x and y
    true_box_xy = y_true[..., 0:2] # relative position to the containing cell

    ### adjust w and h
    true_box_wh = y_true[..., 2:4] # number of cells across, horizontally and vertically

    ### adjust confidence
    true_wh_half = true_box_wh / 2.
    true_mins = true_box_xy - true_wh_half
    true_maxes = true_box_xy + true_wh_half

    pred_wh_half = pred_box_wh / 2.
    pred_mins = pred_box_xy - pred_wh_half
    pred_maxes = pred_box_xy + pred_wh_half

    intersect_mins = tf.maximum(pred_mins, true_mins)
    intersect_maxes = tf.minimum(pred_maxes, true_maxes)
    intersect_wh = tf.maximum(intersect_maxes - intersect_mins, 0.)
    intersect_areas = intersect_wh[..., 0] * intersect_wh[..., 1]

    true_areas = true_box_wh[..., 0] * true_box_wh[..., 1]
    pred_areas = pred_box_wh[..., 0] * pred_box_wh[..., 1]

    union_areas = pred_areas + true_areas - intersect_areas
    iou_scores = tf.truediv(intersect_areas, union_areas)

    true_box_conf = iou_scores * y_true[..., 4]

    ### adjust class probabilities
    true_box_class = tf.argmax(y_true[..., 5:], -1)

    """
    Determine the masks
    """
    ### coordinate mask: simply the position of the ground truth boxes (the predictors)
    coord_mask = tf.expand_dims(y_true[..., 4], axis=-1) * COORD_SCALE

    ### confidence mask: penalize predictors + penalize boxes with low IOU
    # penalize the confidence of the boxes, which have IOU with some ground truth box < 0.6
    true_xy = true_boxes_2[..., 0:2]
    true_wh = true_boxes_2[..., 2:4]

    true_wh_half = true_wh / 2.
    true_mins = true_xy - true_wh_half
    true_maxes = true_xy + true_wh_half

    pred_xy = tf.expand_dims(pred_box_xy, 4)
    pred_wh = tf.expand_dims(pred_box_wh, 4)

    pred_wh_half = pred_wh / 2.
    pred_mins = pred_xy - pred_wh_half
    pred_maxes = pred_xy + pred_wh_half

    intersect_mins = tf.maximum(pred_mins, true_mins)
    intersect_maxes = tf.minimum(pred_maxes, true_maxes)
    intersect_wh = tf.maximum(intersect_maxes - intersect_mins, 0.)
    intersect_areas = intersect_wh[..., 0] * intersect_wh[..., 1]

    true_areas = true_wh[..., 0] * true_wh[..., 1]
    pred_areas = pred_wh[..., 0] * pred_wh[..., 1]

    union_areas = pred_areas + true_areas - intersect_areas
    iou_scores = tf.truediv(intersect_areas, union_areas)

    best_ious = tf.reduce_max(iou_scores, axis=4)
    conf_mask = conf_mask + tf.to_float(best_ious < 0.6) * (1 - y_true[..., 4]) * NO_OBJECT_SCALE

    # penalize the confidence of the boxes, which are responsible for the corresponding ground truth box
    conf_mask = conf_mask + y_true[..., 4] * OBJECT_SCALE

    ### class mask: simply the position of the ground truth boxes (the predictors)
    class_mask = y_true[..., 4] * tf.gather(CLASS_WEIGHTS2, true_box_class) * CLASS_SCALE

    """
    Warm-up training
    """
    no_boxes_mask = tf.to_float(coord_mask < COORD_SCALE/2.)
    seen = tf.assign_add(seen, 1.)

    true_box_xy, true_box_wh, coord_mask = tf.cond(tf.less(seen, WARM_UP_BATCHES),
                          lambda: [true_box_xy + (0.5 + cell_grid) * no_boxes_mask,
                                   true_box_wh + tf.ones_like(true_box_wh) * np.reshape(ANCHORS, [1,1,1,BOX,2]) * no_boxes_mask,
                                   tf.ones_like(coord_mask)],
                          lambda: [true_box_xy,
                                   true_box_wh,
                                   coord_mask])

    """
    Finalize the loss
    """
    nb_coord_box = tf.reduce_sum(tf.to_float(coord_mask > 0.0))
    nb_conf_box = tf.reduce_sum(tf.to_float(conf_mask > 0.0))
    nb_class_box = tf.reduce_sum(tf.to_float(class_mask > 0.0))

    loss_xy = tf.reduce_sum(tf.square(true_box_xy-pred_box_xy) * coord_mask) / (nb_coord_box + 1e-6) / 2.
    loss_wh = tf.reduce_sum(tf.square(true_box_wh-pred_box_wh) * coord_mask) / (nb_coord_box + 1e-6) / 2.
    loss_conf = tf.reduce_sum(tf.square(true_box_conf-pred_box_conf) * conf_mask) / (nb_conf_box + 1e-6) / 2.
    loss_class = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=true_box_class, logits=pred_box_class)
    loss_class = tf.reduce_sum(loss_class * class_mask) / (nb_class_box + 1e-6)

    loss = loss_xy + loss_wh + loss_conf + loss_class

    nb_true_box = tf.reduce_sum(y_true[..., 4])
    nb_pred_box = tf.reduce_sum(tf.to_float(true_box_conf > 0.5) * tf.to_float(pred_box_conf > 0.3))

    """
    Debugging code
    """
    current_recall = nb_pred_box/(nb_true_box + 1e-6)
    total_recall = tf.assign_add(total_recall, current_recall)

    loss = tf.Print(loss, [tf.zeros((1))], message='Dummy Line \t', summarize=1000)
    loss = tf.Print(loss, [loss_xy], message='Loss XY \t', summarize=1000)
    loss = tf.Print(loss, [loss_wh], message='Loss WH \t', summarize=1000)
    loss = tf.Print(loss, [loss_conf], message='Loss Conf \t', summarize=1000)
    loss = tf.Print(loss, [loss_class], message='Loss Class \t', summarize=1000)
    loss = tf.Print(loss, [loss], message='Total Loss \t', summarize=1000)
    loss = tf.Print(loss, [current_recall], message='Current Recall \t', summarize=1000)
    loss = tf.Print(loss, [total_recall/seen], message='Average Recall \t', summarize=1000)

    return loss
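
To connect the formula with the code, the sketch below evaluates the coordinate part of the loss for a single responsible box in plain Python. It can be computed either with the square roots of the formula or directly on w and h, as loss_wh above appears to do. The function name coord_loss, the default lambda_coord of 1.0 and the example boxes are hypothetical.

```python
import math

def coord_loss(pred, true, lambda_coord=1.0, use_sqrt=True):
    """Coordinate loss of one responsible box; pred and true are (x, y, w, h)."""
    px, py, pw, ph = pred
    tx, ty, tw, th = true
    xy_term = (px - tx) ** 2 + (py - ty) ** 2
    if use_sqrt:
        # formula: square roots make an error on a small box count more than on a large one
        wh_term = (math.sqrt(pw) - math.sqrt(tw)) ** 2 + (math.sqrt(ph) - math.sqrt(th)) ** 2
    else:
        # code variant: plain squared difference of width and height
        wh_term = (pw - tw) ** 2 + (ph - th) ** 2
    return lambda_coord * (xy_term + wh_term)

pred = (0.5, 0.5, 4.0, 9.0)   # predicted box, sizes in grid cells
true = (0.5, 0.5, 1.0, 4.0)   # ground-truth box
print(coord_loss(pred, true, use_sqrt=True))
print(coord_loss(pred, true, use_sqrt=False))
```

In the actual implementation each term is additionally masked, scaled and averaged over the number of boxes, which this single-box sketch leaves out.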

In addition to the loss function, we define some extra tools that are useful during training: a criterion for early stopping, to avoid overfitting of the network, and a criterion for saving the weights of the best network during training, namely the one that minimizes the validation loss. The weights of that network will be saved as weights_left_right_lung.h5.

early_stop = EarlyStopping(monitor='val_loss',

min_delta=0.001,

patience=10,

mode='min',

verbose=1)

checkpoint = ModelCheckpoint('weights_left_right_lung.h5',

monitor='val_loss',

verbose=1,

save_best_only=True,

mode='min',

period=1)
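
The effect of min_delta and patience can be illustrated with a simplified re-implementation of the stopping rule in plain Python. This is only an approximation of what the EarlyStopping callback does for mode='min'; the exact behaviour may differ between Keras versions, and the function name and loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, min_delta=0.001, patience=10):
    """Return the index of the epoch at which training would stop, or None."""
    best = float('inf')
    wait = 0
    for epoch, val_loss in enumerate(val_losses):
        if val_loss < best - min_delta:
            # improvement larger than min_delta: remember it and reset the counter
            best = val_loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# Validation loss improves for two epochs, then stays flat
history = [0.5, 0.4] + [0.4] * 10
print(early_stopping_epoch(history))
```

With patience=10, training continues for ten epochs without improvement before it is stopped, so a run of only two epochs never triggers early stopping.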

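Load weights pretrained on the Pascal VOC dataset

The YOLO network we have initialized has random initial parameters. However, we know that YOLO was originally developed for object detection in the natural images of the Pascal VOC dataset, which has 20 different classes. You can imagine that the network has already learned a lot about structures that can be found in images, from edges and corners to more complex structures such as those found in natural images. For this reason it is usually a good idea to start from a "pretrained" network by loading parameters learned on another dataset. In this case we can use the parameters of YOLO trained on Pascal VOC, which we load now.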

# path to file of Pascal VOC YOLO weights

wt_path2 = os.path.join(os.getcwd(), 'pretrained_yolo_weights.h5')

model_2.load_weights(wt_path2)

print("Weights loaded from disk")

Weights loaded from disk

Train the network

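So far, we have:

- created an instance of the YOLO model

- loaded weights pretrained on Pascal VOC into the model

- defined a loss function

- defined the training and validation sets for detecting the left and right lungs

- defined batch generators for the training and validation sets

Now we are ready to train our network!

In the next cell we suggest values for the number of epochs and the learning rate. Try these two values first; they are useful to (1) obtain a fairly stable loss (we have seen that high learning rates often give NaN or very high loss values) and (2) get an idea of how long it takes to train YOLO (with the current settings it should take about 15 minutes per epoch!).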

# define number of epochs

n_epoch = 2

learning_rate = 1e-5

# define a folder where to store log files during training

logwrite = './logs'

if not os.path.exists(logwrite):
    os.makedirs(logwrite)

# Define Adam optimizer

optimizer = Adam(lr=learning_rate, beta_1=0.9, beta_2=0.999, epsilon=1e-08, decay=0.0)

dummy_array = np.zeros((1,1,1,1,TRUE_BOX_BUFFER,4))

# compile YOLO model

model_2.compile(loss=loss, optimizer=optimizer)

# do training!

model_2.fit_generator(generator = train_batch,

steps_per_epoch = len(train_batch),

epochs = n_epoch,

verbose = 1,

validation_data = valid_batch,

validation_steps = len(valid_batch),

callbacks = [early_stop, checkpoint],

max_queue_size = 3)

Epoch 1/2

999/1000 [============================>.] - ETA: 0s - loss: 0.4634Epoch 00000: val_loss improved from inf to 0.28576, saving model to weights_left_right_lung.h5

1000/1000 [==============================] - 347s - loss: 0.4631 - val_loss: 0.2858

Epoch 2/2

999/1000 [============================>.] - ETA: 0s - loss: 0.2194Epoch 00001: val_loss improved from 0.28576 to 0.23901, saving model to weights_left_right_lung.h5

1000/1000 [==============================] - 346s - loss: 0.2194 - val_loss: 0.2390

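After training for a few epochs, we can apply the network to a randomly sampled image from the test set and check whether the result makes sense. Adjust the value of obj_threshold to get the best YOLO output out of all detected bounding boxes.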

# read from test set

test_dir = os.path.join(workdir,'test_images')

test_file_list = os.listdir(test_dir)

img_index = np.random.randint(0, len(test_file_list))

test_img = cv2.imread(os.path.join(test_dir, test_file_list[img_index]))

# define a threshold to apply to predictions

obj_threshold=0.1

# predict bounding boxes using YOLO model2

boxes = predict_bounding_box(os.path.join(test_dir, test_file_list[img_index]), model_2, obj_threshold, NMS_THRESHOLD, ANCHORS, CLASS2)

print(test_file_list[img_index])

# get matplotlib bbox objects

plt_boxes = get_matplotlib_boxes(boxes, test_img.shape)

# visualize result

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(111, aspect='equal')

plt.imshow(test_img, cmap='gray')

for plt_box in plt_boxes:
    ax.add_patch(plt_box)

plt.show()

00028934_000.png

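At this point, if you have trained YOLO for only a few epochs, you have probably noticed that the model does not perform well. We ran some experiments with the current architecture and saw that after about 20 epochs of training the performance is actually very good! So at this point you have two options:

- you can train the current model for about 5 hours, or

- you can load the weights of an already trained YOLO to see how well it detects the left and right lungs

You can load these weights by executing the next cell.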

# path to file of YOLO weights already trained on both lungs

wt_path2 = os.path.join(os.getcwd(), 'weights_both_lungs.h5')

model_2.load_weights(wt_path2)

print("Weights loaded from disk")

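Now you can test on an image again and check whether the predictions make sense. The only difference between the network you trained yourself for a few epochs and the one you just loaded is the training time! In this case, simply training longer improves performance.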

# read from test set

test_dir = os.path.join(workdir,'test_images')

test_file_list = os.listdir(test_dir)

img_index = np.random.randint(0, len(test_file_list))

test_img = cv2.imread(os.path.join(test_dir, test_file_list[img_index]))

# define a threshold to apply to predictions

obj_threshold=0.1

# predict bounding boxes using YOLO model2

boxes = predict_bounding_box(os.path.join(test_dir, test_file_list[img_index]), model_2, obj_threshold, NMS_THRESHOLD, ANCHORS, CLASS2)

print(test_file_list[img_index])

# get matplotlib bbox objects

plt_boxes = get_matplotlib_boxes(boxes, test_img.shape)

# visualize result

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(111, aspect='equal')

plt.imshow(test_img, cmap='gray')

for plt_box in plt_boxes:
    ax.add_patch(plt_box)

plt.show()

00026473_000.png