User behavior analytics big-data system (real-time click counts per category, real-time product sales totals, dynamic site PV and UV)

https://blog.csdn.net/m0_37739193/article/details/74559826

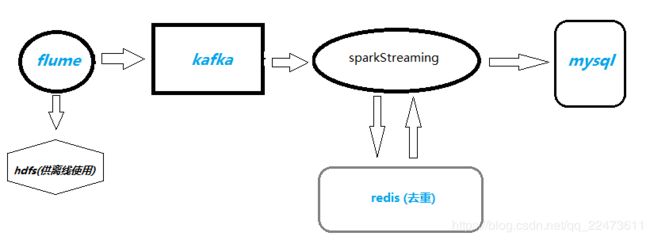

Real-time daily PV and UV with Spark Streaming and Redis, with the results stored in MySQL for front-end display

https://blog.csdn.net/ddxygq/article/details/81258643

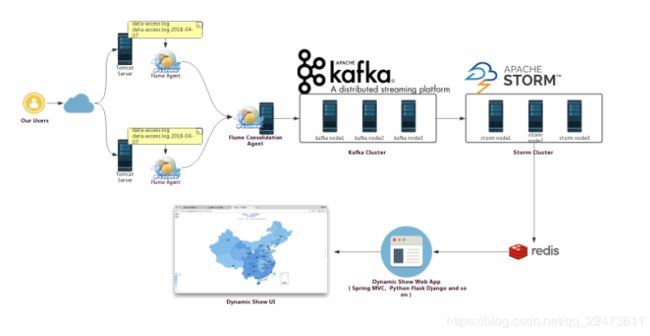

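Flume+Kafka+Storm+Redis: building a real-time big-data processing system (real-time site PV/UV statistics and display)

flume+kafka+slipstream: real-time monitoring of access by blacklisted users

Hands-on SparkStream+Kafka+Redis: computing product sales totals in real time

https://blog.csdn.net/whzhaochao/article/details/77717660

Spark Streaming: reading data from Kafka, processing it, and storing the results in Redis

https://blog.csdn.net/qq_26222859/article/details/79301205

Big-data collection, cleaning, and processing: a complete offline analysis case with MapReduce

https://blog.51cto.com/xpleaf/2095836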

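Flume+Kafka+Storm+Redis: building a real-time big-data processing system (real-time site PV/UV statistics and display)

- 1. How to build the real-time processing system step by step (Flume+Kafka+Storm+Redis)

- 2. Process the site's user access logs in real time and compute the site's PV and UV

- 3. Display the PV and UV computed in real time dynamically on the front-end page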

1. Common big-data processing approaches and the project workflow:

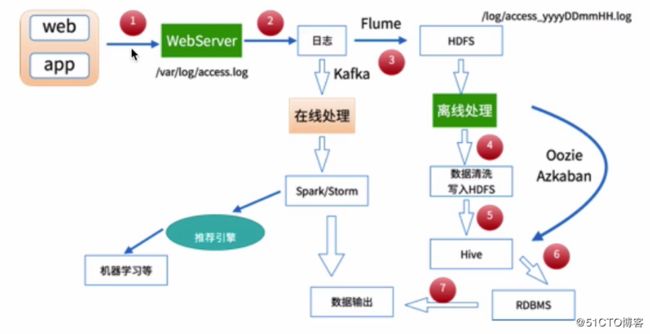

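Two approaches to big-data processing are currently popular: offline processing and online processing. The basic processing architecture is shown in the figure above.

In Internet applications, whichever approach is used, the basic data source is log data. For a web application, for example, this may be user access logs, user click logs, and so on.

If the analysis results are subject to strict timing requirements, the data can be analyzed online, for example with Spark or Storm. A fitting example is Tmall's Double 11 transaction volume: the figure on the display board is updated dynamically in real time, which calls for online processing.

If only the analysis results matter and there is no strict requirement on processing time, offline processing is sufficient. For example, first collect the log data into HDFS, then analyze it with MapReduce, Hive, and so on.

This article mainly walks through the offline processing and analysis of the user access log (access.log) produced by an e-commerce site, based on MapReduce. The end result is the UV and PV of visits to the site per province for a given day.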
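To make the two metrics concrete, here is a minimal sketch in plain Java rather than MapReduce. It assumes each log record has already been cleaned into a hypothetical `Visit` holding the province (resolved from the IP) and the visitor's `mid`; the class and method names are illustrative and do not come from the original article. PV counts every visit, while UV counts each distinct `mid` once per province.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** Hypothetical cleaned record: province resolved from the IP, plus the visitor's mid. */
final class Visit {
    final String province;
    final String mid;

    Visit(String province, String mid) {
        this.province = province;
        this.mid = mid;
    }
}

final class PvUvByProvince {
    /** PV: every visit counts once. */
    static Map<String, Long> pv(List<Visit> visits) {
        Map<String, Long> result = new TreeMap<>();
        for (Visit v : visits) {
            result.merge(v.province, 1L, Long::sum);
        }
        return result;
    }

    /** UV: each distinct mid counts once per province. */
    static Map<String, Integer> uv(List<Visit> visits) {
        Map<String, Set<String>> midsByProvince = new TreeMap<>();
        for (Visit v : visits) {
            midsByProvince.computeIfAbsent(v.province, k -> new HashSet<>()).add(v.mid);
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, Set<String>> e : midsByProvince.entrySet()) {
            result.put(e.getKey(), e.getValue().size());
        }
        return result;
    }
}
```

In a MapReduce job the same idea would typically map each record to a province key and do the counting and de-duplication in the reducer.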

1. Data source

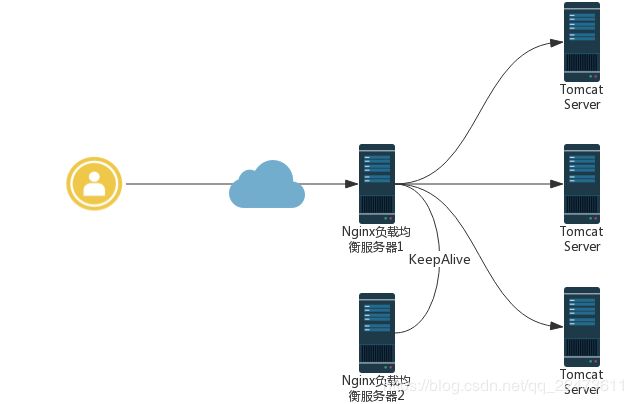

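In our scenario, the web application is deployed with the architecture shown in the figure above:

a fairly typical Nginx load-balancing + Keepalived high-availability cluster. Every web server produces user access logs. The log format specified by the business side is as follows: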

1001 211.167.248.22 eecf0780-2578-4d77-a8d6-e2225e8b9169 40604 1 GET /top HTTP/1.0 408 null null 1523188122767

1003 222.68.207.11 eecf0780-2578-4d77-a8d6-e2225e8b9169 20202 1 GET /tologin HTTP/1.1 504 null Mozilla/5.0 (Windows; U; Windows NT 5.1)Gecko/20070309 Firefox/2.0.0.3 1523188123267

1001 61.53.137.50 c3966af9-8a43-4bda-b58c-c11525ca367b 0 1 GET /update/pass HTTP/1.0 302 null null 1523188123768

1000 221.195.40.145 1aa3b538-2f55-4cd7-9f46-6364fdd1e487 0 0 GET /user/add HTTP/1.1 200 null Mozilla/4.0 (compatible; MSIE 7.0; Windows NT5.2) 1523188124269

1000 121.11.87.171 8b0ea90a-77a5-4034-99ed-403c800263dd 20202 1 GET /top HTTP/1.0 408 null Mozilla/5.0 (Windows; U; Windows NT 5.1)Gecko/20070803 Firefox/1.5.0.12 1523188120263

appid ip mid userid login_type request status http_referer user_agent time

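Where:

appid values: web: 1000, android: 1001, ios: 1002, ipad: 1003

mid: a unique id. On the first visit it is set in the browser's cookie; if it already exists it is not set again. It serves as the unique identifier of the browser. Mobile and pad clients use the device ID directly.

login_type: login state; 0: not logged in, 1: logged in

request: of the form "GET /userList HTTP/1.1"

status: the main request statuses are 200 OK, 404 Not Found, 408 Request Timeout, 500 Internal Server Error, 504 Gateway Timeout, and so on

http_referer: the URL of the previous page from which this URL was requested.

user_agent: browser information, for example: "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2526.106 Safari/537.36"

time: the time as a long (epoch milliseconds), for example 1451451433818.

To back up or rotate the logs: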

vim /opt/cut_nginx.sh

#!/bin/bash

# Rotate the log

datetime=$(date -d "-1 day" "+%Y%m%d")

log_path="/usr/local/nginx/logs"

pid_path="/usr/local/nginx/logs/nginx.pid"

[ -d $log_path/backup ] || mkdir -p $log_path/backup

if [ -f $pid_path ]

then

mv $log_path/access.log $log_path/backup/access.log-$datetime

kill -USR1 $(cat $pid_path)

find $log_path/backup -type f -mtime +30 | xargs rm -f

# mtime: when the file content was last modified; atime: when the file data was last accessed; ctime: when the file metadata (permissions, owner, etc.) last changed

else

echo "Error,Nginx is not working!" | tee -a /var/log/messages

fi

chmod +x /opt/cut_nginx.sh

crontab -e  # set up the scheduled job

0 0 * * * /opt/cut_nginx.sh

1. Simulating real-time data

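import java.io.BufferedWriter;

import java.io.FileWriter;

import java.io.IOException;

import java.util.Random;

public class SimulateData {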

public static void main(String[] args) {

BufferedWriter bw = null;

try {

bw = new BufferedWriter(new FileWriter("G:\\Scala\\实时统计每日的品类的点击次数\\data.txt"));

int i = 0;

while (i < 20000){

long time = System.currentTimeMillis();

int categoryid = new Random().nextInt(23);

bw.write("ver=1&en=e_pv&pl=website&sdk=js&b_rst=1920*1080&u_ud=12GH4079-223E-4A57-AC60-C1A04D8F7A2F&l=zh-CN&u_sd=8E9559B3-DA35-44E1-AC98-85EB37D1F263&c_time="+time+"&p_url=http://list.iqiyi.com/www/"+categoryid+"/---.html");

bw.newLine();

i++;

}

} catch (IOException e) {

e.printStackTrace();

} finally {

// bw stays null if the FileWriter could not be opened, so guard the close

if (bw != null) {

try {

bw.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

}

/*

ver=1&en=e_pv&pl=website&sdk=js&b_rst=1920*1080&u_ud=12GH4079-223E-4A57-AC60-C1A04D8F7A2F&l=zh-CN&u_sd=8E9559B3-DA35-44E1-AC98-85EB37D1F263&c_time=1526975174569&p_url=http://list.iqiyi.com/www/9/---.html

ver=1&en=e_pv&pl=website&sdk=js&b_rst=1920*1080&u_ud=12GH4079-223E-4A57-AC60-C1A04D8F7A2F&l=zh-CN&u_sd=8E9559B3-DA35-44E1-AC98-85EB37D1F263&c_time=1526975174570&p_url=http://list.iqiyi.com/www/4/---.html

ver=1&en=e_pv&pl=website&sdk=js&b_rst=1920*1080&u_ud=12GH4079-223E-4A57-AC60-C1A04D8F7A2F&l=zh-CN&u_sd=8E9559B3-DA35-44E1-AC98-85EB37D1F263&c_time=1526975174570&p_url=http://list.iqiyi.com/www/10/---.html

*/

Simulate real-time writes to data.log (this script must be kept running):

#!/bin/bash

cat demo.csv | while read line

do

echo "$line" >> data.log

sleep 1

done

Alternatively, generate the data and send it directly to Kafka:

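/**

* Data produced here is sent to Kafka. Start a console consumer on the Kafka side to confirm that it receives the data; this step only tests that producing and consuming both work.

* Then start the OnlineBBSUserLogss.java class as the consumer, which processes the data into PV, UV, and so on.

* The console consumer does not have to be stopped first: consumers in different consumer groups each receive every message of a topic, and only consumers within the same group split the partitions between them. (In my test, leaving the console consumer running caused no problems.)

*/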

public class SparkStreamingDataManuallyProducerForKafkas extends Thread{

// Forum channels

static String[] channelNames = new String[]{

"Spark","Scala","Kafka","Flink","Hadoop","Storm",

"Hive","Impala","HBase","ML"

};

// The two kinds of user action

static String[] actionNames = new String[]{"View", "Register"};

private static Producer producerForKafka;

private static String dateToday;

private static Random random;

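// As a thread, override run(): write the business logic first, then the flow control

@Override

public void run() {

int counter = 0; // pause after every 500 records

while(true){ // loop forever to simulate a continuous, asynchronous stream of events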

counter++;

String userLog = userlogs();

System.out.println("product:"+userLog);

// "test" is the topic

producerForKafka.send(new KeyedMessage("test", userLog));

if(0 == counter%500){

counter = 0;

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}

}

}

private static String userlogs() {

StringBuffer userLogBuffer = new StringBuffer("");

int[] unregisteredUsers = new int[]{1, 2, 3, 4, 5, 6, 7, 8};

long timestamp = new Date().getTime();

Long userID = 0L;

long pageID = 0L;

// Randomly generated user ID (null for roughly one in eight records, simulating unregistered users)

if(unregisteredUsers[random.nextInt(8)] == 1) {

userID = null;

} else {

userID = (long) random.nextInt((int) 2000);

}

// Randomly generated page ID

pageID = random.nextInt((int) 2000);

// Randomly chosen channel

String channel = channelNames[random.nextInt(10)];

// Randomly chosen action

String action = actionNames[random.nextInt(2)];

userLogBuffer.append(dateToday)

.append("\t")

.append(timestamp)

.append("\t")

.append(userID)

.append("\t")

.append(pageID)

.append("\t")

.append(channel)

.append("\t")

.append(action); // Do not append \n here: each Kafka message is already a separate record, and a trailing newline would break parsing on the consumer side

return userLogBuffer.toString();

}

public static void main(String[] args) throws Exception {

dateToday = new SimpleDateFormat("yyyy-MM-dd").format(new Date());

random = new Random();

Properties props = new Properties();

props.put("zk.connect", "h71:2181,h72:2181,h73:2181");

props.put("metadata.broker.list","h71:9092,h72:9092,h73:9092");

props.put("serializer.class", "kafka.serializer.StringEncoder");

ProducerConfig config = new ProducerConfig(props);

producerForKafka = new Producer(config);

new SparkStreamingDataManuallyProducerForKafkas().start();

}

}
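The producer above emits tab-separated records in the order date, timestamp, userID, pageID, channel, action, and the userID is the literal text `null` for unregistered users. As a rough sketch of how a consumer might turn such records into PV and UV figures, the plain-Java helper below counts `View` actions per channel and distinct registered users. The class `UserLogStats` and its methods are hypothetical names introduced only for illustration; a real consumer would do this inside the streaming job.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

final class UserLogStats {
    /** PV per channel: counts records whose action is "View". */
    static Map<String, Long> viewsPerChannel(List<String> lines) {
        Map<String, Long> pv = new TreeMap<>();
        for (String line : lines) {
            String[] f = line.split("\t");
            if (f.length != 6) {
                continue; // skip malformed records
            }
            if ("View".equals(f[5])) {
                pv.merge(f[4], 1L, Long::sum);
            }
        }
        return pv;
    }

    /** UV: number of distinct registered user IDs (the text "null" marks unregistered users). */
    static int distinctUsers(List<String> lines) {
        Set<String> users = new HashSet<>();
        for (String line : lines) {
            String[] f = line.split("\t");
            if (f.length != 6 || "null".equals(f[2])) {
                continue;
            }
            users.add(f[2]);
        }
        return users.size();
    }
}
```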

/**

product:2017-06-20 1497948113827 633 1345 Hive View

product:2017-06-20 1497948113828 957 1381 Hadoop Register

product:2017-06-20 1497948113831 300 1781 Spark View

product:2017-06-20 1497948113832 1244 1076 Hadoop Register

**/

2. Data collection: obtaining the raw data

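Data collection: use Flume to collect the user access logs, then either save the collected data to HDFS (offline) or send it to Kafka (real time)

2. Sending data from Flume to Kafka

Read the real-time data from data.log into Kafka:

Step 1: write the Flume configuration file (file2kafka.properties)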

a1.sources = r1

a1.sinks = k1

a1.channels =c1

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /home/hadoop/data.log

a1.channels.c1.type = memory

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k1.topic = aura

a1.sinks.k1.brokerList = hadoop02:9092

a1.sinks.k1.requiredAcks = 1

a1.sinks.k1.batchSize = 5

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Step 2: start Flume

[hadoop@hadoop02 apache-flume-1.8.0-bin]$

bin/flume-ng agent --conf conf --conf-file /usr/local/flume/example/file2kafka.properties --name a1 -Dflume.root.logger=INFO,console

Step 3: start the Kafka consumer

[hadoop@hadoop03 kafka_2.11-1.0.0]$

bin/kafka-console-consumer.sh --zookeeper hadoop:2181 --from-beginning --topic aura

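4. Data cleaning: converting irregular data into regular data (stored in HDFS or Hive for offline analysis and statistics)

4.3.3 Running the MapReduce program

Package the MapReduce program and upload it to the Hadoop environment. Here the log data produced on 2018-04-08 is cleaned by running the following command:

yarn jar data-extract-clean-analysis-1.0-SNAPSHOT-jar-with-dependencies.jar \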

cn.xpleaf.dataClean.mr.job.AccessLogCleanJob \

hdfs://ns1/input/data-clean/access/2018/04/08 \

hdfs://ns1/output/data-clean/access

5. Data processing: statistical analysis of the cleaned data

6. Kafka consumer: real-time computation with SparkStream, results stored in Redis

http://blog.csdn.net/whzhaochao/article/details/77717660

object OrderConsumer {

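// Redis configuration

val dbIndex = 0

// Running sales total per product

val orderTotalKey = "app::order::total"

// Sales total per product in the latest batch (1-second interval)

val oneMinTotalKey = "app::order::product"

// Overall sales total

val totalKey = "app::order::all"

def main(args: Array[String]): Unit = {

// Create the StreamingContext with a 1-second batch interval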

val conf = new SparkConf().setMaster("local").setAppName("UserClickCountStat")

val ssc = new StreamingContext(conf, Seconds(1))

// Kafka configuration

val topics = Set("order")

val brokers = "127.0.0.1:9092"

val kafkaParams = Map[String, String](

"metadata.broker.list" -> brokers,

"serializer.class" -> "kafka.serializer.StringEncoder")

// Create a direct stream

val kafkaStream = KafkaUtils.createDirectStream[String, String, StringDecoder, StringDecoder](ssc, kafkaParams, topics)

// Parse the JSON payload

val events = kafkaStream.flatMap(line => Some(JSON.parseObject(line._2)))

// Group by product ID, then compute the order count and the price total per group

val orders = events.map(x => (x.getString("id"), x.getLong("price"))).groupByKey().map(x => (x._1, x._2.size, x._2.reduceLeft(_ + _)))

// Output

orders.foreachRDD(x =>

x.foreachPartition(partition => {

// Borrow one Redis connection per partition instead of one per record

val jedis = RedisClient.pool.getResource

jedis.select(dbIndex)

partition.foreach(x => {

println("id=" + x._1 + " count=" + x._2 + " price=" + x._3)

// Add to the running sales total of each product

jedis.hincrBy(orderTotalKey, x._1, x._3)

// Sales total of each product in the latest batch

jedis.hset(oneMinTotalKey, x._1.toString, x._3.toString)

// Add to the overall sales total

jedis.incrBy(totalKey, x._3)

})

RedisClient.pool.returnResource(jedis)

})

)

ssc.start()

ssc.awaitTermination()

}

}
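The aggregation in OrderConsumer has two layers: each micro-batch is first reduced to one sum per product id, and that sum is then added to running totals (the role `hincrBy` and `incrBy` play in Redis). The sketch below mimics this with in-memory maps so the arithmetic can be checked without Spark or Redis. `OrderTotals` and its methods are hypothetical names, and orders are passed as `{id, price}` string pairs purely for illustration.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class OrderTotals {
    // Stand-in for the Redis hash that holds the running total per product
    private final Map<String, Long> totalPerProduct = new HashMap<>();
    // Stand-in for the Redis counter that holds the overall total
    private long grandTotal = 0L;

    /** Applies one micro-batch of {id, price} pairs and returns the per-batch sum per id. */
    Map<String, Long> applyBatch(List<String[]> orders) {
        Map<String, Long> batch = new HashMap<>();
        for (String[] order : orders) {
            batch.merge(order[0], Long.parseLong(order[1]), Long::sum);
        }
        for (Map.Entry<String, Long> e : batch.entrySet()) {
            totalPerProduct.merge(e.getKey(), e.getValue(), Long::sum); // like HINCRBY
            grandTotal += e.getValue();                                 // like INCRBY
        }
        return batch;
    }

    long totalFor(String id) {
        return totalPerProduct.getOrDefault(id, 0L);
    }

    long grandTotal() {
        return grandTotal;
    }
}
```

Because the per-batch map is overwritten on every call while the totals accumulate, it corresponds to the "latest batch" key, and the other two values correspond to the cumulative keys.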

/*

id=4 count=3 price=7208

id=8 count=2 price=10152

id=7 count=1 price=6928

id=5 count=1 price=3327

id=6 count=3 price=20483

id=0 count=2 price=9882

*/

Redis client

object RedisClient extends Serializable {

val redisHost = "127.0.0.1"

val redisPort = 6379

val redisTimeout = 30000

lazy val pool = new JedisPool(new GenericObjectPoolConfig(), redisHost, redisPort, redisTimeout)

lazy val hook = new Thread {

override def run = {

println("Execute hook thread: " + this)

pool.destroy()

}

}

sys.addShutdownHook(hook.run)

def main(args: Array[String]): Unit = {

val dbIndex = 0

val jedis = RedisClient.pool.getResource

jedis.select(dbIndex)

jedis.set("test", "1")

println(jedis.get("test"))

RedisClient.pool.returnResource(jedis)

}

}

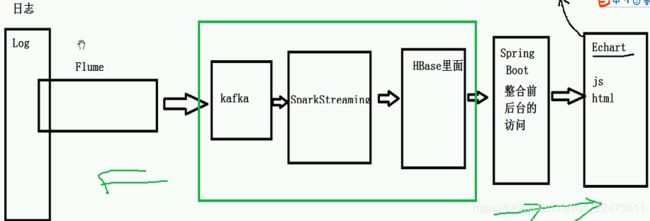

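7. Spark Streaming consumes the Kafka data and processes it, results stored in HBase

Create an HBase table:

Count the clicks per category per day in real time and store them in HBase (how is the HBase table designed, and how is the rowkey designed?)

The rowkey design is: date + name

Example: 2018-05-22_电影 is used as the rowkey.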
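As an illustration of the date + name rowkey, the following sketch derives the key from one simulated log line: it reads `c_time` and the category id inside `p_url`, formats the timestamp as `yyyy-MM-dd`, and joins the day and the category name with an underscore. `RowKeys`, the explicit `TimeZone` parameter, and the `"unknown"` fallback are assumptions made for this example; they are not part of the original Utils class.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Map;
import java.util.TimeZone;

final class RowKeys {
    /** Formats epoch milliseconds as yyyy-MM-dd in the given time zone. */
    static String day(long epochMillis, TimeZone zone) {
        SimpleDateFormat fmt = new SimpleDateFormat("yyyy-MM-dd");
        fmt.setTimeZone(zone);
        return fmt.format(new Date(epochMillis));
    }

    /** Builds "<day>_<categoryName>" from the c_time and p_url fields of one log line. */
    static String rowKey(String line, Map<String, String> categoryNames, TimeZone zone) {
        String time = null;
        String url = null;
        for (String kv : line.split("&")) {
            int eq = kv.indexOf('=');
            if (eq < 0) {
                continue; // not a key=value pair
            }
            String key = kv.substring(0, eq);
            String value = kv.substring(eq + 1);
            if (key.equals("c_time")) {
                time = value;
            } else if (key.equals("p_url")) {
                url = value;
            }
        }
        if (time == null || url == null) {
            throw new IllegalArgumentException("missing c_time or p_url: " + line);
        }
        // "http://host/www/<id>/---.html" splits into: "http:", "", host, "www", id, "---.html"
        String categoryId = url.split("/")[4];
        String name = categoryNames.getOrDefault(categoryId, "unknown");
        return day(Long.parseLong(time), zone) + "_" + name;
    }
}
```

Looking fields up by name instead of by position keeps the key stable if the order of the query parameters changes, and formatting the record's own timestamp keeps late-arriving records in the correct day.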

public class CategoryRealCount11 {

public static String ck = "G:\\Scala\\spark1711\\day25-项目实时统计\\资料\\新建文件夹";

public static void main(String[] args) {

// Initialize the application entry point

SparkConf conf = new SparkConf();

conf.setMaster("local");

conf.setAppName("CategoryRealCount");

JavaStreamingContext ssc = new JavaStreamingContext(conf,Durations.seconds(3));

ssc.checkpoint(ck);

// Read the data

/*HashMap<String, String> kafkaParams = new HashMap<>();

kafkaParams.put("metadata.broker.list","hadoop02:9092,hadoop03:9092,hadoop04:9092");*/

Map<String, Object> kafkaParams = new HashMap<>();

kafkaParams.put("bootstrap.servers", "192.168.123.102:9092,192.168.123.103:9092");

kafkaParams.put("key.deserializer", StringDeserializer.class);

kafkaParams.put("value.deserializer", StringDeserializer.class);

kafkaParams.put("group.id", "use_a_separate_group_id_for_each_stream");

kafkaParams.put("auto.offset.reset", "latest");

kafkaParams.put("enable.auto.commit", false);

/*HashSet<String> topics = new HashSet<>();

topics.add("aura");*/

Collection<String> topics = Arrays.asList("aura");

JavaDStream<String> logDStream = KafkaUtils.createDirectStream(

ssc,

LocationStrategies.PreferConsistent(),

ConsumerStrategies.<String, String>Subscribe(topics, kafkaParams)

).map(new Function<ConsumerRecord<String, String>, String>() {

@Override

public String call(ConsumerRecord<String, String> stringStringConsumerRecord) throws Exception {

return stringStringConsumerRecord.value();

}

});

logDStream.mapToPair(new PairFunction<String, String, Long>() {

@Override

public Tuple2<String, Long> call(String line) throws Exception {

return new Tuple2<>(Utils.getKey(line),1L);

}

}).reduceByKey(new Function2<Long, Long, Long>() {

@Override

public Long call(Long aLong, Long aLong2) throws Exception {

return aLong + aLong2;

}

}).foreachRDD(new VoidFunction2<JavaPairRDD<String, Long>, Time>() {

@Override

public void call(JavaPairRDD<String, Long> RDD, Time time) throws Exception {

RDD.foreachPartition(new VoidFunction<Iterator<Tuple2<String, Long>>>() {

@Override

public void call(Iterator<Tuple2<String, Long>> partition) throws Exception {

HBaseDao hBaseDao = HBaseFactory.getHBaseDao();

while (partition.hasNext()){

Tuple2<String, Long> tuple = partition.next();

hBaseDao.save("aura",tuple._1,"f","name",tuple._2);

System.out.println(tuple._1+" "+ tuple._2);

}

}

});

}

});

/* JavaDStream<String> logDStream;

logDStream = KafkaUtils.createDirectStream(

ssc,

String.class,

String.class,

StringDecoder.class,

StringDecoder.class,

kafkaParams,

topics

).map(new Function<Tuple2<String, String>, String>() {

@Override

public String call(Tuple2<String, String> tuple2) throws Exception {

return tuple2._2;

}

});*/

// Application logic

// Start the application

ssc.start();

try {

ssc.awaitTermination();

} catch (InterruptedException e) {

e.printStackTrace();

}

ssc.stop();

}

}

(Utils):

public class Utils {

public static String getKey(String line) {

HashMap<String, String> map = new HashMap<>();

map.put("0", "其他");

map.put("1", "电视剧");

map.put("2", "电影");

map.put("3", "综艺");

map.put("4", "动漫");

map.put("5", "纪录片");

map.put("6", "游戏");

map.put("7", "资讯");

map.put("8", "娱乐");

………………

// Extract the category ID

String categoryid = line.split("&")[9].split("/")[4];

// Look up the category name

String name = map.get(categoryid);

// Extract the time of the user's visit

String stringTime = line.split("&")[8].split("=")[1];

// Derive the date

String date = getDay(Long.valueOf(stringTime));

return date + "_" + name;

}

public static String getDay(long time){

SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd");

return simpleDateFormat.format(new Date(time)); // format the record's own timestamp, not the current time

}

}

(dao.impl):

public class HBaseImpl implements HBaseDao {

HConnection hatablePool = null;

public HBaseImpl(){

Configuration conf = HBaseConfiguration.create();

// The ZooKeeper bundled with HBase

conf.set("hbase.zookeeper.quorum","hadoop02:2181");

try {

hatablePool = HConnectionManager.createConnection(conf);

} catch (IOException e) {

e.printStackTrace();

}

}

/**

* Get the table object for a table name

* @param tableName table name

* @return the table object

*/

public HTableInterface getTable(String tableName){

HTableInterface table = null;

try {

table = hatablePool.getTable(tableName);

} catch (IOException e) {

e.printStackTrace();

}

return table;

}

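/**

* Insert one record into HBase

* @param tableName table name

* @param rowkey rowkey

* @param family column family

* @param q column qualifier (category)

* @param value number of occurrences

* Example: 2018-12-12_电影 f q 19

* updateStateByKey has somewhat higher memory requirements

* reduceByKey has somewhat lower memory requirements

*/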

@Override

public void save(String tableName, String rowkey, String family, String q, long value) {

HTableInterface table = getTable(tableName);

try {

table.incrementColumnValue(rowkey.getBytes(),family.getBytes(),q.getBytes(),value);

} catch (IOException e) {

e.printStackTrace();

}finally {

if (table != null){

try {

table.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

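/**

* Return the data for a rowkey prefix

* @param tableName table name

* @param rowkey rowkey prefix

* @return the click counts of every category whose rowkey starts with the prefix

*/

@Override

public List<CategoryClickCount> count(String tableName, String rowkey) {

ArrayList<CategoryClickCount> list = new ArrayList<>();

HTableInterface table = getTable(tableName);

PrefixFilter prefixFilter = new PrefixFilter(rowkey.getBytes()); // match rowkeys by prefix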

Scan scan = new Scan();

scan.setFilter(prefixFilter);

try {

ResultScanner scanner = table.getScanner(scan);

for (Result result : scanner){

for (Cell cell : result.rawCells()){

byte[] date_name = CellUtil.cloneRow(cell);

String name = new String(date_name).split("_")[1];

byte[] value = CellUtil.cloneValue(cell);

long count = Bytes.toLong(value);

CategoryClickCount categoryClickCount = new CategoryClickCount(name, count);

list.add(categoryClickCount);

}

}

} catch (IOException e) {

e.printStackTrace();

}finally {

if (table != null){

try {

table.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

return list;

}

}

(dao.factory)

public class HBaseFactory {

public static HBaseDao getHBaseDao(){

return new HBaseImpl();

}

}

Test class:

public class Test {

public static void main(String[] args) {

HBaseDao hBaseDao = HBaseFactory.getHBaseDao();

hBaseDao.save("aura", "2018-05-23_电影","f","name",10L);

hBaseDao.save("aura", "2018-05-23_电影","f","name",20L);

hBaseDao.save("aura", "2018-05-21_电视剧","f","name",11L);

hBaseDao.save("aura", "2018-05-21_电视剧","f","name",24L);

hBaseDao.save("aura", "2018-05-23_电视剧","f","name",110L);

hBaseDao.save("aura", "2018-05-23_电视剧","f","name",210L);

List<CategoryClickCount> list = hBaseDao.count("aura", "2018-05-21");

for (CategoryClickCount cc : list){

System.out.println(cc.getName() + " "+ cc.getCount());

}

}

}
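What the test class exercises is increment-then-prefix-scan: repeated saves to the same rowkey add up, and querying with a date prefix returns every category for that day. The sketch below reproduces that behavior with an in-memory sorted map so it can be checked without an HBase cluster. `InMemoryCounterTable` is a hypothetical stand-in, and it compares keys as Java strings, whereas HBase orders rowkeys by raw bytes.

```java
import java.util.SortedMap;
import java.util.TreeMap;

final class InMemoryCounterTable {
    // Rows kept in sorted key order, loosely mirroring how HBase sorts rowkeys
    private final TreeMap<String, Long> rows = new TreeMap<>();

    /** Adds delta to the counter stored at rowKey and returns the new value. */
    long increment(String rowKey, long delta) {
        return rows.merge(rowKey, delta, Long::sum);
    }

    /** Returns a copy of all rows whose key starts with the given prefix. */
    SortedMap<String, Long> scanPrefix(String prefix) {
        // Every key that starts with the prefix sorts between prefix and prefix + '\uffff'
        return new TreeMap<>(rows.subMap(prefix, prefix + Character.MAX_VALUE));
    }
}
```

With the values saved by the test class, a scan for the prefix 2018-05-21 would be expected to return a single row whose count is 11 + 24 = 35.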

Original article: https://blog.csdn.net/qq_41851454/article/details/80402483

object DauApp {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setAppName("dau_app").setMaster("local[*]")

val ssc = new StreamingContext(sparkConf, Seconds(5))

val inputDstream: InputDStream[ConsumerRecord[String, String]] = MyKafkaUtil.getKafkaStream(GmallConstant.KAFKA_TOPIC_STARTUP, ssc)

// inputDstream.foreachRDD{rdd=>

// println(rdd.map(_.value()).collect().mkString("\n"))

// }

// val dStream: DStream[String] = inputDstream.map { record =>

// val jsonStr: String = record.value()

// jsonStr

// }

// Process the data first, then save the offsets

inputDstream.foreachRDD(rdd => {

// Get the Kafka offset ranges covered by this batch, one per partition

val ranges: Array[OffsetRange] = rdd.asInstanceOf[HasOffsetRanges].offsetRanges

println("length=" + ranges.length)

ranges.foreach(println)

val result: RDD[(String, Int)] = rdd.map(_.value()).flatMap(_.split(",")).map((_, 1)).reduceByKey(_ + _)

result.foreach(println)

result.foreachPartition(p => {

val jedis: Jedis = RedisUtil.getJedisClient

p.foreach(rdd2 => {

// Data processing logic

jedis.hincrBy("wc1", rdd2._1, rdd2._2)

})

// Write the offsets into a Redis hash with hmset, using one field per group, topic, and partition so that partitions do not overwrite each other

val group_id = "gmall_consumer_group"

val map = new util.HashMap[String, String]()

for (o <- ranges) {

map.put(group_id + ":" + o.topic + ":" + o.partition, o.untilOffset.toString)

}

if (!map.isEmpty) jedis.hmset("offsetKey", map)

jedis.close()

})

// Write the array of offsets to MySQL

// ranges.foreach(rdd2 => {

// // Which fields need to be saved? The starting offset is not needed, but the group id must be added

// val pstm = conn.prepareStatement("replace into mysqloffset values (?,?,?,?)")

// pstm.setString(1, rdd2.topic)

// pstm.setInt(2, rdd2.partition)

// pstm.setLong(3, rdd2.untilOffset)

// pstm.setString(4, groupId)

// pstm.execute()

// pstm.close()

// })

})

// Transform the records

val startuplogStream: DStream[Startuplog] = inputDstream.map {

record =>

val jsonStr: String = record.value()

val startuplog: Startuplog = JSON.parseObject(jsonStr, classOf[Startuplog])

val date = new Date(startuplog.ts)

val dateStr: String = new SimpleDateFormat("yyyy-MM-dd HH:mm").format(date)

val dateArr: Array[String] = dateStr.split(" ")

startuplog.logDate = dateArr(0)

startuplog.logHour = dateArr(1).split(":")(0)

startuplog.logHourMinute = dateArr(1)

startuplog

}

// Deduplicate against Redis

val filteredDstream: DStream[Startuplog] = startuplogStream.transform {

rdd =>

println("before filtering: " + rdd.count())

// Runs on the driver, once per batch

val curdate: String = new SimpleDateFormat("yyyy-MM-dd").format(new Date)

val jedis: Jedis = RedisUtil.getJedisClient

val key = "dau:" + curdate

val dauSet: util.Set[String] = jedis.smembers(key) // SMEMBERS key returns all members of the set

jedis.close() // release the connection once the set has been read

val dauBC: Broadcast[util.Set[String]] = ssc.sparkContext.broadcast(dauSet)

val filteredRDD: RDD[Startuplog] = rdd.filter {

startuplog =>

// Runs on the executors

val dauSet: util.Set[String] = dauBC.value

!dauSet.contains(startuplog.mid)

}

println("after filtering: " + filteredRDD.count())

filteredRDD

}

// Deduplication within a batch: group the records that share a mid and keep the first one of each group

val groupbyMidDstream: DStream[(String, Iterable[Startuplog])] = filteredDstream

.map(startuplog => (startuplog.mid, startuplog))

.groupByKey()

val distinctDstream: DStream[Startuplog] = groupbyMidDstream.flatMap {

case (mid, startulogItr) =>

startulogItr.take(1)

}

// Save to Redis

distinctDstream.foreachRDD { rdd =>

// Redis type: set

// key: dau:2019-06-03, value: mids

rdd.foreachPartition { startuplogItr =>

// Runs on the executors

val jedis: Jedis = RedisUtil.getJedisClient

val list: List[Startuplog] = startuplogItr.toList

for (startuplog <- list) {

val key = "dau:" + startuplog.logDate

val value = startuplog.mid

jedis.sadd(key, value)

println(startuplog) // the list is saved to ES in bulk below

}

MyEsUtil.indexBulk(GmallConstant.ES_INDEX_DAU, list)

jedis.close()

}

}

ssc.start()

ssc.awaitTermination()

}

}
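The de-duplication in DauApp happens in two places: records whose mid is already in today's Redis set are filtered out, and within one batch only the first record per mid is kept. Both rules collapse into a single pass if the "seen" set is updated while iterating, as the plain-Java sketch below shows. `DauDedup` and `firstSeen` are hypothetical names, and the in-memory `Set` stands in for the Redis set `dau:<date>`; this is not how the distributed job shares state.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

final class DauDedup {
    /**
     * Returns the mids that appear for the first time today, in arrival order,
     * and records them in seenToday so later batches skip them.
     */
    static List<String> firstSeen(List<String> batchMids, Set<String> seenToday) {
        List<String> fresh = new ArrayList<>();
        for (String mid : batchMids) {
            // Set.add returns false when the mid was already present
            if (seenToday.add(mid)) {
                fresh.add(mid);
            }
        }
        return fresh;
    }
}
```

The size of the seen set after each batch is the day's DAU so far, which is the figure the `dau:<date>` set in Redis tracks.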