ELK Learning (Part 1): Deploying the ELK Services


I. Overview

Elasticsearch + Logstash + Kibana (ELK) is an open-source log management stack.

Official documentation: https://www.elastic.co/guide/index.html

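1. Elasticsearch (management)

Elasticsearch is a search server built on Lucene. It provides a distributed, multi-tenant full-text search engine with a RESTful web interface. Elasticsearch is written in Java, is released as open source under the Apache License, and is a popular enterprise search engine. It is designed for cloud environments and offers near-real-time search while being stable, reliable, fast, and easy to install and use.

2. Logstash (collection)

Logstash is a tool for managing logs and events. You can use it to collect, transform, and parse logs, and then hand them over as data to other components, for example for search or storage.

3. Kibana (visualization)

Kibana is a front-end for displaying logs. It can turn log data into a wide variety of charts, giving users powerful data visualization.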

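II. Environment

Test environment: CentOS 7, 2 GB RAM, 1 CPU core (do not go any lower); hostname: tg01; IP: 10.0.0.203

Version: ELK 6.4.0

III. Deploying the services

1. Install Elasticsearch

Import the Elasticsearch public GPG key into rpm:

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

In the /etc/yum.repos.d/ directory, create a file named elasticsearch.repo with the following content: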

cd /etc/yum.repos.d/

vim elasticsearch.repo

##############################################

[elasticsearch-6.x]

name=Elasticsearch repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

Install:

yum makecache
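You can also write the repository file above without an editor. This helps because the kibana.repo and logstash.repo files later in this post differ from it only in their names. The sketch below is minimal and assumes a POSIX shell. The function `write_elastic_repo` and its two arguments (target directory and repository name) are hypothetical; they exist only so the snippet can be tried in a scratch directory.

```shell
# Hypothetical helper: write an Elastic 6.x yum repository definition.
#   $1 = target directory (/etc/yum.repos.d on a real host)
#   $2 = repository name: elasticsearch, kibana or logstash
write_elastic_repo() {
    dir=${1:-/etc/yum.repos.d}
    name=${2:-elasticsearch}
    # Same settings as the file edited with vim above; only the section
    # header and the display name depend on $name.
    cat > "$dir/$name.repo" <<EOF
[$name-6.x]
name=$name repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF
}
```

For example, `write_elastic_repo /etc/yum.repos.d elasticsearch && yum makecache`, run as root, recreates the file and refreshes the cache. All three files point at the same baseurl, so a single repository file is likely enough to install all three packages.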

yum install elasticsearch -y

Edit the configuration (listen address and port):

vim /etc/elasticsearch/elasticsearch.yml

##############################################

node.name: tg_test_01

network.host: 10.0.0.203 # defaults to localhost; set it to the server's IP

http.port: 9200

Enable start on boot:

systemctl daemon-reload

systemctl enable elasticsearch.service

Start the service:

systemctl start elasticsearch.service

Check its status:

systemctl status elasticsearch.service

##############################################

● elasticsearch.service - Elasticsearch

Loaded: loaded (/usr/lib/systemd/system/elasticsearch.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2018-09-12 09:00:12 CST; 5min ago

Docs: http://www.elastic.co

Main PID: 3843 (java)

CGroup: /system.slice/elasticsearch.service

├─3843 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX...

└─3895 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

9月 12 09:00:12 tg01 systemd[1]: Started Elasticsearch.

9月 12 09:00:12 tg01 systemd[1]: Starting Elasticsearch...

The service is running. Check the listening ports:

netstat -lntup

##############################################

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 935/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1074/master

tcp6 0 0 10.0.0.203:9200 :::* LISTEN 3843/java

tcp6 0 0 10.0.0.203:9300 :::* LISTEN 3843/java

tcp6 0 0 :::22 :::* LISTEN 935/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1074/master

Show the service log:

journalctl --unit elasticsearch

##############################################

-- Logs begin at 三 2018-09-12 08:02:17 CST, end at 三 2018-09-12 09:01:01 CST. --

9月 12 09:00:12 tg01 systemd[1]: Started Elasticsearch.

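9月 12 09:00:12 tg01 systemd[1]: Starting Elasticsearch...

Querying the node for its basic information is another check. If output like the following appears, the configuration is correct: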

curl http://10.0.0.203:9200

##############################################

{

"name" : "tg_test_01",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "7wdhbmETSfWXU6z2KDiiKA",

"version" : {

"number" : "6.4.0",

"build_flavor" : "default",

"build_type" : "rpm",

"build_hash" : "595516e",

"build_date" : "2018-08-17T23:18:47.308994Z",

"build_snapshot" : false,

"lucene_version" : "7.4.0",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

2. Install Kibana

Import the Elasticsearch public GPG key into rpm:

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

In the /etc/yum.repos.d/ directory, create a file named kibana.repo with the following content:

cd /etc/yum.repos.d/

vim kibana.repo

############################################

[kibana-6.x]

name=Kibana repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

Install Kibana:

yum makecache

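yum install kibana -y

Edit the configuration: the listen address and port, plus the Elasticsearch address. Note that server.host must be set to the server's IP address. With the default of localhost, Kibana cannot be reached from other machines.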

vi /etc/kibana/kibana.yml

####################################################

server.host: "10.0.0.203"

server.name: "tg01"

elasticsearch.url: "http://10.0.0.203:9200"

logging.dest: /var/log/kibana.log

Enable start on boot:

systemctl daemon-reload

systemctl enable kibana.service

Start the service:

systemctl start kibana.service

Check its status:

systemctl status kibana.service

##############################################

● kibana.service - Kibana

Loaded: loaded (/etc/systemd/system/kibana.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2018-09-12 09:34:51 CST; 1min 25s ago

Main PID: 6356 (node)

CGroup: /system.slice/kibana.service

└─6356 /usr/share/kibana/bin/../node/bin/node --no-warnings /usr/share/kibana/bin/../src/cli -c ...

9月 12 09:35:12 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:12Z","tags":["status"...rch"}

9月 12 09:35:12 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:12Z","tags":["status"...rch"}

9月 12 09:35:12 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:12Z","tags":["status"...rch"}

9月 12 09:35:12 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:12Z","tags":["status"...rch"}

9月 12 09:35:12 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:12Z","tags":["status"...rch"}

9月 12 09:35:12 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:12Z","tags":["status"...rch"}

9月 12 09:35:12 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:12Z","tags":["info","...ion"}

9月 12 09:35:12 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:12Z","tags":["status"...rch"}

9月 12 09:35:13 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:13Z","tags":["license...ive"}

9月 12 09:35:29 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:29Z","tags":["info","...601"}

Hint: Some lines were ellipsized, use -l to show in full.

Check the ports:

netstat -lntup

##############################################

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 10.0.0.203:5601 0.0.0.0:* LISTEN 6356/node

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 935/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1074/master

tcp6 0 0 10.0.0.203:9200 :::* LISTEN 3843/java

tcp6 0 0 10.0.0.203:9300 :::* LISTEN 3843/java

tcp6 0 0 :::22 :::* LISTEN 935/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1074/master

Show the service log:

journalctl --unit kibana

########################################

-- Logs begin at 三 2018-09-12 08:02:17 CST, end at 三 2018-09-12 09:35:29 CST. --

9月 12 09:34:51 tg01 systemd[1]: Started Kibana.

9月 12 09:34:51 tg01 systemd[1]: Starting Kibana...

9月 12 09:35:09 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:09Z","tags":["status","plugin:

9月 12 09:35:09 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:09Z","tags":["status","plugin:

9月 12 09:35:09 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:09Z","tags":["status","plugin:

9月 12 09:35:09 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:09Z","tags":["status","plugin:

9月 12 09:35:09 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:09Z","tags":["status","plugin:

9月 12 09:35:10 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:10Z","tags":["status","plugin:

9月 12 09:35:10 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:10Z","tags":["status","plugin:

9月 12 09:35:10 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:10Z","tags":["status","plugin:

9月 12 09:35:10 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:10Z","tags":["status","plugin:

9月 12 09:35:10 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:10Z","tags":["status","plugin:

9月 12 09:35:10 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:10Z","tags":["status","plugin:

9月 12 09:35:10 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:10Z","tags":["status","plugin:

9月 12 09:35:10 tg01 kibana[6356]: {"type":"log","@timestamp":"2018-09-12T01:35:10Z","tags":["security","warni

Open http://10.0.0.203:5601 in a browser.
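You can also script the netstat checks used in the Elasticsearch and Kibana steps. The sketch below is minimal and assumes the `netstat -lntup` layout shown above, where the fourth column is the local address for both tcp and udp rows. `listening_on` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if any line of `netstat -lntup` output
# (read from stdin) has a local address ending in :PORT.
listening_on() {
    awk -v port="$1" '
        # $4 is the "Local Address" column for tcp and udp rows alike
        $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}
```

For example, `netstat -lntup | listening_on 5601 && echo 'Kibana is listening'`.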

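3. Create an index

General form of a curl request to Elasticsearch:

curl -XVERB 'PROTOCOL://HOST:PORT/PATH?QUERY_STRING' -d 'BODY'

VERB: the HTTP method: GET, POST, PUT, HEAD, or DELETE

PROTOCOL: http or https (https only when an https proxy sits in front of Elasticsearch)

HOST: the hostname of any node in the Elasticsearch cluster

PORT: the port of the Elasticsearch HTTP service, 9200 by default

PATH: the API endpoint path (for example, _count returns the number of documents in the cluster)

QUERY_STRING: optional query parameters; for example, ?pretty returns human-readable JSON

BODY: a JSON request body (if the request needs one)

The Kibana page opens, but it keeps reporting that there are no indices. Why?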

List the indices:

[root@tg01 yum.repos.d]# curl http://10.0.0.203:9200/_cat/indices

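green open .kibana jVwLlSzQQHuTx0biFSiPNw 1 0 1 0 4kb 4kb

Only the default .kibana index is present, and Kibana uses it to store its own objects. There is no data index yet, so create one:

curl -XPUT 'http://10.0.0.203:9200/tg_test'

After a while the new index shows up, together with the default indices. Two other indices also appear: .monitoring-kibana-6-2018.09.12 and .monitoring-es-6-2018.09.12. These are X-Pack monitoring indices, and they hold the data shown on the Monitoring page described below.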

[root@tg01 tools]# curl -XGET http://10.0.0.203:9200/_cat/indices

green open .kibana jVwLlSzQQHuTx0biFSiPNw 1 0 1 0 4kb 4kb

green open .monitoring-kibana-6-2018.09.12 1F_EkSNYSfqiHOW4B352qw 1 0 50 0 71.5kb 71.5kb

yellow open tg_test 2q_si7CEQ_-mU2_APKwmPw 5 1 0 0 1.2kb 1.2kb

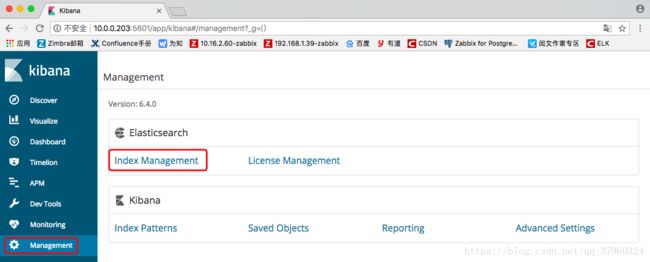

green open .monitoring-es-6-2018.09.12 I14uthd5TY-splmVw1iuDg 1 0 416 52 416.9kb 416.9kb在浏览器中“Management”下选择“Index Management”

The newly created index "tg_test" is listed there.

You can also turn on "Include system indices" next to it to see the default indices.

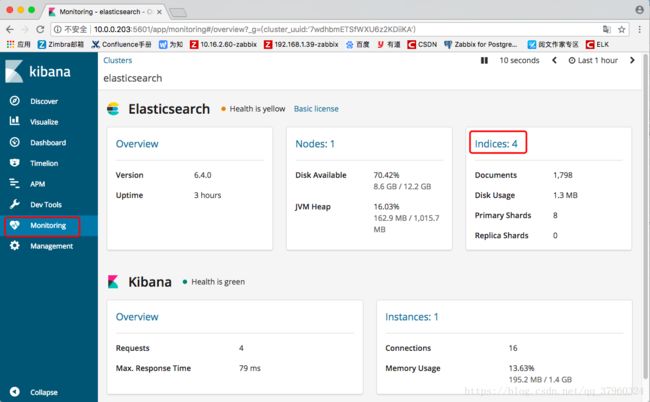

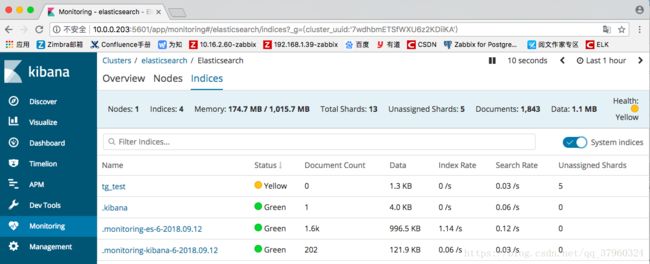

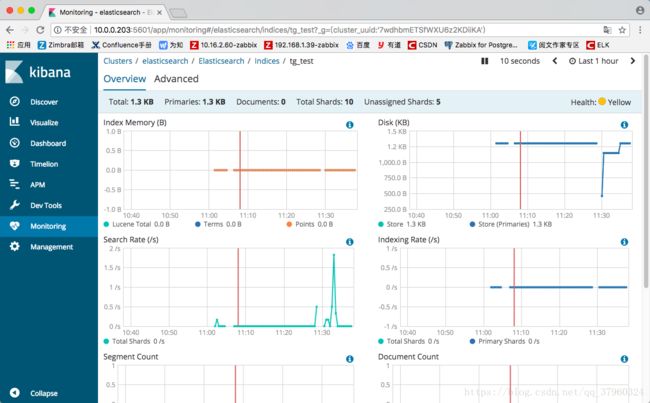

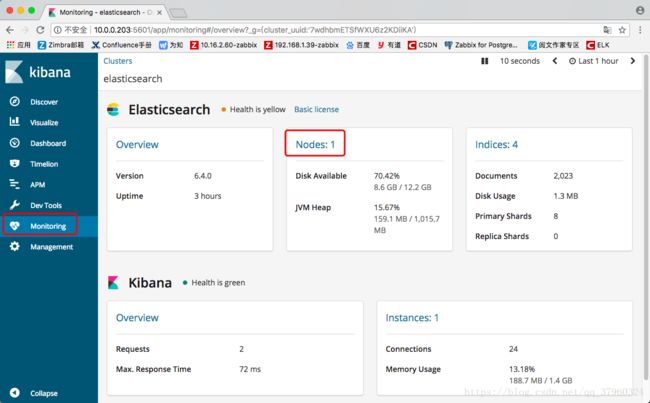
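In the `_cat/indices` output above, `tg_test` is `yellow` while the other indices are `green`. It was created with the 6.x defaults of 5 primary shards and 1 replica (the `5 1` columns). Elasticsearch never places a replica on the same node as its primary, so on this single-node cluster the replicas stay unassigned. The sketch below is minimal and lists such indices from `_cat/indices` output. `yellow_indices` is a hypothetical name, and the sketch assumes the column order shown above: health first, index name third.

```shell
# Hypothetical helper: print the names of yellow indices, reading
# `_cat/indices` output from stdin. With ?v the header line has
# "health" in column 1, so it is skipped automatically.
yellow_indices() {
    awk '$1 == "yellow" { print $3 }'
}
```

For example, run `curl -s http://10.0.0.203:9200/_cat/indices | yellow_indices`. On a single-node test machine, setting the replica count to 0 should turn such an index green: `curl -XPUT 'http://10.0.0.203:9200/tg_test/_settings' -H 'Content-Type: application/json' -d '{"index": {"number_of_replicas": 0}}'`.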

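Click "Monitoring" and select "indices:4".

The tg_test index you created has been added to monitoring.

Click "tg_test" to view its monitoring metrics.

Under "Monitoring", "Nodes" lists the cluster's nodes.

Click "Nodes:1" to see the configured node. It is the node.name set in the configuration file.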


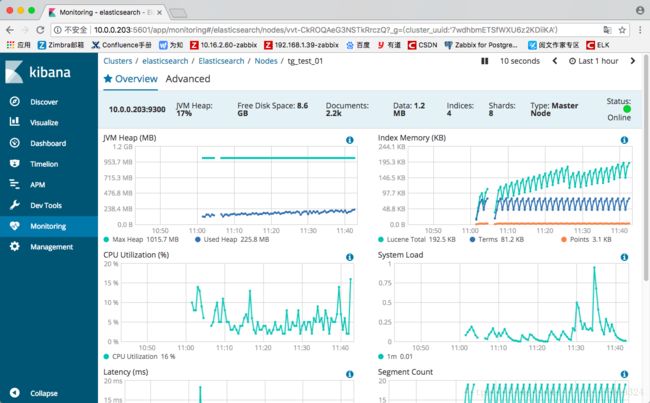

Click "tg_test_01": the node has monitoring metrics too. How to use them is left for later study.
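The request form described at the start of this section can be wrapped in a small shell function. That saves retyping the host, the port, and the `Content-Type: application/json` header, which Elasticsearch 6.x requires on requests that carry a body. The sketch below is minimal; the function name `es` and the `ES_URL` variable are hypothetical.

```shell
# Hypothetical default; override by setting ES_URL before use.
ES_URL=${ES_URL:-http://10.0.0.203:9200}

# Hypothetical wrapper: es VERB ENDPOINT [extra curl arguments...]
# Sends VERB to $ES_URL/ENDPOINT; any remaining arguments (e.g. -d BODY)
# are passed to curl unchanged.
es() {
    verb=$1
    endpoint=$2
    shift 2
    curl -s -X "$verb" -H 'Content-Type: application/json' \
        "$ES_URL/$endpoint" "$@"
}
```

For example, `es GET '_cat/indices?v'` lists the indices and `es PUT tg_test` creates the index from this section.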

4. Install Logstash

Import the Elasticsearch public GPG key into rpm:

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

In the /etc/yum.repos.d/ directory, create a file named logstash.repo with the following content:

cd /etc/yum.repos.d/

vim logstash.repo

###################################

[logstash-6.x]

name=Logstash repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

Install Logstash:

yum makecache

yum install -y logstash

Test configuration

Collect syslog logs with Logstash:

vim /etc/logstash/conf.d/syslog.conf

###########################################

input { # define the log source

syslog {

type => "system-syslog" # define the type

port => 10086 # define the listening port

}

}

output { # define the log output

stdout {

codec => rubydebug # print events on the current terminal

}

}

Check the configuration file for errors:

[root@tg01 yum.repos.d]# cd /usr/share/logstash/bin/

[root@tg01 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-09-12T14:26:56,905][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/var/lib/logstash/queue"}

[2018-09-12T14:26:56,937][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/var/lib/logstash/dead_letter_queue"}

[2018-09-12T14:26:57,937][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

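[2018-09-12T14:27:03,203][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

Options used:

--path.settings: the directory containing the Logstash settings files

-f: the path of the pipeline configuration file to check

--config.test_and_exit: exit after the check; without it, Logstash starts running with this configuration

Configure rsyslog to forward all logs to the Logstash server's IP (here the same host as Kibana) and the listening port. In rsyslog, @@ forwards over TCP, while a single @ would use UDP: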

vim /etc/rsyslog.conf

#### RULES ####

*.* @@10.0.0.203:10086

Restart rsyslog so the change takes effect:

systemctl restart rsyslog

Start Logstash with the configuration file:

cd /usr/share/logstash/bin

[root@tg01 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf

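Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

The terminal now blocks, because the configuration file tells Logstash to print its output to the current terminal.

Open a new terminal and check whether port 10086 is being listened on: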

[root@tg01 ~]# netstat -lntup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 10.0.0.203:5601 0.0.0.0:* LISTEN 14945/node

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 935/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1074/master

tcp6 0 0 10.0.0.203:9200 :::* LISTEN 3843/java

tcp6 0 0 10.0.0.203:9300 :::* LISTEN 3843/java

tcp6 0 0 :::22 :::* LISTEN 935/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1074/master

Port 10086 does not show up yet. Shortly afterwards, the blocked terminal prints new messages:

[2018-09-12T14:32:04,287][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2018-09-12T14:32:04,387][INFO ][logstash.agent ] No persistent UUID file found. Generating new UUID {:uuid=>"85ef4c07-d0ff-430a-b5aa-48835f265599", :path=>"/var/lib/logstash/uuid"}

[2018-09-12T14:32:06,107][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.4.0"}

[2018-09-12T14:32:11,843][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}

[2018-09-12T14:32:12,827][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}

[2018-09-12T14:32:12,931][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2018-09-12T14:32:13,096][INFO ][logstash.inputs.syslog ] Starting syslog udp listener {:address=>"0.0.0.0:10086"}

[2018-09-12T14:32:13,113][INFO ][logstash.inputs.syslog ] Starting syslog tcp listener {:address=>"0.0.0.0:10086"}

[2018-09-12T14:32:13,691][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

[2018-09-12T14:32:57,818][INFO ][logstash.inputs.syslog ] new connection {:client=>"10.0.0.203:48690"}

Check the ports again:

[root@tg01 ~]# netstat -lntup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 10.0.0.203:5601 0.0.0.0:* LISTEN 14945/node

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 935/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1074/master

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 17533/java

tcp6 0 0 10.0.0.203:9200 :::* LISTEN 3843/java

tcp6 0 0 :::10514 :::* LISTEN 17533/java

tcp6 0 0 10.0.0.203:9300 :::* LISTEN 3843/java

tcp6 0 0 :::22 :::* LISTEN 935/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1074/master

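udp 0 0 0.0.0.0:10086 0.0.0.0:* 17533/java

The Logstash process (PID 17533) has opened several new ports. Port 9600 is the Logstash API endpoint reported in the log above, and UDP 10086 is the syslog input; the purpose of the others is not yet clear. Next, SSH into 10.0.0.203 from the local machine.

The blocked terminal prints the log events: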

{

"type" => "system-syslog",

"facility" => 10,

"program" => "sshd",

"severity_label" => "Informational",

"pid" => "17688",

"facility_label" => "security/authorization",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:32:57",

"logsource" => "tg01",

"priority" => 86,

"message" => "Accepted publickey for root from 10.0.0.1 port 53459 ssh2: RSA SHA256:aQCfdpzYLzbQOVU6CKReBBbbfAnjzm6Jcy72/wSCqb0\n",

"@timestamp" => 2018-09-12T06:32:57.000Z

}

{

"type" => "system-syslog",

"facility" => 5,

"program" => "rsyslogd",

"severity_label" => "Informational",

"facility_label" => "syslogd",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:32:57",

"logsource" => "tg01",

"priority" => 46,

"message" => "action 'action 0' resumed (module 'builtin:omfwd') [v8.24.0 try http://www.rsyslog.com/e/2359 ]\n",

"@timestamp" => 2018-09-12T06:32:57.000Z

}

{

"type" => "system-syslog",

"facility" => 5,

"program" => "rsyslogd",

"severity_label" => "Informational",

"facility_label" => "syslogd",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:32:57",

"logsource" => "tg01",

"priority" => 46,

"message" => "action 'action 0' resumed (module 'builtin:omfwd') [v8.24.0 try http://www.rsyslog.com/e/2359 ]\n",

"@timestamp" => 2018-09-12T06:32:57.000Z

}

{

"type" => "system-syslog",

"facility" => 3,

"program" => "systemd",

"severity_label" => "Informational",

"facility_label" => "system",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:32:57",

"logsource" => "tg01",

"priority" => 30,

"message" => "Started Session 17 of user root.\n",

"@timestamp" => 2018-09-12T06:32:57.000Z

}

{

"type" => "system-syslog",

"facility" => 4,

"program" => "systemd-logind",

"severity_label" => "Informational",

"facility_label" => "security/authorization",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:32:57",

"logsource" => "tg01",

"priority" => 38,

"message" => "New session 17 of user root.\n",

"@timestamp" => 2018-09-12T06:32:57.000Z

}

{

"type" => "system-syslog",

"facility" => 10,

"program" => "sshd",

"severity_label" => "Informational",

"pid" => "17688",

"facility_label" => "security/authorization",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:32:57",

"logsource" => "tg01",

"priority" => 86,

"message" => "pam_unix(sshd:session): session opened for user root by (uid=0)\n",

"@timestamp" => 2018-09-12T06:32:57.000Z

}

{

"type" => "system-syslog",

"facility" => 3,

"program" => "systemd",

"severity_label" => "Informational",

"facility_label" => "system",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:32:57",

"logsource" => "tg01",

"priority" => 30,

"message" => "Starting Session 17 of user root.\n",

"@timestamp" => 2018-09-12T06:32:57.000Z

}

{

"type" => "system-syslog",

"facility" => 10,

"program" => "sshd",

"severity_label" => "Informational",

"pid" => "17688",

"facility_label" => "security/authorization",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:33:23",

"logsource" => "tg01",

"priority" => 86,

"message" => "Received disconnect from 10.0.0.1 port 53459:11: disconnected by user\n",

"@timestamp" => 2018-09-12T06:33:23.000Z

}

{

"type" => "system-syslog",

"facility" => 10,

"program" => "sshd",

"severity_label" => "Informational",

"pid" => "17688",

"facility_label" => "security/authorization",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:33:23",

"logsource" => "tg01",

"priority" => 86,

"message" => "Disconnected from 10.0.0.1 port 53459\n",

"@timestamp" => 2018-09-12T06:33:23.000Z

}

{

"type" => "system-syslog",

"facility" => 10,

"program" => "sshd",

"severity_label" => "Informational",

"pid" => "17688",

"facility_label" => "security/authorization",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:33:23",

"logsource" => "tg01",

"priority" => 86,

"message" => "pam_unix(sshd:session): session closed for user root\n",

"@timestamp" => 2018-09-12T06:33:23.000Z

}

{

"type" => "system-syslog",

"facility" => 4,

"program" => "systemd-logind",

"severity_label" => "Informational",

"facility_label" => "security/authorization",

"host" => "10.0.0.203",

"@version" => "1",

"severity" => 6,

"timestamp" => "Sep 12 14:33:23",

"logsource" => "tg01",

"priority" => 38,

"message" => "Removed session 17.\n",

"@timestamp" => 2018-09-12T06:33:23.000Z

}

As shown above, the terminal prints the collected log events in rubydebug format (a JSON-like structure), so the test succeeded.
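Each event above carries a syslog `priority` together with its `facility` and `severity`. The priority is facility × 8 + severity, and the events confirm it: the sshd events have 10 × 8 + 6 = 86 and the systemd events have 3 × 8 + 6 = 30. The sketch below is minimal and reverses the encoding with shell arithmetic; `decode_pri` is a hypothetical name.

```shell
# Hypothetical helper: split a syslog priority (PRI) value into its parts.
# PRI = facility * 8 + severity, so integer division by 8 gives the
# facility and the remainder gives the severity.
decode_pri() {
    pri=$1
    echo "facility=$((pri / 8)) severity=$((pri % 8))"
}
```

For example, `decode_pri 46` gives facility 5 (syslogd) and severity 6 (Informational), matching the rsyslogd events above.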

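Configure Logstash

The configuration above was only for testing. Now change the configuration file so that the collected logs are sent to the Elasticsearch server instead of the current terminal:

vim /etc/logstash/conf.d/syslog.conf # change it to the following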

input {

syslog {

type => "system-syslog"

port => 10086

}

}

output {

elasticsearch {

hosts => ["10.0.0.203:9200"] # Elasticsearch server address

index => "system-syslog-%{+YYYY.MM}" # index name

}

}

Again, check the configuration file for errors:

cd /usr/share/logstash/bin

[root@tg01 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash logs to /var/log/logstash which is now configured via log4j2.properties

[2018-09-12T14:48:42,992][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

Configuration OK

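[2018-09-12T14:48:47,042][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

Give the logstash user ownership of its data and log files. The earlier test runs were executed as root, so any files they created there are owned by root:

chown -R logstash /var/lib/logstash/

chown -R logstash /var/log/logstash/*

Enable start on boot:

systemctl daemon-reload

systemctl enable logstash.service

Start Logstash:

systemctl start logstash.service

Check the ports: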

[root@tg01 bin]# netstat -lntup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 10.0.0.203:5601 0.0.0.0:* LISTEN 14945/node

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 935/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1074/master

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 19094/java

tcp6 0 0 10.0.0.203:9200 :::* LISTEN 3843/java

tcp6 0 0 :::10514 :::* LISTEN 19094/java

tcp6 0 0 10.0.0.203:9300 :::* LISTEN 3843/java

tcp6 0 0 :::22 :::* LISTEN 935/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1074/master

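udp 0 0 0.0.0.0:10086 0.0.0.0:* 19094/java

Note that the Logstash API endpoint on port 9600 listens only on 127.0.0.1, which other hosts cannot reach. The syslog input itself already listens on 0.0.0.0:10086. To change the API's listen address, edit the settings file: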

vim /etc/logstash/logstash.yml

#####################################

http.host: "10.0.0.203"

Restart the service:

systemctl restart logstash.service

Check the ports:

[root@tg01 bin]# netstat -lntup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 10.0.0.203:5601 0.0.0.0:* LISTEN 14945/node

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 935/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1074/master

tcp6 0 0 10.0.0.203:9600 :::* LISTEN 19775/java

tcp6 0 0 10.0.0.203:9200 :::* LISTEN 3843/java

tcp6 0 0 :::10514 :::* LISTEN 19775/java

tcp6 0 0 10.0.0.203:9300 :::* LISTEN 3843/java

tcp6 0 0 :::22 :::* LISTEN 935/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1074/master

udp 0 0 0.0.0.0:10086 0.0.0.0:*

5. View the logs in Kibana

With Logstash set up, go back to the Kibana server to view the logs. The following command lists the indices:

[root@tg01 bin]# curl 'http://10.0.0.203:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .kibana jVwLlSzQQHuTx0biFSiPNw 1 0 2 0 13.6kb 13.6kb

green open .monitoring-kibana-6-2018.09.12 1F_EkSNYSfqiHOW4B352qw 1 0 997 0 374kb 374kb

yellow open system-syslog-2018.09 OqWFiqvtRVip660jN4kUNA 5 1 11 0 79.9kb 79.9kb

yellow open tg_test ZidQDQwBRHirzN-47EeaKw 5 1 0 0 1.2kb 1.2kb

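green open .monitoring-es-6-2018.09.12 I14uthd5TY-splmVw1iuDg 1 0 8164 23 3.6mb

As shown above, the system-syslog index defined in the Logstash configuration has been created. This shows that the configuration works and that Logstash is talking to Elasticsearch.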
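The index name comes from `index => "system-syslog-%{+YYYY.MM}"` in the output section. Logstash formats it from each event's `@timestamp`, which is in UTC. The month in the index name therefore follows UTC rather than the server's local time (CST, UTC+8, here). The sketch below is minimal and reproduces the same naming rule with GNU `date`. `index_for` is a hypothetical helper, and the `+0800` offset in the example is this host's time zone.

```shell
# Hypothetical helper: the index name Logstash would choose for an event
# with the given time. %{+YYYY.MM} is formatted from @timestamp in UTC,
# hence date -u. Requires GNU date (for -d).
index_for() {
    date -u -d "$1" +system-syslog-%Y.%m
}
```

For example, `index_for '2018-10-01 07:00:00 +0800'` prints `system-syslog-2018.09`. At this host's UTC+8 offset, events logged before 08:00 local time on the first of a month still go into the previous month's index.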

Get the details of a specific index:

[root@tg01 bin]# curl -XGET '10.0.0.203:9200/system-syslog-2018.09?pretty'

{

"system-syslog-2018.09" : {

"aliases" : { },

"mappings" : {

"doc" : {

"properties" : {

"@timestamp" : {

"type" : "date"

},

"@version" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"facility" : {

"type" : "long"

},

"facility_label" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"host" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"logsource" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"message" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"pid" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"priority" : {

"type" : "long"

},

"program" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"severity" : {

"type" : "long"

},

"severity_label" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"timestamp" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"type" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

}

},

"settings" : {

"index" : {

"creation_date" : "1536735662461",

"number_of_shards" : "5",

"number_of_replicas" : "1",

"uuid" : "OqWFiqvtRVip660jN4kUNA",

"version" : {

"created" : "6040099"

},

"provided_name" : "system-syslog-2018.09"

}

}

}

}

Delete the index (only if you want to):

curl -XDELETE '10.0.0.203:9200/system-syslog-2018.09'

Once Elasticsearch and Logstash communicate correctly, Kibana can be configured. Open it in a browser:

10.0.0.203:5601

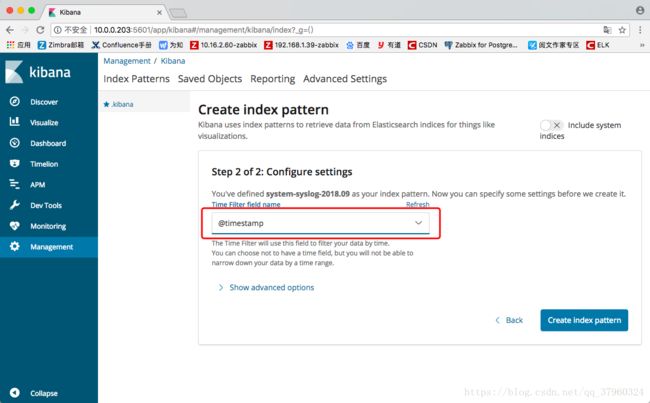

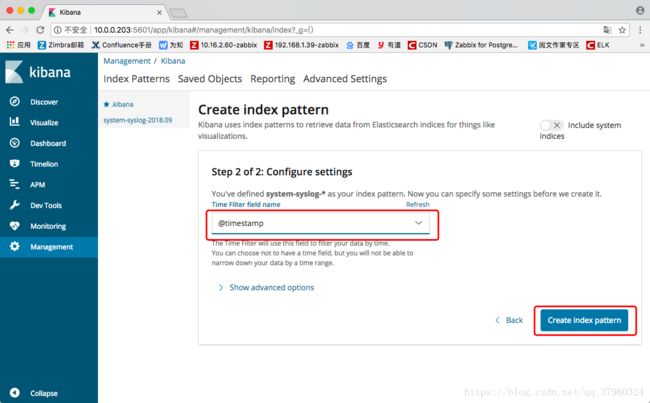

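Click "Management" and enter a specific index, "system-syslog-2018.09",

then select the time field (@timestamp).

Alternatively, in "Management", use a wildcard to match several indices at once: "system-syslog-*".

Every index whose name starts with system-syslog- is matched. Again, select the time field.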

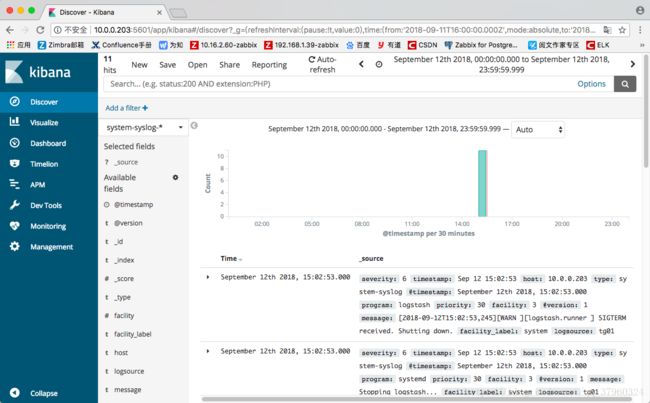
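With the pattern system-syslog-*, any index whose name starts with system-syslog- is included. Next month's index is therefore picked up without changing anything in Kibana. For a single trailing `*`, this works like shell glob matching. The sketch below is minimal and illustrates the matching rule. `matches_pattern` is hypothetical, and in Kibana the pattern is actually resolved by Elasticsearch, not by code like this.

```shell
# Hypothetical helper: succeed if index name $2 matches wildcard pattern $1.
# $1 is left unquoted in the case pattern so that * acts as a wildcard.
matches_pattern() {
    case $2 in
        $1) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `matches_pattern 'system-syslog-*' system-syslog-2018.10` succeeds, while the same pattern rejects `tg_test`.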

Once the index pattern is created, click "Discover".

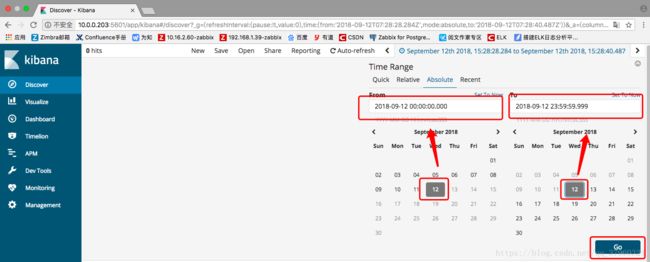

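Select system-syslog-*. If a notice like the one shown above appears, the time range has not been set correctly.

Click "Last 15 minutes" in the upper-right corner and set the range to today, from "2018-09-12 00:00:00.000" to "2018-09-12 23:59:59.999" (click "12" in both calendars below). Then click "OK".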

Look again: the logs are now displayed.
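The time range matters because Kibana's time picker works in the browser's local time, while Elasticsearch stores @timestamp in UTC. For example, the sshd event logged at 14:32:57 local time was stored as 2018-09-12T06:32:57.000Z. The sketch below is minimal and uses GNU date to convert a local calendar day into the UTC range that is actually searched. `day_bounds_utc` is hypothetical, and the +0800 offset matches this host's CST time zone.

```shell
# Hypothetical helper: print the UTC start and end of a local calendar day.
#   $1 = day as YYYY-MM-DD, $2 = local UTC offset such as +0800
# Requires GNU date (for -d).
day_bounds_utc() {
    start=$(date -u -d "$1 00:00:00 $2" +%Y-%m-%dT%H:%M:%SZ)
    end=$(date -u -d "$1 23:59:59 $2" +%Y-%m-%dT%H:%M:%SZ)
    echo "$start $end"
}
```

You can check the same range directly against Elasticsearch, for example with `curl -s -H 'Content-Type: application/json' 'http://10.0.0.203:9200/system-syslog-*/_count' -d '{"query": {"range": {"@timestamp": {"gte": "2018-09-11T16:00:00Z", "lte": "2018-09-12T15:59:59Z"}}}}'`. A count of 0 means no events fall within that local day.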

The ELK environment is now set up; the next step is to put it to use.