Structured Streaming (Continuous Processing error): StreamingQueryException; java.util.NoSuchElementException

Problem description

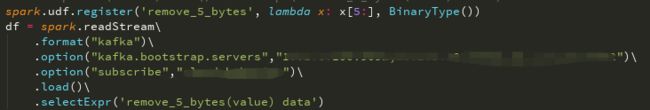

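My query used a UDF, and that is what triggered the error (the stack trace passes through PythonUDFRunner, so it was a Python UDF). As of Spark 2.4, queries in Continuous Processing mode support only projections (select, map, flatMap, mapPartitions, etc.) and selections (where, filter, etc.).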
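
One possible workaround, sketched under assumptions rather than taken from the original query: keep the continuous trigger only when the query sticks to projections and selections with built-in functions, and fall back to the default micro-batch trigger when a Python UDF is needed. The helper names `trigger_kwargs` and `start_console_query`, the console sink, and the 1-second interval are hypothetical; only `DataStreamWriter.trigger` with its `processingTime` and `continuous` keywords comes from PySpark.

```python
def trigger_kwargs(uses_python_udf, interval="1 second"):
    """Return keyword arguments for DataStreamWriter.trigger().

    Hypothetical helper: continuous mode is chosen only when the query
    avoids Python UDFs; otherwise the micro-batch trigger is used.
    """
    if uses_python_udf:
        # Micro-batch trigger: the default engine, where UDFs are usable.
        return {"processingTime": interval}
    # Continuous trigger: here the interval is the checkpoint interval.
    return {"continuous": interval}


def start_console_query(df, uses_python_udf):
    """Start a streaming query on `df` (a streaming DataFrame).

    The console sink is an illustrative choice, not the original sink.
    """
    return (df.writeStream
            .format("console")
            .trigger(**trigger_kwargs(uses_python_udf))
            .start())
```

With PySpark installed, a query that applies a Python UDF would be started as `start_console_query(df_with_udf, uses_python_udf=True)`, which trades the lower latency of continuous mode for UDF support.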

Official documentation

As of Spark 2.4, only the following type of queries are supported in the continuous processing mode.

Operations: Only map-like Dataset/DataFrame operations are supported in continuous mode, that is, only projections (select, map, flatMap, mapPartitions, etc.) and selections (where, filter, etc.).

All SQL functions are supported except aggregation functions (since aggregations are not yet supported), current_timestamp() and current_date() (deterministic computations using time is challenging).

Error message

[Stage 0:>(0 + 3) / 36]2019-09-29 11:26:05 WARN TaskSetManager:66 - Lost task 2.0 in stage 0.0: java.util.NoSuchElementException: None.get

at scala.None$.get(Option.scala:347)

at scala.None$.get(Option.scala:345)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousQueuedDataReader.next(ContinuousQueuedDataReader.scala:116)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousDataSourceRDD$$anon$1.getNext(ContinuousDataSourceRDD.scala:83)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousDataSourceRDD$$anon$1.getNext(ContinuousDataSourceRDD.scala:81)

at org.apache.spark.util.NextIterator.hasNext(NextIterator.scala:73)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anonfun$11$$anon$1.hasNext(WholeStageCodegenExec.scala:619)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$GroupedIterator.fill(Iterator.scala:1124)

at scala.collection.Iterator$GroupedIterator.hasNext(Iterator.scala:1130)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:409)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at org.apache.spark.api.python.PythonRDD$.writeIteratorToStream(PythonRDD.scala:224)

at org.apache.spark.sql.execution.python.PythonUDFRunner$$anon$2.writeIteratorToStream(PythonUDFRunner.scala:50)

at org.apache.spark.api.python.BasePythonRunner$WriterThread$$anonfun$run$1.apply(PythonRunner.scala:345)

at org.apache.spark.util.Utils$.logUncaughtExceptions(Utils.scala:1945)

at org.apache.spark.api.python.BasePythonRunner$WriterThread.run(PythonRunner.scala:194)

2019-09-29 11:26:05 WARN TaskSetManager:66 - Lost task 1.1 in stage 0.0: org.apache.spark.sql.execution.streaming.continuous.ContinuousTaskRetryException: Continuous execution does not support task retry

at org.apache.spark.sql.execution.streaming.continuous.ContinuousDataSourceRDD.compute(ContinuousDataSourceRDD.scala:68)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply$mcV$sp(ContinuousWriteRDD.scala:52)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply(ContinuousWriteRDD.scala:51)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply(ContinuousWriteRDD.scala:51)

at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1394)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD.compute(ContinuousWriteRDD.scala:76)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:402)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:408)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2019-09-29 11:26:05 ERROR TaskSetManager:70 - Task 1 in stage 0.0 failed 4 times; aborting job

2019-09-29 11:26:05 ERROR ContinuousExecution:91 - Query [id = 1c99b68c-b629-4eac-b797-6d41a8050773, runId = 2f4cb83d-6ca9-4fd1-bb9d-2133b277e663] terminated with error

org.apache.spark.SparkException: Writing job aborted.

at org.apache.spark.sql.execution.streaming.continuous.WriteToContinuousDataSourceExec.doExecute(WriteToContinuousDataSourceExec.scala:62)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:155)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousExecution$$anonfun$runContinuous$3$$anonfun$apply$1.apply(ContinuousExecution.scala:262)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousExecution$$anonfun$runContinuous$3$$anonfun$apply$1.apply(ContinuousExecution.scala:262)

at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousExecution$$anonfun$runContinuous$3.apply(ContinuousExecution.scala:262)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousExecution$$anonfun$runContinuous$3.apply(ContinuousExecution.scala:262)

at org.apache.spark.sql.execution.streaming.ProgressReporter$class.reportTimeTaken(ProgressReporter.scala:351)

at org.apache.spark.sql.execution.streaming.StreamExecution.reportTimeTaken(StreamExecution.scala:58)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousExecution.runContinuous(ContinuousExecution.scala:260)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousExecution.runActivatedStream(ContinuousExecution.scala:90)

at org.apache.spark.sql.execution.streaming.StreamExecution.org$apache$spark$sql$execution$streaming$StreamExecution$$runStream(StreamExecution.scala:279)

at org.apache.spark.sql.execution.streaming.StreamExecution$$anon$1.run(StreamExecution.scala:189)

Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Task 1 in stage 0.0 failed 4 times, most recent failure: Lost task 1.3 in stage 0.0 : org.apache.spark.sql.execution.streaming.continuous.ContinuousTaskRetryException: Continuous execution does not support task retry

at org.apache.spark.sql.execution.streaming.continuous.ContinuousDataSourceRDD.compute(ContinuousDataSourceRDD.scala:68)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply$mcV$sp(ContinuousWriteRDD.scala:52)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply(ContinuousWriteRDD.scala:51)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply(ContinuousWriteRDD.scala:51)

at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1394)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD.compute(ContinuousWriteRDD.scala:76)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:402)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:408)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1887)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1875)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1874)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1874)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)

at scala.Option.foreach(Option.scala:257)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2108)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2057)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2046)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:737)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2082)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126)

at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.collect(RDD.scala:944)

at org.apache.spark.sql.execution.streaming.continuous.WriteToContinuousDataSourceExec.doExecute(WriteToContinuousDataSourceExec.scala:53)

... 21 more

Caused by: org.apache.spark.sql.execution.streaming.continuous.ContinuousTaskRetryException: Continuous execution does not support task retry

at org.apache.spark.sql.execution.streaming.continuous.ContinuousDataSourceRDD.compute(ContinuousDataSourceRDD.scala:68)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply$mcV$sp(ContinuousWriteRDD.scala:52)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply(ContinuousWriteRDD.scala:51)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD$$anonfun$compute$1.apply(ContinuousWriteRDD.scala:51)

at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1394)

at org.apache.spark.sql.execution.streaming.continuous.ContinuousWriteRDD.compute(ContinuousWriteRDD.scala:76)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:402)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:408)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2019-09-29 11:26:05 WARN ContinuousExecution:87 - Failed to stop streaming source: KafkaSource[Subscribe[cloud_behavior]]. Resources may have leaked.

java.lang.IllegalStateException: This consumer has already been closed.

at org.apache.kafka.clients.consumer.KafkaConsumer.ensureNotClosed(KafkaConsumer.java:1416)

at org.apache.kafka.clients.consumer.KafkaConsumer.acquire(KafkaConsumer.java:1427)

at org.apache.kafka.clients.consumer.KafkaConsumer.close(KafkaConsumer.java:1360)

at org.apache.spark.sql.kafka010.KafkaOffsetReader.org$apache$spark$sql$kafka010$KafkaOffsetReader$$stopConsumer(KafkaOffsetReader.scala:317)

at org.apache.spark.sql.kafka010.KafkaOffsetReader$$anonfun$close$1.apply$mcV$sp(KafkaOffsetReader.scala:108)

at org.apache.spark.sql.kafka010.KafkaOffsetReader$$anonfun$close$1.apply(KafkaOffsetReader.scala:108)

at org.apache.spark.sql.kafka010.KafkaOffsetReader$$anonfun$close$1.apply(KafkaOffsetReader.scala:108)

at org.apache.spark.sql.kafka010.KafkaOffsetReader.runUninterruptibly(KafkaOffsetReader.scala:255)

at org.apache.spark.sql.kafka010.KafkaOffsetReader.close(KafkaOffsetReader.scala:108)

at org.apache.spark.sql.kafka010.KafkaContinuousReader.stop(KafkaContinuousReader.scala:118)

at org.apache.spark.sql.execution.streaming.StreamExecution$$anonfun$stopSources$1.apply(StreamExecution.scala:373)

at org.apache.spark.sql.execution.streaming.StreamExecution$$anonfun$stopSources$1.apply(StreamExecution.scala:371)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.sql.execution.streaming.StreamExecution.stopSources(StreamExecution.scala:371)

at org.apache.spark.sql.execution.streaming.StreamExecution$$anonfun$org$apache$spark$sql$execution$streaming$StreamExecution$$runStream$2.apply(StreamExecution.scala:319)

at org.apache.spark.util.UninterruptibleThread.runUninterruptibly(UninterruptibleThread.scala:77)

at org.apache.spark.sql.execution.streaming.StreamExecution.org$apache$spark$sql$execution$streaming$StreamExecution$$runStream(StreamExecution.scala:308)

at org.apache.spark.sql.execution.streaming.StreamExecution$$anon$1.run(StreamExecution.scala:189)