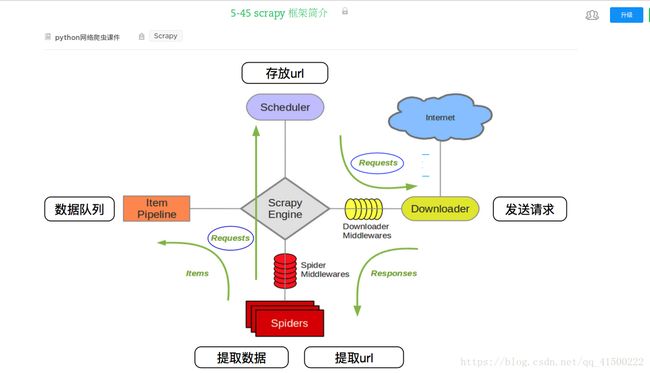

Workflow

Storing data in a MongoDB database:

    import pymongo

    from jobboleproject.items import JobboleprojectItem


    class JobboleprojectPipeline(object):

        def __init__(self, mongo_host, mongo_port, mongo_db):
            # Connect once per crawl and keep a handle to the target collection.
            self.mongoConn = pymongo.MongoClient(mongo_host, mongo_port)
            self.db = self.mongoConn[mongo_db]
            self.col = self.db.student

        @classmethod
        def from_crawler(cls, crawler):
            # Scrapy calls this to build the pipeline from the project settings.
            return cls(
                mongo_host=crawler.settings.get('MONGODB_HOST'),
                mongo_port=crawler.settings.getint('MONGODB_PORT'),
                mongo_db=crawler.settings.get('MONGODB_DB'),
            )

        def open_spider(self, spider):
            print('Spider started:', spider.name)

        def process_item(self, item, spider):
            print('Pipeline received an item')
            # insert_one() replaces Collection.insert(), which PyMongo 4 removed.
            self.col.insert_one(dict(item))
            return item

        def close_spider(self, spider):
            print('Spider finished:', spider.name)
            self.mongoConn.close()
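The `from_crawler` pattern used by the pipeline can be tried without Scrapy or a running MongoDB server. The following is a minimal sketch under stated assumptions: `FakeSettings`, `FakeCrawler`, and `InMemoryPipeline` are hypothetical stand-ins written for this illustration (they are not Scrapy classes), and items are collected in a list instead of being written to a collection.

```python
class FakeSettings:
    """Hypothetical, dict-backed stand-in for Scrapy's settings object."""

    def __init__(self, values):
        self._values = dict(values)

    def get(self, name, default=None):
        return self._values.get(name, default)

    def getint(self, name, default=0):
        return int(self._values.get(name, default))


class FakeCrawler:
    """Hypothetical stand-in for a Scrapy crawler; only exposes .settings."""

    def __init__(self, settings):
        self.settings = settings


class InMemoryPipeline:
    """Same construction and item hooks as the pipeline above, minus the database."""

    def __init__(self, host, port, db):
        self.host, self.port, self.db = host, port, db
        self.items = []

    @classmethod
    def from_crawler(cls, crawler):
        # Read connection parameters from settings instead of hard-coding them.
        return cls(
            host=crawler.settings.get('MONGODB_HOST'),
            port=crawler.settings.getint('MONGODB_PORT'),
            db=crawler.settings.get('MONGODB_DB'),
        )

    def process_item(self, item, spider):
        self.items.append(dict(item))
        return item  # hand the item on to the next pipeline


crawler = FakeCrawler(FakeSettings({
    'MONGODB_HOST': 'localhost',
    'MONGODB_PORT': '27017',  # given as a string here to show the int conversion
    'MONGODB_DB': 'scrapy1803',
}))
pipeline = InMemoryPipeline.from_crawler(crawler)
pipeline.process_item({'title': 'example'}, spider=None)
```

Keeping the connection parameters in settings means the same pipeline class can point at a different server without code changes.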

Storing data in a MySQL database:

    import pymysql

    from jobboleproject.items import JobboleprojectItem


    class JobboleprojectPipeline(object):

        def __init__(self, host, port, db, user, pwd):
            self.mysqlConn = pymysql.connect(
                host=host, user=user, password=pwd,
                database=db, port=port, charset='utf8',
            )
            self.cursor = self.mysqlConn.cursor()

        @classmethod
        def from_crawler(cls, crawler):
            # Scrapy calls this to build the pipeline from the project settings.
            return cls(
                host=crawler.settings.get('MYSQL_HOST'),
                port=crawler.settings.getint('MYSQL_PORT'),
                db=crawler.settings.get('MYSQL_DB'),
                user=crawler.settings.get('MYSQL_USER'),
                pwd=crawler.settings.get('MYSQL_PWD'),
            )

        def open_spider(self, spider):
            print('Spider started:', spider.name)

        def process_item(self, item, spider):
            print('Pipeline received an item')
            # Parameterized query: the driver escapes the values.
            sql = """
            INSERT INTO abc(coverImage,link)
            VALUES (%s,%s)
            """
            try:
                self.cursor.execute(sql, (item['coverImage'], item['link']))
                self.mysqlConn.commit()
            except Exception as err:
                # Undo the failed insert and keep the crawl running.
                print(err)
                self.mysqlConn.rollback()
            return item

        def close_spider(self, spider):
            print('Spider finished:', spider.name)
            self.cursor.close()
            self.mysqlConn.close()


    class JobboleprojectPipeline1(object):

        def process_item(self, item, spider):
            print('Pipeline 1 received an item')
            return item
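The insert-then-commit-or-rollback step can be tried without a MySQL server. The sketch below swaps in the standard-library `sqlite3` module purely for illustration; it is not PyMySQL, and it uses `?` placeholders where PyMySQL uses `%s`. The table layout (including the `UNIQUE` constraint on `link`) and the helper name `save_item` are assumptions made for this example.

```python
import sqlite3


def save_item(conn, item):
    """Insert one item; return True on success, False if it was rolled back."""
    sql = "INSERT INTO abc (coverImage, link) VALUES (?, ?)"
    try:
        conn.execute(sql, (item['coverImage'], item['link']))
        conn.commit()
        return True
    except (sqlite3.Error, KeyError) as err:
        # A failed insert (or a missing field) must not leave a half-done transaction.
        print(err)
        conn.rollback()
        return False


conn = sqlite3.connect(':memory:')
# Assumed schema: the original notes do not show how table `abc` is defined.
conn.execute("CREATE TABLE abc (coverImage TEXT, link TEXT NOT NULL UNIQUE)")

ok = save_item(conn, {'coverImage': 'a.png', 'link': 'http://example.com/1'})
dup = save_item(conn, {'coverImage': 'b.png', 'link': 'http://example.com/1'})
rows = conn.execute("SELECT coverImage, link FROM abc").fetchall()
```

The second call violates the uniqueness constraint, so it is rolled back and only the first row remains in the table.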

Storing data as a JSON file:

    import json


    class JobboleprojectPipeline(object):

        def __init__(self):
            # Append mode; explicit UTF-8 because ensure_ascii=False writes non-ASCII text.
            self.file = open('data.json', 'a', encoding='utf-8')

        def open_spider(self, spider):
            print('Spider started:', spider.name)

        def process_item(self, item, spider):
            print('Pipeline received an item')
            # One JSON object per line (JSON Lines).
            data = json.dumps(dict(item), ensure_ascii=False) + '\n'
            self.file.write(data)
            return item

        def close_spider(self, spider):
            print('Spider finished:', spider.name)
            self.file.close()
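Because the pipeline writes one JSON object per line, the resulting file is not a single JSON document and has to be read back line by line. A minimal sketch of that round trip follows; the helper names `write_items` and `read_items` and the sample items are made up for illustration, and a temporary directory is used so no real `data.json` is touched.

```python
import json
import os
import tempfile


def write_items(path, items):
    """Append each item as one JSON object per line."""
    with open(path, 'a', encoding='utf-8') as f:
        for item in items:
            f.write(json.dumps(item, ensure_ascii=False) + '\n')


def read_items(path):
    """Parse the file line by line, skipping blank lines."""
    with open(path, encoding='utf-8') as f:
        return [json.loads(line) for line in f if line.strip()]


path = os.path.join(tempfile.mkdtemp(), 'data.json')
write_items(path, [{'title': '流程', 'link': 'http://example.com/1'}])
write_items(path, [{'title': 'second', 'link': 'http://example.com/2'}])
loaded = read_items(path)
```

Opening the file with an explicit `encoding='utf-8'` on both sides keeps non-ASCII text intact regardless of the platform's default encoding.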

Database connection settings in the settings file:

    MONGODB_HOST = 'localhost'
    MONGODB_PORT = 27017
    MONGODB_DB = 'scrapy1803'

    MYSQL_HOST = 'localhost'
    MYSQL_PORT = 3306
    MYSQL_USER = 'root'
    MYSQL_PWD = 'ljh1314'
    MYSQL_DB = 'class1803'
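A pipeline only runs if it is also enabled through the `ITEM_PIPELINES` setting. The fragment below is a sketch: the module path `jobboleproject.pipelines` is an assumption based on the default Scrapy project layout, and the priority numbers are arbitrary example values (lower numbers run first, customarily within 0 to 1000).

```python
# settings.py (sketch): the 'jobboleproject.pipelines' module path is assumed.
ITEM_PIPELINES = {
    'jobboleproject.pipelines.JobboleprojectPipeline': 300,   # runs first
    'jobboleproject.pipelines.JobboleprojectPipeline1': 301,  # receives the returned item
}
```

Each pipeline must `return item` in `process_item`, otherwise the next pipeline in this ordering has nothing to process.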