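Training maskrcnn-benchmark on your own dataset (VOC format)

Last December Facebook released PyTorch 1.0 and open-sourced implementations of Mask R-CNN and FPN built on it. Compared with Detectron, this implementation trains faster and uses less GPU memory. For details, see: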

https://github.com/facebookresearch/maskrcnn-benchmark

To install PyTorch 1.0, see the official PyTorch site. To install maskrcnn-benchmark, see:

https://github.com/facebookresearch/maskrcnn-benchmark/blob/master/INSTALL.md

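Demo test

After installation, run the demo to verify the setup. From the maskrcnn-benchmark root directory (all commands below assume this directory), run the following (a webcam is required):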

    cd demo
    # by default, it runs on the GPU
    # for best results, use min-image-size 800
    python webcam.py --min-image-size 800

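You should see detection results at close to real-time speed.

Training on your own dataset

maskrcnn-benchmark now supports VOC-format datasets, so there is no need to convert the dataset format.

Data preparation

1. In the root directory, create an experiments folder, and inside it create two folders, cfgs and result. Copy e2e_faster_rcnn_R_50_FPN_1x.yaml from configs into cfgs to serve as our config file.

2. In the root directory, create a pre_train folder to hold the pretrained model. Download a pretrained model from https://github.com/facebookresearch/maskrcnn-benchmark/blob/master/MODEL_ZOO.md into this folder.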

On that page, click the blue id of the model you want in order to download it.

3. Create a symlink to the dataset:

ln -s /path_to_VOCdevkit_dir datasets/voc

4. Edit the config file. Open the config file we copied above.

The main changes are:

MODEL.WEIGHT: the path to the pretrained model we just downloaded

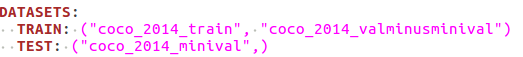

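DATASETS.TRAIN: your own dataset

DATASETS.TEST: your own dataset

When specifying datasets, do not forget the trailing comma if there is only one dataset.

Add your own output directory as the last line of the config file:

OUTPUT_DIR: ./experiments/result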
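
The trailing comma matters because a dataset field is meant to be a tuple of names. Without the comma the parentheses only group, and the value is a plain string that the config loader may reject or misread. A small illustration in plain Python, where `voc_2007_train` is only an example name (substitute whatever name your setup registers) and `dataset_names` is a hypothetical helper:

```python
# With a trailing comma the value is a one-element tuple of dataset names.
train_ok = ("voc_2007_train",)

# Without it, the parentheses only group and the value is a plain string.
train_bad = ("voc_2007_train")


def dataset_names(value):
    """Return what a loop over the configured value would see."""
    return [name for name in value]


print(type(train_ok).__name__, dataset_names(train_ok))
print(type(train_bad).__name__, dataset_names(train_bad)[:3])
```

Iterating over the tuple yields the dataset name, while iterating over the string yields single characters.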

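I recently saw some questions on GitHub about parameter settings, so here is an update.

Regarding this setting in maskrcnn_benchmark/config/defaults.py:

MODEL.RPN.FPN_POST_NMS_TOP_N_TRAIN 2000

The 2000 here is the number of boxes kept after NMS per batch, not per image. The rule for setting it is 1000 × images per GPU.

Usually this does not need to be changed. We only need to set the following according to the number of GPUs:

_C.SOLVER.IMS_PER_BATCH = 16  # 16 = 2 × 8, i.e. 8 GPUs

_C.TEST.IMS_PER_BATCH = 8  # 1 × 8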
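
The arithmetic above can be written as a small helper. This is only a sketch of the rule as stated in this post: `batch_settings` and its parameter names are hypothetical, and the defaults of 2 training images and 1 test image per GPU are taken from the comments above.

```python
def batch_settings(num_gpus, images_per_gpu_train=2, images_per_gpu_test=1):
    """Derive the batch-related settings from the number of GPUs.

    The post-NMS count follows the rule quoted above:
    1000 x images per GPU, applied per batch.
    """
    return {
        "SOLVER.IMS_PER_BATCH": images_per_gpu_train * num_gpus,
        "TEST.IMS_PER_BATCH": images_per_gpu_test * num_gpus,
        "MODEL.RPN.FPN_POST_NMS_TOP_N_TRAIN": 1000 * images_per_gpu_train,
    }


print(batch_settings(8))
```

With 8 GPUs this reproduces the values 16, 8 and 2000 given above.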

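Code changes

Using a pretrained model requires some code changes, mainly because the number of classes in your own dataset does not match the 81 classes of the pretrained model.

Edit maskrcnn_benchmark/config/defaults.py:

Set _C.MODEL.ROI_BOX_HEAD.NUM_CLASSES to the number of classes in your dataset plus 1 (the extra one is the background class).

Set _C.SOLVER.CHECKPOINT_PERIOD to 10000 (optional). A larger value reduces the number of saved checkpoints.

Edit maskrcnn_benchmark/modeling/roi_heads/box_head/roi_box_predictors.py:

Because our final number of output classes differs from that of the pretrained model, we rename the last layers so that loading the model does not fail.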

    class FPNPredictor(nn.Module):
        def __init__(self, cfg):
            super(FPNPredictor, self).__init__()
            num_classes = cfg.MODEL.ROI_BOX_HEAD.NUM_CLASSES
            representation_size = cfg.MODEL.ROI_BOX_HEAD.MLP_HEAD_DIM

            # change cls_score to cls_score_kitti, change bbox_pred to bbox_pred_kitti. by GX
            self.cls_score_kitti = nn.Linear(representation_size, num_classes)
            self.bbox_pred_kitti = nn.Linear(representation_size, num_classes * 4)

            nn.init.normal_(self.cls_score_kitti.weight, std=0.01)
            nn.init.normal_(self.bbox_pred_kitti.weight, std=0.001)
            for l in [self.cls_score_kitti, self.bbox_pred_kitti]:
                nn.init.constant_(l.bias, 0)

        def forward(self, x):
            scores = self.cls_score_kitti(x)
            bbox_deltas = self.bbox_pred_kitti(x)
            return scores, bbox_deltas
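
Renaming works because the renamed layers no longer find a same-named entry in the checkpoint, so they keep their fresh initialization. An alternative that avoids editing the model code is to remove the class-dependent entries from the checkpoint before loading it. The sketch below is an assumption, not the method used in this post: `drop_class_dependent_keys` is a hypothetical helper, and the key names (`box.predictor.cls_score`, `box.predictor.bbox_pred`) are assumed, so inspect the keys of your own checkpoint first.

```python
def drop_class_dependent_keys(
    state_dict,
    markers=("box.predictor.cls_score", "box.predictor.bbox_pred"),
):
    """Return a copy of state_dict without entries whose key contains a marker."""
    return {
        key: value
        for key, value in state_dict.items()
        if not any(marker in key for marker in markers)
    }


# Toy stand-in for a checkpoint's weights; real values would be tensors.
pretrained = {
    "backbone.body.stem.conv1.weight": "kept",
    "rpn.head.bbox_pred.weight": "kept (not class-dependent)",
    "roi_heads.box.predictor.cls_score.weight": "dropped",
    "roi_heads.box.predictor.bbox_pred.bias": "dropped",
}
print(sorted(drop_class_dependent_keys(pretrained)))
```

The markers are deliberately specific: a bare `bbox_pred` would also match the RPN head entry in this toy example, which does not depend on the number of classes.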

Edit maskrcnn_benchmark/utils/checkpoint.py and comment out the code that loads the optimizer and the scheduler. This is also needed because the dataset classes do not match.

    def load(self, f=None):
        if self.has_checkpoint():
            # override argument with existing checkpoint
            f = self.get_checkpoint_file()
        if not f:
            # no checkpoint could be found
            self.logger.info("No checkpoint found. Initializing model from scratch")
            return {}
        self.logger.info("Loading checkpoint from {}".format(f))
        checkpoint = self._load_file(f)
        self._load_model(checkpoint)
        # if "optimizer" in checkpoint and self.optimizer:
        #     self.logger.info("Loading optimizer from {}".format(f))
        #     self.optimizer.load_state_dict(checkpoint.pop("optimizer"))
        # if "scheduler" in checkpoint and self.scheduler:
        #     self.logger.info("Loading scheduler from {}".format(f))
        #     self.scheduler.load_state_dict(checkpoint.pop("scheduler"))

        # return any further checkpoint data
        return checkpoint

Edit maskrcnn_benchmark/data/datasets/voc.py and change CLASSES to the classes of your own dataset.
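
As an illustration of how CLASSES relates to the NUM_CLASSES setting, here is a minimal sketch using the three class names that appear in the result-writing code later in this post. The variable names `class_to_ind` and `num_classes` are chosen for the example only.

```python
# Example class tuple for a three-class dataset; index 0 is the background.
CLASSES = ("__background__", "pedestrian", "car", "cyclist")

# Map each class name to its integer label.
class_to_ind = dict(zip(CLASSES, range(len(CLASSES))))

# Value for MODEL.ROI_BOX_HEAD.NUM_CLASSES: dataset classes plus background.
num_classes = len(CLASSES)

print(class_to_ind)
print(num_classes)
```

Three dataset classes plus the background give NUM_CLASSES = 4, matching the "number of classes plus 1" rule.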

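Edit tools/train_net.py. Loading the pretrained model also loads its iteration count, so training would not start from 0. To fix this, add the line arguments["iteration"] = 0 directly below arguments.update(extra_checkpoint_data). (Comment it out when you want to resume from the saved iteration.)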
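
The effect of that extra line can be seen with plain dictionaries. This is only a toy sketch: the value 90000 is an illustrative iteration count, and `resumed_at` is a name introduced for the example.

```python
# Training arguments as initialised before the checkpoint is loaded.
arguments = {"iteration": 0}

# Stand-in for the extra data returned when loading a pretrained checkpoint.
extra_checkpoint_data = {"iteration": 90000}

# update() overwrites the counter with the value stored in the checkpoint.
arguments.update(extra_checkpoint_data)
resumed_at = arguments["iteration"]

# The added line: restart the count for fine-tuning on the new dataset.
arguments["iteration"] = 0

print(resumed_at, arguments["iteration"])
```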

Everything is now ready.

From the root directory, run:

python tools/train_net.py --config-file "experiments/cfgs/e2e_faster_rcnn_R_50_FPN_1x.yaml" SOLVER.IMS_PER_BATCH 2 SOLVER.BASE_LR 0.0025 SOLVER.MAX_ITER 720000 TEST.IMS_PER_BATCH 1

This starts training. By default, the final model is tested automatically once training finishes.
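
The values in the command above are consistent with scaling a 16-image schedule linearly down to a batch of 2. The sketch below makes that arithmetic explicit. The reference values (learning rate 0.02, 90000 iterations, steps at 60000 and 80000) are assumptions about the copied config, so check them against your own file; `scale_schedule` is a hypothetical helper.

```python
def scale_schedule(ims_per_batch, ref_batch=16, ref_lr=0.02,
                   ref_max_iter=90000, ref_steps=(60000, 80000)):
    """Scale a reference schedule linearly to a different total batch size.

    The reference values are assumptions; verify them in your config file.
    """
    return {
        "SOLVER.IMS_PER_BATCH": ims_per_batch,
        # The learning rate shrinks in proportion to the batch size.
        "SOLVER.BASE_LR": ref_lr * ims_per_batch / ref_batch,
        # Iteration counts grow by the inverse factor.
        "SOLVER.MAX_ITER": ref_max_iter * ref_batch // ims_per_batch,
        "SOLVER.STEPS": tuple(s * ref_batch // ims_per_batch for s in ref_steps),
    }


print(scale_schedule(2))
```

For a batch of 2 this gives a learning rate of 0.0025 and 720000 iterations, as in the command. It also suggests that SOLVER.STEPS, which the command does not set, may need the same scaling.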

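Writing results

Because of my dataset, I need to save my test results and evaluate them by the dataset's own standard, hence this section. (Feel free to skip it.)

In maskrcnn_benchmark/data/datasets/voc.py, add a function that returns the image name (the 006457 in 006457.png):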

    # to get the file_name of image. add by GX.
    def get_image_id(self, index):
        return self.ids[index]

In maskrcnn_benchmark/data/datasets/evaluation/voc/voc_eval.py, add an image_names parameter to each function:

    def do_voc_evaluation(dataset, predictions, output_folder, logger):
        # TODO need to make the use_07_metric format available
        # for the user to choose
        pred_boxlists = []
        gt_boxlists = []
        image_names = []  # add image_names. by GX
        for image_id, prediction in enumerate(predictions):
            img_info = dataset.get_img_info(image_id)
            if len(prediction) == 0:
                continue
            image_name = dataset.get_image_id(image_id)  # by GX
            image_names.append(image_name)  # by GX
            image_width = img_info["width"]
            image_height = img_info["height"]
            prediction = prediction.resize((image_width, image_height))
            pred_boxlists.append(prediction)

            gt_boxlist = dataset.get_groundtruth(image_id)
            gt_boxlists.append(gt_boxlist)
        result = eval_detection_voc(
            image_names=image_names,  # by GX
            pred_boxlists=pred_boxlists,
            gt_boxlists=gt_boxlists,
            iou_thresh=0.5,
            use_07_metric=True,
        )

    def calc_detection_voc_prec_rec(image_names, gt_boxlists, pred_boxlists, iou_thresh=0.5):
        """Calculate precision and recall based on evaluation code of PASCAL VOC.
        This function calculates precision and recall of
        predicted bounding boxes obtained from a dataset which has :math:`N`
        images.
        The code is based on the evaluation code used in PASCAL VOC Challenge.
        """
        n_pos = defaultdict(int)
        score = defaultdict(list)
        match = defaultdict(list)
        for gt_boxlist, pred_boxlist, image_id in zip(gt_boxlists, pred_boxlists, image_names):  # by GX
            pred_bbox = pred_boxlist.bbox.numpy()
            pred_label = pred_boxlist.get_field("labels").numpy()
            pred_score = pred_boxlist.get_field("scores").numpy()
            gt_bbox = gt_boxlist.bbox.numpy()
            gt_label = gt_boxlist.get_field("labels").numpy()
            gt_difficult = gt_boxlist.get_field("difficult").numpy()

            # write result. by GX
            write_result(pred_bbox, pred_label, pred_score, image_id)

The result-writing function is as follows:

    # write result in txt file for kitti evaluation. by GX
    CLASSES = ('__background__', 'pedestrian', 'car', 'cyclist')

    def write_result(pred_bbox, pred_label, pred_score, file_name):
        device = torch.device("cpu")
        result_file_path = os.path.join(cfg.OUTPUT_DIR, 'Ori_70anchors_detections', str(file_name) + '.txt')
        result_file = open(result_file_path, 'w')
        for l in np.unique(pred_label).astype(int):
            if l == 0:  # skip background
                continue
            pred_mask_l = pred_label == l
            pred_bbox_l = pred_bbox[pred_mask_l]
            pred_score_l = pred_score[pred_mask_l]
            # sort by score
            order = pred_score_l.argsort()[::-1]
            pred_bbox_l = pred_bbox_l[order]
            pred_score_l = pred_score_l[order]

            # add nms. by GX
            bbox = torch.as_tensor(pred_bbox_l, dtype=torch.float32, device=device)
            scores = torch.as_tensor(pred_score_l, dtype=torch.float32, device=device)
            keep = _box_nms(bbox, scores, 0.5).numpy()
            if len(keep) > 100:
                keep = keep[:100]
            # print(type(keep))
            pred_bbox_l = pred_bbox_l[keep]
            pred_score_l = pred_score_l[keep]
            #################

            cls = CLASSES[l]
            inds = np.where(pred_score_l >= 0.8)[0]
            index = 0
            if len(inds) == 0 and index == len(CLASSES[1:]):
                result_file.close()
                return
            elif len(inds) == 0 and index