图像语义分割模型——SegNet(四)

简介

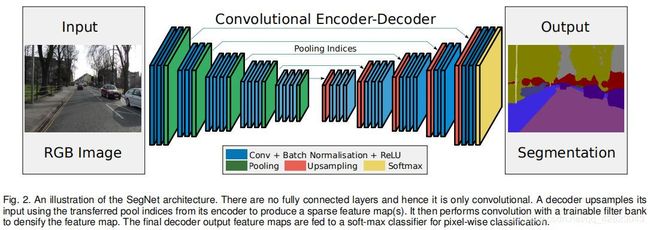

补充一下2015年发表的SegNet模型,它是由剑桥大学团队开发的图像分割的开源项目,该项目可以对图像中的物体所在区域进行分割。SegNet是在FCN的语义分割任务基础上,搭建encoder-decoder对称结构,实现端到端的像素级别图像分割。其新颖之处在于解码器对其较低分辨率的输入特征图进行上采样的方式。

SegNet论文地址:https://arxiv.org/abs/1511.00561

SegNet原理:

可以看出SegNet整体的网络结构与FCN相似,它也是最开始明确定义ecoder端和decoder端,它的ecoder端是使用Vgg16,总计使用了Vgg16的13个卷积层,其主要的特点是在decoder端将pooling indices技术应用在max pooling过程中来连接encoder的输出做一个非线性的上采样。

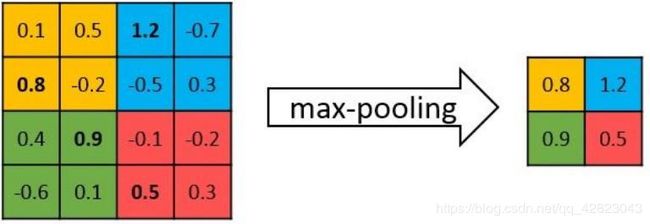

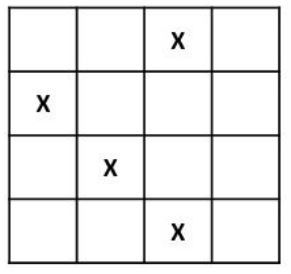

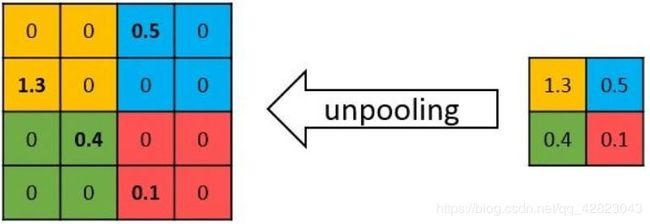

如下图所示,encoder在最大池化操作时记录了最大值所在位置(索引),用于之后在decoder中使用那些存储的索引来对相应特征图进行去池化操作,这样在上采样阶段就无需学习。这有助于保持高频信息的完整性,但当对低分辨率的特征图进行去池化时,它也会忽略邻近的信息。上采样后得到的是一个稀疏特征图,再通过普通的卷积得到稠密特征图,再重复上采样。最后再用激活函数得到onehot 分类结果。

SegNet 主要比较的是 FCN,FCN解码时用反卷积操作来获得特征图,再和对应 encoder 的特征图相加得到输出。SegNet 的优势就在于不用保存整个 encoder 部分的特征图,只需保存池化索引,节省内存空间;第二个是不用反卷积,上采样阶段无需学习。

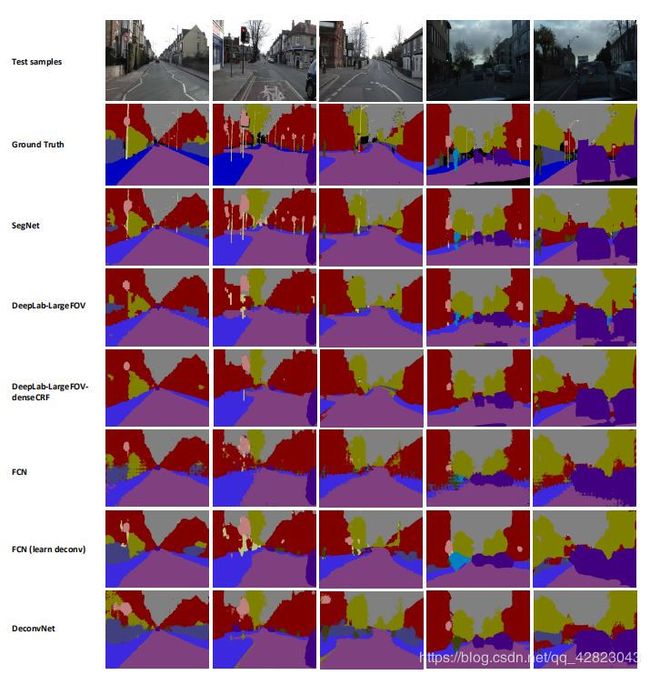

看下几个对比的效果:

Python代码:

from keras import Model,layers

from keras.layers import Input,Conv2D,BatchNormalization,Activation,Reshape

from Models.utils import MaxUnpooling2D,MaxPoolingWithArgmax2D

def SegNet(nClasses, input_height, input_width):

assert input_height % 32 == 0

assert input_width % 32 == 0

img_input = Input(shape=( input_height, input_width,3))

# Block 1

x = layers.Conv2D(64, (3, 3),

activation='relu',

padding='same',

name='block1_conv1')(img_input)

x = layers.Conv2D(64, (3, 3),

activation='relu',

padding='same',

name='block1_conv2')(x)

x, mask_1 = MaxPoolingWithArgmax2D(name='block1_pool')(x)

# Block 2

x = layers.Conv2D(128, (3, 3),

activation='relu',

padding='same',

name='block2_conv1')(x)

x = layers.Conv2D(128, (3, 3),

activation='relu',

padding='same',

name='block2_conv2')(x)

x , mask_2 = MaxPoolingWithArgmax2D(name='block2_pool')(x)

# Block 3

x = layers.Conv2D(256, (3, 3),

activation='relu',

padding='same',

name='block3_conv1')(x)

x = layers.Conv2D(256, (3, 3),

activation='relu',

padding='same',

name='block3_conv2')(x)

x = layers.Conv2D(256, (3, 3),

activation='relu',

padding='same',

name='block3_conv3')(x)

x, mask_3 = MaxPoolingWithArgmax2D(name='block3_pool')(x)

# Block 4

x = layers.Conv2D(512, (3, 3),

activation='relu',

padding='same',

name='block4_conv1')(x)

x = layers.Conv2D(512, (3, 3),

activation='relu',

padding='same',

name='block4_conv2')(x)

x = layers.Conv2D(512, (3, 3),

activation='relu',

padding='same',

name='block4_conv3')(x)

x, mask_4 = MaxPoolingWithArgmax2D(name='block4_pool')(x)

# Block 5

x = layers.Conv2D(512, (3, 3),

activation='relu',

padding='same',

name='block5_conv1')(x)

x = layers.Conv2D(512, (3, 3),

activation='relu',

padding='same',

name='block5_conv2')(x)

x = layers.Conv2D(512, (3, 3),

activation='relu',

padding='same',

name='block5_conv3')(x)

x, mask_5 = MaxPoolingWithArgmax2D(name='block5_pool')(x)

Vgg_streamlined=Model(inputs=img_input,outputs=x)

# 加载vgg16的预训练权重

Vgg_streamlined.load_weights(r"..\vgg16_weights_tf_dim_ordering_tf_kernels_notop.h5")

# 解码层

unpool_1 = MaxUnpooling2D()([x, mask_5])

y = Conv2D(512, (3,3), padding="same")(unpool_1)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Conv2D(512, (3, 3), padding="same")(y)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Conv2D(512, (3, 3), padding="same")(y)

y = BatchNormalization()(y)

y = Activation("relu")(y)

unpool_2 = MaxUnpooling2D()([y, mask_4])

y = Conv2D(512, (3, 3), padding="same")(unpool_2)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Conv2D(512, (3, 3), padding="same")(y)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Conv2D(256, (3, 3), padding="same")(y)

y = BatchNormalization()(y)

y = Activation("relu")(y)

unpool_3 = MaxUnpooling2D()([y, mask_3])

y = Conv2D(256, (3, 3), padding="same")(unpool_3)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Conv2D(256, (3, 3), padding="same")(y)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Conv2D(128, (3, 3), padding="same")(y)

y = BatchNormalization()(y)

y = Activation("relu")(y)

unpool_4 = MaxUnpooling2D()([y, mask_2])

y = Conv2D(128, (3, 3), padding="same")(unpool_4)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Conv2D(64, (3, 3), padding="same")(y)

y = BatchNormalization()(y)

y = Activation("relu")(y)

unpool_5 = MaxUnpooling2D()([y, mask_1])

y = Conv2D(64, (3, 3), padding="same")(unpool_5)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Conv2D(nClasses, (1, 1), padding="same")(y)

y = BatchNormalization()(y)

y = Activation("relu")(y)

y = Reshape((-1, nClasses))(y)

y = Activation("softmax")(y)

model=Model(inputs=img_input,outputs=y)

return model

if __name__ == '__main__':

m = SegNet(15,320, 320)

# print(m.get_weights()[2]) # 看看权重改变没,加载vgg权重测试用

from keras.utils import plot_model

plot_model(m, show_shapes=True, to_file='model_segnet.png')

print(len(m.layers))

m.summary()