Flume Study Notes: Common Configuration Files

Monitoring port data (netcat source --> memory channel --> logger sink)


# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Monitoring a local file (exec source --> memory channel --> hdfs sink)

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = exec

a1.sources.r1.command = tail -F /userspace/text.txt

# Describe the sink

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = hdfs://node01/flume/webdata/%y-%m-%d/%H%M/

a1.sinks.k1.hdfs.filePrefix = log_

a1.sinks.k1.hdfs.fileSuffix = .log

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 1024

a1.sinks.k1.hdfs.rollCount = 10

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 1

a1.sinks.k1.hdfs.roundUnit = hour

a1.sinks.k1.hdfs.useLocalTimeStamp = true

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

Monitoring a directory (spooldir source --> memory channel --> hdfs sink)

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = /userspace/spooltest/

# Describe the sink

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = hdfs://node01/usr/

a1.sinks.k1.hdfs.filePrefix = log_

a1.sinks.k1.hdfs.fileSuffix = .txt

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 1024

a1.sinks.k1.hdfs.rollCount = 10

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 1

a1.sinks.k1.hdfs.roundUnit = hour

a1.sinks.k1.hdfs.useLocalTimeStamp = true

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

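Monitoring multiple files and resuming from the last read position after a restart (supported only since Flume 1.7) (taildir source --> memory channel --> logger sink)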

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.filegroups = f1 f2

a1.sources.r1.filegroups.f1 = /userspace/spooltest/f1.text

a1.sources.r1.filegroups.f2 = /userspace/spooltest/f2.txt

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

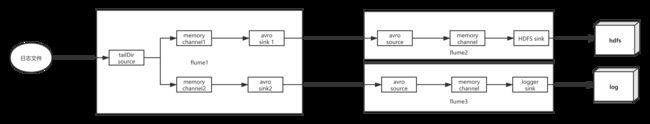
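Resuming from the last read position relies on the position file, a JSON file in which the TAILDIR source records the read offset of every tailed file (default location: ~/.flume/taildir_position.json). A minimal sketch that sets it explicitly; the path /userspace/flume/taildir_position.json and the extra file group f3 with its directory are hypothetical. Only the file-name part of a file group may be a regular expression, which lets one group cover several files:

```properties
# Hypothetical path: TAILDIR stores each file's read offset here and resumes from it after a restart
a1.sources.r1.positionFile = /userspace/flume/taildir_position.json
# Hypothetical third group: the file-name part is a regex matching every file whose name contains "log"
a1.sources.r1.filegroups = f1 f2 f3
a1.sources.r1.filegroups.f3 = /userspace/logs/.*log.*
```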

Single source, multiple destinations (channel selector: replicating)

************flume1.conf (taildir -->memory-->avro)************

# Name the components on this agent

a1.sources = r1

a1.sinks = k1 k2

a1.channels = c1 c2

# Describe/configure the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /userspace/text.txt

a1.sources.r1.selector.type = replicating

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = node01

a1.sinks.k1.port = 4444

a1.sinks.k2.type = avro

a1.sinks.k2.hostname = node01

a1.sinks.k2.port = 5555

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

a1.channels.c2.type = memory

a1.channels.c2.capacity = 1000

a1.channels.c2.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1 c2

a1.sinks.k1.channel = c1

a1.sinks.k2.channel = c2


************flume2.conf (avro --> memory --> hdfs)************

# Name the components on this agent

a2.sources = r1

a2.sinks = k1

a2.channels = c1

# Describe/configure the source

a2.sources.r1.type = avro

a2.sources.r1.bind = node01

a2.sources.r1.port = 4444

# Describe the sink

a2.sinks.k1.type = hdfs

a2.sinks.k1.hdfs.path = hdfs://node01/usr/

a2.sinks.k1.hdfs.filePrefix = log_

a2.sinks.k1.hdfs.fileSuffix = .log

a2.sinks.k1.hdfs.rollInterval = 10

a2.sinks.k1.hdfs.rollSize = 1024

a2.sinks.k1.hdfs.rollCount = 10

a2.sinks.k1.hdfs.round = true

a2.sinks.k1.hdfs.roundValue = 1

a2.sinks.k1.hdfs.roundUnit = hour

a2.sinks.k1.hdfs.useLocalTimeStamp = true

# Use a channel which buffers events in memory

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1


************flume3.conf (avro-->memory-->logger)************

# Name the components on this agent

a3.sources = r1

a3.sinks = k1

a3.channels = c1

# Describe/configure the source

a3.sources.r1.type = avro

a3.sources.r1.bind = node01

a3.sources.r1.port = 5555

# Describe the sink

a3.sinks.k1.type = logger

# Use a channel which buffers events in memory

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

Multi-source aggregation (flume1 and flume2 both forward events over Avro to flume3 on node03)

flume1.conf

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = TAILDIR

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /userspace/text.txt

# Describe the sink

a1.sinks.k1.type = avro

a1.sinks.k1.hostname = node03

a1.sinks.k1.port = 4444

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

flume2.conf

# Name the components on this agent

a2.sources = r1

a2.sinks = k1

a2.channels = c1

# Describe/configure the source

a2.sources.r1.type = netcat

a2.sources.r1.bind = node02

a2.sources.r1.port = 5555

# Describe the sink

a2.sinks.k1.type = avro

a2.sinks.k1.hostname = node03

a2.sinks.k1.port = 4444

# Use a channel which buffers events in memory

a2.channels.c1.type = memory

a2.channels.c1.capacity = 1000

a2.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a2.sources.r1.channels = c1

a2.sinks.k1.channel = c1

flume3.conf

# Name the components on this agent

a3.sources = r1

a3.sinks = k1

a3.channels = c1

# Describe/configure the source

a3.sources.r1.type = avro

a3.sources.r1.bind = node03

a3.sources.r1.port = 4444

# Describe the sink

a3.sinks.k1.type = logger

# Use a channel which buffers events in memory

a3.channels.c1.type = memory

a3.channels.c1.capacity = 1000

a3.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r1.channels = c1

a3.sinks.k1.channel = c1

Starting Flume

$ bin/flume-ng agent --conf conf --conf-file example.conf --name a1 -Dflume.root.logger=INFO,console

Short form:

$ bin/flume-ng agent -c conf -f example.conf -n a1 -Dflume.root.logger=INFO,console

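-Dflume.root.logger=INFO,console is used when the sink is a logger sink, so that events are printed to the console. The value passed to --name (-n) must match the agent name used in the configuration file (a1, a2 or a3 in the examples above).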

HDFS sink configuration parameters

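path: the HDFS directory to write to. It must include the file system scheme and may contain Flume's date escape sequences and %{host}. For example: hdfs://namenode/flume/webdata/

Note: date escape sequences are commonly used here to partition the output, for example: hdfs://hadoop-jy-namenode/data/qytt/flume/ttengine_api/dt=%Y-%m-%d/hour=%H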

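filePrefix: prefix of the file names written to HDFS; it may also contain date escape sequences and %{host}. Default: FlumeData

fileSuffix: suffix of the file names written to HDFS, for example .lzo or .log.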
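%{host} is replaced with the value of the event's host header, so something has to set that header. A sketch assuming the exec-source agent a1 above and Flume's host interceptor, which adds a host header holding the agent's IP address or hostname; the directory layout is illustrative:

```properties
# Host interceptor adds a "host" header to every event (useIP = false stores the hostname instead of the IP)
a1.sources.r1.interceptors = i1
a1.sources.r1.interceptors.i1.type = host
a1.sources.r1.interceptors.i1.useIP = false
# Hive-style date/hour partitions; %{host} is used in the file prefix
a1.sinks.k1.hdfs.path = hdfs://node01/flume/webdata/dt=%Y-%m-%d/hour=%H
a1.sinks.k1.hdfs.filePrefix = %{host}_
a1.sinks.k1.hdfs.fileSuffix = .log
# Date escapes need a timestamp; here the agent's local clock supplies it
a1.sinks.k1.hdfs.useLocalTimeStamp = true
```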

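inUsePrefix: prefix for temporary (in-use) files. The HDFS sink first writes to a temporary file in the target directory, then renames it to the final file name according to the roll rules below.

inUseSuffix: suffix for temporary files. Default: .tmp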
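Because in-progress files sit in the same directory as finished ones, a job that scans the directory can read half-written data. A sketch assuming the downstream jobs use Hadoop's FileInputFormat, which skips files whose names start with "_" or ".":

```properties
# Temporary files get a leading dot, so FileInputFormat-based readers ignore them until they are renamed
a1.sinks.k1.hdfs.inUsePrefix = .
a1.sinks.k1.hdfs.inUseSuffix = .tmp
```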

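rollInterval: how often, in seconds, the HDFS sink rolls the temporary file into a final file. Default: 30; 0 means files are not rolled based on time.

Note: rolling means the HDFS sink renames the current temporary file to its final name and opens a new temporary file for subsequent data.

rollSize: roll the temporary file once it reaches this size, in bytes. Default: 1024; 0 means files are not rolled based on size.

rollCount: roll the temporary file once this many events have been written to it. Default: 10; 0 means files are not rolled based on the number of events.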
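Whichever trigger fires first causes a roll, and the size and count defaults are small (1024 bytes, 10 events), so the example configs above produce many tiny files. A common alternative, sketched here with illustrative values, rolls on size or time only and disables the event-count trigger:

```properties
# Roll when the file reaches 128 MB (134217728 bytes, the default HDFS block size in Hadoop 2 and later) ...
a1.sinks.k1.hdfs.rollSize = 134217728
# ... or after one hour, whichever comes first
a1.sinks.k1.hdfs.rollInterval = 3600
# 0 disables rolling by event count
a1.sinks.k1.hdfs.rollCount = 0
```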

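idleTimeout: if no data is written to an open temporary file for this many seconds, the file is closed and renamed to its final name. Default: 0 (disabled)

batchSize: number of events written to the file before it is flushed to HDFS. Default: 100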
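The sink takes up to batchSize events from its channel in a single transaction, so batchSize should not exceed the channel's transactionCapacity (100 in the examples above), or the take fails. A sketch with illustrative values:

```properties
# Close and rename a file that has received no events for 60 seconds
a1.sinks.k1.hdfs.idleTimeout = 60
# Events per flush, taken from the channel in one transaction
a1.sinks.k1.hdfs.batchSize = 100
# Must be at least the sink's batchSize
a1.channels.c1.transactionCapacity = 100
```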

codeC: compression codec, one of gzip, bzip2, lzo, lzop, snappy

fileType: file format, one of SequenceFile, DataStream, CompressedStream. Default: SequenceFile

With DataStream the output is not compressed, so hdfs.codeC does not need to be set.

With CompressedStream a valid hdfs.codeC value must be set.
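Because the default fileType is SequenceFile, the HDFS examples above actually write SequenceFiles even though their names end in .log or .txt. Two sketches, plain text and gzip-compressed text (pick one; values are illustrative):

```properties
# Option 1: plain text files, no codec needed
a1.sinks.k1.hdfs.fileType = DataStream
# Option 2: compressed text; CompressedStream requires a codec
# a1.sinks.k1.hdfs.fileType = CompressedStream
# a1.sinks.k1.hdfs.codeC = gzip
```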

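maxOpenFiles: maximum number of HDFS files kept open at the same time; when this limit is reached, the oldest open file is closed. Default: 5000

minBlockReplicas: minimum number of replicas per HDFS block. It affects file rolling and is usually set to 1 so that files roll as configured. Default: the HDFS replication factor from the Hadoop configuration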
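The reason, roughly: the sink rolls a file early when the block being written has fewer replicas than minBlockReplicas, which can happen on a cluster with replication greater than 1 while a block is still being replicated, so rollInterval, rollSize and rollCount appear to be ignored. A sketch of the usual workaround:

```properties
# Only treat a block as under-replicated when it has fewer than 1 replica
a1.sinks.k1.hdfs.minBlockReplicas = 1
```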

writeFormat: record format for SequenceFile output, either Text or Writable (default).
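When keeping the SequenceFile type, the Flume user guide recommends Text so that the resulting files can be read by Hive or Impala. A sketch:

```properties
a1.sinks.k1.hdfs.fileType = SequenceFile
# Text records instead of the default Writable
a1.sinks.k1.hdfs.writeFormat = Text
```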

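callTimeout: timeout for HDFS operations, in milliseconds. Default: 10000

threadsPoolSize: number of threads each HDFS sink uses for HDFS I/O operations. Default: 10

rollTimerPoolSize: number of threads each HDFS sink uses to schedule time-based file rolling. Default: 1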

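kerberosPrincipal: Kerberos principal used to access a secured HDFS.

kerberosKeytab: Kerberos keytab used to access a secured HDFS.

proxyUser: user to impersonate (proxy user) when writing to HDFS.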
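A sketch for a Kerberos-secured cluster; the principal, realm, keytab path and proxy user below are all hypothetical placeholders:

```properties
# Hypothetical principal and keytab that the agent logs in with before writing to HDFS
a1.sinks.k1.hdfs.kerberosPrincipal = flume/node01@EXAMPLE.COM
a1.sinks.k1.hdfs.kerberosKeytab = /etc/security/keytabs/flume.keytab
# Optional, hypothetical user: Hadoop must allow the flume principal to impersonate it (proxy-user settings)
a1.sinks.k1.hdfs.proxyUser = etl
```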

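round: whether to round the timestamp down, that is, truncate it to a multiple of roundValue in roundUnit (see the example below). When enabled, it affects every time-based escape sequence except %t. Default: false

roundValue: the timestamp is rounded down to the highest multiple of this value (in roundUnit) that is not later than the current time. Default: 1

roundUnit: unit used for rounding down, one of second, minute, hour. Default: second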

Example:

a1.sinks.k1.hdfs.path = /flume/events/%y-%m-%d/%H%M/%S

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 10

a1.sinks.k1.hdfs.roundUnit = minute

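For an event at 2015-10-16 17:38:59, hdfs.path resolves to /flume/events/15-10-16/1730/00: the timestamp is rounded down to the last 10-minute mark (and %y is the two-digit year), so a new directory is created every 10 minutes.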

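timeZone: name of the time zone used to resolve the directory path, for example America/Los_Angeles. Default: local time

useLocalTimeStamp: whether to use the local time, instead of the timestamp from the event header, when replacing escape sequences. Default: false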
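Time escape sequences in path and filePrefix need a timestamp: unless useLocalTimeStamp is true, each event must carry a timestamp header, otherwise the sink cannot resolve the path. Two sketches (the time zone value is illustrative):

```properties
# Option 1: use the agent's clock, formatted in a fixed time zone
a1.sinks.k1.hdfs.useLocalTimeStamp = true
a1.sinks.k1.hdfs.timeZone = Asia/Shanghai
# Option 2: stamp events at the source with a timestamp interceptor instead
# a1.sources.r1.interceptors = i1
# a1.sources.r1.interceptors.i1.type = timestamp
```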

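closeTries: number of times the HDFS sink tries to rename a file after initiating a close. Default: 0. If set to 1, the sink does not retry after a failed close, and the file may be left open with its temporary suffix. If set to 0, the sink keeps retrying after a failed close until it succeeds, with no limit on the number of attempts.

retryInterval: interval, in seconds, between consecutive attempts to close a file. If set to 0, the sink does not retry after the first failed attempt, which is equivalent to setting hdfs.closeTries to 1. Default: 180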
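A sketch that keeps retrying a failed close until it succeeds while spacing attempts a minute apart; each close costs several RPC round trips to the NameNode, so very short intervals add load (values are illustrative):

```properties
# 0 = keep retrying the rename until it succeeds
a1.sinks.k1.hdfs.closeTries = 0
# Wait 60 seconds between close attempts
a1.sinks.k1.hdfs.retryInterval = 60
```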

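serializer: serializer type. Besides the default TEXT, it can be avro_event or the class name of an implementation of EventSerializer.Builder. Unlike the parameters above, it is set without the hdfs. prefix (a1.sinks.k1.serializer). Default: TEXT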
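A sketch for plain-text output with one event body per line. appendNewline is an option of the TEXT serializer (default true), and serializer options are passed with the serializer. prefix:

```properties
a1.sinks.k1.hdfs.fileType = DataStream
a1.sinks.k1.serializer = TEXT
# Write a newline after each event body (this is also the default)
a1.sinks.k1.serializer.appendNewline = true
```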