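Introduction

Once our Netty server has started, it can serve requests. When a client sends a request, a corresponding thread handles it. The first stage is the accept process, which prepares for the later read and write stages. Below we look at what the listener thread and the worker thread each do during this process.

Listener thread

This is one of the threads in the parent NioEventLoopGroup that our application creates. It usually shows up with a name like "nioEventLoopGroup-2-1".

After the server starts, this thread waits for client connections. When a connection arrives, execution reaches the processSelectedKeys function in the NioEventLoop class: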

private void processSelectedKeys() {
    if (selectedKeys != null) {
        processSelectedKeysOptimized(selectedKeys.flip());
    } else {
        processSelectedKeysPlain(selector.selectedKeys());
    }
}

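This checks whether the optimized selected-key set is in use; how that optimization works is covered later. Let's go straight to processSelectedKeysOptimized: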

private void processSelectedKeysOptimized(SelectionKey[] selectedKeys) {
    for (int i = 0;; i ++) {
        final SelectionKey k = selectedKeys[i];
        if (k == null) {
            break;
        }
        // null out entry in the array to allow to have it GC'ed once the Channel close
        // See https://github.com/netty/netty/issues/2363
        selectedKeys[i] = null;

        final Object a = k.attachment();

        if (a instanceof AbstractNioChannel) {
            processSelectedKey(k, (AbstractNioChannel) a);
        } else {
            @SuppressWarnings("unchecked")
            NioTask task = (NioTask) a;
            processSelectedKey(k, task);
        }

        if (needsToSelectAgain) {
            // null out entries in the array to allow to have it GC'ed once the Channel close
            // See https://github.com/netty/netty/issues/2363
            for (;;) {
                if (selectedKeys[i] == null) {
                    break;
                }
                selectedKeys[i] = null;
                i++;
            }

            selectAgain();
            // Need to flip the optimized selectedKeys to get the right reference to the array
            // and reset the index to -1 which will then set to 0 on the for loop
            // to start over again.
            //
            // See https://github.com/netty/netty/issues/1523
            selectedKeys = this.selectedKeys.flip();
            i = -1;
        }
    }
}

This loops over all ready events: for each one it takes the attachment carried by the key, which is the NIO channel, and calls processSelectedKey:

private static void processSelectedKey(SelectionKey k, AbstractNioChannel ch) {
    final NioUnsafe unsafe = ch.unsafe();
    if (!k.isValid()) {
        // close the channel if the key is not valid anymore
        unsafe.close(unsafe.voidPromise());
        return;
    }

    try {
        int readyOps = k.readyOps();
        // Also check for readOps of 0 to workaround possible JDK bug which may otherwise lead
        // to a spin loop
        if ((readyOps & (SelectionKey.OP_READ | SelectionKey.OP_ACCEPT)) != 0 || readyOps == 0) {
            unsafe.read();
            if (!ch.isOpen()) {
                // Connection already closed - no need to handle write.
                return;
            }
        }
        if ((readyOps & SelectionKey.OP_WRITE) != 0) {
            // Call forceFlush which will also take care of clear the OP_WRITE once there is nothing left to write
            ch.unsafe().forceFlush();
        }
        if ((readyOps & SelectionKey.OP_CONNECT) != 0) {
            // remove OP_CONNECT as otherwise Selector.select(..) will always return without blocking
            // See https://github.com/netty/netty/issues/924
            int ops = k.interestOps();
            ops &= ~SelectionKey.OP_CONNECT;
            k.interestOps(ops);

            unsafe.finishConnect();
        }
    } catch (CancelledKeyException ignored) {
        unsafe.close(unsafe.voidPromise());
    }
}
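To make the bit tests in processSelectedKey concrete, here is a small JDK-only sketch. ReadyOpsSketch and its two methods are names made up for illustration and are not Netty code; only the SelectionKey constants come from the JDK. It decodes a readyOps mask and repeats the first condition of processSelectedKey, so you can check which masks lead to unsafe.read().

```java
import java.nio.channels.SelectionKey;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper, not part of Netty: decodes a readyOps bit mask. */
final class ReadyOpsSketch {

    /** Same test as the first condition in processSelectedKey. */
    static boolean triggersRead(int readyOps) {
        return (readyOps & (SelectionKey.OP_READ | SelectionKey.OP_ACCEPT)) != 0 || readyOps == 0;
    }

    /** Lists the names of the bits that are set in readyOps. */
    static List<String> describe(int readyOps) {
        List<String> names = new ArrayList<>();
        if ((readyOps & SelectionKey.OP_READ) != 0) {
            names.add("OP_READ");
        }
        if ((readyOps & SelectionKey.OP_WRITE) != 0) {
            names.add("OP_WRITE");
        }
        if ((readyOps & SelectionKey.OP_CONNECT) != 0) {
            names.add("OP_CONNECT");
        }
        if ((readyOps & SelectionKey.OP_ACCEPT) != 0) {
            names.add("OP_ACCEPT");
        }
        return names;
    }

    public static void main(String[] args) {
        int accept = SelectionKey.OP_ACCEPT;
        int write = SelectionKey.OP_WRITE;
        System.out.println(describe(accept) + " -> read(): " + triggersRead(accept));
        System.out.println(describe(write) + " -> read(): " + triggersRead(write));
    }
}
```

A new connection on the server channel arrives as OP_ACCEPT, which is why the accept path goes through the same unsafe.read() call as ordinary reads.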

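Here readyOps holds the type of the event that occurred. For a new connection its value is SelectionKey.OP_ACCEPT, so we reach the unsafe.read() call (a method of the NioMessageUnsafe class defined in AbstractNioMessageChannel.java):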

public void read() {
    assert eventLoop().inEventLoop();
    final ChannelConfig config = config();
    if (!config.isAutoRead() && !isReadPending()) {
        // ChannelConfig.setAutoRead(false) was called in the meantime
        removeReadOp();
        return;
    }

    final int maxMessagesPerRead = config.getMaxMessagesPerRead();
    final ChannelPipeline pipeline = pipeline();
    boolean closed = false;
    Throwable exception = null;
    try {
        try {
            for (;;) {
                int localRead = doReadMessages(readBuf);
                if (localRead == 0) {
                    break;
                }
                if (localRead < 0) {
                    closed = true;
                    break;
                }

                // stop reading and remove op
                if (!config.isAutoRead()) {
                    break;
                }

                if (readBuf.size() >= maxMessagesPerRead) {
                    break;
                }
            }
        } catch (Throwable t) {
            exception = t;
        }
        setReadPending(false);
        int size = readBuf.size();
        for (int i = 0; i < size; i ++) {
            pipeline.fireChannelRead(readBuf.get(i));
        }

        readBuf.clear();
        pipeline.fireChannelReadComplete();

        if (exception != null) {
            if (exception instanceof IOException) {
                // ServerChannel should not be closed even on IOException because it can often continue
                // accepting incoming connections. (e.g. too many open files)
                closed = !(AbstractNioMessageChannel.this instanceof ServerChannel);
            }

            pipeline.fireExceptionCaught(exception);
        }

        if (closed) {
            if (isOpen()) {
                close(voidPromise());
            }
        }
    } finally {
        // Check if there is a readPending which was not processed yet.
        // This could be for two reasons:
        // * The user called Channel.read() or ChannelHandlerContext.read() in channelRead(...) method
        // * The user called Channel.read() or ChannelHandlerContext.read() in channelReadComplete(...) method
        //
        // See https://github.com/netty/netty/issues/2254
        if (!config.isAutoRead() && !isReadPending()) {
            removeReadOp();
        }
    }
}

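- doReadMessages reads what has arrived from clients into readBuf; each item it produces here is actually a NioSocketChannel object.

- The loop then passes every item it received down the pipeline with pipeline.fireChannelRead(readBuf.get(i)).

Let's keep going: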

@Override
public ChannelPipeline fireChannelRead(Object msg) {
    head.fireChannelRead(msg);
    return this;
}

This simply delegates to the pipeline's head context:

@Override
public ChannelHandlerContext fireChannelRead(final Object msg) {
    if (msg == null) {
        throw new NullPointerException("msg");
    }

    final AbstractChannelHandlerContext next = findContextInbound();
    EventExecutor executor = next.executor();
    if (executor.inEventLoop()) {
        next.invokeChannelRead(msg);
    } else {
        executor.execute(new OneTimeTask() {
            @Override
            public void run() {
                next.invokeChannelRead(msg);
            }
        });
    }
    return this;
}

- It finds the next inbound context in the pipeline, that is, one of the nodes in the pipeline.

- It then calls that context's invokeChannelRead function.

Let's continue:
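The lookup can be pictured as a walk along a doubly linked list of contexts. The sketch below is a toy model under my own assumptions: PipelineWalkSketch, Ctx and the handler names "encoder" and "echo" are invented, and the real Netty classes carry far more state. It only shows that inbound events search forward from the head, while outbound requests such as read() search backward from the tail.

```java
/** Toy model of a pipeline; every name here is hypothetical, not a Netty class. */
final class PipelineWalkSketch {

    static final class Ctx {
        final String name;
        final boolean inbound;
        final boolean outbound;
        Ctx prev;
        Ctx next;

        Ctx(String name, boolean inbound, boolean outbound) {
            this.name = name;
            this.inbound = inbound;
            this.outbound = outbound;
        }

        /** Walks forward (toward the tail) to the next inbound context. */
        Ctx findContextInbound() {
            Ctx ctx = this;
            do {
                ctx = ctx.next;
            } while (!ctx.inbound);
            return ctx;
        }

        /** Walks backward (toward the head) to the previous outbound context. */
        Ctx findContextOutbound() {
            Ctx ctx = this;
            do {
                ctx = ctx.prev;
            } while (!ctx.outbound);
            return ctx;
        }
    }

    // In this model the head is the outbound end and the tail is the inbound end.
    final Ctx head = new Ctx("head", false, true);
    final Ctx tail = new Ctx("tail", true, false);

    PipelineWalkSketch() {
        head.next = tail;
        tail.prev = head;
    }

    /** Inserts a context just before the tail. */
    void addLast(Ctx ctx) {
        Ctx last = tail.prev;
        ctx.prev = last;
        ctx.next = tail;
        last.next = ctx;
        tail.prev = ctx;
    }

    public static void main(String[] args) {
        PipelineWalkSketch pipeline = new PipelineWalkSketch();
        pipeline.addLast(new Ctx("encoder", false, true));
        pipeline.addLast(new Ctx("echo", true, false));
        System.out.println("inbound event from head reaches: " + pipeline.head.findContextInbound().name);
        System.out.println("read() from tail reaches: " + pipeline.tail.findContextOutbound().name);
    }
}
```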

private void invokeChannelRead(Object msg) {
    try {
        ((ChannelInboundHandler) handler()).channelRead(this, msg);
    } catch (Throwable t) {
        notifyHandlerException(t);
    }
}

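Next is the handler's own channelRead. For the server channel this handler is ServerBootstrapAcceptor, an inner class of ServerBootstrap: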

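public void channelRead(ChannelHandlerContext ctx, Object msg) {
    final Channel child = (Channel) msg;

    child.pipeline().addLast(childHandler);

    for (Entry<ChannelOption<?>, Object> e: childOptions) {
        try {
            if (!child.config().setOption((ChannelOption
            // (the rest of this method is cut off in the source)

This method does three things:

1 It adds a node to the child's pipeline: the childHandler we defined in our application, which for our server is NettyEchoServer.

2 It sets the child's options.

3 Through register, it hands the Channel over to childGroup, which is where the threads that do the actual processing live. A glimmer of light at last.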

@Override
public ChannelFuture register(Channel channel) {
    return next().register(channel);
}

Here next() picks a NioEventLoop from the NioEventLoopGroup, much like choosing a thread from a thread pool, and the channel is then registered with that NioEventLoop.
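As far as I can tell, the group hands out its event loops in round-robin order. The sketch below shows that idea in isolation; RoundRobinSketch is a made-up class, not Netty's chooser, and the loop names are placeholders.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical round-robin chooser; a sketch of the idea behind next(), not Netty's code. */
final class RoundRobinSketch<T> {
    private final T[] children;
    private final AtomicInteger index = new AtomicInteger();

    @SafeVarargs
    RoundRobinSketch(T... children) {
        if (children.length == 0) {
            throw new IllegalArgumentException("no children");
        }
        this.children = children.clone();
    }

    /** Returns the children one after another, wrapping around at the end. */
    T next() {
        // floorMod keeps the index non-negative even after the counter overflows.
        return children[Math.floorMod(index.getAndIncrement(), children.length)];
    }

    public static void main(String[] args) {
        RoundRobinSketch<String> group = new RoundRobinSketch<>("loop-1", "loop-2", "loop-3");
        for (int i = 0; i < 4; i++) {
            System.out.println("channel " + i + " -> " + group.next());
        }
    }
}
```

Because each accepted channel is bound to exactly one loop, spreading channels evenly like this is what balances the load across the worker threads.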

public ChannelFuture register(Channel channel) {
    return register(channel, new DefaultChannelPromise(channel, this));
}

This wraps the channel in a DefaultChannelPromise and then calls:

@Override
public ChannelFuture register(final Channel channel, final ChannelPromise promise) {
    if (channel == null) {
        throw new NullPointerException("channel");
    }
    if (promise == null) {
        throw new NullPointerException("promise");
    }

    channel.unsafe().register(this, promise);
    return promise;
}

The object that does the real work is unsafe (in the AbstractChannel class):

@Override
public final void register(EventLoop eventLoop, final ChannelPromise promise) {
    if (eventLoop == null) {
        throw new NullPointerException("eventLoop");
    }
    if (isRegistered()) {
        promise.setFailure(new IllegalStateException("registered to an event loop already"));
        return;
    }
    if (!isCompatible(eventLoop)) {
        promise.setFailure(
                new IllegalStateException("incompatible event loop type: " + eventLoop.getClass().getName()));
        return;
    }

    AbstractChannel.this.eventLoop = eventLoop;

    if (eventLoop.inEventLoop()) {
        register0(promise);
    } else {
        try {
            eventLoop.execute(new OneTimeTask() {
                @Override
                public void run() {
                    register0(promise);
                }
            });
        } catch (Throwable t) {
            logger.warn(
                    "Force-closing a channel whose registration task was not accepted by an event loop: {}",
                    AbstractChannel.this, t);
            closeForcibly();
            closeFuture.setClosed();
            safeSetFailure(promise, t);
        }
    }
}

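1 Check whether this channel is already registered; if it is, the promise is failed and the method returns.

2 Check compatibility, that is, whether this eventLoop is a NioEventLoop object.

3 The eventLoop here already belongs to the worker thread, while the current thread is the listener thread, so eventLoop.inEventLoop() returns false and we go to eventLoop.execute. A task is also created here whose run method is overridden; it will be used again later.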

@Override
public void execute(Runnable task) {
    if (task == null) {
        throw new NullPointerException("task");
    }

    boolean inEventLoop = inEventLoop();
    if (inEventLoop) {
        addTask(task);
    } else {
        startThread();
        addTask(task);
        if (isShutdown() && removeTask(task)) {
            reject();
        }
    }

    if (!addTaskWakesUp && wakesUpForTask(task)) {
        wakeup(inEventLoop);
    }
}

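Again, the current thread is not the loop's own thread, so the else branch runs and calls:

1 startThread() to start the thread

2 addTask

Let's look at these two functions in detail: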

private void startThread() {
    if (STATE_UPDATER.get(this) == ST_NOT_STARTED) {
        if (STATE_UPDATER.compareAndSet(this, ST_NOT_STARTED, ST_STARTED)) {
            delayedTaskQueue.add(new ScheduledFutureTask(
                    this, delayedTaskQueue, Executors.callable(new PurgeTask(), null),
                    ScheduledFutureTask.deadlineNanos(SCHEDULE_PURGE_INTERVAL), -SCHEDULE_PURGE_INTERVAL));
            thread.start();
        }
    }
}

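This first checks whether the thread has not been started yet; if so, it updates the state and launches the thread with start().

Now the other function: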

protected void addTask(Runnable task) {
    if (task == null) {
        throw new NullPointerException("task");
    }
    if (isShutdown()) {
        reject();
    }
    taskQueue.add(task);
}

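This one is also simple: it puts the task into the worker thread's task queue. At this point the listener thread's job is done: it has handed the task over to the worker thread.

Worker thread

The worker thread runs the same code as the listener thread: the run function of NioEventLoop, which in turn calls runAllTasks: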
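The handoff described in this section can be modelled with nothing but the JDK. The sketch below is a simplified model under my own assumptions, not Netty's executor: MiniEventLoopSketch, runOnLoop and the thread name "worker-1" are invented. It keeps the three ideas from the code above: an inEventLoop() check, a lazy thread start on the first foreign call, and a task queue that the loop thread drains.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical single-threaded loop; a simplified model, not Netty's executor. */
final class MiniEventLoopSketch {
    private final BlockingQueue<Runnable> taskQueue = new LinkedBlockingQueue<>();
    private final AtomicBoolean started = new AtomicBoolean();
    private final Thread thread;

    MiniEventLoopSketch(String name) {
        thread = new Thread(this::runLoop, name);
        thread.setDaemon(true); // lets the JVM exit even though the loop never ends
    }

    boolean inEventLoop() {
        return Thread.currentThread() == thread;
    }

    /** Queues the task; the first call from a foreign thread also starts the loop thread. */
    void execute(Runnable task) {
        if (!inEventLoop() && started.compareAndSet(false, true)) {
            thread.start();
        }
        taskQueue.add(task);
    }

    /** Runs the action directly when already on the loop thread, otherwise hands it over. */
    void runOnLoop(Runnable action) {
        if (inEventLoop()) {
            action.run();
        } else {
            execute(action);
        }
    }

    private void runLoop() {
        try {
            for (;;) {
                taskQueue.take().run();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Submits an action from the calling thread and returns the name of the thread that ran it. */
    static String demo() {
        MiniEventLoopSketch worker = new MiniEventLoopSketch("worker-1");
        CompletableFuture<String> ranOn = new CompletableFuture<>();
        worker.runOnLoop(() -> ranOn.complete(Thread.currentThread().getName()));
        return ranOn.join();
    }

    public static void main(String[] args) {
        System.out.println("submitted from " + Thread.currentThread().getName() + ", ran on " + demo());
    }
}
```

The point of the pattern is that everything touching a channel runs on one thread, so the channel's state needs no locking.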

protected boolean runAllTasks(long timeoutNanos) {
    fetchFromDelayedQueue();
    Runnable task = pollTask();
    if (task == null) {
        return false;
    }

    final long deadline = ScheduledFutureTask.nanoTime() + timeoutNanos;
    long runTasks = 0;
    long lastExecutionTime;
    for (;;) {
        try {
            task.run();
        } catch (Throwable t) {
            logger.warn("A task raised an exception.", t);
        }

        runTasks ++;

        // Check timeout every 64 tasks because nanoTime() is relatively expensive.
        // XXX: Hard-coded value - will make it configurable if it is really a problem.
        if ((runTasks & 0x3F) == 0) {
            lastExecutionTime = ScheduledFutureTask.nanoTime();
            if (lastExecutionTime >= deadline) {
                break;
            }
        }

        task = pollTask();
        if (task == null) {
            lastExecutionTime = ScheduledFutureTask.nanoTime();
            break;
        }
    }

    this.lastExecutionTime = lastExecutionTime;
    return true;
}

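This function executes tasks; its timeoutNanos parameter is the maximum time it may spend doing so:

1 It moves every task whose time has come from the delayed queue into taskQueue: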

private void fetchFromDelayedQueue() {
    long nanoTime = 0L;
    for (;;) {
        ScheduledFutureTask delayedTask = delayedTaskQueue.peek();
        if (delayedTask == null) {
            break;
        }

        if (nanoTime == 0L) {
            nanoTime = ScheduledFutureTask.nanoTime();
        }

        if (delayedTask.deadlineNanos() <= nanoTime) {
            delayedTaskQueue.remove();
            taskQueue.add(delayedTask);
        } else {
            break;
        }
    }
}

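2 It keeps taking tasks from the task queue and calling each task's run(). As mentioned, there is a time limit, and in principle it should be checked after every task. Reading the clock is relatively expensive, though, so the check happens only once every 64 tasks.

Now let's see what this run actually executes. In the listener-thread code we saw that the task's run method was overridden to call register0 (in the AbstractChannel class):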
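A consequence of checking only every 64th task is that the time limit is approximate: even with a budget of zero, up to 64 tasks run before the first check. The sketch below reproduces just that loop with JDK classes. TimeBoxedDrainSketch and queueOf are hypothetical names, and System.nanoTime() stands in for Netty's own clock helper.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.TimeUnit;

/** Hypothetical sketch of a time-boxed task drain; not Netty's implementation. */
final class TimeBoxedDrainSketch {

    /** Runs queued tasks until the queue is empty or a deadline check trips; returns the number run. */
    static int runAllTasks(Queue<Runnable> taskQueue, long timeoutNanos) {
        final long deadline = System.nanoTime() + timeoutNanos;
        int ran = 0;
        Runnable task;
        while ((task = taskQueue.poll()) != null) {
            task.run();
            ran++;
            // Read the clock only on every 64th task (0x3F == 63).
            if ((ran & 0x3F) == 0 && System.nanoTime() - deadline >= 0) {
                break;
            }
        }
        return ran;
    }

    /** Builds a queue holding n no-op tasks. */
    static Queue<Runnable> queueOf(int n) {
        Queue<Runnable> queue = new ArrayDeque<>();
        for (int i = 0; i < n; i++) {
            queue.add(() -> { });
        }
        return queue;
    }

    public static void main(String[] args) {
        Queue<Runnable> queue = queueOf(200);
        System.out.println("zero budget ran " + runAllTasks(queue, 0) + ", left " + queue.size());
        System.out.println("large budget ran " + runAllTasks(queueOf(200), TimeUnit.SECONDS.toNanos(60)));
    }
}
```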

private void register0(ChannelPromise promise) {
    try {
        // check if the channel is still open as it could be closed in the mean time when the register
        // call was outside of the eventLoop
        if (!promise.setUncancellable() || !ensureOpen(promise)) {
            return;
        }
        doRegister();
        registered = true;
        safeSetSuccess(promise);
        pipeline.fireChannelRegistered();
        if (isActive()) {
            pipeline.fireChannelActive();
        }
    } catch (Throwable t) {
        // Close the channel directly to avoid FD leak.
        closeForcibly();
        closeFuture.setClosed();
        safeSetFailure(promise, t);
    }
}

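- doRegister() registers the channel with the selector so that events can be monitored. Note that it passes 0 as the interest set, meaning no events are being watched yet.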

@Override
protected void doRegister() throws Exception {
    boolean selected = false;
    for (;;) {
        try {
            selectionKey = javaChannel().register(eventLoop().selector, 0, this);
            return;
        } catch (CancelledKeyException e) {
            if (!selected) {
                // Force the Selector to select now as the "canceled" SelectionKey may still be
                // cached and not removed because no Select.select(..) operation was called yet.
                eventLoop().selectNow();
                selected = true;
            } else {
                // We forced a select operation on the selector before but the SelectionKey is still cached
                // for whatever reason. JDK bug ?
                throw e;
            }
        }
    }
}

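- pipeline.fireChannelRegistered adds the EchoServerHandler defined in our application to the pipeline, typically through the ChannelInitializer that was installed as childHandler, so everything is ready for the processing that follows.

- fireChannelActive makes the channel listen for read events. Let's look at this in detail.

This happens in the DefaultChannelPipeline class: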

public ChannelPipeline fireChannelActive() {
    head.fireChannelActive();

    if (channel.config().isAutoRead()) {
        channel.read();
    }

    return this;
}

The most important part is channel.read():

public Channel read() {
    pipeline.read();
    return this;
}

which goes back to the pipeline:

public ChannelPipeline read() {
    tail.read();
    return this;
}

and arrives at:

public ChannelHandlerContext read() {
    final AbstractChannelHandlerContext next = findContextOutbound();
    EventExecutor executor = next.executor();
    if (executor.inEventLoop()) {
        next.invokeRead();
    } else {
        Runnable task = next.invokeReadTask;
        if (task == null) {
            next.invokeReadTask = task = new Runnable() {
                @Override
                public void run() {
                    next.invokeRead();
                }
            };
        }
        executor.execute(task);
    }

    return this;
}

Walking the pipeline backward from the tail, it finds an outbound handler and calls the corresponding invokeRead:

private void invokeRead() {
    try {
        ((ChannelOutboundHandler) handler()).read(this);
    } catch (Throwable t) {
        notifyHandlerException(t);
    }
}

This gets the handler and calls its read function:

public void read(ChannelHandlerContext ctx) {
    unsafe.beginRead();
}

which again delegates to unsafe:

public final void beginRead() {
    if (!isActive()) {
        return;
    }

    try {
        doBeginRead();
    } catch (final Exception e) {
        invokeLater(new OneTimeTask() {
            @Override
            public void run() {
                pipeline.fireExceptionCaught(e);
            }
        });
        close(voidPromise());
    }
}

which directly calls doBeginRead():

protected void doBeginRead() throws Exception {
    // Channel.read() or ChannelHandlerContext.read() was called
    if (inputShutdown) {
        return;
    }

    final SelectionKey selectionKey = this.selectionKey;
    if (!selectionKey.isValid()) {
        return;
    }

    readPending = true;

    final int interestOps = selectionKey.interestOps();
    if ((interestOps & readInterestOp) == 0) {
        selectionKey.interestOps(interestOps | readInterestOp);
    }
}

This sets selectionKey.interestOps using the channel's member variable readInterestOp. So what is that value? It is set in the AbstractNioByteChannel class:

protected AbstractNioByteChannel(Channel parent, SelectableChannel ch) {
    super(parent, ch, SelectionKey.OP_READ);
}

So what this channel is interested in is the "readable" event.
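The two-step registration (register with an interest set of 0, then OR in the read interest later) can be reproduced with plain JDK NIO. In the sketch below a java.nio.channels.Pipe stands in for the accepted socket so that no network is needed; InterestOpsSketch, demo and the attachment string are my own placeholders, not Netty code.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.Arrays;

/** JDK-only sketch; a Pipe stands in for the accepted socket. */
final class InterestOpsSketch {

    /** Returns {keys ready while interestOps == 0, keys ready after adding OP_READ}. */
    static int[] demo() {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try {
                pipe.source().configureBlocking(false);
                Object attachment = "my-channel"; // Netty attaches the channel object itself

                // Step 1 (like doRegister): register with no interest, keep an attachment.
                SelectionKey key = pipe.source().register(selector, 0, attachment);

                // Data is already waiting, but the selector does not report it yet.
                pipe.sink().write(ByteBuffer.wrap(new byte[] {42}));
                int before = selector.selectNow();

                // Step 2 (like doBeginRead): OR the read interest into the key.
                key.interestOps(key.interestOps() | SelectionKey.OP_READ);
                int after = selector.select(1000);

                return new int[] {before, after};
            } finally {
                pipe.sink().close();
                pipe.source().close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(demo()));
    }
}
```

Splitting the registration this way means the channel can be attached to the selector first and start receiving events only once its pipeline is ready.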

Summary

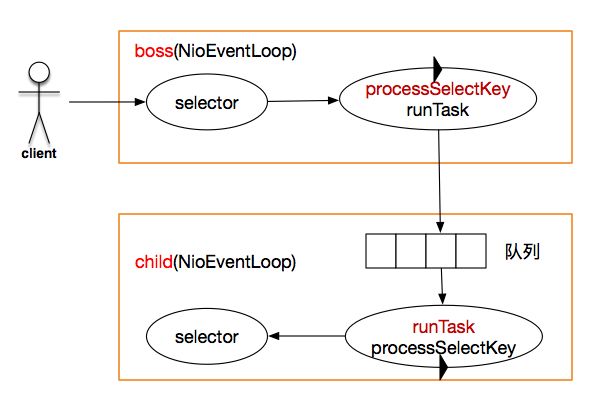

写到这里,都是文字,直接上图

我们看图在来总结下:

1 客户端发现连接

2 监听线程在循环处理processSelectKey中,对这个连接进行处理: 新建一个channel表示这个连接,里面包含socket、关注的事件(op_read),然后封装成一个task,选择一个工作线程,然后把任务放到线程的任务队列中

3 工作线程不断的循环,看到任务队列中有任务,执行任务:把处理任务的pipeline组装好,把channel放到select中监听(op_read),等待事件发生就好了。

个人看着代码和调试着来理解,中间有不对或偏差的,欢迎指正,谢谢