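18.1: TensorFlow classification model MobileNetV2: training (data augmentation, saving the model, learning-rate decay, TensorBoard), predicting images (single image and batch prediction), and exporting to .pb, a complete example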

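Once upon a time, a kid was learning TensorFlow. He bought several TensorFlow books and found that the examples in all of them use the MNIST dataset. Because the framework has already wrapped everything up, a single command loads the data, and he had no idea how the data was actually being read. So when he trained his own model, where the data was just a pile of individual images, he still didn't know where to start (completely lost). Beyond that, he wanted to add other features: how to adjust the learning rate dynamically, how to use TensorBoard to watch loss, accuracy and learning rate change, how to display images during training, how to visualize the model structure, and how to save the model. He eventually solved these problems, but he wasn't satisfied. He also wanted to use the saved model (model.ckpt) to predict each new image; then he found he had so many images to predict that it took too long, and wondered whether he could feed in a batch of images and predict them all at once. He also found that predicting with the trained model required redefining the network structure (the .meta file avoids redefining it, but then every run must take a full batch of input). He then discovered that the saved model can be turned into a .pb file: to use the .pb file he only needs to specify the input and output nodes, and can predict images without redefining the network. Later still, when he used the model on new images, every image was predicted as the same class. After some analysis he found that batch normalization was the culprit, because a BN layer behaves differently during training and testing...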

I. Training

1. Dataset, reading batches, data augmentation

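He used his own .jpg data, organized the same way as the flower data (http://download.tensorflow.org/example_images/flower_photos.tgz): one top-level folder containing one subfolder per class (the flower_photos folder also contains a readme file, which has to be deleted). Each subfolder's name is the label of the images inside it. The first thing he needed for training was the path of every image and its label, so he wrote a function that returns all image paths and labels (each label comes from the name of the image's subfolder):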

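```python
import glob
import os

import numpy as np


def get_files(file_dir):
    # label_dict (class folder name -> label) is built from label.txt, see below
    image_list, label_list = [], []
    for label in os.listdir(file_dir):
        # one subfolder per class; the folder name is the class name
        for img in glob.glob(os.path.join(file_dir, label, "*.jpg")):
            image_list.append(img)
            label_list.append(int(label_dict[label]))
    print('There are %d images' % (len(image_list)))
    # Shuffle paths and labels together. np.array turns the mixed list into a
    # string array, which is why the labels are converted back to int below.
    temp = np.array([image_list, label_list])
    temp = temp.transpose()
    np.random.shuffle(temp)
    image_list = list(temp[:, 0])
    label_list = list(temp[:, 1])
    label_list = [int(i) for i in label_list]
    return image_list, label_list
```

He noticed that the class names in the dataset are strings, whereas training needs integer labels 0, 1, 2, 3, 4, ..., so he defined his own mapping from class names to labels.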
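The numpy trick above shuffles paths and labels together by packing them into one array, at the cost of converting the labels to strings and back. A minimal pure-Python sketch of the same idea, assuming the folder layout described above, shuffles (path, label) tuples instead, so each label stays an int and stays attached to its path. It also skips non-directory entries such as the readme file and accepts upper-case ".JPG"; `get_files_pairs` and its parameters are hypothetical names, not part of the original code.

```python
import os
import random


def get_files_pairs(file_dir, label_dict, exts=(".jpg",), seed=None):
    """Hypothetical alternative to get_files: collect and shuffle (path, label) pairs.

    label_dict maps a class folder name to its label (str or int)."""
    pairs = []
    for class_name in sorted(os.listdir(file_dir)):
        class_dir = os.path.join(file_dir, class_name)
        if not os.path.isdir(class_dir):
            continue  # skip stray files such as a readme
        for name in sorted(os.listdir(class_dir)):
            if name.lower().endswith(exts):
                pairs.append((os.path.join(class_dir, name), int(label_dict[class_name])))
    # Shuffling whole tuples keeps every path together with its label
    random.Random(seed).shuffle(pairs)
    image_list = [path for path, _ in pairs]
    label_list = [label for _, label in pairs]
    return image_list, label_list
```

Because the pairs are never split before shuffling, there is no type round-trip and no chance of the two lists getting out of step.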

He created a label.txt file with one class-to-label mapping per line: daisy:0, dandelion:1, roses:2, sunflowers:3, tulips:4. To connect class names and labels he wrote these few lines:

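```python
label_dict, label_dict_res = {}, {}
# Manually specified mapping from class name to label (and the reverse mapping)
with open("label.txt", 'r') as f:
    for line in f.readlines():
        if not line.strip():
            continue  # skip blank lines, e.g. a trailing newline at the end of the file
        folder, label = line.strip().split(':')[0], line.strip().split(':')[1]
        label_dict[folder] = label
        label_dict_res[label] = folder
print(label_dict)
```

He had used Caffe before and knew that Caffe can read images directly or convert them to LMDB first. In TensorFlow, he found, the usual approach is to convert the data to TFRecord files before reading them. He found this extra conversion step cumbersome (even though it is faster and uses fewer resources during training) and wanted to read the images directly, and eventually found that an input queue built with tf.train.slice_input_producer and tf.train.batch does the job. In addition, since he did not have much data, he wanted to apply random transformations for data augmentation, and found tf.image.random_flip_left_right, tf.image.random_flip_up_down, tf.image.random_brightness, tf.image.random_contrast, tf.image.random_hue and tf.image.random_saturation. He also wanted to see in TensorBoard what the augmented images look like during training, which led him to tf.summary.image. Finally he defined the function that produces batches: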

```python
def get_batch(image, label, image_W, image_H, batch_size, capacity):
    image = tf.cast(image, tf.string)
    label = tf.cast(label, tf.int32)
    # make an input queue (the file list was already shuffled once in get_files)
    input_queue = tf.train.slice_input_producer([image, label], shuffle=False)
    label = input_queue[1]
    image_contents = tf.read_file(input_queue[0])
    image = tf.image.decode_jpeg(image_contents, channels=3)

    # Data augmentation
    # tf.image resize functions take (height, width)
    #image = tf.image.resize_image_with_pad(image, target_height=image_H, target_width=image_W)
    image = tf.image.resize_images(image, (image_H, image_W))
    # Random horizontal flip
    image = tf.image.random_flip_left_right(image)
    # Random vertical flip
    image = tf.image.random_flip_up_down(image)
    # Random brightness. Note: per_image_standardization below subtracts the
    # per-image mean, which cancels a uniform brightness shift, so as written
    # this step does not change the final network input.
    image = tf.image.random_brightness(image, max_delta=32/255.0)
    # Random contrast
    #image = tf.image.random_contrast(image, lower=0.5, upper=1.5)
    # Random hue
    image = tf.image.random_hue(image, max_delta=0.05)
    # Random saturation
    #image = tf.image.random_saturation(image, lower=0.5, upper=1.5)
    # Standardize each image to zero mean and unit variance
    image = tf.image.per_image_standardization(image)

    image_batch, label_batch = tf.train.batch([image, label],
                                              batch_size=batch_size,
                                              num_threads=64,
                                              capacity=capacity)
    # Show up to 5 augmented training images in TensorBoard
    tf.summary.image("input_img", image_batch, max_outputs=5)
    label_batch = tf.reshape(label_batch, [batch_size])
    image_batch = tf.cast(image_batch, tf.float32)
    return image_batch, label_batch
```

2. MobileNetV2 network

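He found a MobileNetV2 implementation online, created a new file model.py and pasted the network into it (the class is named MobileNetV2). He only uses MobileNetV2 here, but he could just as well find the forward-pass code of another network (ResNet, VGG, Inception, ShuffleNet, ...) online, paste it into this file, and train that network instead.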

```python
#coding:utf-8
import time

import numpy as np
import tensorflow as tf
import tensorflow.contrib as tc
import tensorflow.contrib.slim as slim
from tensorflow.contrib.layers.python.layers import batch_norm


class MobileNetV1(object):
    def __init__(self, is_training=True, input_size=224):
        self.input_size = input_size
        self.is_training = is_training
        self.normalizer = tc.layers.batch_norm
        self.bn_params = {'is_training': self.is_training}

        with tf.variable_scope('MobileNetV1'):
            self._create_placeholders()
            self._build_model()

    def _create_placeholders(self):
        self.input = tf.placeholder(dtype=tf.float32, shape=[None, self.input_size, self.input_size, 3])

    def _build_model(self):
        i = 0
        with tf.variable_scope('init_conv'):
            self.conv1 = tc.layers.conv2d(self.input, num_outputs=32, kernel_size=3, stride=2,
                                          normalizer_fn=self.normalizer, normalizer_params=self.bn_params)

        # 1
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv1 = tc.layers.separable_conv2d(self.conv1, num_outputs=None, kernel_size=3, depth_multiplier=1,
                                                     normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv1 = tc.layers.conv2d(self.dconv1, 64, 1, normalizer_fn=self.normalizer, normalizer_params=self.bn_params)

        # 2
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv2 = tc.layers.separable_conv2d(self.pconv1, None, 3, 1, 2,
                                                     normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv2 = tc.layers.conv2d(self.dconv2, 128, 1, normalizer_fn=self.normalizer, normalizer_params=self.bn_params)

        # 3
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv3 = tc.layers.separable_conv2d(self.pconv2, None, 3, 1, 1,
                                                     normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv3 = tc.layers.conv2d(self.dconv3, 128, 1, normalizer_fn=self.normalizer,
                                           normalizer_params=self.bn_params)

        # 4
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv4 = tc.layers.separable_conv2d(self.pconv3, None, 3, 1, 2,
                                                     normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv4 = tc.layers.conv2d(self.dconv4, 256, 1, normalizer_fn=self.normalizer,
                                           normalizer_params=self.bn_params)

        # 5
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv5 = tc.layers.separable_conv2d(self.pconv4, None, 3, 1, 1,
                                                     normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv5 = tc.layers.conv2d(self.dconv5, 256, 1, normalizer_fn=self.normalizer,
                                           normalizer_params=self.bn_params)

        # 6
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv6 = tc.layers.separable_conv2d(self.pconv5, None, 3, 1, 2,
                                                     normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv6 = tc.layers.conv2d(self.dconv6, 512, 1, normalizer_fn=self.normalizer,
                                           normalizer_params=self.bn_params)

        # 7_1
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv71 = tc.layers.separable_conv2d(self.pconv6, None, 3, 1, 1,
                                                      normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv71 = tc.layers.conv2d(self.dconv71, 512, 1, normalizer_fn=self.normalizer,
                                            normalizer_params=self.bn_params)

        # 7_2
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv72 = tc.layers.separable_conv2d(self.pconv71, None, 3, 1, 1,
                                                      normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv72 = tc.layers.conv2d(self.dconv72, 512, 1, normalizer_fn=self.normalizer,
                                            normalizer_params=self.bn_params)

        # 7_3
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv73 = tc.layers.separable_conv2d(self.pconv72, None, 3, 1, 1,
                                                      normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv73 = tc.layers.conv2d(self.dconv73, 512, 1, normalizer_fn=self.normalizer,
                                            normalizer_params=self.bn_params)

        # 7_4
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv74 = tc.layers.separable_conv2d(self.pconv73, None, 3, 1, 1,
                                                      normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv74 = tc.layers.conv2d(self.dconv74, 512, 1, normalizer_fn=self.normalizer,
                                            normalizer_params=self.bn_params)

        # 7_5
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv75 = tc.layers.separable_conv2d(self.pconv74, None, 3, 1, 1,
                                                      normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv75 = tc.layers.conv2d(self.dconv75, 512, 1, normalizer_fn=self.normalizer,
                                            normalizer_params=self.bn_params)

        # 8
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv8 = tc.layers.separable_conv2d(self.pconv75, None, 3, 1, 2,
                                                     normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv8 = tc.layers.conv2d(self.dconv8, 1024, 1, normalizer_fn=self.normalizer,
                                           normalizer_params=self.bn_params)

        # 9
        with tf.variable_scope('dconv_block{}'.format(i)):
            i += 1
            self.dconv9 = tc.layers.separable_conv2d(self.pconv8, None, 3, 1, 1,
                                                     normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            self.pconv9 = tc.layers.conv2d(self.dconv9, 1024, 1, normalizer_fn=self.normalizer,
                                           normalizer_params=self.bn_params)

        with tf.variable_scope('global_max_pooling'):
            self.pool = tc.layers.max_pool2d(self.pconv9, kernel_size=7, stride=1)
        with tf.variable_scope('prediction'):
            self.output = tc.layers.conv2d(self.pool, 1000, 1, activation_fn=None)


class MobileNetV2(object):
    def __init__(self, input, num_classes=1000, is_training=True):
        self.input = input
        self.num_classes = num_classes
        self.is_training = is_training
        self.normalizer = tc.layers.batch_norm
        # is_training=True: normalize with batch statistics and create moving-average
        # update ops; is_training=False: normalize with the stored moving averages
        self.bn_params = {'is_training': self.is_training}

        with tf.variable_scope('MobileNetV2'):
            self._build_model()

    def _build_model(self):
        self.i = 0
        with tf.variable_scope('init_conv'):
            output = tc.layers.conv2d(self.input, 32, 3, 2,
                                      normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
        # print(output.get_shape())
        # _inverted_bottleneck(input, expansion factor, output channels, stride-2 flag)
        self.output = self._inverted_bottleneck(output, 1, 16, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 24, 1)
        self.output = self._inverted_bottleneck(self.output, 6, 24, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 32, 1)
        self.output = self._inverted_bottleneck(self.output, 6, 32, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 32, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 64, 1)
        self.output = self._inverted_bottleneck(self.output, 6, 64, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 64, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 64, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 96, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 96, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 96, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 160, 1)
        self.output = self._inverted_bottleneck(self.output, 6, 160, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 160, 0)
        self.output = self._inverted_bottleneck(self.output, 6, 320, 0)
        self.output = tc.layers.conv2d(self.output, 1280, 1, normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
        # 7x7 average pooling: the feature map is 7x7 for a 224x224 input
        self.output = tc.layers.avg_pool2d(self.output, 7)
        self.output = tc.layers.conv2d(self.output, self.num_classes, 1, activation_fn=None)
        self.output = tf.reshape(self.output, shape=[-1, self.num_classes], name="logit")

    def _inverted_bottleneck(self, input, up_sample_rate, channels, subsample):
        with tf.variable_scope('inverted_bottleneck{}_{}_{}'.format(self.i, up_sample_rate, subsample)):
            self.i += 1
            stride = 2 if subsample else 1
            # 1x1 expansion conv
            output = tc.layers.conv2d(input, up_sample_rate*input.get_shape().as_list()[-1], 1,
                                      activation_fn=tf.nn.relu6,
                                      normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            # 3x3 depthwise conv (num_outputs=None means no pointwise part)
            output = tc.layers.separable_conv2d(output, None, 3, 1, stride=stride,
                                                activation_fn=tf.nn.relu6,
                                                normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            # 1x1 linear projection (no activation)
            output = tc.layers.conv2d(output, channels, 1, activation_fn=None,
                                      normalizer_fn=self.normalizer, normalizer_params=self.bn_params)
            # residual connection when input and output channels match
            if input.get_shape().as_list()[-1] == channels:
                output = tf.add(input, output)
            return output


# small inception
def model4(x, N_CLASSES, is_trian=False):
    x = tf.contrib.layers.conv2d(x, 64, [5, 5], 1, 'SAME', activation_fn=tf.nn.relu)
    # set is_training=True during training and False when using the trained model
    x = batch_norm(x, decay=0.9, updates_collections=None, is_training=is_trian)
    x = tf.contrib.layers.max_pool2d(x, [2, 2], stride=2, padding='SAME')

    x1_1 = tf.contrib.layers.conv2d(x, 64, [1, 1], 1, 'SAME', activation_fn=tf.nn.relu)  # 1x1 kernel
    x1_1 = batch_norm(x1_1, decay=0.9, updates_collections=None, is_training=is_trian)
    x3_3 = tf.contrib.layers.conv2d(x, 64, [3, 3], 1, 'SAME', activation_fn=tf.nn.relu)  # 3x3 kernel
    x3_3 = batch_norm(x3_3, decay=0.9, updates_collections=None, is_training=is_trian)
    x5_5 = tf.contrib.layers.conv2d(x, 64, [5, 5], 1, 'SAME', activation_fn=tf.nn.relu)  # 5x5 kernel
    x5_5 = batch_norm(x5_5, decay=0.9, updates_collections=None, is_training=is_trian)
    x = tf.concat([x1_1, x3_3, x5_5], axis=-1)  # concatenate: 64*3 = 192 channels
    x = tf.contrib.layers.max_pool2d(x, [2, 2], stride=2, padding='SAME')

    x1_1 = tf.contrib.layers.conv2d(x, 128, [1, 1], 1, 'SAME', activation_fn=tf.nn.relu)
    x1_1 = batch_norm(x1_1, decay=0.9, updates_collections=None, is_training=is_trian)
    x3_3 = tf.contrib.layers.conv2d(x, 128, [3, 3], 1, 'SAME', activation_fn=tf.nn.relu)
    x3_3 = batch_norm(x3_3, decay=0.9, updates_collections=None, is_training=is_trian)
    x5_5 = tf.contrib.layers.conv2d(x, 128, [5, 5], 1, 'SAME', activation_fn=tf.nn.relu)
    x5_5 = batch_norm(x5_5, decay=0.9, updates_collections=None, is_training=is_trian)
    x = tf.concat([x1_1, x3_3, x5_5], axis=-1)
    x = tf.contrib.layers.max_pool2d(x, [2, 2], stride=2, padding='SAME')

    shp = x.get_shape()
    x = tf.reshape(x, [-1, shp[1]*shp[2]*shp[3]])  # flatten
    logits = tf.contrib.layers.fully_connected(x, N_CLASSES, activation_fn=None)  # output logits without softmax
    return logits


# 2conv + 3fc
def model2(images, batch_size, n_classes):
    '''Build the model
    Args:
        images: image batch, 4D tensor, tf.float32, [batch_size, height, width, channels]
    Returns:
        output tensor with the computed logits, float, [batch_size, n_classes]
    '''
    #conv1, shape = [kernel size, kernel size, channels, kernel numbers]
    with tf.variable_scope('conv1') as scope:
        weights = tf.get_variable('weights',
                                  shape=[3, 3, 3, 16],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[16],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(images, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(pre_activation, name=scope.name)

    #pool1 and norm1
    with tf.variable_scope('pooling1_lrn') as scope:
        pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding='SAME', name='pooling1')
        norm1 = tf.nn.lrn(pool1, depth_radius=4, bias=1.0, alpha=0.001/9.0,
                          beta=0.75, name='norm1')

    #conv2
    with tf.variable_scope('conv2') as scope:
        weights = tf.get_variable('weights',
                                  shape=[3, 3, 16, 16],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[16],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(norm1, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(pre_activation, name='conv2')

    #pool2 and norm2
    with tf.variable_scope('pooling2_lrn') as scope:
        norm2 = tf.nn.lrn(conv2, depth_radius=4, bias=1.0, alpha=0.001/9.0,
                          beta=0.75, name='norm2')
        pool2 = tf.nn.max_pool(norm2, ksize=[1, 3, 3, 1], strides=[1, 1, 1, 1],
                               padding='SAME', name='pooling2')

    #local3 (the reshape uses batch_size, so this model needs a fixed batch size)
    with tf.variable_scope('local3') as scope:
        reshape = tf.reshape(pool2, shape=[batch_size, -1])
        dim = reshape.get_shape()[1].value
        weights = tf.get_variable('weights',
                                  shape=[dim, 128],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[128],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        local3 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)

    #local4
    with tf.variable_scope('local4') as scope:
        weights = tf.get_variable('weights',
                                  shape=[128, 128],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[128],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        local4 = tf.nn.relu(tf.matmul(local3, weights) + biases, name='local4')

    # full connect
    with tf.variable_scope('softmax_linear') as scope:
        weights = tf.get_variable('softmax_linear',
                                  shape=[128, n_classes],
                                  dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases',
                                 shape=[n_classes],
                                 dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        logits = tf.add(tf.matmul(local4, weights), biases, name='softmax_linear')

    return logits


if __name__ == '__main__':
    x = tf.placeholder(tf.float32, shape=[None, 224, 224, 3])
    model = MobileNetV2(x, num_classes=5, is_training=True)
    print("output size:")
    print(model.output.get_shape())

    # Write the graph so the network structure can be viewed in TensorBoard
    board_writer = tf.summary.FileWriter(logdir='./', graph=tf.get_default_graph())

    fake_data = np.ones(shape=(1, 224, 224, 3))
    sess_config = tf.ConfigProto(device_count={'GPU': 0})  # run on CPU only

    with tf.Session(config=sess_config) as sess:
        sess.run(tf.global_variables_initializer())
        # Average time of 100 forward passes, skipping the first (warm-up) run
        cnt = 0
        for i in range(101):
            t1 = time.time()
            output = sess.run(model.output, feed_dict={x: fake_data})
            if i != 0:
                cnt += time.time() - t1
        print(cnt / 100)
```
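To see why MobileNetV2 ends with a 7x7 avg_pool2d and which bottlenecks get a residual connection, the block list in `_build_model` can be traced by hand. The sketch below is plain Python that copies the (expansion, channels, subsample) arguments from the code above; it assumes SAME padding (the tf.contrib.layers default), under which a stride-2 layer maps size s to ceil(s / 2). `BLOCKS` and `trace_shapes` are illustrative names only.

```python
# (expansion t, output channels c, subsample flag) for each _inverted_bottleneck
# call in MobileNetV2._build_model, in order
BLOCKS = [(1, 16, 0), (6, 24, 1), (6, 24, 0), (6, 32, 1), (6, 32, 0), (6, 32, 0),
          (6, 64, 1), (6, 64, 0), (6, 64, 0), (6, 64, 0),
          (6, 96, 0), (6, 96, 0), (6, 96, 0),
          (6, 160, 1), (6, 160, 0), (6, 160, 0), (6, 320, 0)]


def ceil_half(size):
    # output size of a stride-2 layer with SAME padding
    return -(-size // 2)


def trace_shapes(input_size=224, init_channels=32):
    """Follow spatial size and channel count through the inverted bottlenecks."""
    size = ceil_half(input_size)  # init_conv: 3x3, stride 2, 32 channels
    channels = init_channels
    trace = []
    for t, c, subsample in BLOCKS:
        expanded = t * channels       # width after the 1x1 expansion conv
        if subsample:
            size = ceil_half(size)    # stride-2 depthwise conv
        residual = channels == c      # same test as in _inverted_bottleneck
        trace.append((size, expanded, c, residual))
        channels = c
    return size, channels, trace


size, channels, trace = trace_shapes()
print(size, channels)  # final feature map before the 1280-channel 1x1 conv
```

For a 224x224 input the last bottleneck outputs a 7x7x320 map, which is exactly what the 7x7 average pooling collapses to 1x1 before the final reshape to [-1, num_classes]; 10 of the 17 blocks add a residual connection.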

3. Training

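His training script train.py is shown below. The tf.summary.* calls record how each value evolves so it can be inspected in TensorBoard. lr = tf.train.exponential_decay(learning_rate=init_lr, global_step=global_step, decay_steps=decay_steps, decay_rate=0.1) makes the learning rate decay exponentially with the iteration count: lr = init_lr * 0.1^(global_step / decay_steps), i.e. it shrinks by a factor of 10 every decay_steps steps (20 epochs here).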
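A plain-Python mirror of that formula makes it easy to check the learning rate at a given step without building a graph. It follows the formula documented for tf.train.exponential_decay, including the optional staircase mode (which train.py does not use, so there the decay is smooth); the function name is illustrative, and the step counts in the test are hypothetical.

```python
def exponential_decay(init_lr, step, decay_steps, decay_rate=0.1, staircase=False):
    """lr = init_lr * decay_rate ** (step / decay_steps).

    With staircase=True the exponent is floored, so the rate drops in
    discrete jumps every decay_steps steps instead of continuously."""
    if staircase:
        exponent = step // decay_steps
    else:
        exponent = step / decay_steps
    return init_lr * decay_rate ** exponent


# train.py settings: init_lr = 0.1, decay_steps = 20 epochs' worth of steps
for epoch in (0, 10, 20, 40):
    print(epoch, exponential_decay(0.1, epoch * 50, 20 * 50))  # assuming 50 steps/epoch
```

Halfway through a decay period the rate is already down by a factor of sqrt(10), which is worth knowing when reading the learning_rate curve in TensorBoard.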

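```python
update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
```

and, further on:

```python
var_list = tf.trainable_variables()
g_list = tf.global_variables()
bn_moving_vars = [g for g in g_list if 'moving_mean' in g.name]
bn_moving_vars += [g for g in g_list if 'moving_variance' in g.name]
var_list += bn_moving_vars
saver = tf.train.Saver(var_list=var_list, max_to_keep=10)
```

take care of the moving mean and moving variance of the batch-normalization layers in MobileNetV2. The first collects the ops that update those statistics, and wrapping the optimizer in with tf.control_dependencies(update_ops): makes every training step run them. The second adds the statistics, which are not trainable variables, to the list of variables the Saver writes to the checkpoint. Without them the model looks fine during training but predicts completely wrong at test time!

saver.save(sess, checkpoint_path, global_step=step) saves the model.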
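Why does skipping the update ops break prediction? During training a BN layer normalizes with the current batch's mean and variance; at test time (is_training=False) it uses moving_mean and moving_variance instead, which only change when the update ops run. The toy sketch below, a 1-D batch norm without gamma/beta written in plain Python, assumes the tf.contrib.layers.batch_norm defaults (moving_mean starts at 0, moving_variance at 1, epsilon 0.001); the function names are illustrative, not TensorFlow APIs.

```python
import statistics


def track_moving_stats(batches, decay=0.999, run_update_ops=True):
    """Return (moving_mean, moving_var) after seeing `batches` in training mode."""
    moving_mean, moving_var = 0.0, 1.0  # initial values of the BN moving statistics
    for batch in batches:
        mean = statistics.mean(batch)
        var = statistics.pvariance(batch, mean)
        if run_update_ops:  # what the UPDATE_OPS collection does each step
            moving_mean = decay * moving_mean + (1 - decay) * mean
            moving_var = decay * moving_var + (1 - decay) * var
    return moving_mean, moving_var


def bn_inference(x, moving_mean, moving_var, eps=0.001):
    """Test-time normalization: uses the stored statistics, not the batch."""
    return (x - moving_mean) / (moving_var + eps) ** 0.5


batches = [[90.0, 100.0, 110.0]] * 200
print(bn_inference(110.0, *track_moving_stats(batches, decay=0.9)))
print(bn_inference(110.0, *track_moving_stats(batches, decay=0.9, run_update_ops=False)))
```

With the updates the test-time output is about 1.2, on the same scale as in training; without them the statistics stay at 0 and 1, so the activation passes through almost unnormalized (about 110). Errors like this compound layer after layer, which fits the symptom of every image being predicted as the same class.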

```python
#coding:utf-8
import glob
import os

import numpy as np
import tensorflow as tf

import model


def get_files(file_dir):
    image_list, label_list = [], []
    for label in os.listdir(file_dir):
        for img in glob.glob(os.path.join(file_dir, label, "*.jpg")):
            image_list.append(img)
            label_list.append(int(label_dict[label]))
    print('There are %d images' % (len(image_list)))
    # Shuffle paths and labels together (np.array makes the labels strings)
    temp = np.array([image_list, label_list])
    temp = temp.transpose()
    np.random.shuffle(temp)
    image_list = list(temp[:, 0])
    label_list = list(temp[:, 1])
    label_list = [int(i) for i in label_list]
    return image_list, label_list


label_dict, label_dict_res = {}, {}
# Manually specified mapping from class name to label
with open("label.txt", 'r') as f:
    for line in f.readlines():
        if not line.strip():
            continue  # skip blank lines
        folder, label = line.strip().split(':')[0], line.strip().split(':')[1]
        label_dict[folder] = label
        label_dict_res[label] = folder
print(label_dict)

train_dir = "/media/DATA2/sku_train"
logs_train_dir = './model_save'
init_lr = 0.1
BATCH_SIZE = 64

train, train_label = get_files(train_dir)
one_epoch_step = len(train) // BATCH_SIZE  # integer division so range(MAX_STEP) also works in Python 3
decay_steps = 20*one_epoch_step
MAX_STEP = 100*one_epoch_step
N_CLASSES = len(label_dict)
IMG_W = 224
IMG_H = 224
CAPACITY = 1000
os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # GPU id
config = tf.ConfigProto()
config.gpu_options.allow_growth = True  # allocate GPU memory on demand instead of all at once


def get_batch(image, label, image_W, image_H, batch_size, capacity):
    image = tf.cast(image, tf.string)
    label = tf.cast(label, tf.int32)
    # make an input queue
    input_queue = tf.train.slice_input_producer([image, label], shuffle=False)
    label = input_queue[1]
    image_contents = tf.read_file(input_queue[0])
    image = tf.image.decode_jpeg(image_contents, channels=3)
    # Data augmentation (tf.image resize functions take (height, width))
    #image = tf.image.resize_image_with_pad(image, target_height=image_H, target_width=image_W)
    image = tf.image.resize_images(image, (image_H, image_W))
    image = tf.image.random_flip_left_right(image)  # random horizontal flip
    image = tf.image.random_flip_up_down(image)     # random vertical flip
    # Random brightness (cancelled by per_image_standardization below, see above)
    image = tf.image.random_brightness(image, max_delta=32/255.0)
    #image = tf.image.random_contrast(image, lower=0.5, upper=1.5)
    image = tf.image.random_hue(image, max_delta=0.05)  # random hue
    #image = tf.image.random_saturation(image, lower=0.5, upper=1.5)
    # Standardize each image to zero mean and unit variance
    image = tf.image.per_image_standardization(image)
    image_batch, label_batch = tf.train.batch([image, label],
                                              batch_size=batch_size,
                                              num_threads=64,
                                              capacity=capacity)
    tf.summary.image("input_img", image_batch, max_outputs=5)
    label_batch = tf.reshape(label_batch, [batch_size])
    image_batch = tf.cast(image_batch, tf.float32)
    return image_batch, label_batch


def main():
    global_step = tf.Variable(0, name='global_step', trainable=False)
    # label without one-hot
    batch_train, batch_labels = get_batch(train,
                                          train_label,
                                          IMG_W,
                                          IMG_H,
                                          BATCH_SIZE,
                                          CAPACITY)
    # network
    logits = model.MobileNetV2(batch_train, num_classes=N_CLASSES, is_training=True).output
    #logits = model.model2(batch_train, BATCH_SIZE, N_CLASSES)
    #logits = model.model4(batch_train, N_CLASSES, is_trian=True)
    print(logits.get_shape())
    # loss
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits, labels=batch_labels)
    loss = tf.reduce_mean(cross_entropy, name='loss')
    tf.summary.scalar('train_loss', loss)
    # optimizer
    lr = tf.train.exponential_decay(learning_rate=init_lr, global_step=global_step, decay_steps=decay_steps, decay_rate=0.1)
    tf.summary.scalar('learning_rate', lr)
    # run the BN moving-mean/variance updates together with every training step
    update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
    with tf.control_dependencies(update_ops):
        optimizer = tf.train.AdamOptimizer(learning_rate=lr).minimize(loss, global_step=global_step)
    # accuracy
    correct = tf.nn.in_top_k(logits, batch_labels, 1)
    correct = tf.cast(correct, tf.float32)
    accuracy = tf.reduce_mean(correct)
    tf.summary.scalar('train_acc', accuracy)
    summary_op = tf.summary.merge_all()

    sess = tf.Session(config=config)
    train_writer = tf.summary.FileWriter(logs_train_dir, sess.graph)
    #saver = tf.train.Saver()
    # save the trainable variables plus the BN moving statistics
    var_list = tf.trainable_variables()
    g_list = tf.global_variables()
    bn_moving_vars = [g for g in g_list if 'moving_mean' in g.name]
    bn_moving_vars += [g for g in g_list if 'moving_variance' in g.name]
    var_list += bn_moving_vars
    saver = tf.train.Saver(var_list=var_list, max_to_keep=10)
    sess.run(tf.global_variables_initializer())
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)
    #saver.restore(sess, logs_train_dir+'/model.ckpt-174000')
    try:
        for step in range(MAX_STEP):
            if coord.should_stop():
                break
            _, learning_rate, tra_loss, tra_acc = sess.run([optimizer, lr, loss, accuracy])
            if step % 100 == 0:
                print('Epoch %3d/%d, Step %6d/%d, lr %f, train loss = %.2f, train accuracy = %.2f%%'
                      % (step // one_epoch_step, MAX_STEP // one_epoch_step, step, MAX_STEP,
                         learning_rate, tra_loss, tra_acc*100.0))
                # note: this evaluates the summaries on a fresh batch from the queue
                summary_str = sess.run(summary_op)
                train_writer.add_summary(summary_str, step)
            if step % 2000 == 0 or (step + 1) == MAX_STEP:
                checkpoint_path = os.path.join(logs_train_dir, 'model.ckpt')
                saver.save(sess, checkpoint_path, global_step=step)
    except tf.errors.OutOfRangeError:
        print('Done training -- epoch limit reached')
    finally:
        coord.request_stop()
    coord.join(threads)
    sess.close()


if __name__ == '__main__':
    main()
```
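The step bookkeeping in train.py is easy to get wrong: with Python 3's `/`, len(train) / BATCH_SIZE is a float and range(MAX_STEP) fails, hence the `//`. The plain-Python sketch below reproduces that arithmetic and lists which steps print/log and which write a checkpoint. `training_schedule` is a hypothetical helper, and the image count used in the test is made up.

```python
def training_schedule(num_images, batch_size=64, decay_epochs=20, max_epochs=100,
                      log_every=100, save_every=2000):
    """Reproduce train.py's step arithmetic (integer division throughout)."""
    one_epoch_step = num_images // batch_size
    decay_steps = decay_epochs * one_epoch_step
    max_step = max_epochs * one_epoch_step
    # steps where the loop prints and writes summaries
    log_steps = [s for s in range(max_step) if s % log_every == 0]
    # steps where saver.save writes model.ckpt-<step>
    save_steps = [s for s in range(max_step) if s % save_every == 0 or s + 1 == max_step]
    return one_epoch_step, decay_steps, max_step, log_steps, save_steps


one_epoch_step, decay_steps, max_step, log_steps, save_steps = training_schedule(3200)
print(one_epoch_step, decay_steps, max_step, save_steps)
```

The final checkpoint is written at step MAX_STEP - 1, so the last file is model.ckpt-<MAX_STEP - 1>, and with max_to_keep=10 only the ten most recent checkpoints remain on disk.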

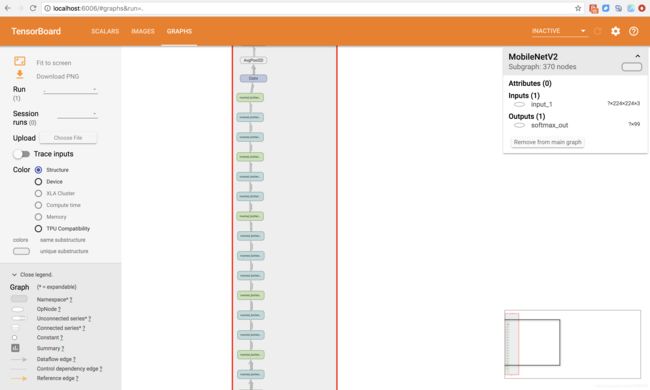

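After training started, he ran tensorboard --logdir=model_save from the directory that contains model_save (the directory train.py runs in, since logs_train_dir is './model_save'), and then opened http://localhost:6006/

There he could see how the learning rate, accuracy and loss change, as well as the images fed in during training and the network structure.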