Spark Core Programming Examples, Part 1

Test data:

192.168.88.1 - - [30/Jul/2017:12:53:43 +0800] "GET /MyDemoWeb/ HTTP/1.1" 200 259

192.168.88.1 - - [30/Jul/2017:12:53:43 +0800] "GET /MyDemoWeb/head.jsp HTTP/1.1" 200 713

192.168.88.1 - - [30/Jul/2017:12:53:43 +0800] "GET /MyDemoWeb/body.jsp HTTP/1.1" 200 240

192.168.88.1 - - [30/Jul/2017:12:54:37 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:38 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:38 +0800] "GET /MyDemoWeb/java.jsp HTTP/1.1" 200 240

192.168.88.1 - - [30/Jul/2017:12:54:40 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:40 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:41 +0800] "GET /MyDemoWeb/mysql.jsp HTTP/1.1" 200 241

192.168.88.1 - - [30/Jul/2017:12:54:41 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:42 +0800] "GET /MyDemoWeb/web.jsp HTTP/1.1" 200 239

192.168.88.1 - - [30/Jul/2017:12:54:42 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:52 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:52 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:53 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:54 +0800] "GET /MyDemoWeb/mysql.jsp HTTP/1.1" 200 241

192.168.88.1 - - [30/Jul/2017:12:54:54 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:54 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:56 +0800] "GET /MyDemoWeb/web.jsp HTTP/1.1" 200 239

192.168.88.1 - - [30/Jul/2017:12:54:56 +0800] "GET /MyDemoWeb/java.jsp HTTP/1.1" 200 240

192.168.88.1 - - [30/Jul/2017:12:54:57 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:57 +0800] "GET /MyDemoWeb/java.jsp HTTP/1.1" 200 240

192.168.88.1 - - [30/Jul/2017:12:54:58 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:58 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:59 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:54:59 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:55:00 +0800] "GET /MyDemoWeb/mysql.jsp HTTP/1.1" 200 241

192.168.88.1 - - [30/Jul/2017:12:55:00 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

192.168.88.1 - - [30/Jul/2017:12:55:02 +0800] "GET /MyDemoWeb/web.jsp HTTP/1.1" 200 239

192.168.88.1 - - [30/Jul/2017:12:55:02 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242

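Example 1: Analyze the Tomcat access log and find the two most visited pages

1. Sum the number of visits to each JSP page

2. Sort by visit count

3. Take the first two records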
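The three steps above can be sketched without Spark on an in-memory collection. This is a minimal sketch, not the tutorial's implementation: `TopPagesSketch`, `extractJspName`, `topPages`, and the six-line sample are names and data assumed here for illustration, and ties are broken by page name so that the result is deterministic.

```scala
object TopPagesSketch {
  // Extract the page name from one access-log line: take the quoted request,
  // then its middle token (the URL), then the last path segment.
  def extractJspName(line: String): String = {
    val request = line.substring(line.indexOf("\"") + 1, line.lastIndexOf("\""))
    val url = request.split(" ")(1)
    url.substring(url.lastIndexOf("/") + 1)
  }

  // Step 1: count hits per page. Step 2: sort descending. Step 3: keep the first n.
  def topPages(lines: Seq[String], n: Int): Seq[(String, Int)] =
    lines
      .map(extractJspName)
      .groupBy(identity)
      .map { case (name, hits) => (name, hits.size) }
      .toSeq
      .sortBy { case (name, count) => (-count, name) } // ties broken by name
      .take(n)

  // Six lines taken from the test data: oracle.jsp x3, hadoop.jsp x2, java.jsp x1.
  val sample: Seq[String] = Seq(
    """192.168.88.1 - - [30/Jul/2017:12:54:37 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242""",
    """192.168.88.1 - - [30/Jul/2017:12:54:38 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242""",
    """192.168.88.1 - - [30/Jul/2017:12:54:38 +0800] "GET /MyDemoWeb/java.jsp HTTP/1.1" 200 240""",
    """192.168.88.1 - - [30/Jul/2017:12:54:40 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242""",
    """192.168.88.1 - - [30/Jul/2017:12:54:40 +0800] "GET /MyDemoWeb/hadoop.jsp HTTP/1.1" 200 242""",
    """192.168.88.1 - - [30/Jul/2017:12:54:42 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242"""
  )

  def main(args: Array[String]): Unit =
    println(topPages(sample, 2))
}
```

In the Spark version, `reduceByKey` plays the role of `groupBy` plus counting, and `sortBy(_._2, false)` followed by `take(2)` covers the remaining two steps.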

package demo

import org.apache.spark.{SparkConf, SparkContext}

object MapPartitionsDemo{

def main(args: Array[String]): Unit = {

val conf = new SparkConf()

conf.setAppName("MapPartitionsDemo").setMaster("local")

val sc = new SparkContext(conf)

val rdd1 = sc.textFile("G:\\大数据第七期vip课程\\正式课\\0611-第三十二章节\\localhost_access_log.2017-07-30.txt").map{

line =>{

//192.168.88.1 - - [30/Jul/2017:12:53:43 +0800] "GET /MyDemoWeb/head.jsp HTTP/1.1" 200 713

//Parse the line to find the JSP name

//Step 1: extract GET /MyDemoWeb/head.jsp HTTP/1.1

val index1= line.indexOf("\"") //position of the opening double quote

val index2 = line.lastIndexOf("\"") //position of the closing double quote

val str = line.substring(index1+1,index2)

//Step 2: extract /MyDemoWeb/head.jsp

val index3= str.indexOf(" ") //position of the first space (after GET)

val index4 = str.lastIndexOf(" ") //position of the last space (before HTTP/1.1)

val str1 = str.substring(index3+1,index4)

//Step 3: extract ***.jsp

val jspname=str1.substring(str1.lastIndexOf("/")+1)

//Return (***.jsp,1)

(jspname,1)

}

}

//Sum the counts for each jspname

val rdd2 = rdd1.reduceByKey(_+_)

//Sort by visit count, descending

val rdd3 = rdd2.sortBy(_._2,false)

//Take the first two records

println(rdd3.take(2).toBuffer)//ArrayBuffer((oracle.jsp,9), (hadoop.jsp,9))

sc.stop()

}

}

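Example 2: Analyze the Tomcat access log and partition it by page name (similar to a custom partitioner in MapReduce)

Result: the page name, followed by the access-log line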

oracle.jsp 192.168.88.1 - - [30/Jul/2017:12:54:37 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242

oracle.jsp 192.168.88.1 - - [30/Jul/2017:12:54:53 +0800] "GET /MyDemoWeb/oracle.jsp HTTP/1.1" 200 242
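The partitioning rule described above gives every distinct page name its own partition number. A minimal sketch of that mapping in plain Scala follows. It has no Spark dependency: `PagePartitionRule` is a hypothetical stand-in that only mirrors the two methods of a Spark `Partitioner`, and the page names in the demo are sample values.

```scala
// Hypothetical stand-in for a Spark Partitioner: same two methods, no Spark dependency.
class PagePartitionRule(allJspNames: Array[String]) {
  // Each distinct page name is mapped to the index at which it first appears.
  private val partitionOf: Map[String, Int] =
    allJspNames.distinct.zipWithIndex.toMap

  // One partition per distinct page name.
  def numPartitions: Int = partitionOf.size

  // Unknown keys fall back to partition 0.
  def getPartition(key: Any): Int = partitionOf.getOrElse(key.toString, 0)
}

object PagePartitionRuleDemo {
  def main(args: Array[String]): Unit = {
    val rule = new PagePartitionRule(Array("oracle.jsp", "hadoop.jsp", "java.jsp"))
    println(rule.numPartitions)               // 3
    println(rule.getPartition("hadoop.jsp"))  // 1
    println(rule.getPartition("missing.jsp")) // 0 (fallback)
  }
}
```

Because every page name has its own partition, saving the partitioned RDD produces one output file per page.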

package demo

import java.util

import org.apache.spark.{Partitioner, SparkConf, SparkContext}

object MyTomcatPartitioerDemo {

def main(args:Array[String]):Unit={

System.setProperty("hadoop.home.dir","G:\\hadoop\\hadoop-2.4.1\\hadoop-2.4.1")

val conf = new SparkConf()

conf.setAppName("MapPartitionsWithIndexDemo").setMaster("local")

val sc = new SparkContext(conf)

val rdd1 = sc.textFile("G:\\localhost_access_log.2017-07-30.txt").map{

line =>{

//192.168.88.1 - - [30/Jul/2017:12:53:43 +0800] "GET /MyDemoWeb/head.jsp HTTP/1.1" 200 713

//Parse the line to find the JSP name

//Step 1: extract GET /MyDemoWeb/head.jsp HTTP/1.1

val index1= line.indexOf("\"") //position of the opening double quote

val index2 = line.lastIndexOf("\"") //position of the closing double quote

val str = line.substring(index1+1,index2)

//Step 2: extract /MyDemoWeb/head.jsp

val index3= str.indexOf(" ") //position of the first space (after GET)

val index4 = str.lastIndexOf(" ") //position of the last space (before HTTP/1.1)

val str1 = str.substring(index3+1,index4)

//Step 3: extract ***.jsp

val jspname=str1.substring(str1.lastIndexOf("/")+1)

//Return (***.jsp, the corresponding log line)

(jspname,line)

}

}

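//Partition by JSP name; first find out how many distinct names there are

//Collect all distinct names --> Array[String]

val rdd2 = rdd1.map(_._1).distinct().collect()

//Create the partitioning rule from the JSP names

val mypartitioner = new MyPartitions(rdd2)

//Note: rdd1 is an RDD of (jspname, line) pairs, which partitionBy requires

//Apply the partitioning

val result = rdd1.partitionBy(mypartitioner)

//Write the output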

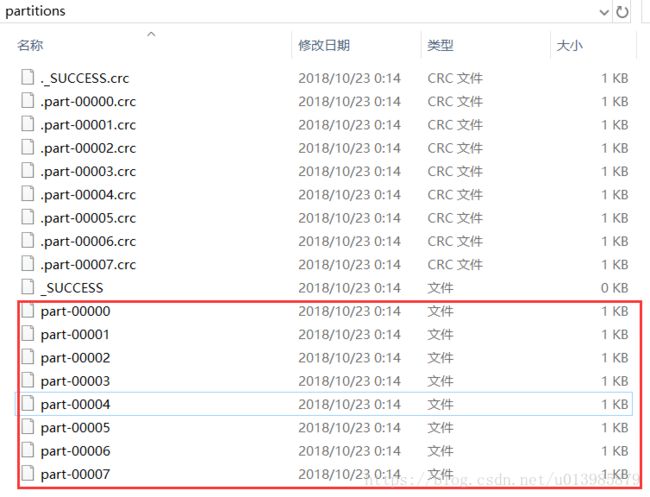

result.saveAsTextFile("G:\\partitions")

sc.stop()

}

}

class MyPartitions(allJspName:Array[String]) extends Partitioner{

//Map that holds the partitioning rule

//key: JSP name (String); value: partition number (Int)

val partitionMap= new util.HashMap[String,Int]()

//Build the rule: one partition per JSP name

var partID = 0

for(name <- allJspName){

partitionMap.put(name,partID)

partID += 1

}

//Return the number of partitions

override def numPartitions: Int = partitionMap.size()

//Look up the partition number for a key (the JSP name)

override def getPartition(key: Any): Int = {

partitionMap.getOrDefault(key.toString,0)

}

}

Output:

Notes:

1. The output directory passed to result.saveAsTextFile("G:\\partitions") must not already exist; otherwise the job fails with an "already exists" error:

Exception in thread "main" org.apache.hadoop.mapred.FileAlreadyExistsException: Output directory file:/G:/partitions already exists

at org.apache.hadoop.mapred.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:131)

at org.apache.spark.rdd.PairRDDFunctions$$anonfun$saveAsHadoopDataset$1.apply$mcV$sp(PairRDDFunctions.scala:1191)
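One way to avoid this error when re-running the job is to delete the old output directory before saving. The sketch below uses only `java.io.File`. `OutputDirCleanup` and `deleteRecursively` are hypothetical names, and the demo works on a temporary directory rather than the real output path, because the deletion is irreversible.

```scala
import java.io.File
import java.nio.file.Files

object OutputDirCleanup {
  // Recursively delete a file or directory.
  // Returns true if the path no longer exists afterwards.
  def deleteRecursively(f: File): Boolean = {
    if (f.isDirectory) {
      // listFiles may return null on an I/O error, so wrap it in Option.
      Option(f.listFiles).getOrElse(Array.empty[File]).foreach(deleteRecursively)
    }
    f.delete()
    !f.exists()
  }

  def main(args: Array[String]): Unit = {
    // Simulate a leftover output directory containing one part file.
    val dir = Files.createTempDirectory("partitions").toFile
    new File(dir, "part-00000").createNewFile()

    println(deleteRecursively(dir)) // true once the directory is gone
  }
}
```

In the job itself, a call such as `OutputDirCleanup.deleteRecursively(new File("G:\\partitions"))` would go just before `result.saveAsTextFile("G:\\partitions")`, assuming the old results are no longer needed.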

2. On Windows, set System.setProperty("hadoop.home.dir","G:\\hadoop\\hadoop-2.4.1\\hadoop-2.4.1")

Otherwise you may see:

Caused by: java.io.IOException: (null) entry in command string: null chmod 0644 G:\partitions1\_temporary\0\_temporary\attempt_20181023002946_0003_m_000000_3\part-00000

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:770)

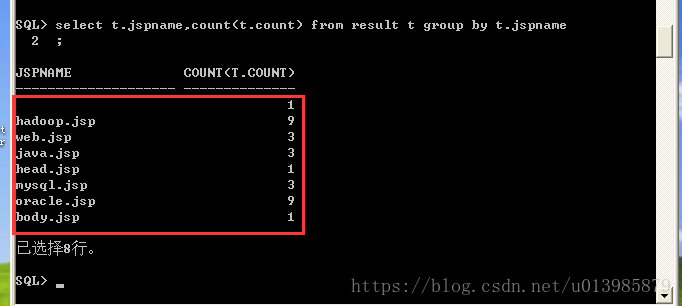
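On Windows, Hadoop's shell helper looks for `bin\winutils.exe` under `hadoop.home.dir`, and the `null chmod` message above is what appears when it cannot find it. A small check before setting the property makes the cause easier to spot. This is a sketch: `HadoopHomeCheck` and `hasWinutils` are hypothetical names, and the path in `main` is the one used in this article.

```scala
import java.io.File

object HadoopHomeCheck {
  // True if <hadoopHome>/bin/winutils.exe exists as a regular file.
  def hasWinutils(hadoopHome: String): Boolean =
    new File(new File(hadoopHome, "bin"), "winutils.exe").isFile

  def main(args: Array[String]): Unit = {
    val hadoopHome = "G:\\hadoop\\hadoop-2.4.1\\hadoop-2.4.1" // path from the note above
    if (hasWinutils(hadoopHome)) {
      // Must be set before the SparkContext is created.
      System.setProperty("hadoop.home.dir", hadoopHome)
    } else {
      println("winutils.exe not found under " + new File(hadoopHome, "bin"))
    }
  }
}
```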

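Example 3: Save the results of the analysis above to Oracle (key points: where should the Connection be created, and how should non-serializable objects be handled?)

1. create table result(jspname varchar2(20), count number);

2. Write a JDBC program in Scala and include the Oracle driver

3. When operating on the RDD, work per partition where possible to avoid serialization problems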
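A JDBC `Connection` is not serializable, so it cannot be created on the driver and shipped inside a closure. It has to be created where the records are processed. Creating it once per partition rather than once per record keeps the number of connections small. The following simulation illustrates the difference without a database or Spark: `FakeConnection` is a hypothetical stand-in that only counts how often a connection is opened, and each inner `Seq` plays the role of one partition.

```scala
import java.util.concurrent.atomic.AtomicInteger

object ConnectionPerPartitionSketch {
  // Hypothetical stand-in for a JDBC connection: it only counts how often it is opened.
  final class FakeConnection(opened: AtomicInteger) {
    opened.incrementAndGet()
    def insert(row: (String, Int)): Unit = () // pretend to write the row
    def close(): Unit = ()
  }

  // Opens one connection for every record; returns the number of connections opened.
  def savePerRecord(partitions: Seq[Seq[(String, Int)]]): Int = {
    val opened = new AtomicInteger(0)
    partitions.foreach { part =>
      part.foreach { row =>
        val con = new FakeConnection(opened)
        try con.insert(row) finally con.close()
      }
    }
    opened.get()
  }

  // Opens one connection for every partition and reuses it for all of its records.
  def savePerPartition(partitions: Seq[Seq[(String, Int)]]): Int = {
    val opened = new AtomicInteger(0)
    partitions.foreach { part =>
      val con = new FakeConnection(opened)
      try part.foreach(row => con.insert(row)) finally con.close()
    }
    opened.get()
  }

  def main(args: Array[String]): Unit = {
    val partitions = Seq(
      Seq(("oracle.jsp", 1), ("hadoop.jsp", 1), ("oracle.jsp", 1)),
      Seq(("java.jsp", 1), ("web.jsp", 1))
    )
    println(savePerRecord(partitions))    // 5 connections, one per record
    println(savePerPartition(partitions)) // 2 connections, one per partition
  }
}
```

With Spark, `foreachPartition` corresponds to the second variant: the function passed to it runs once per partition and receives an iterator over that partition's records.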

package demo

import java.sql.{Connection, DriverManager, PreparedStatement}

import org.apache.spark.{SparkConf, SparkContext}

object MyOracleDemo {

def main(args: Array[String]): Unit = {

System.setProperty("hadoop.home.dir","G:\\hadoop\\hadoop-2.4.1\\hadoop-2.4.1")

val conf = new SparkConf()

conf.setAppName("MapPartitionsWithIndexDemo").setMaster("local")

val sc = new SparkContext(conf)

val rdd1 = sc.textFile("G:\\localhost_access_log.2017-07-30.txt").map{

line =>{

//192.168.88.1 - - [30/Jul/2017:12:53:43 +0800] "GET /MyDemoWeb/head.jsp HTTP/1.1" 200 713

//Parse the line to find the JSP name

//Step 1: extract GET /MyDemoWeb/head.jsp HTTP/1.1

val index1= line.indexOf("\"") //position of the opening double quote

val index2 = line.lastIndexOf("\"") //position of the closing double quote

val str = line.substring(index1+1,index2)

//Step 2: extract /MyDemoWeb/head.jsp

val index3= str.indexOf(" ") //position of the first space (after GET)

val index4 = str.lastIndexOf(" ") //position of the last space (before HTTP/1.1)

val str1 = str.substring(index3+1,index4)

//Step 3: extract ***.jsp

val jspname=str1.substring(str1.lastIndexOf("/")+1)

//Return (***.jsp,1)

(jspname,1)

}

}

//For each partition, create a connection and save the records to the database

rdd1.foreachPartition(saveToOracle)

sc.stop()

}

def saveToOracle(iter:Iterator[(String,Int)])={

var con:Connection = null

var pst:PreparedStatement = null

try{

Class.forName("oracle.jdbc.driver.OracleDriver").newInstance()

con = DriverManager.getConnection("jdbc:oracle:thin:@192.168.163.134:1521:orcl","scott","tiger")

pst = con.prepareStatement("insert into result values (?,?)")

iter.foreach(data =>{

pst.setString(1,data._1)

pst.setInt(2,data._2)

pst.executeUpdate()

})

}catch{

case e1:Exception => e1.printStackTrace()

}finally{

if(pst != null) pst.close()

if(con != null) con.close()

}

}

}

The following program reads from Oracle with JdbcRDD:

package demo

import java.sql.DriverManager

import org.apache.spark.rdd.JdbcRDD

import org.apache.spark.{SparkConf, SparkContext}

object MyJDBCRDDDemo {

val connection = () =>{

Class.forName("oracle.jdbc.driver.OracleDriver").newInstance()

DriverManager.getConnection("jdbc:oracle:thin:@192.168.163.134:1521:orcl","scott","tiger")

}

def main(args: Array[String]): Unit = {

val sparkconf = new SparkConf()

sparkconf.setAppName("MyJDBCRDDDemo").setMaster("local")

val sc = new SparkContext(sparkconf)

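//Names and salaries of employees in department 10 earning more than 2000

//JdbcRDD binds lowerBound (2000) and upperBound (10) to the two ? placeholders; this trick only works with numPartitions = 1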

val oracleRDD = new JdbcRDD(sc,connection,"select * from emp where sal > ? and deptno=?",2000,10,1,r=>{

// print(r.toString)

val ename=r.getString(2)

val sal = r.getInt(6)

(ename,sal)

})

val result = oracleRDD.collect()

println(result.toBuffer)

sc.stop()

}

}
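
The `2000, 10, 1` arguments in the listing above are JdbcRDD's `lowerBound`, `upperBound`, and `numPartitions`. JdbcRDD normally uses the two bounds to split a key range across partitions and binds each partition's start and end to the two `?` placeholders. The sketch below re-implements that splitting in plain Scala as I understand it. It is an illustration, not Spark's code, and `JdbcBoundsSketch` and `partitionBounds` are hypothetical names. It shows why the listing's values reach the query unchanged when there is only one partition.

```scala
object JdbcBoundsSketch {
  // Split the inclusive range [lowerBound, upperBound] into numPartitions
  // (start, end) pairs; each pair would be bound to the two ? placeholders.
  def partitionBounds(lowerBound: Long, upperBound: Long, numPartitions: Int): Seq[(Long, Long)] = {
    // Bounds are inclusive, hence the + 1 here and the - 1 on end.
    val length = BigInt(1) + upperBound - lowerBound
    (0 until numPartitions).map { i =>
      val start = lowerBound + ((i * length) / numPartitions)
      val end = lowerBound + (((i + 1) * length) / numPartitions) - 1
      (start.toLong, end.toLong)
    }
  }

  def main(args: Array[String]): Unit = {
    // The values from the listing: a single partition passes them through as (2000, 10).
    println(partitionBounds(2000, 10, 1))
    // A conventional use: ids 1..100 split into four ranges of 25 ids each.
    println(partitionBounds(1, 100, 4))
  }
}
```

With more than one partition, `2000` and `10` would be cut into sub-ranges that no longer mean "sal > 2000 and deptno = 10". The usual pattern is a query of the form `where id >= ? and id <= ?` over a numeric key, with any other filter values written directly into the SQL text.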