OpenCV: Feature Detection and Description

References:

1. http://docs.opencv.org/3.3.0/ (official API documentation)

2. http://docs.opencv.org/3.3.0/d6/d00/tutorial_py_root.html (official English tutorial)

3. https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_tutorials.html

4. https://github.com/makelove/OpenCV-Python-Tutorial# (advanced tutorial)

5. https://docs.opencv.org/3.3.0/index.html (official English tutorial)

6. https://github.com/abidrahmank/OpenCV2-Python-Tutorials

7. https://www.learnopencv.com/

8. http://answers.opencv.org/questions/ (OpenCV forum)

9. https://github.com/opencv/opencv (official GitHub repository)

Note: the installed version is opencv_python-3.3.0-cp36-cp36m-win_amd64.whl

Reference for the sections below: https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_tutorials.html

Harris Corner Detection

Goal

In this chapter,

- We will understand the concepts behind Harris Corner Detection.

- We will see the functions: cv2.cornerHarris(), cv2.cornerSubPix()

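Harris Corner Detector in OpenCV

OpenCV has the function cv2.cornerHarris(). Its arguments are:

img - Input image; it should be grayscale and of type float32.

blockSize - The size of the neighbourhood considered for corner detection.

ksize - Aperture parameter of the Sobel derivative used.

k - Harris detector free parameter in the equation.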
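To make the role of k more concrete, here is a minimal NumPy sketch of the standard Harris score R = det(M) - k * trace(M)^2, where M is the 2x2 matrix of summed gradient products over the neighbourhood. The three matrices below are made-up values for illustration only, and the helper harris_response is a hypothetical name, not an OpenCV function.

```python
import numpy as np

def harris_response(m, k=0.04):
    """Harris score R = det(M) - k * trace(M)^2 for a 2x2 matrix M."""
    det = m[0, 0] * m[1, 1] - m[0, 1] * m[1, 0]
    trace = m[0, 0] + m[1, 1]
    return det - k * trace ** 2

# Hypothetical gradient-product matrices (sums of Ix^2, Ix*Iy, Iy^2 in a window).
corner = np.array([[10.0, 0.0], [0.0, 10.0]])  # strong gradients in both directions
edge = np.array([[10.0, 0.0], [0.0, 0.0]])     # gradient in one direction only
flat = np.array([[0.0, 0.0], [0.0, 0.0]])      # no gradient at all

r_corner = harris_response(corner)  # large positive score
r_edge = harris_response(edge)      # negative score
r_flat = harris_response(flat)      # zero score
print(r_corner, r_edge, r_flat)
```

A larger k penalises the trace term more strongly, so fewer points score high enough to count as corners.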

    import cv2
    import numpy as np
    filename = 'chessboard.jpg'
    img = cv2.imread(filename)
    gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)
    gray = np.float32(gray)
    dst = cv2.cornerHarris(gray,2,3,0.04)
    # result is dilated for marking the corners, not important
    dst = cv2.dilate(dst,None)
    # Threshold for an optimal value, it may vary depending on the image.
    img[dst>0.01*dst.max()]=[0,0,255]
    cv2.imshow('dst',img)
    if cv2.waitKey(0) & 0xff == 27:
        cv2.destroyAllWindows()


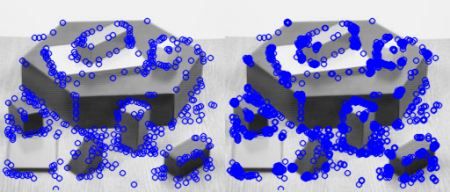

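Corner with SubPixel Accuracy

Sometimes you may need to find the corners with maximum accuracy. OpenCV comes with a function, cv2.cornerSubPix(), which further refines the detected corners to sub-pixel accuracy. Below is an example. As usual, we need to find the Harris corners first. Then we pass the centroids of these corners (there may be a bunch of pixels at one corner, so we take their centroid) to refine them. Harris corners are marked as red pixels and refined corners as green pixels. For this function, we have to define when to stop the iteration. We stop after a specified number of iterations or once a certain accuracy is reached, whichever occurs first. We also need to define the size of the neighbourhood in which it searches for corners.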

    import cv2
    import numpy as np
    filename = 'chessboard.jpg'
    img = cv2.imread(filename)
    gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)
    # find Harris corners
    gray = np.float32(gray)
    dst = cv2.cornerHarris(gray,2,3,0.04)
    dst = cv2.dilate(dst,None)
    ret, dst = cv2.threshold(dst,0.01*dst.max(),255,0)
    dst = np.uint8(dst)
    # find centroids
    ret, labels, stats, centroids = cv2.connectedComponentsWithStats(dst)
    # define the criteria to stop and refine the corners
    criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 100, 0.001)
    corners = cv2.cornerSubPix(gray,np.float32(centroids),(5,5),(-1,-1),criteria)
    # Now draw them
    res = np.hstack((centroids,corners))
    res = np.intp(res)  # np.int0 is an alias of np.intp, deprecated in recent NumPy
    img[res[:,1],res[:,0]]=[0,0,255]
    img[res[:,3],res[:,2]] = [0,255,0]
    # cv2.imwrite('subpixel5.png',img)
    cv2.imshow('subpixel5.png',img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
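The stopping rule described above (a maximum number of iterations or a target accuracy, whichever comes first) can be sketched with a one-dimensional toy refinement. This is only an illustration of the termination logic, not the algorithm inside cv2.cornerSubPix(); the names refine and step_fn are hypothetical.

```python
def refine(start, step_fn, max_iter=100, eps=0.001):
    """Iterate step_fn until the update is smaller than eps or max_iter is hit."""
    x = start
    for iteration in range(1, max_iter + 1):
        new_x = step_fn(x)
        if abs(new_x - x) < eps:       # accuracy criterion reached first
            return new_x, iteration
        x = new_x
    return x, max_iter                 # iteration limit reached first

# Toy update that moves halfway towards the true position 2.0 on every step.
halfway = lambda x: (x + 2.0) / 2.0

pos_eps, n_eps = refine(0.0, halfway, max_iter=100, eps=0.001)
pos_cap, n_cap = refine(0.0, halfway, max_iter=5, eps=0.001)
print(pos_eps, n_eps, pos_cap, n_cap)
```

With a generous iteration limit the loop ends on the accuracy test; with a limit of 5 it ends on the iteration count, leaving a coarser estimate.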

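Shi-Tomasi Corner Detector & Good Features to Track

Goal

In this chapter,

- We will learn about another corner detector: the Shi-Tomasi Corner Detector

- We will see the function: cv2.goodFeaturesToTrack()

Code

OpenCV has a function, cv2.goodFeaturesToTrack(). It finds the N strongest corners in the image by the Shi-Tomasi method (or by Harris Corner Detection, if you specify it). As usual, the image should be grayscale. Then you specify the number of corners you want to find. Then you specify the quality level, a value between 0 and 1 that denotes the minimum corner quality below which every corner is rejected. Then we provide the minimum Euclidean distance between detected corners. With all this information, the function finds corners in the image. All corners below the quality level are rejected. The remaining corners are sorted by quality in descending order. The function then takes the strongest corner, throws away all nearby corners within the minimum distance, and returns the N strongest corners.

In the example below, we try to find the 25 best corners: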

    import numpy as np
    import cv2
    from matplotlib import pyplot as plt
    img = cv2.imread('chessboard.jpg')
    gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)
    corners = cv2.goodFeaturesToTrack(gray,25,0.01,10)
    corners = np.intp(corners)  # np.int0 is an alias of np.intp, deprecated in recent NumPy
    for i in corners:
        x,y = i.ravel()
        cv2.circle(img,(int(x),int(y)),3,255,-1)
    plt.imshow(img),plt.show()
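The selection procedure described above (reject weak corners, sort by quality, then enforce a minimum distance) can be sketched in plain Python. This is a simplified illustration, not OpenCV's implementation: select_corners is a hypothetical helper, the candidate scores are made up, and we assume the quality level is applied relative to the strongest score, as the OpenCV documentation describes.

```python
import math

def select_corners(candidates, max_corners, quality_level, min_distance):
    """Pick up to max_corners from a list of (x, y, score) candidates."""
    if not candidates:
        return []
    best = max(score for _, _, score in candidates)
    # 1. Reject every candidate below quality_level * best score.
    strong = [c for c in candidates if c[2] >= quality_level * best]
    # 2. Sort the survivors by score, strongest first.
    strong.sort(key=lambda c: c[2], reverse=True)
    # 3. Greedily keep a corner only if it is far enough from all kept corners.
    kept = []
    for x, y, score in strong:
        if all(math.hypot(x - kx, y - ky) >= min_distance for kx, ky, _ in kept):
            kept.append((x, y, score))
        if len(kept) == max_corners:
            break
    return kept

# Hypothetical candidates: (x, y, corner score).
candidates = [(0, 0, 1.0), (1, 1, 0.9), (20, 20, 0.5), (40, 40, 0.005), (60, 60, 0.3)]
selected = select_corners(candidates, max_corners=2, quality_level=0.01, min_distance=10)
print(selected)
```

Here (1, 1) is dropped because it sits too close to the stronger corner at (0, 0), and (40, 40) is dropped because its score falls below the quality threshold.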

Introduction to SIFT (Scale-Invariant Feature Transform)

Goal

In this chapter,

- We will learn about the concepts of the SIFT algorithm

- We will learn to find SIFT keypoints and descriptors.

The SIFT algorithm mainly involves four steps, followed by keypoint matching. We will look at them one by one.

1. Scale-space Extrema Detection

2. Keypoint Localization

3. Orientation Assignment

4. Keypoint Descriptor

5. Keypoint Matching

SIFT in OpenCV

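So now let's look at the SIFT functionality available in OpenCV. We start with keypoint detection and draw the keypoints. First we have to construct a SIFT object. We can pass different parameters to it; they are optional and well explained in the docs.

    import cv2
    import numpy as np
    img = cv2.imread('home.jpg')
    gray= cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)
    # OpenCV 3.x: SIFT lives in the contrib module xfeatures2d (cv2.SIFT() is the 2.4 API)
    sift = cv2.xfeatures2d.SIFT_create()
    kp = sift.detect(gray,None)
    img=cv2.drawKeypoints(gray,kp,None)
    # cv2.imwrite('sift_keypoints.jpg',img)
    cv2.imshow('sift_keypoints.jpg',img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

The sift.detect() function finds keypoints in the image. You can pass a mask if you want to search only a part of the image. Each keypoint is a special structure with many attributes, such as its (x,y) coordinates, the size of the meaningful neighbourhood, the angle that specifies its orientation, the response that specifies the strength of the keypoint, etc.

OpenCV also provides the cv2.drawKeypoints() function, which draws small circles at the keypoint locations. If you pass the flag cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS, it draws a circle with the size of the keypoint and even shows its orientation. See the example below.

    img=cv2.drawKeypoints(gray,kp,None,flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
    cv2.imwrite('sift_keypoints.jpg',img)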


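Now, to calculate the descriptors, OpenCV provides two methods.

1. Since you have already found the keypoints, you can call sift.compute(), which computes the descriptors from the keypoints we have found. For example: kp,des = sift.compute(gray,kp)

2. If you have not found the keypoints yet, you can find keypoints and descriptors directly in a single step with the function sift.detectAndCompute().

    sift = cv2.xfeatures2d.SIFT_create()
    kp, des = sift.detectAndCompute(gray,None)

Here kp will be a list of keypoints and des is a numpy array of shape (number of keypoints) × 128.

So we got keypoints, descriptors, etc. Now we want to see how to match keypoints in different images. We will learn that in a later section.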

Introduction to SURF (Speeded-Up Robust Features)

Goal

In this chapter,

- We will see the basics of SURF

- We will see SURF functionalities in OpenCV

SURF in OpenCV

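OpenCV provides SURF functionality just like SIFT. You initiate a SURF object with some optional conditions like 64/128-dim descriptors, Upright/Normal SURF, etc. All the details are well explained in the docs. Then, as we did with SIFT, we can use SURF.detect(), SURF.compute(), etc. to find keypoints and descriptors. First we will see a simple demo of how to find SURF keypoints and descriptors and draw them. All examples are shown in a Python terminal, since the usage is the same as for SIFT.

    >>> import cv2
    >>> from matplotlib import pyplot as plt
    >>> img = cv2.imread('fly.png',0)
    # Create SURF object. You can specify params here or later.
    # Here I set Hessian Threshold to 400
    # (OpenCV 3.x: SURF lives in the contrib module xfeatures2d)
    >>> surf = cv2.xfeatures2d.SURF_create(400)
    # Find keypoints and descriptors directly
    >>> kp, des = surf.detectAndCompute(img,None)
    >>> len(kp)
    699

699 keypoints are too many to show in a picture. We reduce the number to around 50 to draw them on an image. While matching we may need all those features, but not now. So we increase the Hessian threshold.

    # Check present Hessian threshold
    >>> print(surf.getHessianThreshold())
    400.0
    # We set it to some 50000. Remember, it is just for representing in picture.
    # In actual cases, it is better to have a value 300-500
    >>> surf.setHessianThreshold(50000)
    # Again compute keypoints and check its number.
    >>> kp, des = surf.detectAndCompute(img,None)
    >>> print(len(kp))
    47

    >>> img2 = cv2.drawKeypoints(img,kp,None,(255,0,0),4)
    >>> plt.imshow(img2),plt.show()

In the result, you can see that SURF is more like a blob detector. It detects the white blobs on the wings of the butterfly. You can test it with other images.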


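Now I want to apply U-SURF, so that it does not compute the orientation.

    # Check upright flag, if it False, set it to True
    >>> print(surf.getUpright())
    False
    >>> surf.setUpright(True)
    # Recompute the feature points and draw it
    >>> kp = surf.detect(img,None)
    >>> img2 = cv2.drawKeypoints(img,kp,None,(255,0,0),4)
    >>> plt.imshow(img2),plt.show()

In the result, all the orientations are shown in the same direction. It is also faster than before. If you are working on cases where orientation is not a problem (like panorama stitching), this is the better choice.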


Finally we check the descriptor size and change it to 128 if it is only 64-dim.

    # Find size of descriptor
    >>> print(surf.descriptorSize())
    64
    # That means flag, "extended" is False.
    >>> surf.getExtended()
    False
    # So we make it to True to get 128-dim descriptors.
    >>> surf.setExtended(True)
    >>> kp, des = surf.detectAndCompute(img,None)
    >>> print(surf.descriptorSize())
    128
    >>> print(des.shape)
    (47, 128)

FAST Algorithm for Corner Detection

Goal

In this chapter,

- We will understand the basics of the FAST algorithm

- We will find corners using OpenCV functionalities for the FAST algorithm.

FAST Feature Detector in OpenCV

It is called like any other feature detector in OpenCV. If you want, you can specify the threshold, whether non-maximum suppression is applied, the neighbourhood to be used, etc. For the neighbourhood, three flags are defined: cv2.FAST_FEATURE_DETECTOR_TYPE_5_8, cv2.FAST_FEATURE_DETECTOR_TYPE_7_12 and cv2.FAST_FEATURE_DETECTOR_TYPE_9_16. Below is simple code showing how to detect and draw the FAST feature points.

    import numpy as np
    import cv2
    from matplotlib import pyplot as plt
    img = cv2.imread('simple.jpg',0)
    # Initiate FAST object with default values (cv2.FastFeatureDetector() is the 2.4 API)
    fast = cv2.FastFeatureDetector_create()
    # find and draw the keypoints
    kp = fast.detect(img,None)
    img2 = cv2.drawKeypoints(img, kp, None, color=(255,0,0))
    # Print all default params
    print("Threshold: ", fast.getThreshold())
    print("nonmaxSuppression: ", fast.getNonmaxSuppression())
    print("neighborhood: ", fast.getType())
    print("Total Keypoints with nonmaxSuppression: ", len(kp))
    cv2.imwrite('fast_true.png',img2)
    # Disable nonmaxSuppression
    fast.setNonmaxSuppression(0)
    kp = fast.detect(img,None)
    print("Total Keypoints without nonmaxSuppression: ", len(kp))
    img3 = cv2.drawKeypoints(img, kp, None, color=(255,0,0))
    cv2.imwrite('fast_false.png',img3)

BRIEF (Binary Robust Independent Elementary Features)

BRIEF in OpenCV

The code below shows the computation of BRIEF descriptors with the help of the CenSurE detector. (The CenSurE detector is called the STAR detector in OpenCV.)

    import numpy as np
    import cv2
    from matplotlib import pyplot as plt
    img = cv2.imread('simple.jpg',0)
    # Initiate STAR detector (OpenCV 3.x: contrib module xfeatures2d)
    star = cv2.xfeatures2d.StarDetector_create()
    # Initiate BRIEF extractor
    brief = cv2.xfeatures2d.BriefDescriptorExtractor_create()
    # find the keypoints with STAR
    kp = star.detect(img,None)
    # compute the descriptors with BRIEF
    kp, des = brief.compute(img, kp)
    print(brief.descriptorSize())
    print(des.shape)

The function brief.descriptorSize() gives the descriptor size in bytes. By default it is 32. The next step is matching, which is covered in a later section.

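ORB (Oriented FAST and Rotated BRIEF)

ORB in OpenCV

As usual, we have to create an ORB object with the function cv2.ORB_create() or by using the feature2d common interface. It has a number of optional parameters. The most useful are nfeatures, which denotes the maximum number of features to be retained (500 by default), and scoreType, which denotes whether the Harris score or the FAST score is used to rank the features (Harris score by default). Another parameter, WTA_K, decides the number of points that produce each element of the oriented BRIEF descriptor. By default it is two, i.e. two points are selected at a time. In that case, the NORM_HAMMING distance is used for matching. If WTA_K is 3 or 4, so that 3 or 4 points produce each descriptor element, the matching distance is defined by NORM_HAMMING2.

    import numpy as np
    import cv2
    from matplotlib import pyplot as plt
    img = cv2.imread('simple.jpg',0)
    # Initiate ORB detector (cv2.ORB() is the 2.4 API)
    orb = cv2.ORB_create()
    # find the keypoints with ORB
    kp = orb.detect(img,None)
    # compute the descriptors with ORB
    kp, des = orb.compute(img, kp)
    # draw only keypoints location,not size and orientation
    img2 = cv2.drawKeypoints(img,kp,None,color=(0,255,0), flags=0)
    plt.imshow(img2),plt.show()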

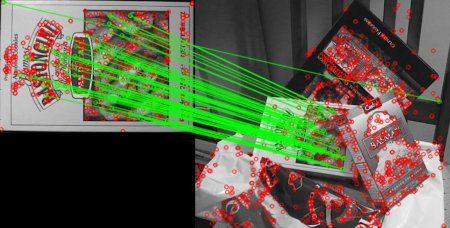

Feature Matching

Brute-Force Matching with ORB Descriptors

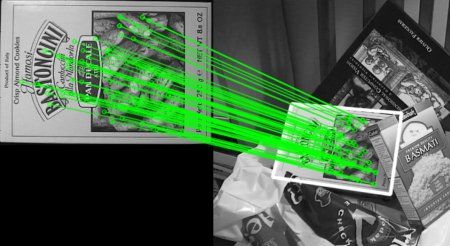

Here we will see a simple example of how to match features between two images. In this example, I have a queryImage and a trainImage. We will try to find the queryImage in the trainImage using feature matching. (The images are /samples/c/box.png and /samples/c/box_in_scene.png.) We are using ORB descriptors to match features. So let's start by loading the images, finding the descriptors, etc.

    import numpy as np
    import cv2
    from matplotlib import pyplot as plt
    img1 = cv2.imread('box.png',0)          # queryImage
    img2 = cv2.imread('box_in_scene.png',0) # trainImage
    # Initiate ORB detector
    orb = cv2.ORB_create()
    # find the keypoints and descriptors with ORB
    kp1, des1 = orb.detectAndCompute(img1,None)
    kp2, des2 = orb.detectAndCompute(img2,None)

Next we create a BFMatcher object with the distance measurement cv2.NORM_HAMMING (since we are using ORB) and with crossCheck switched on for better results. Then we use the Matcher.match() method to get the best matches between the two images. We sort them in ascending order of their distances so that the best matches (with low distance) come to the front. Then we draw only the first 10 matches (just for the sake of visibility; you can increase it as you like).

    # create BFMatcher object
    bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    # Match descriptors.
    matches = bf.match(des1,des2)
    # Sort them in the order of their distance.
    matches = sorted(matches, key = lambda x:x.distance)
    # Draw first 10 matches.
    img3 = cv2.drawMatches(img1,kp1,img2,kp2,matches[:10],None,flags=2)
    plt.imshow(img3),plt.show()
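To see what brute-force matching with Hamming distance and cross-checking amounts to, here is a small NumPy sketch on made-up one-byte descriptors. It is an illustration of the idea only, not OpenCV's BFMatcher; hamming_distance and cross_checked_matches are hypothetical helper names, and real ORB descriptors are 32 bytes long.

```python
import numpy as np

def hamming_distance(a, b):
    """Number of differing bits between two uint8 descriptors."""
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())

def cross_checked_matches(query, train):
    """Return (queryIdx, trainIdx, distance) for mutual nearest neighbours."""
    dist = np.array([[hamming_distance(q, t) for t in train] for q in query])
    best_train = dist.argmin(axis=1)   # closest train descriptor for each query
    best_query = dist.argmin(axis=0)   # closest query descriptor for each train
    matches = [(qi, int(ti), int(dist[qi, ti]))
               for qi, ti in enumerate(best_train)
               if best_query[ti] == qi]  # keep only if the choice is mutual
    return sorted(matches, key=lambda m: m[2])  # best (lowest distance) first

# Hypothetical binary descriptors, one byte each.
query = np.array([[0b00000000], [0b11110000], [0b00000001]], dtype=np.uint8)
train = np.array([[0b00000001], [0b11111111]], dtype=np.uint8)

matches = cross_checked_matches(query, train)
print(matches)
```

Query descriptor 0 is closest to train descriptor 0, but train descriptor 0 prefers query descriptor 2, so the cross-check discards that match.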

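What is this Matcher object?

The result of the line matches = bf.match(des1,des2) is a list of DMatch objects. A DMatch object has the following attributes:

DMatch.distance - Distance between descriptors. The lower, the better.

DMatch.trainIdx - Index of the descriptor in the train descriptors

DMatch.queryIdx - Index of the descriptor in the query descriptors

DMatch.imgIdx - Index of the train image.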

Brute-Force Matching with SIFT Descriptors and Ratio Test

This time, we will use BFMatcher.knnMatch() to get the k best matches. In this example, we take k = 2 so that we can apply the ratio test explained by D. Lowe in his paper.

    import numpy as np
    import cv2
    from matplotlib import pyplot as plt
    img1 = cv2.imread('box.png',0)          # queryImage
    img2 = cv2.imread('box_in_scene.png',0) # trainImage
    # Initiate SIFT detector (OpenCV 3.x: contrib module xfeatures2d)
    sift = cv2.xfeatures2d.SIFT_create()
    # find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(img1,None)
    kp2, des2 = sift.detectAndCompute(img2,None)
    # BFMatcher with default params
    bf = cv2.BFMatcher()
    matches = bf.knnMatch(des1,des2, k=2)
    # Apply ratio test
    good = []
    for m,n in matches:
        if m.distance < 0.75*n.distance:
            good.append([m])
    # cv2.drawMatchesKnn expects list of lists as matches.
    img3 = cv2.drawMatchesKnn(img1,kp1,img2,kp2,good,None,flags=2)
    plt.imshow(img3),plt.show()
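The effect of the ratio test is easiest to see on plain numbers. The sketch below uses made-up (best, second-best) distances instead of real DMatch objects; ratio_test is a hypothetical helper that applies the same comparison as the loop above.

```python
def ratio_test(pairs, ratio=0.75):
    """Keep a best distance only if it is clearly smaller than the second best."""
    return [best for best, second in pairs if best < ratio * second]

# Hypothetical (best, second-best) distances for three query descriptors.
pairs = [
    (100.0, 400.0),  # distinctive: the best match is far better than the runner-up
    (200.0, 210.0),  # ambiguous: two candidates are almost equally close
    (50.0, 60.0),    # ambiguous, even though the absolute distance is small
]
kept = ratio_test(pairs)
print(kept)
```

Note that the third pair is rejected despite its small distance: the test measures how distinctive a match is, not how close it is.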

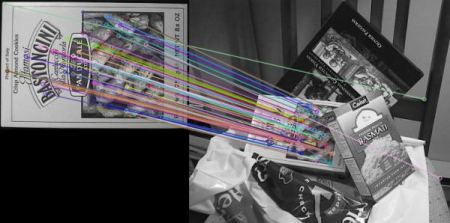

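FLANN-based Matcher

FLANN stands for Fast Library for Approximate Nearest Neighbors. It contains a collection of algorithms optimized for fast nearest-neighbour search in large datasets and for high-dimensional features. It works faster than BFMatcher for large datasets. We will repeat the second example with the FLANN-based matcher.

For the FLANN-based matcher, we need to pass two dictionaries that specify the algorithm to be used and its related parameters. The first one is IndexParams. For the various algorithms, the information to be passed is explained in the FLANN docs. As a summary, for algorithms like SIFT, SURF, etc. you can pass the following:

    index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)

While using ORB, you can pass the following. The commented values are the ones recommended by the docs, but they did not provide the required results in some cases. The other values worked fine:

    index_params= dict(algorithm = FLANN_INDEX_LSH,
                       table_number = 6,      # 12
                       key_size = 12,         # 20
                       multi_probe_level = 1) # 2

The second dictionary is SearchParams. It specifies the number of times the trees in the index should be recursively traversed. Higher values give better precision but also take more time. If you want to change the value, pass search_params = dict(checks=100).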

    import numpy as np
    import cv2
    from matplotlib import pyplot as plt
    img1 = cv2.imread('box.png',0)          # queryImage
    img2 = cv2.imread('box_in_scene.png',0) # trainImage
    # Initiate SIFT detector (OpenCV 3.x: contrib module xfeatures2d)
    sift = cv2.xfeatures2d.SIFT_create()
    # find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(img1,None)
    kp2, des2 = sift.detectAndCompute(img2,None)
    # FLANN parameters
    FLANN_INDEX_KDTREE = 1   # kd-tree in FLANN's algorithm enum (0 selects linear search)
    index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)
    search_params = dict(checks=50)   # or pass empty dictionary
    flann = cv2.FlannBasedMatcher(index_params,search_params)
    matches = flann.knnMatch(des1,des2,k=2)
    # Need to draw only good matches, so create a mask
    matchesMask = [[0,0] for i in range(len(matches))]
    # ratio test as per Lowe's paper
    for i,(m,n) in enumerate(matches):
        if m.distance < 0.7*n.distance:
            matchesMask[i]=[1,0]
    draw_params = dict(matchColor = (0,255,0),
                       singlePointColor = (255,0,0),
                       matchesMask = matchesMask,
                       flags = 0)
    img3 = cv2.drawMatchesKnn(img1,kp1,img2,kp2,matches,None,**draw_params)
    plt.imshow(img3,),plt.show()

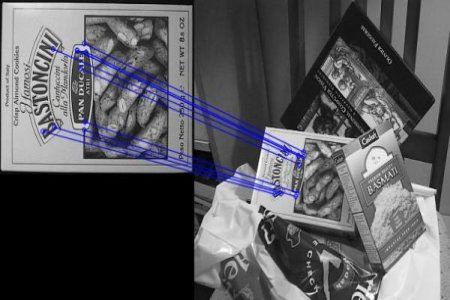

Feature Matching + Homography to Find Objects

Code

First, as usual, let's find SIFT features in the images and apply the ratio test to find the best matches.

    import numpy as np
    import cv2
    from matplotlib import pyplot as plt
    MIN_MATCH_COUNT = 10
    img1 = cv2.imread('box.png',0)          # queryImage
    img2 = cv2.imread('box_in_scene.png',0) # trainImage
    # Initiate SIFT detector (OpenCV 3.x: contrib module xfeatures2d)
    sift = cv2.xfeatures2d.SIFT_create()
    # find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(img1,None)
    kp2, des2 = sift.detectAndCompute(img2,None)
    FLANN_INDEX_KDTREE = 1   # kd-tree in FLANN's algorithm enum (0 selects linear search)
    index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)
    search_params = dict(checks = 50)
    flann = cv2.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1,des2,k=2)
    # store all the good matches as per Lowe's ratio test.
    good = []
    for m,n in matches:
        if m.distance < 0.7*n.distance:
            good.append(m)

Now we set a condition that more than 10 matches (defined by MIN_MATCH_COUNT) must be present to find the object. Otherwise we simply show a message saying that not enough matches are present.

If enough matches are found, we extract the locations of the matched keypoints in both images. They are passed to cv2.findHomography() to find the perspective transformation. Once we get this 3x3 transformation matrix, we use it to transform the corners of the queryImage to the corresponding points in the trainImage. Then we draw it.

    if len(good)>MIN_MATCH_COUNT:
        src_pts = np.float32([ kp1[m.queryIdx].pt for m in good ]).reshape(-1,1,2)
        dst_pts = np.float32([ kp2[m.trainIdx].pt for m in good ]).reshape(-1,1,2)
        M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC,5.0)
        matchesMask = mask.ravel().tolist()
        h,w = img1.shape
        pts = np.float32([ [0,0],[0,h-1],[w-1,h-1],[w-1,0] ]).reshape(-1,1,2)
        dst = cv2.perspectiveTransform(pts,M)
        img2 = cv2.polylines(img2,[np.int32(dst)],True,255,3, cv2.LINE_AA)
    else:
        print("Not enough matches are found - %d/%d" % (len(good),MIN_MATCH_COUNT))
        matchesMask = None
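What the perspective transform does to the four corner points can be sketched with NumPy alone: each point is lifted to homogeneous coordinates, multiplied by the 3x3 matrix, and divided by the last component. The matrix H below is a made-up example (scale by 2, shift by (10, 5)), not one estimated from real matches, and apply_homography is a hypothetical helper.

```python
import numpy as np

def apply_homography(points, H):
    """Map N x 2 points through a 3x3 homography H."""
    pts = np.asarray(points, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # (x, y) -> (x, y, 1)
    mapped = homog @ H.T                              # apply H to every point
    return mapped[:, :2] / mapped[:, 2:3]             # divide by w to get (x', y')

# Hypothetical homography: scale by 2, then translate by (10, 5).
H = np.array([[2.0, 0.0, 10.0],
              [0.0, 2.0, 5.0],
              [0.0, 0.0, 1.0]])

h, w = 4, 3  # size of a tiny hypothetical query image
corners = [[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]
projected = apply_homography(corners, H)
print(projected)
```

For a general homography the last row is not (0, 0, 1), which is why the division by w is needed.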

Finally we draw our inliers (if the object was found successfully) or the matching keypoints (if it failed).

    draw_params = dict(matchColor = (0,255,0),     # draw matches in green color
                       singlePointColor = None,
                       matchesMask = matchesMask,  # draw only inliers
                       flags = 2)
    img3 = cv2.drawMatches(img1,kp1,img2,kp2,good,None,**draw_params)
    plt.imshow(img3, 'gray'),plt.show()