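Building an RPC Framework in Java (4): ZooKeeper Support and Load Balancing

This is the fourth article in the "Building an RPC Framework in Java" series.

The previous article explained the basic principles of using ZooKeeper as a registry:

http://blog.csdn.net/we_phone/article/details/78993394

This article walks through the source code that adds support for a single-node ZooKeeper to the RPC framework.

The full code is available on GitHub: MeiZhuoRPC

Prerequisites for following this article:

- You have read the earlier articles in the series

- You are familiar with the java.util.concurrent package

The article is long because it aims to explain things in detail, so please bear with it. Questions are welcome in the comments.

First, the end result

We start two provider services: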

@Test
public void multi1and2() throws InterruptedException, IOException {
    ClassPathXmlApplicationContext context = new ClassPathXmlApplicationContext(
            new String[] { "file:src/test/java/rpcTest/MultiServer1and2Context.xml" });
    context.start();
    // Start RPC only after Spring has started, so the container is fully loaded
    RPC.start();
}

@Test
public void multi2() throws InterruptedException, IOException {
    ClassPathXmlApplicationContext context = new ClassPathXmlApplicationContext(
            new String[] { "file:src/test/java/rpcTest/MultiServer2Context.xml" });
    context.start();
    // Start RPC only after Spring has started, so the container is fully loaded
    RPC.start();
}

Each provider has its own Spring configuration file (the MultiServer1and2Context.xml and MultiServer2Context.xml loaded above).

Next, we call them from the consumer:

ExecutorService executorService = Executors.newFixedThreadPool(8);
for (int i = 0; i < 1000; i++) {
    int finalI = i + 1;
    executorService.execute(new Runnable() {
        @Override
        public void run() {
            Service1 service1 = (Service1) RPC.call(Service1.class);
            Service2 service2 = (Service2) RPC.call(Service2.class);
            System.out.println("Sending request #" + finalI);
            service1.count();
            service2.count();
        }
    });
}

rpcTest.Service1

rpcTest.Service2

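A thread pool sends 1000 requests here. Each provider simply increments a counter and prints it when a request arrives. I won't paste that code; it is in the unit tests on my GitHub.

The result:

The provider that registers both Service1 and Service2 prints: Service1 count: 1000, Service2 count: 515

The provider that registers only Service2 prints: Service2 count: 485

485 + 515 is exactly 1000.

In other words, Service1 has only one provider, so all of its requests land on that single node, while Service2's requests are spread across two nodes.

This is the load-balanced RPC calling that the ZooKeeper registry gives us.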
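To see why the two Service2 counters add up to 1000 but are not split exactly in half, here is a small stand-alone simulation of random selection. It is only a sketch: `RandomBalanceSimulation`, `distribute`, and the two addresses are made up for illustration, and no ZooKeeper or Netty is involved.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical simulation, not part of MeiZhuoRPC.
class RandomBalanceSimulation {

    /** Sends `requests` calls to randomly chosen providers and returns the per-provider counts. */
    static Map<String, Integer> distribute(List<String> providers, int requests, Random random) {
        Map<String, Integer> counts = new HashMap<>();
        for (String provider : providers) {
            counts.put(provider, 0);
        }
        for (int i = 0; i < requests; i++) {
            String chosen = providers.get(random.nextInt(providers.size()));
            counts.merge(chosen, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        Random random = new Random();
        // One provider: every request lands on it.
        System.out.println(distribute(Arrays.asList("127.0.0.1:8888"), 1000, random));
        // Two providers: the split is roughly even and always sums to 1000.
        System.out.println(distribute(Arrays.asList("127.0.0.1:8888", "127.0.0.1:8889"), 1000, random));
    }
}
```

The exact split varies from run to run, which matches the uneven 515/485 result above.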

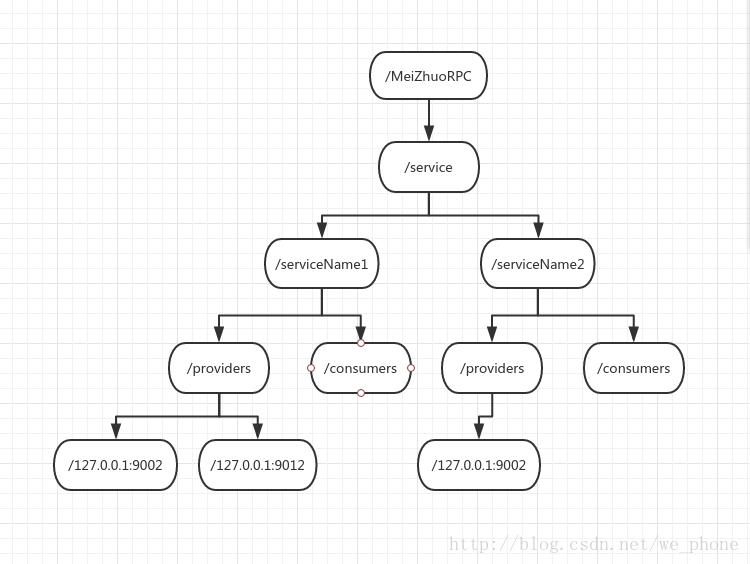

First, the ZooKeeper data model in MeiZhuoRPC

public class ZKConst {
    public static final Integer sessionTimeout = 2000;
    public static final String rootPath = "/MeiZhuoRPC";
    public static final String providersPath = "/providers";
    public static final String consumersPath = "/consumers";
    public static final String servicePath = "/service";
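}

ZooKeeper operations

- Initialize the root nodes, such as /MeiZhuoRPC and /MeiZhuoRPC/service, creating them if they do not exist

- Create the providers node and the IP node for each service

- Fetch and watch all provider IPs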
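The znode layout these operations produce can be sketched with plain string concatenation. The constants mirror the values in ZKConst; the `ZnodePaths` class, its method names, and the example service name and address are hypothetical and only serve to show the shape of the paths.

```java
// Hypothetical helper, not part of MeiZhuoRPC; it only mirrors the ZKConst values.
class ZnodePaths {
    static final String ROOT = "/MeiZhuoRPC";
    static final String SERVICE = "/service";
    static final String PROVIDERS = "/providers";

    /** Persistent node that holds all provider IP nodes of one service. */
    static String providersPath(String serviceName) {
        return ROOT + SERVICE + "/" + serviceName + PROVIDERS;
    }

    /** Node for a single provider address under a service. */
    static String providerPath(String serviceName, String address) {
        return providersPath(serviceName) + "/" + address;
    }

    /** Recovers the service name from a providers path: it is the fourth segment. */
    static String serviceNameOf(String path) {
        // "/MeiZhuoRPC/service/X/providers".split("/") -> ["", "MeiZhuoRPC", "service", "X", "providers"]
        return path.split("/")[3];
    }

    public static void main(String[] args) {
        // prints /MeiZhuoRPC/service/rpcTest.Service2/providers/127.0.0.1:8888
        System.out.println(providerPath("rpcTest.Service2", "127.0.0.1:8888"));
    }
}
```

Because the service name always sits at the same depth, a watcher can recover it from the event path alone.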

// Create the znodes for all registered services
public void createServerService() throws KeeperException, InterruptedException {
    ZKTempZnodes zkTempZnodes = new ZKTempZnodes(zooKeeper);
    Map<String, ?> serviceMap = RPC.getServerConfig().getServerImplMap();
    String ip = RPC.getServerConfig().getServerHost();
    for (Map.Entry<String, ?> entry : serviceMap.entrySet()) {
        // The host from the configuration (default 127.0.0.1:8888) becomes the name of this provider's temporary IP node
        zkTempZnodes.createTempZnode(ZKConst.rootPath + ZKConst.servicePath + "/" + entry.getKey() + ZKConst.providersPath + "/" + ip, null);
        // Create the connection-count node; the count starts at 0
        // zkTempZnodes.createTempZnode(ZKConst.rootPath+ZKConst.balancePath+"/"+entry.getKey()+"/"+ip,0+"");
    }
}

// Get all providers of this service, registering a watcher at the same time
public List<String> getAllServiceIP(String serviceName) throws KeeperException, InterruptedException {
    ZKTempZnodes zkTempZnodes = new ZKTempZnodes(zooKeeper);
    IPWatcher ipWatcher = new IPWatcher(zooKeeper);
    return zkTempZnodes.getPathChildren(ZKConst.rootPath + ZKConst.servicePath + "/" + serviceName + ZKConst.providersPath, ipWatcher);
}

// Initialize the root node and the service provider nodes; all of them are persistent nodes
public void initZnode() throws KeeperException, InterruptedException {
    ZKTempZnodes zkTempZnodes = new ZKTempZnodes(zooKeeper);
    StringBuilder pathBuilder = new StringBuilder(ZKConst.rootPath);
    // String balancePath=ZKConst.rootPath;
    zkTempZnodes.createSimpleZnode(pathBuilder.toString(), null);
    // balancePath=balancePath+ZKConst.balancePath;
    // zkTempZnodes.createSimpleZnode(balancePath,null);
    pathBuilder.append(ZKConst.servicePath);
    zkTempZnodes.createSimpleZnode(pathBuilder.toString(), null);
    Map<String, ?> serverImplMap = RPC.getServerConfig().getServerImplMap();
    for (Map.Entry<String, ?> entry : serverImplMap.entrySet()) {
        // zkTempZnodes.createSimpleZnode(balancePath+"/"+entry.getKey(),null);
        StringBuilder serviceBuilder = new StringBuilder(pathBuilder.toString());
        serviceBuilder.append("/");
        serviceBuilder.append(entry.getKey());
        zkTempZnodes.createSimpleZnode(serviceBuilder.toString(), null);
        serviceBuilder.append(ZKConst.providersPath);
        zkTempZnodes.createSimpleZnode(serviceBuilder.toString(), null);
    }
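}

Load-balancing strategies

Common load-balancing strategies include (weighted) random, round-robin, least connections, and consistent hashing.

/**
 * Created by wephone on 18-1-8.
 * Abstract interface for load-balancing strategies,
 * so that other modules are not coupled to the load-balancing code
 */
public interface LoadBalance {
    /**
     * Choose one of the service's available provider IPs
     * @param serviceName name of the service being called
     * @return the chosen provider IP
     */
    String chooseIP(String serviceName) throws ProvidersNoFoundException;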
}

/**
 * Created by wephone on 18-1-18.
 */
public class RandomBalance implements LoadBalance {
    @Override
    public String chooseIP(String serviceName) throws ProvidersNoFoundException {
        RPCRequestNet.getInstance().serviceLockMap.get(serviceName).readLock().lock();
        try {
            // Read and iterate the set while holding the read lock, so an IP update cannot interleave
            Set<String> ipSet = RPCRequestNet.getInstance().serviceNameInfoMap.get(serviceName).getServiceIPSet();
            int ipNum = ipSet.size();
            if (ipNum == 0) {
                throw new ProvidersNoFoundException();
            }
            Random random = new Random();
            // Random integer in [0, ipNum)
            int index = random.nextInt(ipNum);
            int count = 0;
            for (String ip : ipSet) {
                if (count == index) {
                    // Return the IP at the randomly chosen position
                    return ip;
                }
                count++;
            }
            return null;
        } finally {
            // Always release the read lock, including when no provider is found
            RPCRequestNet.getInstance().serviceLockMap.get(serviceName).readLock().unlock();
        }
    }
}

Server-side code

The server side is fairly simple: register its own IP node in ZooKeeper, then start the Netty server. The RPC.start method looks like this:

public static void start() throws InterruptedException, IOException {
    System.out.println("welcome to use MeiZhuoRPC");
    ZooKeeper zooKeeper = new ZKConnect().serverConnect();
    ZKServerService zkServerService = new ZKServerService(zooKeeper);
    try {
        zkServerService.initZnode();
        // Create the znodes for all provided services
        zkServerService.createServerService();
    } catch (KeeperException e) {
        e.printStackTrace();
    }
    // Blocks here, so the server does not exit
    RPCResponseNet.connect();
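}

Most of the server-side changes are here: read the configuration and register the provided services in ZooKeeper (initialize the nodes, then create the service nodes).

That is all the server has to do. The rest is the same as in the earlier 1.0 version: wait for consumers to connect.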
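Why the parent nodes are persistent while each provider's IP node is temporary can be illustrated with a toy in-memory model. This is not the ZooKeeper API: `ToyRegistry`, its methods, and the session ids are all hypothetical, and the model only assumes that a temporary node disappears when the session that created it ends.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Toy stand-in for a registry; it does NOT talk to ZooKeeper.
class ToyRegistry {
    private final Set<String> persistent = new TreeSet<>();
    // temporary node path -> id of the session that created it
    private final Map<String, String> ephemeralOwner = new TreeMap<>();

    void createPersistent(String path) {
        persistent.add(path);
    }

    void createEphemeral(String path, String sessionId) {
        ephemeralOwner.put(path, sessionId);
    }

    boolean exists(String path) {
        return persistent.contains(path) || ephemeralOwner.containsKey(path);
    }

    /** Ending a session removes every temporary node it created. */
    void closeSession(String sessionId) {
        ephemeralOwner.values().removeIf(sessionId::equals);
    }

    /** Names of the temporary nodes directly under `parent`. */
    List<String> ephemeralChildren(String parent) {
        String prefix = parent + "/";
        List<String> names = new ArrayList<>();
        for (String path : ephemeralOwner.keySet()) {
            if (path.startsWith(prefix) && path.indexOf('/', prefix.length()) < 0) {
                names.add(path.substring(prefix.length()));
            }
        }
        return names;
    }

    public static void main(String[] args) {
        String providers = "/MeiZhuoRPC/service/rpcTest.Service2/providers";
        ToyRegistry registry = new ToyRegistry();
        registry.createPersistent(providers);
        registry.createEphemeral(providers + "/127.0.0.1:8888", "session-A");
        registry.createEphemeral(providers + "/127.0.0.1:8889", "session-B");
        registry.closeSession("session-A"); // provider A goes away
        System.out.println(registry.ephemeralChildren(providers)); // [127.0.0.1:8889]
    }
}
```

In this model a provider that stops is dropped from the list automatically, while the service's providers node survives for the next registration.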

Now for the caller side

/**
 * Created by wephone on 18-1-8.
 * Holds the information for one service.
 * Stored in serviceNameInfoMap, which is keyed by service name
 */
public class ServiceInfo {
    // Used by the round-robin load-balancing strategy
    private AtomicInteger index = new AtomicInteger(0);
    // Provider IPs this service is connected to; only load-balancing classes should modify it
    private Set<String> serviceIPSet = new HashSet<>();

    // public void setServiceIPSet(Set serviceIPSet) {
    public void setServiceIPSet(List<String> newIPSet) {
        Set<String> set = new HashSet<>();
        set.addAll(newIPSet);
        this.serviceIPSet.clear();
        this.serviceIPSet.addAll(set);
    }

    public Set<String> getServiceIPSet() {
        return serviceIPSet;
    }

    public int getConnectIPSetCount() {
        return serviceIPSet.size();
    }

    public void addConnectIP(String IP) {
        serviceIPSet.add(IP);
    }

    public void removeConnectIP(String IP) {
        serviceIPSet.remove(IP);
    }
}

/**
 * Created by wephone on 18-1-8.
 * Channel information for one IP; used as the value type of IPChannelMap,
 * which maps each IP to its channel
 */
public class IPChannelInfo {
    private EventLoopGroup group;
    private Channel channel;
    // // Keeps the reference count correct under concurrent modification
    // private AtomicInteger serviceQuoteNum=new AtomicInteger(0); // an atomic variable needs an initial value

    public EventLoopGroup getGroup() {
        return group;
    }

    public void setGroup(EventLoopGroup group) {
        this.group = group;
    }

    public Channel getChannel() {
        return channel;
    }

    public void setChannel(Channel channel) {
        this.channel = channel;
    }
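}

public class RPCRequestNet {
    // Global map of per-request locks, used to wait synchronously for each asynchronous RPC request
    public Map requestLockMap = new ConcurrentHashMap();
    // One lock per IP, to avoid connecting to the same IP more than once
    public Map connectlock = new ConcurrentHashMap();
    // Service name -> service info
    public Map<String, ServiceInfo> serviceNameInfoMap = new ConcurrentHashMap<>();
    // IP address -> channel info for that IP
    public Map<String, IPChannelInfo> IPChannelMap = new ConcurrentHashMap<>();
    // One read-write lock per service: updating IPs is a write, choosing an IP for load balancing is a read
    public ConcurrentHashMap<String, ReadWriteLock> serviceLockMap = new ConcurrentHashMap<>();
    // public CountDownLatch countDownLatch=new CountDownLatch(1);
    private LoadBalance loadBalance;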
    private static RPCRequestNet instance;
    // ... (rest of the class omitted here)
}

With the basic classes covered, let's look at how the consumer side starts up

Once clientConfig obtains the IoC container, it fetches all available provider IPs and uses them as the initial value of each ServiceInfo:

@Override
public void setApplicationContext(ApplicationContext applicationContext) throws BeansException {
    RPC.clientContext = applicationContext;
    // With the IoC container available, read the services from the configuration
    try {
        ZooKeeper zooKeeper = new ZKConnect().clientConnect();
        ZKServerService zkServerService = new ZKServerService(zooKeeper);
        Set<String> services = RPC.getClientConfig().getServiceInterface();
        // Initialize all available IPs and the read-write locks
        for (String service : services) {
            List<String> ips = zkServerService.getAllServiceIP(service);
            for (String ip : ips) {
                RPCRequestNet.getInstance().IPChannelMap.putIfAbsent(ip, new IPChannelInfo());
            }
            ServiceInfo serviceInfo = new ServiceInfo();
            serviceInfo.setServiceIPSet(ips);
            ReadWriteLock readWriteLock = new ReentrantReadWriteLock();
            RPCRequestNet.getInstance().serviceLockMap.putIfAbsent(service, readWriteLock);
            RPCRequestNet.getInstance().serviceNameInfoMap.putIfAbsent(service, serviceInfo);
        }
    } catch (IOException | InterruptedException | KeeperException e) {
        e.printStackTrace();
    }
}

The ZooKeeper watcher

Only one watcher is used here, IPWatcher:

/**
 * Created by wephone on 18-1-7.
 * Watches the IP nodes of providers and callers, i.e. monitors service availability
 */
public class IPWatcher implements Watcher {
    private ZooKeeper zooKeeper;

    public IPWatcher(ZooKeeper zooKeeper) {
        this.zooKeeper = zooKeeper;
    }

    /**
     * Called when the provider IP nodes of a service change;
     * refreshes the IP set used for load balancing
     */
    @Override
    public void process(WatchedEvent watchedEvent) {
        String path = watchedEvent.getPath();
        if (path == null) {
            // Connection-state events carry no path; there is nothing to refresh
            return;
        }
        String[] pathArr = path.split("/");
        String serviceName = pathArr[3]; // the fourth segment is the service name
        RPCRequestNet.getInstance().serviceLockMap.get(serviceName).writeLock().lock();
        System.out.println("providers changed...Lock write Lock");
        try {
            // Passing this watcher again re-registers it for the next change
            List<String> children = zooKeeper.getChildren(path, this);
            for (String ip : children) {
                RPCRequestNet.getInstance().IPChannelMap.putIfAbsent(ip, new IPChannelInfo());
            }
            RPCRequestNet.getInstance().serviceNameInfoMap.get(serviceName).setServiceIPSet(children);
        } catch (KeeperException | InterruptedException e) {
            e.printStackTrace();
        } finally {
            // Release the write lock even if the refresh fails
            RPCRequestNet.getInstance().serviceLockMap.get(serviceName).writeLock().unlock();
        }
    }
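}

In other words, on receiving a watch notification it fetches the available IPs again, writes them into the ServiceInfo, and registers the watcher again.

I use a locking mechanism here: a read-write lock. When the IPs change, we rewrite the IP set in ServiceInfo.

While that happens, fetching an IP must be blocked so that callers do not get an IP node that no longer exists.

Hence the read-write lock: each RPC call takes the read lock when fetching an IP, so calls do not block one another; when the IPs change, the write lock is taken, which blocks readers until the update finishes.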
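The read-write lock pattern described here can be shown in isolation. The following is a minimal sketch, not the framework's code: `ProviderTable` and its method names are hypothetical, and it uses `ReentrantReadWriteLock` from java.util.concurrent with `try`/`finally` so that a lock is released even when an exception is thrown.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.List;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical holder for one service's provider addresses.
class ProviderTable {
    private final ReadWriteLock lock = new ReentrantReadWriteLock();
    private final List<String> addresses = new ArrayList<>();

    /** Writer: replaces the whole list, for example after a watch notification. */
    void replaceAll(Collection<String> fresh) {
        lock.writeLock().lock();
        try {
            addresses.clear();
            addresses.addAll(fresh);
        } finally {
            lock.writeLock().unlock();
        }
    }

    /** Reader: many threads may hold the read lock at once; a copy is returned. */
    List<String> snapshot() {
        lock.readLock().lock();
        try {
            return new ArrayList<>(addresses);
        } finally {
            lock.readLock().unlock();
        }
    }

    public static void main(String[] args) {
        ProviderTable table = new ProviderTable();
        table.replaceAll(Arrays.asList("127.0.0.1:8888", "127.0.0.1:8889"));
        table.replaceAll(Arrays.asList("127.0.0.1:8889")); // one provider went offline
        System.out.println(table.snapshot()); // [127.0.0.1:8889]
    }
}
```

Returning a copy means a caller never iterates the list while a writer is clearing it.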

Sending an RPC request works as follows:

public void send(RPCRequest request) throws ProvidersNoFoundException {
    String serviceName = request.getClassName();
    String ip = loadBalance.chooseIP(serviceName);
    Channel channel = connect(ip);
    // System.out.println("Send RPC Thread:"+Thread.currentThread().getName());
    // Encode the request as JSON
    String requestJson;
    try {
        requestJson = RPC.requestEncode(request);
    } catch (JsonProcessingException e) {
        // Nothing can be sent without an encoded request
        throw new IllegalStateException("failed to encode RPC request", e);
    }
    ByteBuf requestBuf = Unpooled.copiedBuffer(requestJson.getBytes());
    try {
        // Hold the request's monitor while sending, so the response handler
        // (which must own this monitor to call notify) cannot notify before we wait
        synchronized (request) {
            channel.writeAndFlush(requestBuf);
            // Release the monitor and block until notify. TODO: make the timeout configurable
            request.wait();
        }
    } catch (InterruptedException e) {
        // Restore the interrupt flag for the caller
        Thread.currentThread().interrupt();
    }
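}

In short: choose an IP, connect to it (if already connected, take the Channel from the map), and send. This is largely the same as in the previous versions.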
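The "connect once, then reuse" step can be sketched without Netty. This is an alternative formulation under stated assumptions, not the framework's `connect` method: `ConnectionCache` is a hypothetical class, the connection type is left generic, and `ConcurrentHashMap.computeIfAbsent` is used so that the connector runs at most once per address even when several threads ask at the same time.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical helper, not part of MeiZhuoRPC.
class ConnectionCache<C> {
    private final ConcurrentHashMap<String, C> connections = new ConcurrentHashMap<>();
    private final Function<String, C> connector;

    ConnectionCache(Function<String, C> connector) {
        this.connector = connector;
    }

    /** Returns the cached connection for the address, creating it on first use. */
    C get(String address) {
        return connections.computeIfAbsent(address, connector);
    }

    public static void main(String[] args) {
        AtomicInteger dials = new AtomicInteger();
        ConnectionCache<String> cache = new ConnectionCache<String>(address -> {
            dials.incrementAndGet();
            return "channel-to-" + address; // stands in for a real channel
        });
        cache.get("127.0.0.1:8888");
        cache.get("127.0.0.1:8888");
        System.out.println("connect attempts: " + dials.get()); // connect attempts: 1
    }
}
```

One trade-off to keep in mind: other threads that touch the same map bin wait while the connector runs, so a slow connect delays them; a separate per-IP lock avoids that at the cost of more code.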

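A final note

With the consistent-hash strategy, each caller maps to exactly one provider. When callers far outnumber providers, this greatly reduces the number of redundant long-lived connections; but when there are fewer callers than providers, some providers never receive any requests. In that case a strategy such as random is recommended, so that every provider gets a share of the load.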
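The "one caller maps to one provider" behaviour can be seen in a small hash-ring sketch. This is an illustration under assumptions, not MeiZhuoRPC's implementation: `ConsistentHashChooser`, the use of MD5 for hashing, the virtual-node count, and the caller id strings are all choices made for this example.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;
import java.util.Collection;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical consistent-hash chooser, for illustration only.
class ConsistentHashChooser {
    private final TreeMap<Long, String> ring = new TreeMap<>();

    ConsistentHashChooser(Collection<String> providers, int virtualNodes) {
        for (String provider : providers) {
            // Several points per provider spread it more evenly around the ring
            for (int i = 0; i < virtualNodes; i++) {
                ring.put(hash(provider + "#" + i), provider);
            }
        }
    }

    /** Maps a caller id to the first provider point at or after its hash, wrapping around. */
    String choose(String callerId) {
        if (ring.isEmpty()) {
            throw new IllegalStateException("no providers");
        }
        Map.Entry<Long, String> entry = ring.ceilingEntry(hash(callerId));
        return (entry != null ? entry : ring.firstEntry()).getValue();
    }

    /** First 8 bytes of the MD5 digest, packed into a long. */
    static long hash(String key) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5").digest(key.getBytes(StandardCharsets.UTF_8));
            long value = 0;
            for (int i = 0; i < 8; i++) {
                value = (value << 8) | (digest[i] & 0xFF);
            }
            return value;
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        ConsistentHashChooser chooser = new ConsistentHashChooser(
                Arrays.asList("10.0.0.1:8888", "10.0.0.2:8888", "10.0.0.3:8888"), 100);
        // The same caller always gets the same provider, so with a single caller
        // the other two providers never receive a request.
        System.out.println(chooser.choose("caller-1").equals(chooser.choose("caller-1"))); // true
    }
}
```

With many distinct caller ids the providers each receive some callers; with fewer callers than providers, some ring segments are never hit, which is the situation described above.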

Framework users can pick a load-balancing strategy based on the ratio of providers to callers.
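For comparison, a round-robin strategy is one of the simpler alternatives a user might pick. The sketch below is an assumption-laden illustration rather than the framework's code: `RoundRobinChooser` is a hypothetical class that keeps an `AtomicInteger` cursor, similar in spirit to the `index` field in ServiceInfo.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical round-robin chooser, for illustration only.
class RoundRobinChooser {
    private final AtomicInteger index = new AtomicInteger(0);

    /** Returns providers in turn; floorMod keeps the position valid after integer overflow. */
    String choose(List<String> providers) {
        if (providers.isEmpty()) {
            throw new IllegalStateException("no providers");
        }
        int position = Math.floorMod(index.getAndIncrement(), providers.size());
        return providers.get(position);
    }

    public static void main(String[] args) {
        RoundRobinChooser chooser = new RoundRobinChooser();
        List<String> providers = Arrays.asList("127.0.0.1:8888", "127.0.0.1:8889");
        for (int i = 0; i < 3; i++) {
            // prints 127.0.0.1:8888, then 127.0.0.1:8889, then 127.0.0.1:8888
            System.out.println(chooser.choose(providers));
        }
    }
}
```

Unlike consistent hashing, every provider receives requests even from a single caller, at the cost of that caller holding a connection to each provider.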

That covers the core code behind MeiZhuoRPC's support for a ZooKeeper registry.