彷徨 | MapReduce Example 4 | Counting How Many Times Each Word Appears in Each File

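Example: a directory contains several files, and the same words appear in each of them. Count how many times each word appears in each file.

In other words, compute the frequency of each word separately for every file (a simple inverted index).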

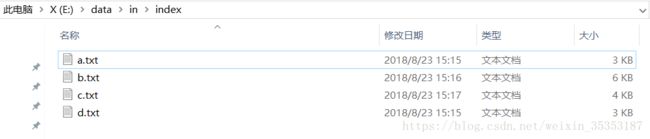

The directory layout:

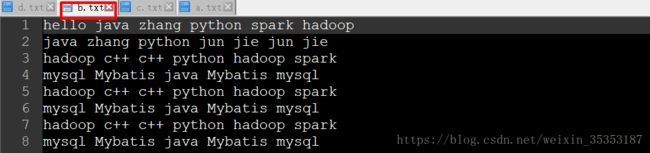

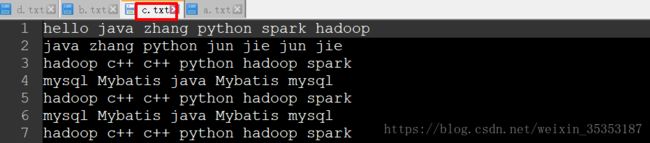

Excerpts from the files:

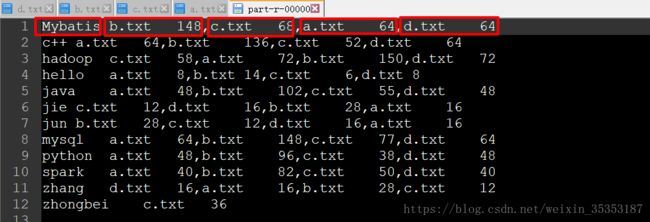

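Required result: the frequency of each word in each of the files.

Approach:

Step 1: use the word together with the file name as the key and the number of occurrences as the value. This gives the count of each word in each file.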

Example:

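```text
Mybatis-a.txt 64
Mybatis-b.txt 148
Mybatis-c.txt 68
Mybatis-d.txt 64
c++-a.txt 64
c++-b.txt 136
c++-c.txt 52
c++-d.txt 64
```

Step 2: then use the word alone as the key and the file name plus count as the value, joining all of a word's entries into one line.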

Example:

```text
Mybatis b.txt 148,c.txt 68,a.txt 64,d.txt 64
c++ a.txt 64,b.txt 136,c.txt 52,d.txt 64
hadoop c.txt 58,a.txt 72,b.txt 150,d.txt 72
hello a.txt 8,b.txt 14,c.txt 6,d.txt 8
java a.txt 48,b.txt 102,c.txt 55,d.txt 48
jie c.txt 12,d.txt 16,b.txt 28,a.txt 16
jun b.txt 28,c.txt 12,d.txt 16,a.txt 16
mysql a.txt 64,b.txt 148,c.txt 77,d.txt 64
python a.txt 48,b.txt 96,c.txt 38,d.txt 48
spark a.txt 40,b.txt 82,c.txt 50,d.txt 40
zhang d.txt 16,a.txt 16,b.txt 28,c.txt 12
zhongbei c.txt 36
```

Implementation:

Step 1:

```java
package hadoop_day06.createIndex;

import java.io.File;
import java.io.IOException;

import org.apache.commons.io.FileUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class CreateIndexOne {

    // Input:  738273  hello hello hadoop   (key = byte offset, value = line)
    // Output: hello-a.txt 1, hello-a.txt 1, hadoop-a.txt 1
    public static class MapTask extends Mapper<LongWritable, Text, Text, IntWritable> {

        private String pathName = null;
        private final Text outKey = new Text();
        private final IntWritable one = new IntWritable(1);

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            // Name of the file this split comes from, e.g. "a.txt"
            FileSplit fileSplit = (FileSplit) context.getInputSplit();
            pathName = fileSplit.getPath().getName();
        }

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Split on runs of whitespace so repeated spaces don't produce empty "words"
            String[] words = value.toString().trim().split("\\s+");
            for (String word : words) {
                if (word.isEmpty()) {
                    continue;
                }
                outKey.set(word + "-" + pathName);
                context.write(outKey, one);
            }
        }
    }

    public static class ReduceTask extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Sum the values instead of counting them, so the result stays
            // correct if this class is also registered as a combiner
            int count = 0;
            for (IntWritable v : values) {
                count += v.get();
            }
            context.write(key, new IntWritable(count));
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf, "indexOne");

        // Mapper, reducer, and the jar to submit
        job.setMapperClass(MapTask.class);
        job.setReducerClass(ReduceTask.class);
        job.setJarByClass(CreateIndexOne.class);

        // Output types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // Input and output paths
        FileInputFormat.addInputPath(job, new Path("E:\\data\\in\\index"));
        FileOutputFormat.setOutputPath(job, new Path("E:\\data\\out\\indexOne"));

        // Delete the output directory if it already exists (local file system only)
        File file = new File("E:\\data\\out\\indexOne");
        if (file.exists()) {
            FileUtils.deleteDirectory(file);
        }

        // Submit the job and wait for it to finish
        boolean completion = job.waitForCompletion(true);
        System.out.println(completion ? "You're awesome!!!" : "Go fix your bugs!!!");
    }
}
```
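To sanity-check the step-1 logic without a Hadoop cluster, the map, shuffle, and reduce phases can be simulated in plain Java. This is a minimal sketch, not the job itself: the class name `StepOneSketch` and the sample file contents are hypothetical, and a `TreeMap` stands in for the shuffle's sort-and-group.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Plain-Java simulation of the step-1 job (no Hadoop required).
 * Input:  file name -> file contents (hypothetical sample data).
 * Output: "word-fileName" -> number of occurrences.
 */
class StepOneSketch {

    // "Map" phase: emit (word-fileName, 1) for every token in one file
    static List<Map.Entry<String, Integer>> map(String fileName, String contents) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String line : contents.split("\n")) {
            for (String word : line.trim().split("\\s+")) {
                if (!word.isEmpty()) {
                    out.add(new AbstractMap.SimpleEntry<>(word + "-" + fileName, 1));
                }
            }
        }
        return out;
    }

    // Shuffle + "reduce" phase: group identical keys and sum their 1s;
    // TreeMap keeps keys sorted, like the keys a reducer receives
    static Map<String, Integer> run(Map<String, String> files) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, String> file : files.entrySet()) {
            for (Map.Entry<String, Integer> kv : map(file.getKey(), file.getValue())) {
                counts.merge(kv.getKey(), kv.getValue(), Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, String> files = new TreeMap<>();
        files.put("a.txt", "hello hello hadoop");
        files.put("b.txt", "hello java");
        // prints hadoop-a.txt 1, hello-a.txt 2, hello-b.txt 1, java-b.txt 1 (one per line, tab-separated)
        run(files).forEach((k, v) -> System.out.println(k + "\t" + v));
    }
}
```

`counts.merge(..., Integer::sum)` plays the role of the reducer's summing loop.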

Step 2:

```java
package hadoop_day06.createIndex;

import java.io.File;
import java.io.IOException;

import org.apache.commons.io.FileUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class CreateIndexTwo {

    public static class MapTask extends Mapper<LongWritable, Text, Text, Text> {

        private final Text outKey = new Text();
        private final Text outValue = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Input line from step 1: "c#-a.txt<TAB>64"
            String line = value.toString();
            // Split on the last '-' so hyphenated words stay whole
            // (assumes file names contain no '-')
            int dash = line.lastIndexOf('-');
            if (dash < 0) {
                return; // skip malformed lines
            }
            String word = line.substring(0, dash);
            // "a.txt<TAB>64" -> "a.txt 64"
            String nameNum = line.substring(dash + 1).replace('\t', ' ');
            outKey.set(word);
            outValue.set(nameNum);
            context.write(outKey, outValue);
        }
    }

    public static class ReduceTask extends Reducer<Text, Text, Text, Text> {

        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // Join all "file count" entries for this word with commas
            StringBuilder sb = new StringBuilder();
            boolean first = true;
            for (Text text : values) {
                if (!first) {
                    sb.append(",");
                }
                sb.append(text.toString());
                first = false;
            }
            context.write(key, new Text(sb.toString()));
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf, "indexTwo");

        // Mapper, reducer, and the jar to submit
        job.setMapperClass(MapTask.class);
        job.setReducerClass(ReduceTask.class);
        job.setJarByClass(CreateIndexTwo.class);

        // Output types
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);

        // Input is the output directory of step 1
        FileInputFormat.addInputPath(job, new Path("E:\\data\\out\\indexOne"));
        FileOutputFormat.setOutputPath(job, new Path("E:\\data\\out\\indexTwo"));

        // Delete the output directory if it already exists (local file system only)
        File file = new File("E:\\data\\out\\indexTwo");
        if (file.exists()) {
            FileUtils.deleteDirectory(file);
        }

        // Submit the job and wait for it to finish
        boolean completion = job.waitForCompletion(true);
        System.out.println(completion ? "You're awesome!!!" : "Go fix your bugs!!!");
    }
}
```
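The step-2 grouping can likewise be checked locally. This minimal sketch (hypothetical class name `StepTwoSketch`) takes step-1 output lines of the form `word-fileName<TAB>count`, splits each on its last `-` (assuming file names contain no `-`), and joins a word's entries with commas. It is a plain-Java simulation under those assumptions, not the Hadoop job.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;
import java.util.TreeMap;

/**
 * Plain-Java simulation of the step-2 job (no Hadoop required).
 * Input:  step-1 output lines "word-fileName<TAB>count".
 * Output: word -> "fileName count,fileName count,...".
 */
class StepTwoSketch {

    static Map<String, String> run(List<String> stepOneLines) {
        // TreeMap keeps words sorted, like the keys a reducer receives
        Map<String, StringJoiner> grouped = new TreeMap<>();
        for (String line : stepOneLines) {
            // Split on the LAST '-' so words such as "well-known" stay whole;
            // this assumes file names contain no '-'
            int dash = line.lastIndexOf('-');
            if (dash < 0) {
                continue; // skip malformed lines
            }
            String word = line.substring(0, dash);
            String fileAndCount = line.substring(dash + 1).replace('\t', ' ');
            grouped.computeIfAbsent(word, w -> new StringJoiner(",")).add(fileAndCount);
        }
        // Turn each joiner into its final comma-separated string
        Map<String, String> result = new LinkedHashMap<>();
        grouped.forEach((word, joiner) -> result.put(word, joiner.toString()));
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "c++-a.txt\t64", "c++-b.txt\t136", "c++-c.txt\t52", "c++-d.txt\t64");
        // prints: c++<TAB>a.txt 64,b.txt 136,c.txt 52,d.txt 64
        run(lines).forEach((word, files) -> System.out.println(word + "\t" + files));
    }
}
```

The sketch keeps entries in input order. In the real job, Hadoop does not guarantee the order in which a word's values reach the reducer, which is why the file order varies from word to word in the step-2 example output; sorting the joined entries in the reducer would make it deterministic.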

Output of step 1:

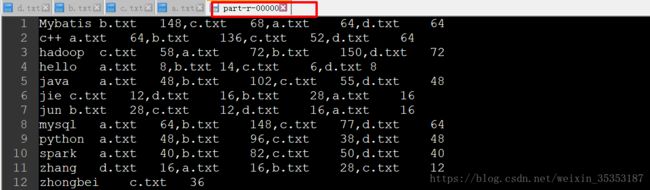

Output of step 2:

Final result: