Stream Computing: Integrating Kafka + Flume + Storm

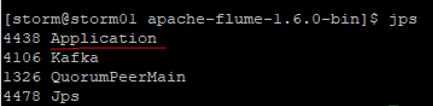

This post builds on the Kafka and Storm clusters set up earlier.

1. Data flow

Log system => Flume => Kafka => Storm

2. Installing Flume

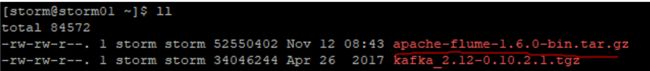

1. Install Flume 1.6.0 on storm01. First upload the installation package.

2. Create the directory /export/servers/flume, then extract the package into it.

Command: sudo tar -zxvf apache-flume-1.6.0-bin.tar.gz -C /export/servers/flume/

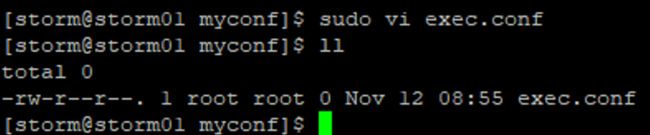

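3. To configure log collection, create a myconf folder under the conf directory.

4. Create the configuration file in the myconf folder (exec.conf, the name the start command in step 5 refers to).

Contents of the configuration file, which mainly tails the log file: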

    agent.sources = s1
    agent.channels = c1
    agent.sinks = k1

    agent.sources.s1.type=exec
    agent.sources.s1.command=tail -F /export/data/flume_source/click_log/1.log
    agent.sources.s1.channels=c1

    agent.channels.c1.type=memory
    agent.channels.c1.capacity=10000
    agent.channels.c1.transactionCapacity=100

    #Kafka sink
    agent.sinks.k1.type=org.apache.flume.sink.kafka.KafkaSink
    #Kafka broker address and port
    agent.sinks.k1.brokerList=kafka01:9092
    #Kafka topic
    agent.sinks.k1.topic=orderMq
    #Serialization
    agent.sinks.k1.serializer.class=kafka.serializer.StringEncoder
    agent.sinks.k1.channel=c1
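An easy mistake in an agent file like this is a source or sink that points at a channel the agent never declares. As a rough sketch only (FlumeWiringCheck and its check method are hypothetical helpers written for this post, not part of Flume), the wiring can be sanity-checked with plain java.util.Properties before starting the agent:

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Properties;
import java.util.Set;

// Hypothetical helper, not part of Flume: verifies that every source and sink
// of an agent refers only to channels that the agent declares.
class FlumeWiringCheck {

    // Returns human-readable problems; an empty list means the wiring is consistent.
    static List<String> check(String agent, String configText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(configText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }

        Set<String> channels = names(props, agent + ".channels");
        List<String> problems = new ArrayList<>();

        // A source may feed several channels: <agent>.sources.<name>.channels
        for (String source : names(props, agent + ".sources")) {
            String key = agent + ".sources." + source + ".channels";
            Set<String> targets = names(props, key);
            if (targets.isEmpty()) {
                problems.add(key + " is missing");
            }
            for (String ch : targets) {
                if (!channels.contains(ch)) {
                    problems.add(key + " -> undeclared channel " + ch);
                }
            }
        }

        // A sink drains exactly one channel: <agent>.sinks.<name>.channel
        for (String sink : names(props, agent + ".sinks")) {
            String key = agent + ".sinks." + sink + ".channel";
            String ch = props.getProperty(key, "").trim();
            if (ch.isEmpty()) {
                problems.add(key + " is missing");
            } else if (!channels.contains(ch)) {
                problems.add(key + " -> undeclared channel " + ch);
            }
        }
        return problems;
    }

    // Splits a whitespace-separated property value into an ordered set of names.
    private static Set<String> names(Properties props, String key) {
        Set<String> result = new LinkedHashSet<>();
        String value = props.getProperty(key, "").trim();
        if (!value.isEmpty()) {
            result.addAll(Arrays.asList(value.split("\\s+")));
        }
        return result;
    }

    public static void main(String[] args) {
        String conf = String.join("\n",
                "agent.sources = s1",
                "agent.channels = c1",
                "agent.sinks = k1",
                "agent.sources.s1.channels=c1",
                "agent.sinks.k1.channel=c1");
        System.out.println(check("agent", conf)); // prints []
    }
}
```

The check only looks at names, so it says nothing about whether the Kafka broker or the tailed file actually exists.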

Prepare the directory to be monitored:

/export/data/flume_source/click_log

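5. Start command:

bin/flume-ng agent -n agent -c ./conf -f ./conf/myconf/exec.conf -Dflume.root.logger=INFO,console

6. Test that data flows correctly from Flume to Kafka.

Consume with the Kafka console consumer:

bin/kafka-console-consumer.sh --zookeeper zk01:2181 --from-beginning --topic orderMq

To consume only new messages (from the latest offset) instead of starting from the beginning, omit --from-beginning:

bin/kafka-console-consumer.sh --zookeeper zk01:2181 --topic orderMq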

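Write a script that simulates log production:

vi click_log_out.sh

    #!/bin/bash
    # Append 50000 test messages to the log file that Flume tails.
    for ((i = 0; i < 50000; i++)); do
        echo "message-$i" >> /export/data/flume_source/click_log/1.log
    done

sudo chmod u+x click_log_out.sh

Run the script with ./click_log_out.sh (the for ((...)) loop is bash syntax, so a plain POSIX sh may reject it). The consumer on the other side then starts receiving data: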

This shows that data flows from Flume to Kafka without problems.
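If a shell loop is inconvenient (for example when driving the test from an IDE), the same test data could be produced from Java. This is only a sketch: the class name ClickLogGenerator and its arguments are made up for illustration, and the log path is passed in rather than hard-coded.

```java
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;

// Hypothetical stand-in for click_log_out.sh: appends numbered test messages
// to the file that the Flume exec source is tailing.
class ClickLogGenerator {

    // Appends "message-0" .. "message-(count-1)", one per line, creating the file if needed.
    static void appendMessages(Path logFile, int count) {
        try (BufferedWriter out = Files.newBufferedWriter(logFile, StandardCharsets.UTF_8,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND)) {
            for (int i = 0; i < count; i++) {
                out.write("message-" + i);
                out.write('\n');
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        if (args.length == 0) {
            System.out.println("usage: ClickLogGenerator <log-file> [count]");
            return;
        }
        int count = args.length > 1 ? Integer.parseInt(args[1]) : 50000;
        appendMessages(Paths.get(args[0]), count);
    }
}
```

Run against this setup, the log-file argument would be /export/data/flume_source/click_log/1.log.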

3. Data from Kafka to Storm

Create a new Maven project and add the dependencies:

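    <project>
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.wx</groupId>
        <artifactId>stormkafka</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>org.apache.storm</groupId>
                <artifactId>storm-core</artifactId>
                <version>0.9.5</version>
            </dependency>
            <dependency>
                <groupId>org.apache.storm</groupId>
                <artifactId>storm-kafka</artifactId>
                <version>0.9.5</version>
            </dependency>
            <dependency>
                <groupId>org.clojure</groupId>
                <artifactId>clojure</artifactId>
                <version>1.5.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.kafka</groupId>
                <artifactId>kafka_2.8.2</artifactId>
                <version>0.8.1</version>
                <exclusions>
                    <exclusion>
                        <artifactId>jmxtools</artifactId>
                        <groupId>com.sun.jdmk</groupId>
                    </exclusion>
                    <exclusion>
                        <artifactId>jmxri</artifactId>
                        <groupId>com.sun.jmx</groupId>
                    </exclusion>
                    <exclusion>
                        <artifactId>jms</artifactId>
                        <groupId>javax.jms</groupId>
                    </exclusion>
                    <exclusion>
                        <groupId>org.apache.zookeeper</groupId>
                        <artifactId>zookeeper</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>org.slf4j</groupId>
                        <artifactId>slf4j-log4j12</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>org.slf4j</groupId>
                        <artifactId>slf4j-api</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <artifactId>maven-assembly-plugin</artifactId>
                    <configuration>
                        <descriptorRefs>
                            <descriptorRef>jar-with-dependencies</descriptorRef>
                        </descriptorRefs>
                        <archive>
                            <manifest>
                                <mainClass>cn.itcast.bigdata.hadoop.mapreduce.wordcount.WordCount</mainClass>
                            </manifest>
                        </archive>
                    </configuration>
                    <executions>
                        <execution>
                            <id>make-assembly</id>
                            <phase>package</phase>
                            <goals>
                                <goal>single</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <configuration>
                        <source>1.7</source>
                        <target>1.7</target>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </project>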

Write a topology:

    package com.wx.kafkaandstorm;

    import backtype.storm.Config;
    import backtype.storm.LocalCluster;
    import backtype.storm.StormSubmitter;
    import backtype.storm.topology.TopologyBuilder;
    import storm.kafka.KafkaSpout;
    import storm.kafka.SpoutConfig;
    import storm.kafka.ZkHosts;

    public class KafkaAndStormTopologyMain {
        public static void main(String[] args) throws Exception {
            TopologyBuilder topologyBuilder = new TopologyBuilder();
            // SpoutConfig arguments: ZooKeeper hosts, topic, ZooKeeper root path, consumer id
            topologyBuilder.setSpout("kafkaSpout",
                    new KafkaSpout(new SpoutConfig(
                            new ZkHosts("192.168.25.130:2181,192.168.25.131:2181,192.168.25.132:2181"),
                            "orderMq",
                            "/myKafka",
                            "kafkaSpout")), 1);
            topologyBuilder.setBolt("mybolt1", new ParserOrderMqBolt(), 1).shuffleGrouping("kafkaSpout");

            Config config = new Config();
            config.setNumWorkers(1);

            // Submit the topology: cluster mode if a topology name is passed, otherwise local mode
            if (args.length > 0) {
                StormSubmitter.submitTopology(args[0], config, topologyBuilder.createTopology());
            } else {
                LocalCluster localCluster = new LocalCluster();
                localCluster.submitTopology("storm2kafka", config, topologyBuilder.createTopology());
            }
        }
    }

Write a Bolt that receives the data from Kafka:

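    package com.wx.kafkaandstorm;

    import backtype.storm.task.OutputCollector;
    import backtype.storm.task.TopologyContext;
    import backtype.storm.topology.OutputFieldsDeclarer;
    import backtype.storm.topology.base.BaseRichBolt;
    import backtype.storm.tuple.Tuple;

    import java.util.Map;

    public class ParserOrderMqBolt extends BaseRichBolt {
        private OutputCollector collector;

        @Override
        public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
            // Keep the collector so tuples can be acknowledged in execute()
            this.collector = outputCollector;
        }

        @Override
        public void execute(Tuple tuple) {
            // The KafkaSpout emits each message as raw bytes in the first field.
            // println is used instead of printf so a '%' in the message is not treated as a format specifier.
            System.out.println(new String((byte[]) tuple.getValue(0)));
            // BaseRichBolt does not ack automatically; ack so the spout does not replay the tuple
            collector.ack(tuple);
        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
            // This bolt ends the stream and emits nothing
        }
    }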
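The bolt turns the raw bytes into text with new String(byte[]), which uses the platform default charset, so the worker nodes and the machine that wrote the log need to agree on it. A minimal sketch of decoding with an explicit charset follows, assuming the log lines are UTF-8 (the post does not say); MessageDecoder is a hypothetical helper, not part of Storm:

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper: converts a tuple value received from the Kafka spout into text.
class MessageDecoder {

    // Decodes raw bytes as UTF-8 (an assumption about the log encoding);
    // any other value type falls back to its string form.
    static String decode(Object value) {
        if (value instanceof byte[]) {
            return new String((byte[]) value, StandardCharsets.UTF_8);
        }
        return String.valueOf(value);
    }

    public static void main(String[] args) {
        byte[] raw = "message-42".getBytes(StandardCharsets.UTF_8);
        System.out.println(decode(raw)); // prints message-42
    }
}
```

Inside execute() this would be called as MessageDecoder.decode(tuple.getValue(0)). Another option, as far as I know, is to set the spout's scheme to storm-kafka's StringScheme so the bolt receives a String directly.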

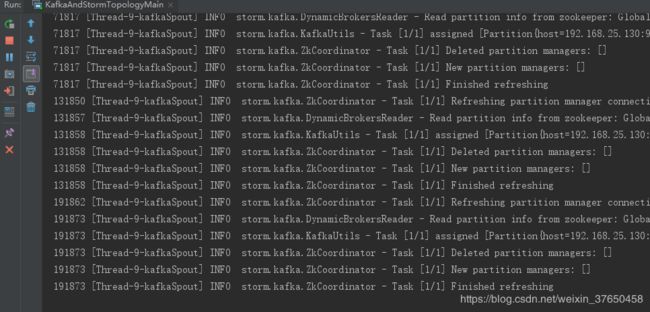

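4. End-to-end test:

Start the log-producing script:

Data also flows from Kafka to Storm:

Running locally works fine, but after packaging the job and uploading it to run on the cluster, it failed with an error mentioning backtype.storm.topology.IRichSpout

This kind of error means either a missing jar or a jar conflict. Since the job runs locally, a missing jar is unlikely, so it should be a conflict. But which jar? The cluster runs Storm 1.0.6, while the Storm jar used locally is 0.9.5, so I switched the local Storm dependencies to 1.0.6. Note that Storm 1.x moved its classes from backtype.storm to org.apache.storm (and storm-kafka's from storm.kafka to org.apache.storm.kafka), so the imports in the code have to be updated to match. It still failed, this time because the clojure version was too low: Storm 1.0 and later needs clojure 1.7. After changing that version as well, both the local test and the cluster test pass.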
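When it is unclear which Storm generation a classpath actually contains, a quick probe can help. The sketch below is only an illustration (StormPackageProbe is a made-up name): it checks whether the IRichSpout interface is loadable under the old backtype.storm package, the newer org.apache.storm package, both, or neither.

```java
// Hypothetical diagnostic: reports which Storm package layout is visible on the classpath.
class StormPackageProbe {

    // Returns true if the named class can be located without initializing it.
    static boolean isPresent(String className) {
        try {
            Class.forName(className, false, StormPackageProbe.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    // Summarizes which IRichSpout variants are loadable.
    static String detect() {
        boolean legacy = isPresent("backtype.storm.topology.IRichSpout");
        boolean current = isPresent("org.apache.storm.topology.IRichSpout");
        if (legacy && current) {
            return "both package layouts found: conflicting Storm jars on the classpath";
        }
        if (current) {
            return "org.apache.storm found: Storm 1.x style jars";
        }
        if (legacy) {
            return "backtype.storm found: pre-1.0 Storm jars";
        }
        return "no Storm classes found";
    }

    public static void main(String[] args) {
        System.out.println(detect());
    }
}
```

Running it with the same classpath as the topology (locally, or from inside the assembled jar) shows whether the code and the Storm jars agree on the package names.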

The final pom file:

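    <project>
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.wx</groupId>
        <artifactId>stormkafka</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>org.apache.storm</groupId>
                <artifactId>storm-core</artifactId>
                <version>1.0.6</version>
                <scope>provided</scope>
            </dependency>
            <dependency>
                <groupId>org.apache.storm</groupId>
                <artifactId>storm-kafka</artifactId>
                <version>1.0.6</version>
            </dependency>
            <dependency>
                <groupId>org.clojure</groupId>
                <artifactId>clojure</artifactId>
                <version>1.7.0</version>
            </dependency>
            <dependency>
                <groupId>org.apache.kafka</groupId>
                <artifactId>kafka_2.8.2</artifactId>
                <version>0.8.1</version>
                <exclusions>
                    <exclusion>
                        <artifactId>jmxtools</artifactId>
                        <groupId>com.sun.jdmk</groupId>
                    </exclusion>
                    <exclusion>
                        <artifactId>jmxri</artifactId>
                        <groupId>com.sun.jmx</groupId>
                    </exclusion>
                    <exclusion>
                        <artifactId>jms</artifactId>
                        <groupId>javax.jms</groupId>
                    </exclusion>
                    <exclusion>
                        <groupId>org.apache.zookeeper</groupId>
                        <artifactId>zookeeper</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>org.slf4j</groupId>
                        <artifactId>slf4j-log4j12</artifactId>
                    </exclusion>
                    <exclusion>
                        <groupId>org.slf4j</groupId>
                        <artifactId>slf4j-api</artifactId>
                    </exclusion>
                </exclusions>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <artifactId>maven-assembly-plugin</artifactId>
                    <configuration>
                        <descriptorRefs>
                            <descriptorRef>jar-with-dependencies</descriptorRef>
                        </descriptorRefs>
                        <archive>
                            <manifest>
                                <mainClass>com.wx.kafkaandstorm.KafkaAndStormTopologyMain</mainClass>
                            </manifest>
                        </archive>
                    </configuration>
                    <executions>
                        <execution>
                            <id>make-assembly</id>
                            <phase>package</phase>
                            <goals>
                                <goal>single</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <configuration>
                        <source>1.7</source>
                        <target>1.7</target>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </project>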