Running and Training YOLOv3 with TensorFlow 2

This post is mainly a refresher on YOLOv3. It assumes you already have some familiarity with the model and have read through it at least once.

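YOLOv3 is an end-to-end model. To make it easier to understand, I split it into two parts: a feature extraction network and a localization network. The feature extraction network is Darknet-53, so named because it has 53 convolutional layers. The localization network outputs localization results at three scales, based on a pyramid structure.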

Feature extraction network:

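Let's look at Darknet-53 first. It has 53 convolutional layers (leaving out the final FC layer, 52 convolutions serve as the backbone) and uses residual connections. The figure below visualizes the network.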

Two details are worth noting here:

- When a DBL block in Darknet-53 has strides of 1, padding defaults to 'same'. (See the Darknetconv2D_BN_leaky and Res_unit modules in the figure above.)

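- When a DBL block in Darknet-53 has strides of 2, padding defaults to 'valid', but a zero-padding step runs first: one row of zeros is added at the top and one column of zeros on the left, so that the convolution halves both the height and the width. (See Resblock_body in the figure.)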
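The effect of the two padding rules on the feature-map size can be checked with a little arithmetic. The snippet below is a minimal sketch in plain Python; `conv_out_size` is a hypothetical helper written for this post, not a Keras function, and it assumes the 3x3 kernels used throughout Darknet-53.

```python
def conv_out_size(size, kernel, stride, pad_before=0, pad_after=0):
    """Output length of a 'valid' convolution along one axis after explicit zero padding."""
    padded = size + pad_before + pad_after
    return (padded - kernel) // stride + 1

# strides 1, 3x3 kernel: 'same' padding behaves like one zero on each side
same_out = conv_out_size(416, kernel=3, stride=1, pad_before=1, pad_after=1)

# strides 2, 3x3 kernel: one zero on the top/left only, then a 'valid' convolution
down_out = conv_out_size(416, kernel=3, stride=2, pad_before=1, pad_after=0)

print(same_out, down_out)  # 416 208
```

With strides of 1 the size is preserved, and with strides of 2 an even input size is halved exactly, which is what keeps the 13 / 26 / 52 grids aligned.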

Localization network:

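In a neural network, the deeper a layer is, the larger its receptive field, but the more information is lost. To balance detection quality across objects of different sizes, YOLOv3 produces multi-scale feature outputs based on a pyramid structure (in the spirit of feature pyramid networks): one set of features comes from a shallower part of Darknet-53, one from the middle, and one from the deepest part. These three sets of features are processed by further layers and finally yield localization results at three different scales. The figure below shows the structure of the localization part of the network.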

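- y1, y2, and y3 are the detection results at the three scales. For example, the shape 13 * 13 * 255 of y1 means the image is divided evenly into 13 * 13 grid cells, and 255 holds the predictions for each cell. YOLOv3 predicts 3 boxes per grid cell; each box needs the five basic parameters (x, y, w, h, confidence) plus the probabilities of 80 classes, so 3 × (5 + 80) = 255.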

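- The y1, y2, and y3 outputs are not normalized (they are raw convolution outputs), so [x, y, w, h, confidence, classes] must be normalized. [x, y, confidence, classes] are squashed to 0 ~ 1 with a sigmoid, and [x, y] additionally get the offsets Cx (the column index of the grid cell) and Cy (the row index of the grid cell) added. [w, h] are normalized with the prior anchor box (width of prior anchor box * np.exp(w) and height of prior anchor box * np.exp(h)). w and h do not use a sigmoid for two reasons: (1) the box may be larger than the prior anchor box, giving a ratio greater than 1, while the sigmoid only covers 0 ~ 1; (2) YOLOv3 wants to tie the prediction to the prior anchor box.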
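To make the shape arithmetic from the first bullet concrete, here is a small sketch in plain Python. The function name `yolo_output_shapes` is hypothetical; it assumes a 416 x 416 input, 80 classes, 3 boxes per cell, and the downsampling factors 32, 16, and 8 that produce the 13, 26, and 52 grids.

```python
def yolo_output_shapes(input_size=416, num_classes=80, boxes_per_cell=3, strides=(32, 16, 8)):
    """Return the (grid_h, grid_w, channels) shape of each output scale."""
    channels = boxes_per_cell * (5 + num_classes)  # (x, y, w, h, confidence) + classes
    return [(input_size // s, input_size // s, channels) for s in strides]

shapes = yolo_output_shapes()
# every grid cell predicts 3 boxes, so count the candidate boxes over all scales
total_boxes = sum(h * w * 3 for h, w, _ in shapes)

print(shapes)       # [(13, 13, 255), (26, 26, 255), (52, 52, 255)]
print(total_boxes)  # 10647
```

The network therefore proposes 10647 candidate boxes per image, which is why the filtering and suppression steps later on matter.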

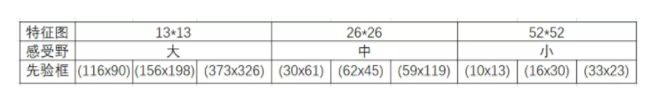
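The decoding rules for a single box can be sketched in a few lines. This is an illustration in plain Python rather than the code used later in this post; `decode_box` and its parameter names are assumptions, and the result is expressed as a fraction of the image width and height.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def decode_box(tx, ty, tw, th, col, row, grid_w, grid_h, anchor_w, anchor_h, net_w, net_h):
    """Turn raw outputs (tx, ty, tw, th) of one cell into a normalized center/size box."""
    bx = (col + sigmoid(tx)) / grid_w      # cell offset Cx plus sigmoid, then normalize
    by = (row + sigmoid(ty)) / grid_h      # cell offset Cy plus sigmoid, then normalize
    bw = anchor_w * math.exp(tw) / net_w   # anchor width scaled by exp(tw)
    bh = anchor_h * math.exp(th) / net_h   # anchor height scaled by exp(th)
    return bx, by, bw, bh

# all-zero raw outputs in cell (6, 6) of the 13 x 13 grid with the (116, 90) anchor
bx, by, bw, bh = decode_box(0.0, 0.0, 0.0, 0.0, col=6, row=6, grid_w=13, grid_h=13,
                            anchor_w=116, anchor_h=90, net_w=416, net_h=416)
print(bx, by)  # 0.5 0.5
```

Raw outputs of zero place the box center in the middle of its cell and give it exactly the size of the anchor, so the network only has to learn corrections relative to the prior.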

- The sizes of the prior anchor boxes are defined in the figure below; they were obtained by running K-Means on the training set.

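- Localization proceeds as follows: (1) for each bounding box, compute the highest class confidence, np.max(confidence * probabilities of each class); (2) discard every bounding box whose confidence is too low; (3) run non-max suppression on the remaining bounding boxes, separately for each class.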
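Steps (2) and (3) can be sketched for a single class as follows. This is a simplified greedy version in plain Python, not the implementation used later in this post; the names `iou` and `nms_one_class` and the threshold defaults are assumptions, and each score stands for confidence multiplied by the class probability.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms_one_class(boxes, scores, score_thresh=0.6, iou_thresh=0.5):
    """Return the indices of the boxes kept for one class."""
    # step 2: drop low-confidence boxes, then visit the rest from best to worst
    order = sorted((i for i, s in enumerate(scores) if s > score_thresh),
                   key=lambda i: -scores[i])
    keep = []
    # step 3: keep a box only if it does not overlap a better box that was kept
    for i in order:
        if all(iou(boxes[i], boxes[k]) < iou_thresh for k in keep):
            keep.append(i)
    return keep

boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 300, 300), (0, 0, 50, 50)]
scores = [0.9, 0.8, 0.7, 0.3]
kept = nms_one_class(boxes, scores)
print(kept)  # [0, 2]
```

Box 1 is suppressed because it overlaps the higher-scoring box 0 almost completely, and box 3 never reaches the suppression step because its score is below the threshold.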

The prediction code follows.

YOLOv3 network architecture

# based on https://github.com/experiencor/keras-yolo3
import struct

import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import *
from tensorflow.keras.models import *

def _conv_block(inp, convs, skip=True):
    x = inp
    count = 0
    for conv in convs:
        # remember the input of the last two convolutions for the residual connection
        if count == (len(convs) - 2) and skip:
            skip_connection = x
        count += 1
        # peculiar padding, as darknet prefers left and top; a tuple of 2 tuples of
        # 2 ints is interpreted as ((top_pad, bottom_pad), (left_pad, right_pad))
        if conv['stride'] > 1:
            x = ZeroPadding2D(((1, 0), (1, 0)))(x)
        x = Conv2D(conv['filter'],
                   conv['kernel'],
                   strides=conv['stride'],
                   padding='valid' if conv['stride'] > 1 else 'same',
                   name='conv_' + str(conv['layer_idx']),
                   use_bias=not conv['bnorm'])(x)
        if conv['bnorm']:
            x = BatchNormalization(epsilon=0.001, name='bnorm_' + str(conv['layer_idx']))(x)
        if conv['leaky']:
            x = LeakyReLU(alpha=0.1, name='leaky_' + str(conv['layer_idx']))(x)
    return add([skip_connection, x]) if skip else x

def make_yolov3_model():
    input_image = Input(shape=(None, None, 3))

    # Layer 0 => 4
    x = _conv_block(input_image, [{'filter': 32, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 0},
                                  {'filter': 64, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True, 'layer_idx': 1},
                                  {'filter': 32, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 2},
                                  {'filter': 64, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 3}])

    # Layer 5 => 8
    x = _conv_block(x, [{'filter': 128, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True, 'layer_idx': 5},
                        {'filter': 64, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 6},
                        {'filter': 128, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 7}])

    # Layer 9 => 11
    x = _conv_block(x, [{'filter': 64, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 9},
                        {'filter': 128, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 10}])

    # Layer 12 => 15
    x = _conv_block(x, [{'filter': 256, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True, 'layer_idx': 12},
                        {'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 13},
                        {'filter': 256, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 14}])

    # Layer 16 => 36
    for i in range(7):
        x = _conv_block(x, [{'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 16+i*3},
                            {'filter': 256, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 17+i*3}])
    skip_36 = x

    # Layer 37 => 40
    x = _conv_block(x, [{'filter': 512, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True, 'layer_idx': 37},
                        {'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 38},
                        {'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 39}])

    # Layer 41 => 61
    for i in range(7):
        x = _conv_block(x, [{'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 41+i*3},
                            {'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 42+i*3}])
    skip_61 = x

    # Layer 62 => 65
    x = _conv_block(x, [{'filter': 1024, 'kernel': 3, 'stride': 2, 'bnorm': True, 'leaky': True, 'layer_idx': 62},
                        {'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 63},
                        {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 64}])

    # Layer 66 => 74
    for i in range(3):
        x = _conv_block(x, [{'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 66+i*3},
                            {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 67+i*3}])

    # Layer 75 => 79
    x = _conv_block(x, [{'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 75},
                        {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 76},
                        {'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 77},
                        {'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 78},
                        {'filter': 512, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 79}], skip=False)

    # Layer 80 => 82
    yolo_82 = _conv_block(x, [{'filter': 1024, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 80},
                              {'filter': 255, 'kernel': 1, 'stride': 1, 'bnorm': False, 'leaky': False, 'layer_idx': 81}], skip=False)

    # Layer 83 => 86
    x = _conv_block(x, [{'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 84}], skip=False)
    x = UpSampling2D(2)(x)
    x = concatenate([x, skip_61])

    # Layer 87 => 91
    x = _conv_block(x, [{'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 87},
                        {'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 88},
                        {'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 89},
                        {'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 90},
                        {'filter': 256, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 91}], skip=False)

    # Layer 92 => 94
    yolo_94 = _conv_block(x, [{'filter': 512, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 92},
                              {'filter': 255, 'kernel': 1, 'stride': 1, 'bnorm': False, 'leaky': False, 'layer_idx': 93}], skip=False)

    # Layer 95 => 98
    x = _conv_block(x, [{'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 96}], skip=False)
    x = UpSampling2D(2)(x)
    x = concatenate([x, skip_36])

    # Layer 99 => 106
    yolo_106 = _conv_block(x, [{'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 99},
                               {'filter': 256, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 100},
                               {'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 101},
                               {'filter': 256, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 102},
                               {'filter': 128, 'kernel': 1, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 103},
                               {'filter': 256, 'kernel': 3, 'stride': 1, 'bnorm': True, 'leaky': True, 'layer_idx': 104},
                               {'filter': 255, 'kernel': 1, 'stride': 1, 'bnorm': False, 'leaky': False, 'layer_idx': 105}], skip=False)

    model = Model(input_image, [yolo_82, yolo_94, yolo_106])
    return model

class WeightReader:
    def __init__(self, weight_file):
        with open(weight_file, 'rb') as w_f:
            # header: three version integers followed by a counter of images seen
            major, = struct.unpack('i', w_f.read(4))
            minor, = struct.unpack('i', w_f.read(4))
            revision, = struct.unpack('i', w_f.read(4))
            if (major*10 + minor) >= 2 and major < 1000 and minor < 1000:
                w_f.read(8)
            else:
                w_f.read(4)
            transpose = (major > 1000) or (minor > 1000)
            binary = w_f.read()
        self.offset = 0
        self.all_weights = np.frombuffer(binary, dtype='float32')

    def read_bytes(self, size):
        self.offset = self.offset + size
        return self.all_weights[self.offset-size:self.offset]

    def load_weights(self, model):
        for i in range(106):
            try:
                conv_layer = model.get_layer('conv_' + str(i))
                print("loading weights of convolution #" + str(i))
                # the three detection layers have no batch normalization
                if i not in [81, 93, 105]:
                    norm_layer = model.get_layer('bnorm_' + str(i))
                    size = np.prod(norm_layer.get_weights()[0].shape)
                    beta = self.read_bytes(size)   # bias
                    gamma = self.read_bytes(size)  # scale
                    mean = self.read_bytes(size)   # mean
                    var = self.read_bytes(size)    # variance
                    norm_layer.set_weights([gamma, beta, mean, var])
                if len(conv_layer.get_weights()) > 1:
                    bias = self.read_bytes(np.prod(conv_layer.get_weights()[1].shape))
                    kernel = self.read_bytes(np.prod(conv_layer.get_weights()[0].shape))
                    kernel = kernel.reshape(list(reversed(conv_layer.get_weights()[0].shape)))
                    kernel = kernel.transpose([2, 3, 1, 0])
                    conv_layer.set_weights([kernel, bias])
                else:
                    kernel = self.read_bytes(np.prod(conv_layer.get_weights()[0].shape))
                    kernel = kernel.reshape(list(reversed(conv_layer.get_weights()[0].shape)))
                    kernel = kernel.transpose([2, 3, 1, 0])
                    conv_layer.set_weights([kernel])
            except ValueError:
                print("no convolution #" + str(i))

    def reset(self):
        self.offset = 0

# define the model
model = make_yolov3_model()
# load the model weights
weight_reader = WeightReader('yolov3.weights')
# set the model weights into the model
weight_reader.load_weights(model)
#model.compile(optimizer=Adam(5e-3), loss='sparse_categorical_crossentropy', metrics=['accuracy'])
# save the model to file
model.save('model.h5')

Post-processing the model output

import numpy as np
from numpy import expand_dims
from tensorflow.keras.models import load_model
from tensorflow.keras.preprocessing.image import load_img
from tensorflow.keras.preprocessing.image import img_to_array
from matplotlib import pyplot
from matplotlib.patches import Rectangle

class BoundBox:
    def __init__(self, xmin, ymin, xmax, ymax, objness = None, classes = None):
        self.xmin = xmin
        self.ymin = ymin
        self.xmax = xmax
        self.ymax = ymax
        self.objness = objness
        self.classes = classes
        self.label = -1
        self.score = -1

    def get_label(self):
        if self.label == -1:
            self.label = np.argmax(self.classes)
        return self.label

    def get_score(self):
        if self.score == -1:
            self.score = self.classes[self.get_label()]
        return self.score

def _sigmoid(x):
    return 1. / (1. + np.exp(-x))

def decode_netout(netout, anchors, obj_thresh, net_h, net_w):
    # (13, 13, 255) / (26, 26, 255) / (52, 52, 255)
    #print(netout.shape)
    grid_h, grid_w = netout.shape[:2]
    nb_box = 3
    netout = netout.reshape((grid_h, grid_w, nb_box, -1))
    # (13, 13, 3, 85) / (26, 26, 3, 85) / (52, 52, 3, 85)
    # 85 => tx, ty, tw, th, objectness, class scores (80)
    boxes = []
    for i in range(grid_h * grid_w):
        # integer division: row must be a whole cell index, both for indexing
        # and for the y offset added below
        row = i // grid_w
        col = i % grid_w
        for b in range(nb_box):
            # the element at index 4 is the objectness score
            objectness = _sigmoid(netout[row][col][b][4])
            if objectness <= obj_thresh:
                continue
            # first 4 elements are x, y, w, and h
            x, y, w, h = netout[row][col][b][:4]
            x = (col + _sigmoid(x)) / grid_w # center position, unit: image width
            y = (row + _sigmoid(y)) / grid_h # center position, unit: image height
            w = anchors[2 * b + 0] * np.exp(w) / net_w # unit: image width
            h = anchors[2 * b + 1] * np.exp(h) / net_h # unit: image height
            # last elements are class probabilities
            classes = objectness * _sigmoid(netout[row][col][b][5:])
            classes = np.where(classes > obj_thresh, classes, 0)
            box = BoundBox(x-w/2, y-h/2, x+w/2, y+h/2, objectness, classes)
            boxes.append(box)
    return boxes

def correct_yolo_boxes(boxes, image_h, image_w, net_h, net_w):
    # the image was resized without letterboxing, so the offsets are 0 and the scales are 1
    new_w, new_h = net_w, net_h
    for i in range(len(boxes)):
        x_offset, x_scale = (net_w - new_w)/2./net_w, float(new_w)/net_w
        y_offset, y_scale = (net_h - new_h)/2./net_h, float(new_h)/net_h
        boxes[i].xmin = int((boxes[i].xmin - x_offset) / x_scale * image_w)
        boxes[i].xmax = int((boxes[i].xmax - x_offset) / x_scale * image_w)
        boxes[i].ymin = int((boxes[i].ymin - y_offset) / y_scale * image_h)
        boxes[i].ymax = int((boxes[i].ymax - y_offset) / y_scale * image_h)

def _interval_overlap(interval_a, interval_b):
    x1, x2 = interval_a
    x3, x4 = interval_b
    if x3 < x1:
        if x4 < x1:
            return 0
        else:
            return min(x2,x4) - x1
    else:
        if x2 < x3:
            return 0
        else:
            return min(x2,x4) - x3

def bbox_iou(box1, box2):
    intersect_w = _interval_overlap([box1.xmin, box1.xmax], [box2.xmin, box2.xmax])
    intersect_h = _interval_overlap([box1.ymin, box1.ymax], [box2.ymin, box2.ymax])
    intersect = intersect_w * intersect_h
    w1, h1 = box1.xmax-box1.xmin, box1.ymax-box1.ymin
    w2, h2 = box2.xmax-box2.xmin, box2.ymax-box2.ymin
    union = w1*h1 + w2*h2 - intersect
    return float(intersect) / union

def do_nms(boxes, nms_thresh):
    if len(boxes) > 0:
        nb_class = len(boxes[0].classes)
    else:
        return
    for c in range(nb_class):
        # visit the boxes of class c from highest to lowest score
        sorted_indices = np.argsort([-box.classes[c] for box in boxes])
        for i in range(len(sorted_indices)):
            index_i = sorted_indices[i]
            if boxes[index_i].classes[c] == 0: continue
            for j in range(i+1, len(sorted_indices)):
                index_j = sorted_indices[j]
                if bbox_iou(boxes[index_i], boxes[index_j]) >= nms_thresh:
                    boxes[index_j].classes[c] = 0

# load and prepare an image
def load_image_pixels(filename, shape):
    # load the image to get its shape
    image = load_img(filename)
    width, height = image.size
    # load the image with the required size
    image = load_img(filename, target_size=shape)
    # convert to numpy array
    image = img_to_array(image)
    # scale pixel values to [0, 1]
    image = image.astype('float32')
    image /= 255.0
    # add a dimension so that we have one sample
    image = expand_dims(image, 0)
    print(image.shape)
    return image, width, height

# get all of the results above a threshold
def get_boxes(boxes, labels, thresh):
    v_boxes, v_labels, v_scores = list(), list(), list()
    # enumerate all boxes
    for box in boxes:
        # enumerate all possible labels
        for i in range(len(labels)):
            # check if the threshold for this label is high enough
            if box.classes[i] > thresh:
                v_boxes.append(box)
                v_labels.append(labels[i])
                v_scores.append(box.classes[i]*100)
                # don't break, many labels may trigger for one box
    return v_boxes, v_labels, v_scores

# draw all results
def draw_boxes(filename, v_boxes, v_labels, v_scores):
    # load the image
    data = pyplot.imread(filename)
    # plot the image
    pyplot.imshow(data)
    # get the context for drawing boxes
    ax = pyplot.gca()
    # plot each box
    for i in range(len(v_boxes)):
        box = v_boxes[i]
        # get coordinates
        y1, x1, y2, x2 = box.ymin, box.xmin, box.ymax, box.xmax
        # calculate width and height of the box
        width, height = x2 - x1, y2 - y1
        # create the shape
        rect = Rectangle((x1, y1), width, height, fill=False, color='white')
        # draw the box
        ax.add_patch(rect)
        # draw text and score in top left corner
        label = "%s (%.3f)" % (v_labels[i], v_scores[i])
        pyplot.text(x1, y1, label, color='white')
    # show the plot
    pyplot.show()

labels = ["person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck",
    "boat", "traffic light", "fire hydrant", "stop sign", "parking meter", "bench",
    "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe",
    "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard",
    "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
    "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana",
    "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake",
    "chair", "sofa", "pottedplant", "bed", "diningtable", "toilet", "tvmonitor", "laptop", "mouse",
    "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink", "refrigerator",
    "book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush"]

input_w, input_h = 416, 416
# one anchor list per output scale, in the same order as the model outputs (13, 26, 52)
anchors = [[116,90, 156,198, 373,326], [30,61, 62,45, 59,119], [10,13, 16,30, 33,23]]
class_threshold = 0.6

# load yolov3 model
model = load_model('model.h5')

Final result

photo_filename = 'street.jpg'
# load and prepare image
image, image_w, image_h = load_image_pixels(photo_filename, (input_w, input_h))
# make prediction
yhat = model.predict(image)
# decode the three outputs into candidate boxes above the probability threshold
boxes = list()
for i in range(len(yhat)):
    # decode the output of the network
    boxes += decode_netout(yhat[i][0], anchors[i], class_threshold, input_h, input_w)
# correct the sizes of the bounding boxes for the shape of the image
correct_yolo_boxes(boxes, image_h, image_w, input_h, input_w)
# suppress non-maximal boxes
do_nms(boxes, 0.5)
# get the details of the detected objects
v_boxes, v_labels, v_scores = get_boxes(boxes, labels, class_threshold)
# draw what we found
draw_boxes(photo_filename, v_boxes, v_labels, v_scores)
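Because the image is resized straight to 416 x 416 without letterboxing, the offsets in correct_yolo_boxes are 0 and the scales are 1, so the correction reduces to multiplying the normalized coordinates by the original image size. The sketch below shows that reduced form; `to_pixels` is a hypothetical helper and not part of the code above.

```python
def to_pixels(box, image_w, image_h):
    """Map a normalized (xmin, ymin, xmax, ymax) box to integer pixel coordinates."""
    xmin, ymin, xmax, ymax = box
    return (int(xmin * image_w), int(ymin * image_h),
            int(xmax * image_w), int(ymax * image_h))

print(to_pixels((0.25, 0.5, 0.75, 1.0), image_w=640, image_h=480))  # (160, 240, 480, 480)
```

If the preprocessing kept the aspect ratio by padding the image instead, the offsets and scales would no longer be trivial and the full formula would be needed.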

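How do we train YOLOv3 with TensorFlow 2? I split training into four steps: loading the data, converting the bounding box annotations to the YOLO format, defining the loss function, and compiling the model to start training.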

**1. Load the data**

import cv2
import numpy as np

# A sample of Dataset Generator
class DatasetGen(object):
    def __init__(self):
        self.imgs_cnt = 1
        self.imgs_idx = 0

    def __next__(self):
        if self.imgs_idx < self.imgs_cnt:
            self.imgs_idx += 1
            # read image from local
            x = cv2.imread('./data/girl.png')
            x = cv2.resize(x, (416, 416))
            # bounding boxes [x1, y1, x2, y2, class], coordinates normalized to 0 ~ 1
            y = np.array([
                [0.18494931, 0.03049111, 0.9435849, 0.96302897, 0],
                [0.22494931, 0.01949111, 0.3435849, 0.56302897, 2],
                [0.01586703, 0.35938117, 0.01686703, 0.36938117, 56]])
            return x, y
        else:
            raise StopIteration

    def __iter__(self):
        return self

# Test whether raw dataset can be loaded
for x, y in DatasetGen():
    # output will be (416, 416, 3) (3, 5)
    print(x.shape, y.shape)

**2. Convert the bounding box annotations to the YOLO format**

import cv2
import numpy as np

class DatasetPre(object):
    def __init__(self,
                 img_size = 416,
                 anchors = np.array([[(116, 90), (156, 198), (373, 326)],
                                     [(30, 61), (62, 45), (59, 119)],
                                     [(10, 13), (16, 30), (33, 23)]])):
        self.img_size = img_size
        # anchors normalized to 0 ~ 1, shape (scales, anchors per scale, 2)
        self.anchors = anchors / img_size
        # one row per flattened anchor: [scale index, anchor index within the scale, grid size]
        self.anchors_info = np.array([[scale, anchor, (self.img_size // 32) * (2 ** scale)]
                                      for scale in range(anchors.shape[0])
                                      for anchor in range(anchors.shape[1])])
        self.anchors_flat = self.anchors.reshape(-1, self.anchors.shape[-1])

    @staticmethod
    def expand_repeat_axis(var, expand_axis, repeat_cnt):
        return np.expand_dims(var, expand_axis).repeat(repeat_cnt, axis=expand_axis)

    # Transform
    def transform(self, x, y):
        assert(x.shape == (self.img_size, self.img_size, 3))
        new_x = x / 255
        # calculate anchor index for true boxes: compare widths and heights only,
        # as if every box and anchor shared the same top-left corner
        box_xy = (y[..., 2:4] + y[..., 0:2]) / 2
        box_wh = y[..., 2:4] - y[..., 0:2]
        anchor_area = self.anchors_flat[..., 0] * self.anchors_flat[..., 1]
        box_area = box_wh[..., 0] * box_wh[..., 1]
        box_wh_er = self.expand_repeat_axis(box_wh, 1, self.anchors_flat.shape[0])
        intersection = np.minimum(box_wh_er[..., 0], self.anchors_flat[..., 0]) * \
                       np.minimum(box_wh_er[..., 1], self.anchors_flat[..., 1])
        iou = intersection / (self.expand_repeat_axis(box_area, -1, self.anchors_flat.shape[0]) + anchor_area - intersection)
        anchor_idxs = np.argmax(iou, axis=-1)
        # new_y: list [(grid, grid, anchors, [x1, y1, x2, y2, obj, class])]
        new_y = [np.zeros((grid_size, grid_size, self.anchors.shape[1], 6))
                 for grid_size in np.unique(self.anchors_info[:,2])]
        for idx in range(anchor_idxs.shape[0]):
            i, j, grid_size = self.anchors_info[anchor_idxs[idx]]
            # grid cell that contains the box center
            s_grid_xy = (box_xy[idx] // (1 / grid_size)).astype(np.uint8)
            s_box_loc = y[..., 0:4][idx]
            new_y[i][s_grid_xy[1], s_grid_xy[0], j] = [s_box_loc[0], s_box_loc[1], s_box_loc[2], s_box_loc[3], 1, y[idx, 4]]
        return new_x, new_y

# Test whether dataset can be preprocessed
dataset_preprocess = DatasetPre()
for x, y in DatasetGen():
    new_x, new_y = dataset_preprocess.transform(x, y)
    # Output will be (416, 416, 3) [(13, 13, 3, 6), (26, 26, 3, 6), (52, 52, 3, 6)]
    print(new_x.shape, [elem.shape for elem in new_y])
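The anchor assignment inside transform can be followed by hand for one box. The snippet below is a dependency-free sketch of the same idea, not a replacement for the class above: `wh_iou` and `assign` are hypothetical names, and the IoU compares only widths and heights, as if the box and the anchor shared a corner.

```python
ANCHORS = [(116, 90), (156, 198), (373, 326),
           (30, 61), (62, 45), (59, 119),
           (10, 13), (16, 30), (33, 23)]
IMG_SIZE = 416

def wh_iou(w1, h1, w2, h2):
    """IoU of two boxes aligned at the same corner, so only sizes matter."""
    inter = min(w1, w2) * min(h1, h2)
    return inter / (w1 * h1 + w2 * h2 - inter)

def assign(box):
    """Return (scale, anchor index within scale, grid size, cell column, cell row)."""
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    ious = [wh_iou(w, h, aw / IMG_SIZE, ah / IMG_SIZE) for aw, ah in ANCHORS]
    best = max(range(len(ANCHORS)), key=lambda k: ious[k])
    scale, anchor_in_scale = divmod(best, 3)
    grid = (IMG_SIZE // 32) * (2 ** scale)
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    return scale, anchor_in_scale, grid, int(cx * grid), int(cy * grid)

# first sample box from the generator above
print(assign((0.18494931, 0.03049111, 0.9435849, 0.96302897)))  # (0, 2, 13, 7, 6)
```

The large first box matches the largest anchor (373, 326) and therefore lands on the coarse 13 x 13 grid, in the cell at column 7, row 6.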

**3. Define the YOLOv3 loss function**

import numpy as np
import tensorflow as tf

def YoloLoss(anchors, classes=80, ignore_thresh=0.5):
    def yolo_boxes(pred):
        # pred: (batch_size, grid, grid, anchors, (x, y, w, h, obj, ...classes))
        grid_size = tf.shape(pred)[1]
        box_xy, box_wh, objectness, class_probs = tf.split(pred, (2, 2, 1, classes), axis=-1)

        box_xy = tf.sigmoid(box_xy)
        objectness = tf.sigmoid(objectness)
        class_probs = tf.sigmoid(class_probs)
        pred_box = tf.concat((box_xy, box_wh), axis=-1)  # original xywh for loss

        # !!! grid[x][y] == (y, x)
        grid = tf.meshgrid(tf.range(grid_size), tf.range(grid_size))
        grid = tf.expand_dims(tf.stack(grid, axis=-1), axis=2)  # [gx, gy, 1, 2]

        box_xy = (box_xy + tf.cast(grid, tf.float32)) / tf.cast(grid_size, tf.float32)
        box_wh = tf.exp(box_wh) * anchors

        box_x1y1 = box_xy - box_wh / 2
        box_x2y2 = box_xy + box_wh / 2
        bbox = tf.concat([box_x1y1, box_x2y2], axis=-1)

        return bbox, objectness, class_probs, pred_box

    def broadcast_iou(box_1, box_2):
        # box_1: (..., (x1, y1, x2, y2))
        # box_2: (N, (x1, y1, x2, y2))

        # broadcast boxes
        box_1 = tf.expand_dims(box_1, -2)
        box_2 = tf.expand_dims(box_2, 0)
        # new_shape: (..., N, (x1, y1, x2, y2))
        new_shape = tf.broadcast_dynamic_shape(tf.shape(box_1), tf.shape(box_2))
        box_1 = tf.broadcast_to(box_1, new_shape)
        box_2 = tf.broadcast_to(box_2, new_shape)

        int_w = tf.maximum(tf.minimum(box_1[..., 2], box_2[..., 2]) - tf.maximum(box_1[..., 0], box_2[..., 0]), 0)
        int_h = tf.maximum(tf.minimum(box_1[..., 3], box_2[..., 3]) - tf.maximum(box_1[..., 1], box_2[..., 1]), 0)
        int_area = int_w * int_h
        box_1_area = (box_1[..., 2] - box_1[..., 0]) * (box_1[..., 3] - box_1[..., 1])
        box_2_area = (box_2[..., 2] - box_2[..., 0]) * (box_2[..., 3] - box_2[..., 1])
        return int_area / (box_1_area + box_2_area - int_area)

    def yolo_loss(y_true, y_pred):
        # 1. transform all pred outputs
        # y_pred: (batch_size, grid, grid, anchors, (x, y, w, h, obj, ...cls))
        pred_box, pred_obj, pred_class, pred_xywh = yolo_boxes(y_pred)
        pred_xy = pred_xywh[..., 0:2]
        pred_wh = pred_xywh[..., 2:4]

        # 2. transform all true outputs
        # y_true: (batch_size, grid, grid, anchors, (x1, y1, x2, y2, obj, cls))
        true_box, true_obj, true_class_idx = tf.split(y_true, (4, 1, 1), axis=-1)
        true_xy = (true_box[..., 0:2] + true_box[..., 2:4]) / 2
        true_wh = true_box[..., 2:4] - true_box[..., 0:2]

        # give higher weights to small boxes
        box_loss_scale = 2 - true_wh[..., 0] * true_wh[..., 1]

        # 3. inverting the pred box equations
        grid_size = tf.shape(y_true)[1]
        grid = tf.meshgrid(tf.range(grid_size), tf.range(grid_size))
        grid = tf.expand_dims(tf.stack(grid, axis=-1), axis=2)
        true_xy = true_xy * tf.cast(grid_size, tf.float32) - tf.cast(grid, tf.float32)
        true_wh = tf.math.log(true_wh / anchors)
        # empty cells have zero width and height, whose log is -inf; replace it with 0
        true_wh = tf.where(tf.math.is_inf(true_wh), tf.zeros_like(true_wh), true_wh)

        # 4. calculate all masks
        obj_mask = tf.squeeze(true_obj, -1)
        # ignore false positive when iou is over threshold
        best_iou = tf.map_fn(
            lambda x: tf.reduce_max(broadcast_iou(x[0], tf.boolean_mask(
                x[1], tf.cast(x[2], tf.bool))), axis=-1),
            (pred_box, true_box, obj_mask),
            tf.float32)
        ignore_mask = tf.cast(best_iou < ignore_thresh, tf.float32)

        # 5. calculate all losses
        xy_loss = obj_mask * box_loss_scale * tf.reduce_sum(tf.square(true_xy - pred_xy), axis=-1)
        wh_loss = obj_mask * box_loss_scale * tf.reduce_sum(tf.square(true_wh - pred_wh), axis=-1)
        obj_loss = tf.keras.losses.binary_crossentropy(true_obj, pred_obj)
        obj_loss = obj_mask * obj_loss + (1 - obj_mask) * ignore_mask * obj_loss
        # TODO: use binary_crossentropy instead
        class_loss = obj_mask * tf.keras.losses.sparse_categorical_crossentropy(true_class_idx, pred_class)

        # 6. sum over (batch, gridx, gridy, anchors) => (batch, 1)
        xy_loss = tf.reduce_sum(xy_loss, axis=(1, 2, 3))
        wh_loss = tf.reduce_sum(wh_loss, axis=(1, 2, 3))
        obj_loss = tf.reduce_sum(obj_loss, axis=(1, 2, 3))
        class_loss = tf.reduce_sum(class_loss, axis=(1, 2, 3))

        return xy_loss + wh_loss + obj_loss + class_loss

    return yolo_loss
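Step 3 of the loss ("inverting the pred box equations") turns a ground-truth box into the quantities the network is expected to output. The following plain-Python sketch walks through that inversion for one box and checks that decoding restores it; `encode_target` and `decode_target` are hypothetical helpers, and all coordinates and anchors are assumed to be normalized to 0 ~ 1.

```python
import math

def encode_target(cx, cy, w, h, grid, anchor_w, anchor_h):
    """Invert the decoding: return the cell, in-cell offsets, log size ratios, loss weight."""
    col, row = int(cx * grid), int(cy * grid)
    txy = (cx * grid - col, cy * grid - row)                 # compared with sigmoid(x), sigmoid(y)
    twh = (math.log(w / anchor_w), math.log(h / anchor_h))   # compared with the raw w, h outputs
    box_loss_scale = 2 - w * h                               # small boxes get a larger weight
    return (col, row), txy, twh, box_loss_scale

def decode_target(cell, txy, twh, grid, anchor_w, anchor_h):
    """Apply the forward equations again to recover (cx, cy, w, h)."""
    cx = (cell[0] + txy[0]) / grid
    cy = (cell[1] + txy[1]) / grid
    return cx, cy, anchor_w * math.exp(twh[0]), anchor_h * math.exp(twh[1])

anchor = (373 / 416, 326 / 416)
cell, txy, twh, scale = encode_target(0.5643, 0.4968, 0.7586, 0.9325, 13, *anchor)
restored = decode_target(cell, txy, twh, 13, *anchor)
print(cell)  # (7, 6)
```

The xy targets always fall inside 0 ~ 1, which is why they can be compared with sigmoid outputs, while the wh targets are unbounded log ratios and are compared with the raw outputs.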

**4. Load the model and train**

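import numpy as np
import tensorflow as tf
from tensorflow.keras.callbacks import (
    EarlyStopping, ModelCheckpoint, ReduceLROnPlateau, TensorBoard)

# The anchors are given in pixels for a 416 x 416 input. The labels and the loss
# work in normalized 0 ~ 1 coordinates, so the anchors are scaled to match.
anchor_boxes = np.array([[(116, 90), (156, 198), (373, 326)],
                         [(30, 61), (62, 45), (59, 119)],
                         [(10, 13), (16, 30), (33, 23)]], np.float32) / 416
num_classes = 80

# one loss function per output scale
loss = [YoloLoss(scale_anchors, classes=num_classes) for scale_anchors in anchor_boxes]
optimizer = tf.keras.optimizers.Adam(learning_rate=0.01)

# NOTE: YoloLoss expects every output shaped (batch, grid, grid, 3, 5 + classes).
# The inference model built earlier emits (batch, grid, grid, 255), so each
# output head needs a reshape before it can be trained with this loss.
model = tf.keras.models.load_model('yolo3.h5')
model.compile(optimizer=optimizer, loss=loss)

callbacks = [
    ReduceLROnPlateau(verbose=1),
    EarlyStopping(patience=3, verbose=1),
    ModelCheckpoint('yolov3_train_{epoch}.tf', verbose=1, save_weights_only=True),
    TensorBoard(log_dir='logs')
]

# train_dataset = load your own training dataset
# val_dataset = load your own validation dataset
history = model.fit(train_dataset, epochs=100, callbacks=callbacks, validation_data=val_dataset)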