百度Apollo无人车车道线检测挑战赛(一)

百度Apollo车道线分割比赛一(数据处理)

百度Apollo车道线分割比赛二(模型搭建)

百度Apollo车道线分割比赛三(模型训练)

完整代码:Github

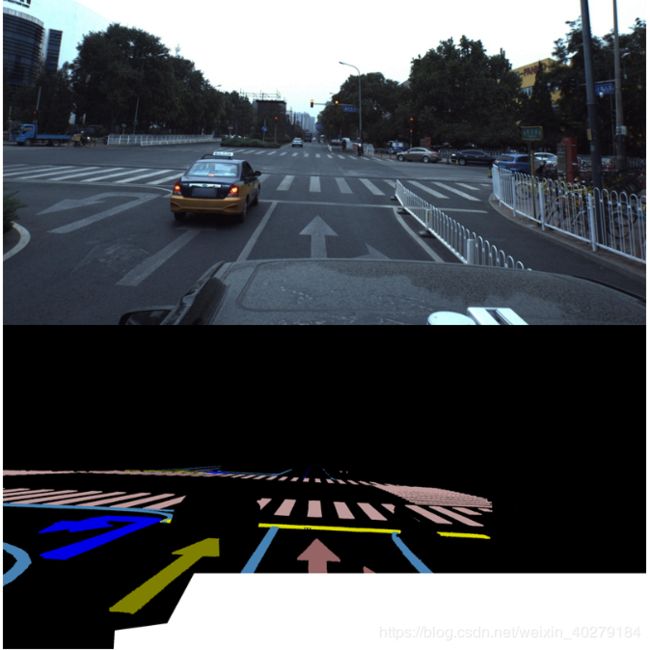

百度Apollo车道线检测比赛用的数据集是百度自己制作的数据集,数据集是像素级的标注,比tusimple的数据集要更加精准,下图为原图和标签,数据集下载地址

百度比赛要求采用百度自己的深度学习框架飞浆(paddlepadle)实现,比赛前35名方案

本方案采用pytorch实现,且采用复赛的数据集,标签为灰度值图像。

本实现数据集处理为实时预处理的形式,这样可以节省内存空间,特别使用服务器去训练时会场方便,首先下载复赛的数据集,包含样本和灰度值的标签。

实时预处理的思路很简单,将数据集的样本和标签映射到csv文件中,训练时按照csv的顺序去读取样本,并且处理好送到训练的模型。

***第一步***将下载的数据集映射到csv文件。处理文件utils.make_list.py,代码如下:

#coding=utf-8

#@author : Jiangnan He

#@date : 2019.12.25 15:47

"""

img_process 生成的文件结构如下:

--train_set :D:\Dataset\Apollolaneline\train_set - gt_image

- src_image

-- test_set :D:\Dataset\Apollolaneline\test_set - src_image

"""

import pandas as pd

import os

from sklearn.utils import shuffle

import glob

# train_set 共有21914 个样本 我们按照 train:val:test=3:1:1 的比例划分 训练集和 验证集 测试集

datasetpath1=datasetpath='D:/Dataset/Apollolaneline/'

#================================================

# make train & validation & test lists

#================================================

def get_train_val_test():

img_list=[]

lab_list=[]

for file in glob.glob(str(datasetpath1)+"/Road*/"):

if file.find("zip")!=-1:

continue

for i in glob.glob(str(file)+"/ColorImage_road*"):

file1=os.path.join(datasetpath1,i,"ColorImage")

for j in os.listdir(file1):

path=os.path.join(file1,j)

for k in os.listdir(path):

path1=os.path.join(path,k)

print(path1,len(os.listdir(path1)))

for z in os.listdir(path1):

image_path=os.path.join(path1,z)

#样本D:\Dataset\Apollolaneline\Road02\ColorImage_road02\ColorImage\Record001\Camera 5\170927_063811892_Camera_5.jpg

#标签D:\Dataset\Apollolaneline\Gray_Label\Gray_Label\Label_road02\Label\Record001\Camera 5\170927_063811892_Camera_5_bin.png

print(image_path,"image_path")

image_name=str(image_path.split()[1]).split('\\')[1].split(".")[0]+'_bin.png'

print("image_name",image_name)

#获取src_img 文件地址

src_path=os.path.join(path1,z)

# 获取gt_img 文件地址

gt_path=os.path.join("D:/Dataset/Apollolaneline/Gray_Label/Gray_Label/","Label_"+str(image_path.split("\\")[2]).split("_")[1],"Label","/".join(image_path.split("\\")[4:6]),image_name)

img_list.append(src_path)

lab_list.append(gt_path)

assert len(img_list)==len(lab_list)

total_len=len(img_list)

six_part=int(total_len*0.6)

eight_part=int(total_len*0.8)

all=pd.DataFrame({'image': img_list, 'label': lab_list})

all_shuffle = shuffle(all) # 顺序打乱存储

train_list=all_shuffle[:six_part]

val_list=all_shuffle[six_part:eight_part]

test_list=all_shuffle[eight_part:]

#生成val.csv

train_list.to_csv('../data_list/train.csv', index=False)#生成val的数据集csv文件

# 生成train.csv

val_list.to_csv('../data_list/val.csv', index=False)#生成train的数据集csv文件

#生成test.csv

test_list.to_csv('../data_list/test.csv', index=False) # 生成test的数据集csv文件

if __name__=="__main__":

get_train_val_test()

运行make_list.py将生成3个list文件,分别为train.csv, val.csv,test.csv

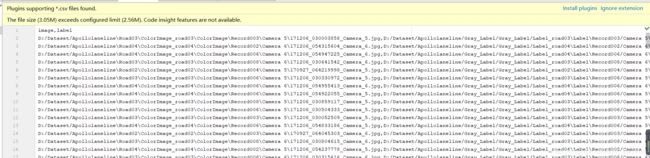

csv文件为一个字典 键“image”,“label”,一一对应样本标签的存储地址,如下图

***第二步***样本标签处理:

1.样本处理:尺寸,颜色随机变换,添加高斯噪声,随机剪裁cutout(类似dropout),pytorch有很多样本增强工具,例如apex,torchvision.transform,opencv,PIL(轻量级),albumentations,imgaug,numpy,这里用的opencv 增强方式较为简单,因为数据集较大,没有对数据量扩充。

2.标签处理:尺寸,旋转变化时要求和样本处理同步。处理文件utils.img_process.py,处理代码如下:

#coding: utf-8

#@author jiangnan He

#@date: 15:00 2019.11.13

'''

APollo laneline dataset

'''

############################### config ##########################

#原图尺寸3384x1710 wh

#这里采用三种尺寸进行训练 768x256,1024x384,1536x512(wh)

imgH=384

imgW=1024

offset=690

###############################################################

import numpy as np

import cv2

import torch

def gamma_transform(img, gamma):#gamma变换

gamma_table = [np.power(x / 255.0, gamma) * 255.0 for x in range(256)]

gamma_table = np.round(np.array(gamma_table)).astype(np.uint8)

return cv2.LUT(img, gamma_table)

def random_gamma_transform(img, gamma_vari):

log_gamma_vari = np.log(gamma_vari)

alpha = np.random.uniform(-log_gamma_vari, log_gamma_vari)

gamma = np.exp(alpha)

return gamma_transform(img, gamma)

def rotate(xb,yb, angle):#旋转变换

M_rotate = cv2.getRotationMatrix2D((imgH/2, imgW/2), angle, 1)

xb = cv2.warpAffine(xb, M_rotate, (imgH , imgW ))

yb = cv2.warpAffine(yb, M_rotate, (imgH , imgW ))

return xb, yb

def blur(img):

img = cv2.blur(img, (3, 3))

return img

def add_noise(img):

for i in range(200): # 添加点噪声

temp_x = np.random.randint(0, img.shape[0])

temp_y = np.random.randint(0, img.shape[1])

img[temp_x][temp_y] = 255

return img

def data_augment(xb, yb):#样本增强函数

if np.random.random() < 0.25:#只对原图操作

xb = random_gamma_transform(xb, 1.0)

if np.random.random() < 0.25:#只对原图操作

xb = blur(xb)

if np.random.random() < 0.2:#只对原图操作

xb = add_noise(xb)

return xb, yb

class Process_dataset(object):

def __call__(self,sample):

image, mask=sample

src_img=image[offset:,:]#hw

gt_img =mask[offset:,:] #先将 原图上部分的填空部分 3384x690 剪裁掉

#1 图像尺度处理 将图像缩放到训练尺寸 这里采用三种尺寸进行训练 768x256,1024x384,1536x512(wh)

src_img = cv2.resize(src_img,

dsize=(imgW, imgH),

interpolation=cv2.INTER_LINEAR)

gt_img = cv2.resize(gt_img,

dsize=(imgW, imgH),

interpolation=cv2.INTER_NEAREST)# 样本图片缩放用双线性插值,标签尺寸缩放时用最近邻处理,双线性插值将产生浮点型标注

#样本增强

src_img,gt_img=data_augment(src_img,gt_img)

return src_img,gt_img

class ToTensor(object):#将样本numpy格式转为pytorch的tensor

def __call__(self,sample ):

image, mask=sample

image = np.transpose(image,(2,0,1))

image = image.astype(np.float32)

mask = mask.astype(np.uint8)

return torch.from_numpy(image.copy()), torch.from_numpy(mask.copy())

# crop the image to discard useless parts 测试时用到这个函数

def crop_resize_data(image, label=None, image_size=(1024, 384), offset=690):

"""

Attention:

h,w, c = image.shape

cv2.resize(image,(w,h))

"""

roi_image = image[offset:, :]

if label is not None:

roi_label = label[offset:, :]

train_image = cv2.resize(roi_image, image_size, interpolation=cv2.INTER_LINEAR)

train_label = cv2.resize(roi_label, image_size, interpolation=cv2.INTER_NEAREST)

return train_image, train_label

else:

train_image = cv2.resize(roi_image, image_size, interpolation=cv2.INTER_LINEAR)

return train_image

***第三步***标签编码,将label中不同灰度值(代表不同类别的车道线),用0-7去标注,这里没有直接处理成one-hot形式,后面模型计算loss时会根据需要去看是否需要处理成one-hot形式

处理文件utils.lab_process.py,处理代码如下

#coding=utf-8

#@author : Jiangnan He

#@data : 2019.12.25 20:00

import numpy as np

def encode_labels(color_mask):#编码 灰度值转为类别 0-7

encode_mask = np.zeros((color_mask.shape[0], color_mask.shape[1]))

# 0

encode_mask[color_mask == 0] = 0

encode_mask[color_mask == 249] = 0

encode_mask[color_mask == 255] = 0

# 1

encode_mask[color_mask == 200] = 1

encode_mask[color_mask == 204] = 1

encode_mask[color_mask == 213] = 0

encode_mask[color_mask == 209] = 1

encode_mask[color_mask == 206] = 0

encode_mask[color_mask == 207] = 0

# 2

encode_mask[color_mask == 201] = 2

encode_mask[color_mask == 203] = 2

encode_mask[color_mask == 211] = 0

encode_mask[color_mask == 208] = 0

# 3

encode_mask[color_mask == 216] = 0

encode_mask[color_mask == 217] = 3

encode_mask[color_mask == 215] = 0

# 4 In the test, it will be ignored

encode_mask[color_mask == 218] = 0

encode_mask[color_mask == 219] = 0

# 4

encode_mask[color_mask == 210] = 4

encode_mask[color_mask == 232] = 0

# 5

encode_mask[color_mask == 214] = 5

# 6

encode_mask[color_mask == 202] = 0

encode_mask[color_mask == 220] = 6

encode_mask[color_mask == 221] = 6

encode_mask[color_mask == 222] = 6

encode_mask[color_mask == 231] = 0

encode_mask[color_mask == 224] = 6

encode_mask[color_mask == 225] = 6

encode_mask[color_mask == 226] = 6

encode_mask[color_mask == 230] = 0

encode_mask[color_mask == 228] = 0

encode_mask[color_mask == 229] = 0

encode_mask[color_mask == 233] = 0

# 7

encode_mask[color_mask == 205] = 7

encode_mask[color_mask == 212] = 0

encode_mask[color_mask == 227] = 7

encode_mask[color_mask == 223] = 0

encode_mask[color_mask == 250] = 7

return encode_mask

***第四步***生成数据集,运行文件create_data.py,代码如下:

# coding=utf-8

# @author:Jiangnan He

# @date: 2019.12.25 18:54

# 本实现用于生成 pytorch 的数据集

import torch

import pandas as pd

from torchvision import transforms

from torch.utils.data.dataset import Dataset

from utils.lab_process import encode_labels

from utils.img_process import Process_dataset

from utils.img_process import ToTensor

import cv2

class DatasetFromCSV(Dataset):

def __init__(self, csv_path, transforms=None):

self.data = pd.read_csv(csv_path,header=None, names=["image","label"])

self.images = self.data["image"].values[1:]

self.labels = self.data["label"].values[1:]

self.transforms = transforms

def __len__(self):

return self.labels.shape[0]

def __getitem__(self, index):

image=self.images[index]

label=self.labels[index]

image=cv2.imread(image,cv2.IMREAD_COLOR)

lab_as_np=cv2.imread(label, cv2.IMREAD_GRAYSCALE)

label=encode_labels(lab_as_np)#将不同灰度值标记为不同类 # 后续需要将其转为one-hot编码

sample=[image,label]

img_as_tensor ,lab_as_tensor= self.transforms(sample)

return img_as_tensor, lab_as_tensor

#测试代码

if __name__=="__main__":

batch_size =2

transform = transforms.Compose([Process_dataset(),ToTensor()] )

train_data = DatasetFromCSV('../data_list/train.csv',transform)

val_data = DatasetFromCSV('../data_list/val.csv', transform)

test_data = DatasetFromCSV("../data_list/test.csv",transform)

train_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size)

val_loader = torch.utils.data.DataLoader(val_data, batch_size=batch_size)

test_loader = torch.utils.data.DataLoader(test_data, batch_size=batch_size)

for batch in train_loader:

img, lab = batch

lab=torch.unsqueeze(lab,1)

print(img.shape,lab.shape)

其中DatasetFromCSV,继承了pytorch的Dataset的类制作自己的数据集只需要重写这个类的__len__(),和__getitem__()两个函数即可,transform就是我们预处理样本的函数组合加上ToTensro函数,这样才能完整将样本处理成pytorch能够接受的Tensor

运行测试代码效果如下: