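- Four steps to handle losing control of your emotions

麦小范一日一书

As the pace of life keeps accelerating, most city dwellers now live in an emotionally volatile state: being overtaken on the road sets off an inexplicable rage, being bumped on a crowded bus sparks sudden anger, and a never-ending pile of work makes them more and more impatient. So how do you pull your emotions back from the edge of losing control? Here is a tool that needs only a sheet of A4 paper. Fold the sheet in half twice, unfold it, and write one heading in each of the four blank quadrants: 1. What is this about? (That is, what happened that made you angry.) As you write, try to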

- How do you tell whether a bug is a front-end or a back-end problem?

海姐软件测试

defect management, bug, front end

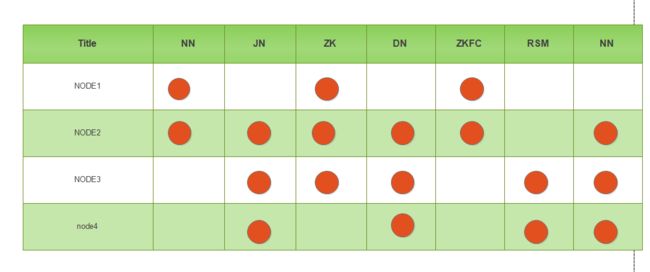

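In software testing, pinpointing where a bug belongs (front end or back end) is key to efficient collaboration. Below is a systematic troubleshooting approach that combines technical detail with practical tips. 1. Core decision logic: the "data vs. display" dichotomy. Back-end problem: the data itself is wrong (the API returns wrong data, a logic error, a database problem). Front-end problem: the data is correct but displayed incorrectly (a UI rendering error, an interaction logic problem). 2. The four-step method. Step 1: capture and inspect the traffic (mandatory). Tools: Chrome DevTools > Network, Fiddler, Charles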
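The "data vs. display" rule can be written down as a tiny decision function. This is only a sketch under assumptions: the function name `classify_bug` and its three parameters are hypothetical, and a real investigation would compare whole payloads captured in the Network tab rather than a single value.

```python
def classify_bug(expected, api_value, displayed_value):
    """Apply the "data vs. display" rule to one observed field.

    expected        -- the value the requirements say the user should see
    api_value       -- the value captured from the API response
    displayed_value -- the value actually rendered on the page
    """
    if api_value != expected:
        # The data is already wrong before it reaches the page.
        return "back end"
    if displayed_value != api_value:
        # The data arrived correctly but is shown incorrectly.
        return "front end"
    return "no defect observed"


if __name__ == "__main__":
    print(classify_bug(expected=100, api_value=90, displayed_value=90))
    print(classify_bug(expected=100, api_value=100, displayed_value=10))
```

The back-end check comes first on purpose: if the response is already wrong, the rendering cannot be judged until the data is fixed.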

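- Sketching a book: "Asking the Right Questions" (Chapter 4, part 3): checking for and identifying ambiguity

福二姨

When doing this exercise, keep asking what the author means by what they say, especially when abstract words are used. Advertising copy typically favors this kind of vague wording, hoping that ambiguous terms will persuade you that the product beats every competitor's. Ambiguous words that appear in the reasoning itself matter most. We should avoid assuming that what we have in mind is what the author means, and we should not assume that a term has only one obvious definition.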

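- Parenting diary, entry 227

赵羽斐

Saturday, April 14, sunny. I recently noticed a problem: my child does not like reading textbooks but loves reading text messages on the phone. Today my child chatted with an aunt, the aunt typing and my child replying by voice, with almost no trouble! So I have decided to change strategy: my child will read my diary every day, supervise me, and find out what mom's diary is like. I am sure this will raise interest in reading and build reading ability! I hope the new approach brings a different result! With the right method, half the effort yields twice the result! Let's work at it together! Go, go, go ^0^~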

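- 2019.1.24, Thursday, parenting diary 95

李妈妈

Dabao has been named a "Three Good" student! The first semester of first grade is almost over. The final exam results were not ideal, but Dabao worked hard this semester. Dabao's weak points are that what is learned is not yet solid, that questions are not read carefully, and that answers are not checked! I hope we can work together over the holiday to break these bad habits, so that in the new semester Dabao studies more actively and carefully. I also hope Dabao can be a bit more outgoing and take an active part in school activities, and become a well-rounded student! Li Xin's mom, Class 6, Grade 1, 厦小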

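- What should you give your parents? A detailed 2024 Mid-Autumn Festival gift list for elders and parents

直返APP抖音优惠券

The Mid-Autumn Festival is a time for family reunion, and choosing a suitable gift for elders or parents at this special moment expresses our deep respect and love for them. Below is a detailed gift list for reference. 1. Health and wellness: massage chair, so elders can enjoy a comfortable massage at home and ease fatigue; foot bath, which promotes blood circulation and benefits health; health supplements such as bird's nest, sea cucumber, and lingzhi, to build up strength. 2. Household goods: high-quality bedding, a comfortable four-piece set for a good night's sleep; warm clothing such as wool sweaters and down jackets, for the autumn and winter seasons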

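- @Selected graduates (选调生): drawing strength to forge ahead from carrying on the red heritage

神奇咩咩咩

"Xi Jinping: The Governance of China" vividly records the great practice of General Secretary Xi Jinping in leading the Party and the people to respond to changes and break new ground. It showcases the latest achievements in adapting Marxism to the Chinese context, and is an authoritative work that systematically reflects Xi Jinping Thought on Socialism with Chinese Characteristics for a New Era. As selected graduates, we should keep pace in study, in understanding, and in action; read the original works comprehensively and systematically, study the original texts, and grasp their principles and original meaning; and pay particular attention to the series of original new ideas, thoughts, and strategies on governance put forward in Volume IV. Doing so helps us further deepen our understanding of China's path, China's governance, and China's rationale, and appreciate deeply the powerful force of truth in this thought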

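- CET-4 (College English Test Band 4), December 2019 (Set 1): translation section, actual exam paper

kandang

CET-4, December 2019 (Set 1), translation section. Part Ⅳ Translation (30 minutes) Directions: For this part, you are allowed 30 minutes to translate a passage from Chinese into English. You should write your answer on Answer Sheet 2. 中国家庭十分重视孩子的教育。许多父母认为应该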

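- Zhen Huan understands the emperor's enduring of humiliation (4): my take on "The Legend of Zhen Huan"

宝藏姐李泱

Summary: the emperor decides to hold back for now; what advice on forbearance does Zhen Huan offer to share his burden? 1. Outside the hall, Su Peisheng is sick with worry. Inside, Zhen Huan picks up the shards as she says: "Then may Your Majesty posthumously elevate Noble Consort Wenxi to Noble Dowager Consort, grant her an honorific title, and rebury her in the late emperor's consort mausoleum. At the same time, promote the dowager consorts in the palace and grant them honorific titles." Whether the emperor smashed the cup to vent his anger or to swear an oath, Zhen Huan knows he has chosen to endure. Seeing that his mind is made up, she calmly gathers the shards, showing that she understands this uncharacteristic outburst, and gently and composedly offers workable advice that helps him solve the actual problem. The emperor listens expressionlessly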

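- How to write an audiobook-summary script in six steps

晨星如希

What is an audiobook-summary script? It is the word-for-word script of an audio program that explains one book in 20 to 30 minutes. The script for each book runs to roughly 6,000 to 8,000 characters and covers the book's core ideas and highlights, so that the audience can grasp the essence of the book in 20 to 30 minutes. So how should such a script be written? Here are the six steps: first, open with a fixed phrase and sum up the essence of the whole book in one sentence; second, introduce and unpack the topic; third, give a brief profile of the author; fourth, summarize the essence of the whole book; fifth, present the book's key points in detail, usually three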

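- Mastering Docker | Deploying the TeamMapper mind-mapping application with Docker

心随_风动

Mastering Docker, docker, eureka, containers

Mastering Docker | Deploying the TeamMapper mind-mapping application with Docker. Contents: Preface; 1. About TeamMapper (overview, features); 2. System requirements (environment requirements, environment check, Docker version check, operating system version check); 3. Deploying the TeamMapper service (download the TeamMapper image, edit the deployment file, create the container, check the container status, check the service port, security settings); 4. Accessing the TeamMapper service; 5. A first look at TeamMapper (open a new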

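- "Shameless": a harbor of their own

天呐呐呐呐

"Come on, let's all say motherfucker together!" However crappy life gets, we still have to stumble on through it. Shameless is a legendary American series: no limits, no scruples, and no dull moments. However hard you think your life is, once you watch the chaotic life of the Gallagher family you will quietly marvel: "You can do that?" Whenever the morally bankrupt Frank cheats and swindles his way around with his low-down tricks, I hate Frank, and I detest his irresponsible, alcohol-soaked way of life. And in the end he never forgets to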

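- Docker in practice: deploying the AI SSH client tool IntelliSSH with Docker

江湖有缘

Docker deployment projects in practice (collection), docker, artificial intelligence, ssh

Docker in practice: deploying the AI SSH client tool IntelliSSH with Docker. Contents: Preface; 1. About IntelliSSH (1.1 Overview of IntelliSSH; 1.2 Main features of IntelliSSH; 1.3 Main use cases); 2. Plan for this exercise (2.1 Local environment plan; 2.2 About this exercise); 3. Local environment checks (3.1 Check the Docker service status; 3.2 Check the Docker version; 3.3 Check the docker compose version); 4. Pulling the IntelliSSH image; 5. Deploying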

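- Choices matter more than effort

chocolatemamama

Less is more: put fewer negative labels on children. For example, when a child is at the stage of a strong sense of ownership and does not want to share, do not label the child "stingy" or "selfish"; give the child more room to say "no", because we owe children respect. Less here means a child who becomes more willing to share. Less is more: cut pointless socializing. After giving up the bright-lights social scene I still enjoy a few drinks, but I choose to have them at home and read a few pages. Less pointless socializing means more space for solitude and study. Less is more: blame less and guide more. Once my daughter was sliding barefoot on a wet floor as if it were an ice rink, lost her footing, and landed flat on her back. Seeing this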

- 2018-01-20

姜月萍

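There are currently three main kinds of price-to-earnings (P/E) ratio: static, dynamic, and rolling. An example shows the difference. Lao Wang spends 3 million yuan on a flat and rents it out, collecting rent quarterly. Last year (year one) he collected 20,000 in the first quarter, 30,000 in the second, 30,000 in the third, and 20,000 in the fourth, for a full-year total of 100,000. This year (year two) he collected 40,000 in the first quarter, 30,000 in the second, 30,000 in the third, and 30,000 in the fourth, for a full-year total of 130,000. For next year (year three), experience suggests an expected income of 150,000. Suppose it is now year two, in the third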
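The arithmetic behind the example can be sketched as below. The snippet breaks off before it defines the three ratios, so the definitions here are assumptions: static uses last full year's income, rolling uses the four most recent quarters, and the "forward" figure (price over next year's forecast) is only one common reading of dynamic P/E. The code also assumes "now" is the end of the third quarter of year two.

```python
PRICE = 300  # purchase price of the flat, in units of 10,000 yuan

last_year = [2, 3, 3, 2]    # quarterly rent in year one
this_year = [4, 3, 3, 3]    # quarterly rent in year two
next_year_forecast = 15     # expected full-year rent in year three

# Static P/E: price divided by the last completed year's income.
static_pe = PRICE / sum(last_year)

# Rolling P/E: price divided by the four most recent quarters.
# Assumption: today is the end of Q3 of year two, so the window is
# Q4 of year one plus Q1..Q3 of year two.
trailing_four_quarters = last_year[3:] + this_year[:3]
rolling_pe = PRICE / sum(trailing_four_quarters)

# Forward-looking P/E: price divided by a forecast (assumed definition).
forward_pe = PRICE / next_year_forecast

if __name__ == "__main__":
    print(static_pe, rolling_pe, forward_pe)
```

The same price gives a lower ratio as the income in the denominator rises, which is why the three figures differ.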

- 2023-08-11

yxf430421

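Yesterday morning I took a walk around the Songmutang produce market and saw someone from my hometown selling watermelons there. I asked him when he had arrived, and he told me he had come at four o'clock. Our hometown is over fifty li from here. To get a good price for his watermelons, an old farmer carried them on a shoulder pole from home to a place more than fifty li away, and I cannot help but admire him! I think our local officials should find ways to encourage farmers to take up further processing, so that farmers would no longer have to travel dozens or even hundreds of li to sell their produce for fear that it will not fetch a good price.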

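- Mind-map learning, level two: practice, session 4

纯优妈咪

Date: 2021.5.29. Lesson: quick mind-mapping with the text 《杨柳》. Core method: keywords and logic. What I learned: the teacher first explained why we break things down. For example, when we explain a picture book to a child, we break it into major categories, middle categories, minor categories, and sub-minor categories. So what is the point of breaking things down? It makes our thinking clearer: a complex matter is divided layer by layer and level by level until we finally understand it. This lesson again trained convergent thinking, using 《杨柳》 as the example. Step 1: read the whole text; step 2: understand the whole text (omitted); step 3: find keywords sentence by sentence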

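- Selenium + Java automated testing from first steps to practice: from environment setup to element operations

yy鹈鹕灌顶

selenium, java, testing tools

In automated testing, Selenium has become a first-choice tool for testing web applications thanks to its strong cross-browser compatibility and flexible API. Java, a stable and widely used programming language, combines with Selenium to build efficient, maintainable automated test frameworks. This article starts with environment setup and walks through the core usage of Selenium + Java step by step, to help newcomers get started quickly. 1. Environment setup: getting the tools running. 1. Install the Java development environment. Selenium's Java client depends on the JDK; it is recommended to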

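- 范琳's gratitude diary (1)

天禅

1. Today is the sixth day of the new year; I am grateful that I let myself sleep until I woke naturally. 2. Grateful for my own hands, which made breakfast; usually my mother-in-law makes it. 3. Grateful for my child's joy and for the child taking care of themselves unprompted. 4. Grateful to the courier who told me to collect my parcel. 5. Grateful to the relatives who came to see us today. 6. Grateful that today felt wonderful and that I allowed myself to be comfortable and lazy. 7. Grateful to my dear phone, which made today's study possible. 8. Grateful to the host of the women's energy group for sharing, which made my goals clear. 9. Grateful to my daughter Qingqing, who is very patient. 10. Grateful that Mr. Money arrived today and made buying gifts possible.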

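- The Start of Spring arrives in the Guimao Year of the Rabbit: double spring, double joy, bound to be an auspicious year

生活清泉涌

2023, the Guimao Year of the Rabbit: the Start of Spring has arrived, with a double spring and double joy, a leap year with a leap month, bound to be an auspicious year. Spring leads the four seasons; everything revives and spring is in full swing. Start the Rabbit year well and there is nothing to worry about. A year's plan starts with spring: get off to a good start in spring and the whole year will not let you down. May you meet joy and good fortune as you step out the door; this is the finest time of the year. "In spring one sleeps unaware of dawn": may life be happy and free of worry. "A river of spring water flowing east": may you be safe and content with few sorrows. "The spring breeze never crosses Yumen Pass": may your income multiply month after month! May life be as lovely as spring all year round, may spring's vitality bring you good company, may the warmth and blossoms of spring bring you good cheer, and may the scenery of spring stay with you as good luck! Whether you are north or south, far or near, may you ride the spring breeze of success, your heart stirred by spring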

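- 《大话生涯:自我发现之旅》: get to know yourself anew!

二货菇凉

This book is about 唐轩臧, but even more it is about ourselves. Know yourself: know your innermost self, the core and root that makes you who you are. Once you know it, you will have a clear picture of what kind of life suits your nature, and of what you really should want and can want. Know yourself and discover yourself. Knowing ourselves means being clear about four things: personality, interests, abilities, and values! "Who am I", "What do I want to do", "What can I do": these puzzles are always there, from our student days to our first steps into society, and even into middle age. This book's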

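- Parenting diary, entry 545, 2019.3.27

明懿妈妈

When I got home from work this evening, Dabao was already playing Chinese chess with his dad. The first thing he did was tell me proudly that his homework was done, and that he had finished it at school while waiting for the school bus. It must be the influence of chatting yesterday with his cousin, his second aunt's son. His cousin is in fourth grade this year, never brings homework home because he finishes all of it at school, and is also very good at English. With a role model like that, keep it up, Dabao! It was past eight by the time we finished dinner. After washing up, Dabao brought a book to our bedroom and read alongside Xiaobao (who just kept flipping through the pictures and asking me about this and that). After reading one piece he went back to his room to sleep.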

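- Relearning the front end 006 --- responsive web design: CSS flexbox

Contents: Box model (1. Basic concepts of the box model; 2. The two box models compared, with examples; 3. Summary). Flexbox layout (1. Core concepts of Flexbox; 2. Basic Flexbox syntax: 1. Defining a flex container, 2. Main properties of the flex container, 3. Main properties of flex items; 3. Common Flexbox layout examples; 4. Flexbox vs Grid layout; 5. Summary). img object-fit. gap. The CSS ::after pseudo-element in detail (1. Basic concepts; 2. Basic syntax; 3. Key caveats). The following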

- 2021-06-11

拿大顶的宇航员

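Drinking tea under trees draped with "tree beards". The square carries the fresh scent of green grass and the chatter of birds, mixed with the call of cuckoos. Everywhere you look there are trees, shrubs, grass, and bamboo groves, in blue-green, yellow-green, and ochre red. Set your worries down, slow your steps, empty your mind, and take it slowly: the rhythm of Sichuan, the temperament of its people, an attitude of enjoying life.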

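- Parenting diary, entry 79

快乐到永远_6120

Every day after my early shift I hurry to the clinic to be with my child for his drip. This time the drip ran especially slowly, about four and a half to five hours; last night it was past nine when it finished. Before the drip I bought him a bowl of pulled noodles, because a drip on an empty stomach is very hard on it. Perhaps because he is run down, he did not eat much. During the drip I went through his Chinese textbook with him to preview the lessons and had him recite what needed reciting. He also told me very happily that a book had just been handed out and that it was really good. I saw that it was 《当代小学生》 and remembered how much we loved reading it when we were at school. It was not yet half past eight when the drip finished, and back home he insisted on spinning his hula hoop, so it seems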

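- Reading notes 24: "Red Star Over China" 8 (chapters 9 and 10)

三九陈皮

Chapter 9, War and Peace. 2. The "Little Red Devils" >> There were many youngsters like him in the Red Army. The Young Vanguards were organized by the Communist Youth League >> But their fortitude and endurance were admirable, and their loyalty to the Red Army, steadfast and unwavering, was of a kind that only the very young can achieve. 4. About Zhu De >> Zhu De is unremarkable in appearance: a quiet, modest, soft-spoken man who looks somewhat weathered, with large eyes ("a very kindly look" is what everyone says), not tall but sturdy, with arms and legs as if made of iron. >> Zhu De's care for his men is known far and wide. >> Zhu De in this way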

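- The benefits of chrysanthemum tea

A小脑萎缩呀

1. Preventing colds and sore throats. Chrysanthemum, honeysuckle, and jasmine brewed together are said to clear heat and detoxify, and to help prevent and treat wind-heat colds, sore throats, and boils; drunk regularly, the tea is said to reduce internal heat and to calm the mind. 2. Restoring eyesight. A cup of chrysanthemum tea now and then is said to ease tired eyes, and three to four cups a day are said to help restore eyesight; friends who are often on WeChat should give it a try. 3. Resisting radiation. Drinking more chrysanthemum tea in daily life is said to help protect against radiation; office workers who sit in front of a computer a lot are advised to drink more of it to guard against radiation from the computer.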

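- Today's reading: the ways of the world you must understand

爱容容

Title: 《别人不说,你一定要懂的人情世故》, a coming-of-age gift for everyone working hard to make it in China, compiled by 墨墨. Editor's note: human relationships have their measure, their depth, and their weight, and anyone making their way in the world needs to understand them. In short: worldly wisdom is the art of conducting oneself. Keywords: speech, interests, face, sense of proportion, human nature, the workplace, social engagements, emotions. Main contents: Chapter 1, say seven-tenths of what you think and drink only until tipsy: worldly wisdom in speech. Chapter 2, interests matter, so do not neglect to weigh them. Chapter 3, whatever else you hurt, never hurt another person's face. Chapter 4, keep a sense of proportion in dealing with people. Chapter 5, do not complain about people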

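- After reading 《王阳明:知行合一》

达到成长

Wang Yangming was unruly from childhood and set his heart on becoming a sage, studying the path to sagehood every day. At first he believed that becoming a sage meant "securing peace for all generations", and that only outstanding military ability could bring order to the realm. In 1489 he called on Lou Liang, who told him that to become a sage one must "investigate things to extend knowledge" (格物致知). This is also the method of study in Zhu Xi's Neo-Confucianism: when you face something you do not understand, you use every means to work it out, and once you have worked out the principles of all things, you are a sage. But Wang Yangming found that "investigating things" did not necessarily lead to "extending knowledge", and he began to suspect that Zhu Xi's doctrine was wrong. 1499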

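- [Software exam crash notes] System Architect ⑱: big data architecture design, theory and practice

小康师兄

System Architect notes, system architecture, big data, Lambda, Kappa, data lake, batch processing

Contents: 1. Preface; 2. Problems with traditional databases (2.1 The root of the problem; 2.2 Traditional solutions); 3. Big data basics (3.1 Big data processing technologies; 3.2 How big data is used; 3.3 Challenges facing big data processing systems; 3.4 Attributes and characteristics of big data); 4. Lambda architecture (4.1 Batch layer; 4.2 Speed layer; 4.3 Serving layer); 5. Kappa architecture (5.1 Real-time layer; 5.2 Serving layer); 6. Lambda and Kappa compared; 7. Miscellaneous. 1. Preface. For the outline of these notes, see: [Software exam crash notes] System Architect: guide. Follow [小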

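- SQL join queries in all their forms

xieke90

UNION ALL, UNION, outer join, inner join, JOIN

1. Inner join

Concept: an inner join uses a comparison operator to match rows from two tables based on the values of the columns the tables have in common.

Inner join (join or inner join)

SQL syntax:

select * from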
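The inner-join concept above can be tried out with Python's built-in `sqlite3` module. This is a sketch only: the `student` and `score` tables and their columns are hypothetical stand-ins, not the tables from the original article, whose statement is cut off.

```python
import sqlite3

# In-memory database with two small hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE student (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE score (student_id INTEGER, points INTEGER);
    INSERT INTO student VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cid');
    INSERT INTO score VALUES (1, 90), (2, 75), (4, 60);
""")

# INNER JOIN keeps only the rows whose key appears in BOTH tables.
rows = conn.execute("""
    SELECT s.name, c.points
    FROM student AS s
    INNER JOIN score AS c ON s.id = c.student_id
    ORDER BY s.id
""").fetchall()

if __name__ == "__main__":
    print(rows)  # [('Ann', 90), ('Bob', 75)]
```

Cid has no score and score row 4 has no student, so neither appears in the result; an outer join would keep one side or the other.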

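- Thinking in Java: reusing classes

百合不是茶

java, inheritance, delegation, composition, final, classes

The title "reusing classes" alone did not tell me what the chapter was about, and the Chinese translation of Thinking in Java is fairly hard to follow, so it is only now that I am reading this legendary book of the software world

1. Composition syntax: simply place references to objects inside the new class

Code: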

package com.wj.reuse;

/**

*

* @author Administrator 组

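- [Open source and ecosystems] The ecosystem for domestic CPUs

comsci

cpu

Computing has to start with the kids... and what kids love most is playing games....

For domestic CPUs to build their own ecosystem and industry chain in the home market, the state and companies cannot forget games, a crucial link....

Invest some funding and resources, people and policy, so that games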

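- JVM memory areas explained: Eden Space, Survivor Space, Tenured Gen, Perm Gen

商人shang

jvm, memory

JVM memory areas fall into two broad categories: heap and non-heap. The heap is divided into Eden Space, Survivor Space, and Tenured Gen (the old generation). The non-heap area is divided into Code Cache, Perm Gen (the permanent generation), JVM Stack (the Java virtual machine stack), and Native Method Stack.

The HotSpot virtual machine's GC algorithm uses generational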

- Launching QQ from a web page

oloz

qq

<a href="tencent://message/?uin=707321921&Site=有事Q我&Menu=yes">

<img style="border:0px;" src="http://wpa.qq.com/pa?p=1:707321921:1"></a>

- Assorted problems

文强chu

problems

1. Exporting docs from Eclipse fails with "The Javadoc command does not exist." For the Javadoc command, select jdk/bin/javadoc.exe. 2. Configuring a web project in Tomcat .....

SQL: 3. In MySQL, * has to come first, otherwise select

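- No sense of security in life

小桔子

life, loneliness, sense of security

My circle is tiny; I have only a few friends around me, and even fewer I can confide in. In Shenzhen, apart from my boyfriend, there is hardly anyone I am close to. Without my noticing, my boyfriend has become my only support and, without exaggeration, the whole of my life outside work. Right now the relationship is good and I am happy. But you never know, people's hearts can change. As long as nothing happens everyone is content, but if something does, it will be hard to handle. What I mean is that if I am unlucky enough to be dumped (whatever the reason), my life will change a great deal, and in Shenzhen I have no close friends. Tomorrow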

- PHP basic syntax

aichenglong

PHP basic syntax

1.1 PHP variables must start with $

<?php

$a = " b";

echo $a;

?>

1.2 PHP basic data types: Integer, float/double, Boolean, string

1.3 Compound data types: array and object

1.4 Special data types: null and the resource type $co

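- MyBatis tools configuration in detail

AILIKES

mybatis

MyBatis Generator documentation in Chinese

Address of the Chinese MyBatis Generator documentation:

http://generator.sturgeon.mopaas.com/

Because that Chinese documentation stays as close as possible to the original text, parts of it can still be hard going for readers unfamiliar with the tool. This chapter therefore draws on the Chinese documentation and on practical use to explain the configuration in detail in plain language.

This article was written in Markdown, but the way the blog displays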

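- A discussion of inheritance and polymorphism

百合不是茶

Java, object-oriented, inheritance, objects

Inheritance (extends) and polymorphism

Inheritance is one of the most frequently used features of object orientation. Through inheritance a class obtains the fields and methods of its base class. In other words, inheritance creates a new class from an existing one, and the new class has everything the existing class has. extends is the keyword used for inheritance:

Define fields and methods in class A;

class A{

// define a field

int age;

// define a method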

public void go

- Examples of undefined and null in JS

bijian1013

JavaScript

<form name="theform" id="theform">

</form>

<script language="javascript">

var a

alert(typeof(b)); // alerts "undefined"

if(theform.datas

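- TDD in practice (1)

bijian1013

java, agile, TDD

1. TDD overview

TDD stands for test-driven development. Its basic idea is to write the test code before writing the functional code. That is, once you know which feature to build, you first think about how to test it and write the tests, and then write the code that satisfies those test cases. You then repeat the cycle for each further feature until all features have been developed.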
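One pass through the test-first cycle described above might look like the sketch below, using Python's standard `unittest` module. The feature (`apply_discount`) is a made-up example and does not come from the original article.

```python
import unittest


# Step 1: write the tests first. They describe the feature before it exists.
class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(200, 10), 180)

    def test_zero_percent_keeps_price(self):
        self.assertEqual(apply_discount(200, 0), 200)

    def test_rejects_percent_above_100(self):
        with self.assertRaises(ValueError):
            apply_discount(200, 150)


# Step 2: write just enough production code to make the tests pass.
def apply_discount(price, percent):
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) // 100


def run_tests():
    """Run the suite and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_tests()
```

In a real cycle the tests would be run once before `apply_discount` exists, to watch them fail, and each new feature would start with another failing test.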

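- [Maven study notes 10] Maven profiles and resource file filtering

bit1129

maven

What is a Maven profile

A Maven profile is a set of configuration tailored to a particular build-and-package environment or purpose, so that the matching configuration can be selected for each environment. Database settings are an example: depending on whether you are building for the development environment or for production, the correct database configuration is selected dynamically

How profiles are activated

1. A profile can be activated by hand; for example, in the Maven Project view of IntelliJ IDEA you can select a P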

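- [Hive 8] Hive user-defined table-generating functions (UDTF)

bit1129

hive

1. What is a UDTF

UDTF stands for User Defined Table-Generating Functions. At first glance it reads as "user-defined function that generates a table", but "generating a table" should not be taken to mean creating an HQL table; it is better understood as producing a two-dimensional set of rows that resembles a relational table

2. How to implement a UDTF

Extend org.apache.hadoop.hive.ql.udf.generic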

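- Adding OAuth 2.0 authentication to the tfs RESTful API

ronin47

I am currently thinking about how to make the ngx-tfs API of tfs more secure. There are two options:

The first is restriction by client IP. This is fairly easy to implement.

The second is OAuth 2.0 authentication. This requires Lua and is somewhat harder to implement than the first.

The rest of this post focuses on how to implement the second option.

Preface: we used Nginx's Lua middleware to build an OAuth2 authentication and authorization layer. If you are planning something similar, read on to automate it and reap the benefits. SeatGe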

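- Configuring JDK environment variables

byalias

java, jdk

To develop in Java you first need to install the JDK, and after installing it you still need to configure environment variables:

1. Download the JDK (http://java.sun.com/javase/downloads/index.jsp); the version I downloaded is jdk-7u79-windows-x64.exe

2. Install jdk-7u79-windows-x64.exe

3. Configure environment variables: right-click "Computer" -->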

- "Code Complete": table-driven methods (Table Driven Approach), part 2

bylijinnan

java

package com.ljn.base;

import java.io.BufferedReader;

import java.io.FileInputStream;

import java.io.InputStreamReader;

import java.util.ArrayList;

import java.util.Collections;

import java.uti

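- SQL: rounding a number to 2 decimal places

chicony

rounding

1. The round() function performs rounding: the first argument is the value to operate on, and the second sets how many digits to keep after the decimal point.

2. numeric is a data type that takes two parameters: the first is the total number of digits (precision) and the second is the number of digits after the decimal point (scale).

For example:

select cast(round(12.5,2) as numeric(5,2))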
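The same "round, then fix the number of decimal places" idea can be illustrated in Python with the standard `decimal` module. This is an analogy under assumptions, not the database's own behavior: SQL dialects differ in how `round` and `numeric` treat ties and display, and the helper name `round_half_up` is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_half_up(value: str, places: int = 2) -> Decimal:
    """Round a decimal string half-up and keep exactly `places` digits."""
    quantum = Decimal(10) ** -places  # Decimal('0.01') when places == 2
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    print(round_half_up("12.5"))    # 12.50
    print(round_half_up("12.345"))  # 12.35
```

Passing the value as a string avoids binary floating-point surprises; the quantized result keeps trailing zeros, much as a fixed-scale numeric column does.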

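- C++ operator overloading

CrazyMizzz

C++

1. Overloading the +, -, *, / operators

Rational operator*(const Rational &x) const{

return Rational(x.a * this->a);

}

Only multiplication is shown here; addition, subtraction, and division are written the same way

2. Overloading << (output) and >> (input)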

- Hive DDL syntax summary

daizj

hive, alter column, DDL, alter table

Hive DDL syntax summary

1. Rename a table

hive> ALTER TABLE table_name RENAME TO new_table_name;

2. Change a table comment

hive> ALTER TABLE table_name SET TBLPROPERTIES ('comment' = new_comm

- jBox usage guide

dcj3sjt126com

Web

Reference: http://www.kudystudio.com/jbox/jbox-demo.html jBox v2.3 beta [click to download]

Technical discussion QQ groups: 172543951 100521167

[2011-11-11] jBox v2.3 release

- [Tweak & fix] In IE6, pages that contain an iframe or active/applet controls

- UISegmentedControl development notes

dcj3sjt126com

// typedef NS_ENUM(NSInteger, UISegmentedControlStyle) {

// UISegmentedControlStylePlain, // large plain


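- Generating table mapping files with Slick

ekian

scala

To use Slick for database operations in Scala, add the slick-codegen package to the sbt file

"com.typesafe.slick" %% "slick-codegen" % slickVersion

Because I connect to a SQL Server database, the slick-extensions and jtds packages are needed as well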

"com.typesa

- ES-TEST

gengzg

test

package com.MarkNum;

import java.io.IOException;

import java.util.Date;

import java.util.HashMap;

import java.util.Map;

import javax.servlet.ServletException;

import javax.servlet.annotation

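- Why foreign keys are no longer recommended

hugh.wang

mysql, DB

A relationship between tables is a logical one and does not need a physical "hard link". The relationship you are after is really just a certain connection between the data, and that connection is inherent logic defined when the system was designed.

When implementing the business code, it is enough to handle the data according to that inherent relationship defined at design time; there is no need for a "hard link" at the database level. Enforcing a "hard link" with foreign keys at the database level costs a lot of extra resources for consistency and integrity checks, even though much of the time we do not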

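- Domain-driven design

julyflame

VO, DAO, design patterns, DTO, PO

Concepts:

VO (View Object): a view object used in the presentation layer; its job is to bundle up all the data for a particular page (or component).

DTO (Data Transfer Object): a data transfer object. The concept comes from the J2EE design patterns, where its original purpose was to give EJB distributed applications coarse-grained data entities, reducing the number of distributed calls and thereby improving their performance and lowering network load. Here, however, I use it broadly for the data transfer objects passed between the presentation layer and the service layer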

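- The singleton design pattern

hm4123660

java, Singleton, singleton design pattern, lazy initialization, eager initialization

The singleton pattern is a commonly used software design pattern. Its core structure contains just one special class, called the singleton class. The pattern guarantees that a class has only one instance in the system and that the instance is easy for outside code to reach, which makes it easy to control the number of instances and saves system resources. If you want only one object of a certain class to exist in the system, the singleton pattern is a good solution.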
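The two styles named in the tags can be sketched in Python as follows. The original article targets Java and its code is not shown in the snippet, so the class names and the double-checked locking here are illustrative assumptions only.

```python
import threading


class LazySingleton:
    """Lazy style: the single instance is created on first use."""

    _instance = None
    _lock = threading.Lock()

    def __new__(cls):
        if cls._instance is None:          # fast path, no lock needed
            with cls._lock:                # only one thread may create it
                if cls._instance is None:  # re-check inside the lock
                    cls._instance = super().__new__(cls)
        return cls._instance


class EagerSingleton:
    """Eager style: the instance is created when the module is loaded."""

    def __init__(self):
        self.settings = {}


EAGER_INSTANCE = EagerSingleton()  # built once, at import time

if __name__ == "__main__":
    print(LazySingleton() is LazySingleton())  # True
```

The lazy variant defers the cost until the object is needed but requires a lock for thread safety; the eager variant is simpler but pays the cost up front.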


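- logback

zhb8015

log, logback

1. Introduction to logback

Logback is another open-source logging component designed by the founder of log4j. Logback is currently divided into three modules: logback-core, logback-classic, and logback-access. logback-core is the foundation for the other two modules. logback-classic is an improved version of log4j. In addition, logback-class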

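- Integrating Kafka with Spark Streaming: code examples and challenges

Stark_Summer

spark, storm, zookeeper, PARALLELISM, processing

The author, Michael G. Noll, is an engineer and researcher based in Switzerland who works at Verisign, where he is the technical lead for the large-scale data analytics infrastructure (built on Hadoop) at Verisign Labs. In this article Michael demonstrates in detail how to integrate Kafka into Spark Streaming. Along the way he also covers the current state of integrating Kafka with Spark Streaming, which makes it well worth reading, although some of the information, as of Spark version 1.2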

- spring-master-slave-commondao

王新春

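DAO, spring, dataSource, slave, master

Internet-facing web projects share one trait: high request concurrency. Database operations are the most time-consuming part of a request, so they are the top priority for system optimization.

For this reason a one-master, multiple-replica database architecture is often set up. The web DAO layer must make sure that writes go to the master and reads go to the replicas. Of course, in some requests, to avoid data inconsistency caused by replication lag, some reads must also go to the master. (This kind of requirement is generally handled by splitting the business vertically; for example, on the machines where the order-placement code is deployed, reads should also come from the master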
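The routing rule described above (writes to the master, reads spread over the replicas, with an escape hatch that sends a read to the master) can be sketched as below. This is a language-neutral illustration in Python, not the Spring DAO from the article; `RoutingDao`, `pick`, and `force_master` are hypothetical names, and plain strings stand in for data sources.

```python
import itertools


class RoutingDao:
    """Choose a data source per operation: master for writes, replicas for reads."""

    def __init__(self, master, replicas):
        self._master = master
        # Round-robin over the replicas; fall back to the master if there are none.
        self._replicas = itertools.cycle(replicas) if replicas else None

    def pick(self, is_write, force_master=False):
        # Writes always go to the master. Reads that cannot tolerate
        # replication lag can request the master explicitly.
        if is_write or force_master or self._replicas is None:
            return self._master
        return next(self._replicas)


if __name__ == "__main__":
    dao = RoutingDao("master", ["replica-1", "replica-2"])
    print(dao.pick(is_write=True))
    print(dao.pick(is_write=False))
    print(dao.pick(is_write=False, force_master=True))
```

Round-robin is just the simplest balancing policy; a real DAO layer might weight replicas or check their health before choosing one.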