Kubernetes Getting Started Series: Deploying a K8S Cluster with kubeadm (Part 2)

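Three deployment methods officially provided by Kubernetes

- minikube

Minikube is a tool that quickly runs a single-node Kubernetes locally. It is intended only for users trying out Kubernetes or for day-to-day development.

- kubeadm

Kubeadm is a tool that provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster.

- Binary packages

Recommended. Download the release binary packages from the official site and deploy each component manually to form a Kubernetes cluster. Download URL: https://github.com/kubernetes/kubernetes/releases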

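I. Quickly deploying a K8S cluster with kubeadm

kubeadm helps us deploy a Kubernetes cluster quickly. It is designed to give new users a simple way to start trying out Kubernetes.

The tool can deploy a Kubernetes cluster with two commands:

# Create a Master node

$ kubeadm init

# Join a Node to the cluster

$ kubeadm join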

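1. Installation requirements

Before starting, the machines for the Kubernetes cluster must meet the following conditions:

- One or more machines running a deb/rpm-compatible operating system, such as Ubuntu or CentOS

- Hardware: 2 GB or more of RAM, 2 or more CPUs, 30 GB or more of disk

- Full network connectivity between all machines in the cluster

- Internet access, which is needed to pull images

- Swap disabled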
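The hardware and swap requirements above can be checked with a few lines of shell before installing anything. The sketch below is illustrative only: the function names are made up for this example, and the memory threshold is set a little under 2048 MiB on the assumption that a nominal 2 GB machine reports slightly less in MemTotal.

```shell
# Hypothetical pre-flight helpers; names and thresholds are illustrative.

# Succeeds when the CPU count and memory (in MiB) meet the minimums.
# 1700 MiB is an assumed threshold for a nominal "2 GB" machine.
meets_minimums() {
    local cpus="$1" mem_mib="$2"
    [ "$cpus" -ge 2 ] && [ "$mem_mib" -ge 1700 ]
}

# Succeeds when a /proc/swaps-style file lists no active swap device.
# The first line of /proc/swaps is a header, so only later lines count.
swap_is_off() {
    local swaps_file="${1:-/proc/swaps}"
    awk 'NR > 1 { n++ } END { exit (n > 0) }' "$swaps_file"
}

# Check the current host and print a warning for each unmet requirement.
check_host() {
    local cpus mem_mib
    cpus="$(nproc)"
    mem_mib="$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)"
    meets_minimums "$cpus" "$mem_mib" || echo "WARN: need at least 2 CPUs and about 2 GB of RAM"
    swap_is_off || echo "WARN: swap is still enabled"
}
```

Running check_host on each machine prints nothing when the CPU, memory, and swap conditions are met.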

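2. Learning goals

- Install Docker and kubeadm on all nodes

- Deploy the Kubernetes Master

- Deploy a container network plugin

- Deploy the Kubernetes Nodes and join them to the cluster

- Deploy the Dashboard web UI to view Kubernetes resources visually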

3. Prepare the environment

| IP | Role | Installed software |
|---|---|---|
| 192.168.199.100 | k8s-master | kube-apiserver, kube-scheduler, kube-controller-manager, docker, flannel, kubelet |
| 192.168.199.101 | k8s-node01 | kubelet, kube-proxy, docker, flannel |
| 192.168.199.102 | k8s-node02 | kubelet, kube-proxy, docker, flannel |

Disable the firewall

# systemctl stop firewalld && systemctl disable firewalld

Disable SELinux

# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config && setenforce 0

Disable swap

# swapoff -a    # temporary, until the next reboot

# vim /etc/fstab    # permanent

/dev/mapper/centos-swap swap swap defaults 0 0    # delete this line or comment it out
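The permanent step can also be done without opening an editor. The following is a minimal sketch, assuming the swap entry is an ordinary fstab line with a whitespace-delimited swap field. disable_swap_in_fstab is a hypothetical helper name, and it is worth rehearsing it on a copy of the file first.

```shell
# Comment out every active swap entry in an fstab-style file.
# The path is a parameter so the edit can be tried on a copy first.
disable_swap_in_fstab() {
    local fstab="${1:-/etc/fstab}"
    # Prefix "#" to non-comment lines that contain a whitespace-delimited
    # "swap" field; the original file is kept next to it with a .bak suffix.
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage (as root):
# swapoff -a && disable_swap_in_fstab /etc/fstab
```

Lines that are already commented out do not match the pattern, so running the helper twice leaves the file unchanged.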

Add the hostname-to-IP mappings (typically in /etc/hosts):

192.168.199.100 k8s-master

192.168.199.101 k8s-node01

192.168.199.102 k8s-node02
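Since the same three entries are needed on every machine, they can be appended with a small script instead of by hand. This is a sketch under the assumption that the mappings live in /etc/hosts; add_host_entries is a hypothetical helper, not part of any tool used here.

```shell
# Append "IP hostname" lines to a hosts file, skipping lines already present.
add_host_entries() {
    local hosts_file="$1"
    shift
    local entry
    for entry in "$@"; do
        # -x matches the whole line, -F treats the entry as a literal string.
        grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
    done
}

# Usage on each node (as root):
# add_host_entries /etc/hosts \
#     "192.168.199.100 k8s-master" \
#     "192.168.199.101 k8s-node01" \
#     "192.168.199.102 k8s-node02"
```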

Pass bridged IPv4 traffic to the iptables chains

# cat > /etc/sysctl.d/k8s.conf <...
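The contents written to /etc/sysctl.d/k8s.conf are cut off in the command above. A commonly used version of this step is sketched below. The two keys are the usual kubeadm bridge-netfilter prerequisites and are an assumption about the missing text, and write_bridge_sysctl is a hypothetical helper name.

```shell
# Write the bridge-netfilter settings to a sysctl drop-in file.
# The two keys are assumed; they are the settings usually applied for kubeadm.
write_bridge_sysctl() {
    local conf="${1:-/etc/sysctl.d/k8s.conf}"
    cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage (as root):
# modprobe br_netfilter    # the net.bridge.* keys exist only while this module is loaded
# write_bridge_sysctl
# sysctl --system          # reload all sysctl configuration files
```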

Configure the Aliyun yum source (switching CentOS 7 to the Aliyun mirror)

1. Back up the existing repo file

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

2. Download the new CentOS-Base.repo into /etc/yum.repos.d/

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

3. Build the cache

yum makecache

IV. Install Docker, kubeadm, and kubelet on all nodes

4.1 Install Docker

# wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo    # configure the Aliyun Docker repo

# yum -y install docker-ce-18.06.1.ce-3.el7

# systemctl start docker && systemctl enable docker

# docker --version

Docker version 18.06.1-ce, build e68fc7a

Docker registry mirror (image pull acceleration)

# cat /etc/docker/daemon.json <...

4.2 Configure the cgroup driver type

Docker supports two cgroup drivers: cgroupfs and systemd.

1. Check which driver Docker is using: # docker info | grep -i cgroup

2. Edit the unit file: vim /usr/lib/systemd/system/docker.service

#ExecStart=/usr/bin/dockerd

ExecStart=/usr/bin/dockerd --exec-opt native.cgroupdriver=systemd

3. Apply the change from the previous step

systemctl daemon-reload && systemctl restart docker
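After the restart it is worth confirming that the change took effect before moving on. The sketch below reads the driver through the Go-template output of docker info; the two function names are hypothetical and exist only for this example.

```shell
# Print the cgroup driver Docker is currently using.
docker_cgroup_driver() {
    docker info --format '{{.CgroupDriver}}'
}

# Fail with a message when the driver is not systemd.
require_systemd_driver() {
    local driver
    driver="$(docker_cgroup_driver)"
    if [ "$driver" != "systemd" ]; then
        echo "Docker cgroup driver is '$driver', expected 'systemd'" >&2
        return 1
    fi
}

# Usage:
# require_systemd_driver && echo "cgroup driver OK"
```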

4.3 Add the Aliyun Kubernetes yum repository

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

# yum makecache

# yum repolist
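When the same repository file has to be created on all three machines, a heredoc avoids editing it by hand in vim. This sketch writes exactly the contents listed above; write_k8s_repo is a hypothetical helper name.

```shell
# Write the Kubernetes repository definition without opening an editor.
# The path is a parameter so the output can be inspected elsewhere first.
write_k8s_repo() {
    local repo_file="${1:-/etc/yum.repos.d/kubernetes.repo}"
    cat > "$repo_file" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# Usage (as root):
# write_k8s_repo && yum makecache
```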

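4.4 Install kubeadm, kubelet, and kubectl (on all nodes)

Run this on every host. Because new releases are frequent, a specific version is pinned here.

# yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0    # install the three components

# systemctl enable kubelet    # start kubelet at boot

4.5 Deploy the Kubernetes master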

kubeadm init --kubernetes-version=1.15.0 --apiserver-advertise-address=192.168.199.100 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

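Because the default image registry k8s.gcr.io is not reachable from mainland China, the Aliyun image repository is specified here.

--kubernetes-version is the Kubernetes version to deploy.

--apiserver-advertise-address is the IP of the master node.

--service-cidr is the virtual IP range used for Services.

--pod-network-cidr defines the Pod network range (10.244.0.0/16 matches the default in the flannel manifest). This range does not need to exist on your physical network, because it is a virtual network internal to k8s.

PS: kubeadm init fails easily. If it does, run kubeadm reset to reset the node, then run kubeadm init again.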
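One way to make kubeadm init less fragile is to pull the control-plane images beforehand, which kubeadm supports through kubeadm config images pull. The sketch below reuses the version and image repository from the init command; prepull_images is a hypothetical wrapper name.

```shell
# Pre-pull the control-plane images so that `kubeadm init` does not stall
# on downloads. Defaults mirror the values passed to `kubeadm init`.
prepull_images() {
    local version="${1:-v1.15.0}"
    local repo="${2:-registry.aliyuncs.com/google_containers}"
    kubeadm config images pull \
        --kubernetes-version "$version" \
        --image-repository "$repo"
}

# Usage on the master (as root), before `kubeadm init`:
# prepull_images
```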

Output:

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.1.0.1 192.168.4.34]

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.4.34 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.4.34 127.0.0.1 ::1]

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

...... (omitted)

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.73.138:6443 --token 2nm5l9.jtp4zwnvce4yt4oj \

--discovery-token-ca-cert-hash sha256:12f628a21e8d4a7262f57d4f21bc85f8802bb717dd6f513bf9d33f254fea3e89

Follow the instructions in the output:

# mkdir -p $HOME/.kube

# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# sudo chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl get node    # the master node is listed (NotReady until the Pod network add-on is installed)

NAME STATUS ROLES AGE VERSION

kube-master NotReady master 3m59s v1.15.0

4.6 Install the Pod network add-on

# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

# ps -ef|grep flannel    # check that the flannel process is running

Output of the install:

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

4.7 Join the Kubernetes Nodes

To add a new node to the cluster, run kubeadm join --token <token> <master-ip>:<master-port> --discovery-token-ca-cert-hash sha256:<hash>

[Important] Run the following on both Node machines:

kubeadm join 192.168.199.100:6443 --token yfm5zq.xmox8q37dy3voqv9 \

--discovery-token-ca-cert-hash sha256:3796f8c41843cf87970b868871eb669e4464732c149c563a1ba9b91e62c4c829
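Bootstrap tokens expire after 24 hours by default, so the join command printed by kubeadm init may no longer work when a node is added later. The sketch below shows two ways to recover it on the master: asking kubeadm for a fresh command, or recomputing the CA certificate hash with openssl. The function names are hypothetical, and the certificate path is the one shown in the init output.

```shell
# Print a fresh, complete `kubeadm join` command (run on the master).
new_join_command() {
    kubeadm token create --print-join-command
}

# Recompute the hex digest used after "sha256:" in
# --discovery-token-ca-cert-hash from a CA certificate.
ca_cert_hash() {
    local ca="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -in "$ca" -noout \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage on the master (as root):
# new_join_command
# echo "sha256:$(ca_cert_hash)"
```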

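4.8 Check the cluster node status

Check the status of the cluster nodes. After the network add-on is installed, continue with the later steps only once every node shows Ready, as below.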

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 6h45m v1.15.0

k8s-node01 Ready <none> 5h29m v1.15.0

k8s-node02 Ready <none> 5h26m v1.15.0

kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-czwf7 1/1 Running 1 6h45m

coredns-bccdc95cf-dxrwq 1/1 Running 1 6h45m

etcd-k8s-master 1/1 Running 0 6h45m

kube-apiserver-k8s-master 1/1 Running 1 6h45m

kube-controller-manager-k8s-master 1/1 Running 11 6h46m

kube-flannel-ds-amd64-8xl56 1/1 Running 0 5h27m

kube-flannel-ds-amd64-cqzqz 1/1 Running 0 5h31m

kube-flannel-ds-amd64-cxc42 1/1 Running 0 6h1m

kube-proxy-77w5c 1/1 Running 0 6h45m

kube-proxy-lrxqr 1/1 Running 0 5h31m

kube-proxy-nzdf4 1/1 Running 0 5h27m

kube-scheduler-k8s-master 1/1 Running 11 6h46m

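Continue with the later steps only when every pod shows 1/1. If the flannel pods are not ready, check the network and redo the following:

kubectl delete -f kube-flannel.yml

Then download kube-flannel.yml again with wget, change the image address in it, and run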

kubectl apply -f kube-flannel.yml
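With a dozen pods in kube-system it is easy to miss one that is not at 1/1. The following sketch filters the kubectl listing down to the pods that still need attention; not_ready_pods is a hypothetical helper, and it assumes the default column layout (NAME, READY, STATUS, ...) shown in the listing of kube-system pods.

```shell
# Read `kubectl get pod --no-headers` output on stdin and print the names of
# pods whose READY column is not "n/n" or whose STATUS is not Running.
not_ready_pods() {
    awk '{ split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") print $1 }'
}

# Usage:
# kubectl get pod -n kube-system --no-headers | not_ready_pods
```

An empty result means every pod in the namespace is running with all of its containers ready.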

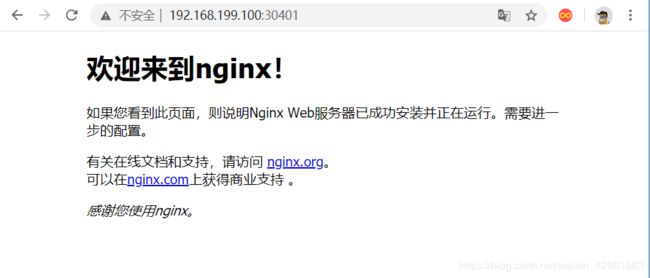

4.10 Test the K8S cluster

Create a pod in the Kubernetes cluster, expose its port, and verify that it is reachable:

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@k8s-master ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-554b9c67f9-wf5lm 1/1 Running 0 24s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 39m

service/nginx NodePort 10.1.224.251 <none> 80:32039/TCP 9s

Access URL: http://NodeIP:Port. In this example: http://192.168.199.100:32039
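The NodePort is assigned by the cluster, so it will usually differ from 32039 on another installation. The sketch below looks the port up with a jsonpath query and builds the URL from it; service_url is a hypothetical helper, and it assumes the service has a single port, as the nginx service here does.

```shell
# Look up the NodePort assigned to a service and print the URL to test.
service_url() {
    local node_ip="$1" svc="${2:-nginx}"
    local port
    port="$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')"
    echo "http://${node_ip}:${port}"
}

# Usage:
# curl -I "$(service_url 192.168.199.100)"
```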

4.11 Dashboard

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

[root@k8s-master ~]# kubectl create -f kubernetes-dashboard.yaml

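Generate the login token

The binding below refers to a dashboard-admin service account in kube-system, so create that account first:

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secret -n kube-system dashboard-admin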
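The describe output contains the token among other fields. To print only the token, for example to paste it into the Dashboard login page, something like the following can be used. It is a sketch that assumes a dashboard-admin service account exists in kube-system and that the cluster creates a token Secret for each service account, as Kubernetes 1.15 does; sa_token is a hypothetical helper name.

```shell
# Print the bearer token of a service account.
# Assumes the account's token Secret is listed in .secrets[0].name.
sa_token() {
    local ns="$1" sa="$2" secret
    secret="$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}')"
    # Secret data is base64-encoded, so decode it before printing.
    kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 --decode
}

# Usage:
# sa_token kube-system dashboard-admin
```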