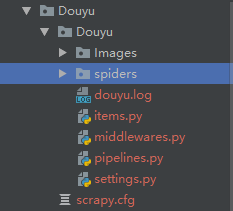

Python Crawler: Using the Scrapy Framework to Fetch Douyu Image Information

Below is the main spider logic. It is quite simple:

```python
# coding:utf-8
import json

import scrapy

from ..items import DouyuItem


class DouyuSpider(scrapy.Spider):
    name = "douyu"
    allowed_domains = ["douyucdn.cn"]
    base_url = "http://capi.douyucdn.cn/api/v1/getVerticalRoom?limit=100&offset="
    offset = 0
    start_urls = [base_url + str(offset)]

    def parse(self, response):
        data_list = json.loads(response.body)['data']

        # Stop if the response contains no data (we have paged past the last room)
        if not data_list:
            return

        for data in data_list:
            item = DouyuItem()
            # str() guards against the API returning room_id as a number
            item['room_link'] = "http://www.douyu.com/" + str(data['room_id'])
            item['image_src'] = data['vertical_src']
            item['nick_name'] = data['nickname']
            item['anchor_city'] = data['anchor_city']

            # Request the image URL, pass nick_name through meta,
            # and handle the response in the parse_image callback
            yield scrapy.Request(item['image_src'], meta={"name": item['nick_name']}, callback=self.parse_image)

            # Method 2 (DouyuImagesPipeline below): yield item instead of the
            # request above and let the image pipeline download the file

        # Request the next page of 100 rooms
        self.offset += 100
        yield scrapy.Request(self.base_url + str(self.offset), callback=self.parse)

    # Method 1 (generic): request the resource URL directly, then write
    # the response body to disk with open()
    def parse_image(self, response):
        filename = response.meta['name'] + ".jpg"

        # Save the image bytes in binary ("wb") mode
        with open(filename, "wb") as f:
            f.write(response.body)
```
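
The paging logic in `parse` (request 100 rooms at a time, stop when `data` comes back empty) can be tried without Scrapy or network access. The sketch below is a hypothetical offline stand-in, not the author's code: `fake_fetch` returns made-up JSON shaped like the `data` list the spider reads (only the fields the spider uses, with placeholder example.com image URLs), and `crawl` mirrors the offset loop and field mapping.

```python
import json

BASE_URL = "http://capi.douyucdn.cn/api/v1/getVerticalRoom?limit=100&offset="

# Hypothetical canned responses keyed by offset; the real API is never queried.
FAKE_PAGES = {
    0: {"data": [{"room_id": "1001", "vertical_src": "https://example.com/a.jpg",
                  "nickname": "alice", "anchor_city": "Wuhan"}]},
    100: {"data": [{"room_id": 1002, "vertical_src": "https://example.com/b.jpg",
                    "nickname": "bob", "anchor_city": "Shanghai"}]},
    200: {"data": []},  # empty page: the loop stops here
}


def fake_fetch(url):
    """Hypothetical stand-in for a download: returns the JSON body as bytes."""
    offset = int(url.rsplit("=", 1)[1])
    return json.dumps(FAKE_PAGES.get(offset, {"data": []})).encode("utf-8")


def crawl(fetch, limit=100):
    """Mirror DouyuSpider.parse: page by `limit` until `data` is empty."""
    items, offset = [], 0
    while True:
        data_list = json.loads(fetch(BASE_URL + str(offset)))["data"]
        if not data_list:
            return items
        for data in data_list:
            items.append({
                # str() handles room_id arriving as either a string or an int
                "room_link": "http://www.douyu.com/" + str(data["room_id"]),
                "image_src": data["vertical_src"],
                "nick_name": data["nickname"],
                "anchor_city": data["anchor_city"],
            })
        offset += limit


items = crawl(fake_fetch)
print([i["room_link"] for i in items])
# prints ['http://www.douyu.com/1001', 'http://www.douyu.com/1002']
```

Because the stop condition is "empty `data`", the spider needs no total-count field from the API; the trade-off is one extra request for the final empty page.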

Items (items.py)

```python
# -*- coding: utf-8 -*-
import scrapy


class DouyuItem(scrapy.Item):
    # Live room URL
    room_link = scrapy.Field()
    # Image URL
    image_src = scrapy.Field()
    # Streamer's nickname
    nick_name = scrapy.Field()
    # Streamer's city
    anchor_city = scrapy.Field()
    # Path of the saved image on disk
    image_path = scrapy.Field()
```

Pipelines (pipelines.py)
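
`DouyuImagesPipeline` imports `IMAGES_STORE` from the project's settings.py, and Scrapy only calls pipelines that are registered in `ITEM_PIPELINES`; the post does not show settings.py. A minimal sketch, assuming the project package is named `Douyu` (hypothetical) and images go to a local `./images/` directory (also an assumption). Note that Scrapy's `ImagesPipeline` also requires the Pillow library to be installed.

```python
# settings.py (sketch). "Douyu" is a hypothetical project package name;
# adjust the dotted path to match your own project.
ITEM_PIPELINES = {
    "Douyu.pipelines.DouyuImagesPipeline": 300,
}

# Directory where ImagesPipeline stores downloads (assumed path).
IMAGES_STORE = "./images/"
```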

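```python
# -*- coding: utf-8 -*-
import os
import logging

import scrapy
from scrapy.exceptions import DropItem
from scrapy.pipelines.images import ImagesPipeline

from .settings import IMAGES_STORE


class DouyuImagesPipeline(ImagesPipeline):

    def get_media_requests(self, item, info):
        # Request each image; the downloaded file is saved automatically
        # under the IMAGES_STORE directory configured in settings.py
        yield scrapy.Request(item['image_src'])

    def item_completed(self, results, item, info):
        # results is a list of (success, info) tuples; on success,
        # info['path'] is relative to IMAGES_STORE (e.g. "full/<sha1>.jpg")
        paths = [x['path'] for ok, x in results if ok]
        if not paths:
            raise DropItem("Image download failed: %s" % item['image_src'])

        # os.path.join works whether or not IMAGES_STORE ends with "/"
        old_name = os.path.join(IMAGES_STORE, paths[0])
        new_name = os.path.join(IMAGES_STORE, item['nick_name'] + ".jpg")
        try:
            # Rename the hash-named file after the streamer's nickname
            os.rename(old_name, new_name)
            item['image_path'] = new_name
        except OSError as e:
            logging.error(e)

        return item


class DouyuPipeline(object):

    def process_item(self, item, spider):
        return item
```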
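
The renaming step in `item_completed` depends on the shape of `results`: a list of `(success, info)` tuples where, on success, `info['path']` is the file path relative to `IMAGES_STORE`. The sketch below reproduces only that step with the standard library, using a temporary directory and a fabricated `results` list in place of a real download. `rename_downloaded` is a hypothetical helper, not part of Scrapy.

```python
import os
import tempfile


def rename_downloaded(store, results, nick_name):
    """Rename the first successfully downloaded file to <nick_name>.jpg.

    Mirrors DouyuImagesPipeline.item_completed; returns the new path,
    or None when no download succeeded.
    """
    paths = [x["path"] for ok, x in results if ok]
    if not paths:
        return None
    old_name = os.path.join(store, paths[0])
    new_name = os.path.join(store, nick_name + ".jpg")
    os.rename(old_name, new_name)
    return new_name


with tempfile.TemporaryDirectory() as store:
    # Simulate ImagesPipeline output: a hash-named file under full/
    os.makedirs(os.path.join(store, "full"))
    hashed = os.path.join("full", "0a1b2c.jpg")
    with open(os.path.join(store, hashed), "wb") as f:
        f.write(b"fake-jpeg-bytes")

    ok_results = [(True, {"url": "https://example.com/a.jpg", "path": hashed})]
    new_path = rename_downloaded(store, ok_results, "alice")
    renamed_exists = os.path.exists(new_path)
    original_gone = not os.path.exists(os.path.join(store, hashed))

    # In Scrapy a failed download carries a Failure object; any object works here
    failed_results = [(False, Exception("download failed"))]
    failed_path = rename_downloaded(store, failed_results, "bob")

print(renamed_exists, original_gone, failed_path)  # prints True True None
```

Filtering on `ok` before indexing is what keeps a failed download from raising `IndexError`; the pipeline above turns that case into a `DropItem` instead of returning `None`.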

Run results