Building an Index with logstash-input-jdbc

Building an Index from Elasticsearch and MySQL

# Define the index structure for shops

PUT /shop

{

"settings": {

"number_of_shards": 1,

"number_of_replicas": 1

},

"mappings": {

"properties": {

// Identifier field id; kept consistent with the database where possible, carries no business meaning

"id": {

"type": "integer"

},

// Shop name, the main search field; indexed with ik_max_word and searched with ik_smart

"name": {

"type": "text",

"analyzer": "ik_max_word",

"search_analyzer": "ik_smart"

},

// Tags field; it also serves business needs, so it is typed as text and simply split on whitespace

"tags": {

"type": "text",

"analyzer": "whitespace",

"fielddata": true

},

// Latitude/longitude field, used to identify the geographic location

"location": {

"type": "geo_point"

},

// Shop rating; takes part in recommendation ranking

"remark_score": {

"type": "double"

},

// Average spend per person at the shop

"price_per_man": {

"type": "integer"

},

// Category id

"category_id": {

"type": "integer"

},

// Category name: only a redundant (denormalized) field, so it is typed as keyword

"category_name": {

"type": "keyword"

},

// Seller id

"seller_id": {

"type": "integer"

},

// Seller rating

"seller_remark_score": {

"type": "double"

},

// Whether the seller is disabled

"seller_disabled_flag": {

"type": "integer"

}

}

}

}
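The `//` annotations in the request body above are explanatory only; strict JSON parsers reject comments, so a client that sends the body programmatically needs a comment-free version. A minimal sketch, assuming Python and only the standard library, builds the same mapping as a dict and serializes it. The function name `build_shop_index_body` is hypothetical, and actually sending the body (for example as an HTTP `PUT` to `/shop`) is left out.

```python
import json


def build_shop_index_body():
    """Return the settings and mappings for the shop index as a plain dict."""
    properties = {
        "id": {"type": "integer"},
        # Main search field: ik_max_word at index time, ik_smart at search time.
        "name": {
            "type": "text",
            "analyzer": "ik_max_word",
            "search_analyzer": "ik_smart",
        },
        # Space-separated tags.
        "tags": {"type": "text", "analyzer": "whitespace", "fielddata": True},
        "location": {"type": "geo_point"},
        "remark_score": {"type": "double"},
        "price_per_man": {"type": "integer"},
        "category_id": {"type": "integer"},
        "category_name": {"type": "keyword"},
        "seller_id": {"type": "integer"},
        "seller_remark_score": {"type": "double"},
        "seller_disabled_flag": {"type": "integer"},
    }
    return {
        "settings": {"number_of_shards": 1, "number_of_replicas": 1},
        "mappings": {"properties": properties},
    }


if __name__ == "__main__":
    # Prints valid, comment-free JSON that can be pasted or sent as the request body.
    print(json.dumps(build_shop_index_body(), indent=2))
```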

Index construction

# Download logstash-7.3.0 and extract it into the target directory

[yangqi@yankee software]$ tar -zvxf logstash-7.3.0.tar.gz -C ../apps

[yangqi@yankee software]$ cd ../apps

[yangqi@yankee apps]$ cd logstash-7.3.0

[yangqi@yankee logstash-7.3.0]$ ./bin/logstash-plugin install logstash-input-jdbc

If the installation fails, try the following:

[yangqi@yankee logstash-7.3.0]$ sudo yum -y install gem

# After installation, switch to a mirror hosted in China (ruby-china)

[yangqi@yankee logstash-7.3.0]$ gem sources --add https://gems.ruby-china.com/ --remove https://rubygems.org/

# Use gem sources -l to check the configured sources

[yangqi@yankee logstash-7.3.0]$ gem sources -l

# Once the source has been replaced, go into logstash-7.3.0 and edit the Gemfile

[yangqi@yankee logstash-7.3.0]$ vi Gemfile

# Change line 4 to: source "https://gems.ruby-china.com"

# Install the logstash-input-jdbc plugin again

[yangqi@yankee logstash-7.3.0]$ ./bin/logstash-plugin install logstash-input-jdbc

Validating logstash-input-jdbc

Installing logstash-input-jdbc

Installation successful

# The output above indicates that the installation succeeded

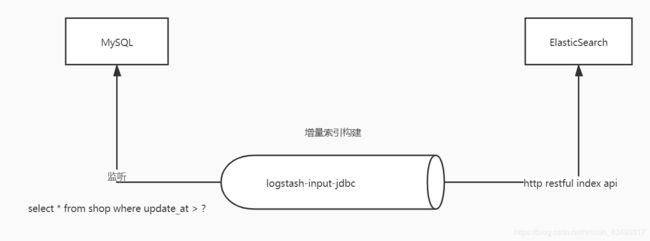

Workflow diagram of a full update with logstash-input-jdbc:

Full index build

SQL data used for the exercise

/*

Navicat Premium Data Transfer

Source Server : MySQL

Source Server Type : MySQL

Source Server Version : 50727

Source Host : localhost:3306

Source Schema : recommendedsystem

Target Server Type : MySQL

Target Server Version : 50727

File Encoding : 65001

Date: 29/02/2020 12:53:56

*/

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------

-- Table structure for category

-- ----------------------------

DROP TABLE IF EXISTS `category`;

CREATE TABLE `category` (

`id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'auto-increment id',

`created_at` datetime(0) NOT NULL COMMENT 'creation time',

`updated_at` datetime(0) NOT NULL COMMENT 'update time',

`name` varchar(20) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'category name',

`icon_url` varchar(200) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'category image URL',

`sort` int(11) NOT NULL DEFAULT 0 COMMENT 'category sort order',

PRIMARY KEY (`id`) USING BTREE,

UNIQUE INDEX `name_unique_index`(`name`) USING BTREE COMMENT 'unique index on category name'

) ENGINE = InnoDB AUTO_INCREMENT = 9 CHARACTER SET = utf8 COLLATE = utf8_unicode_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of category

-- ----------------------------

INSERT INTO `category` VALUES (1, '2019-06-10 15:33:37', '2019-06-10 15:33:37', '美食', '/static/image/firstpage/food_u.png', 99);

INSERT INTO `category` VALUES (2, '2019-06-10 15:34:34', '2019-06-10 15:34:34', '酒店', '/static/image/firstpage/snack_u.png', 98);

INSERT INTO `category` VALUES (3, '2019-06-10 15:36:36', '2019-06-10 15:36:36', '休闲娱乐', '/static/image/firstpage/bar_o.png', 97);

INSERT INTO `category` VALUES (4, '2019-06-10 15:37:09', '2019-06-10 15:37:09', '结婚', '/static/image/firstpage/jiehun.png', 96);

INSERT INTO `category` VALUES (5, '2019-06-10 15:37:31', '2019-06-10 15:37:31', '足疗按摩', '/static/image/firstpage/zuliao.png', 96);

INSERT INTO `category` VALUES (6, '2019-06-10 15:37:49', '2019-06-10 15:37:49', 'KTV', '/static/image/firstpage/ktv_u.png', 95);

INSERT INTO `category` VALUES (7, '2019-06-10 15:38:14', '2019-06-10 15:38:14', '景点', '/static/image/firstpage/jingdian.png', 94);

INSERT INTO `category` VALUES (8, '2019-06-10 15:38:35', '2019-06-10 15:38:35', '丽人', '/static/image/firstpage/liren.png', 93);

-- ----------------------------

-- Table structure for seller

-- ----------------------------

DROP TABLE IF EXISTS `seller`;

CREATE TABLE `seller` (

`id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'auto-increment id',

`name` varchar(80) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'seller name',

`created_at` datetime(0) NOT NULL COMMENT 'registration time',

`updated_at` datetime(0) NOT NULL COMMENT 'update time',

`remark_score` decimal(2, 1) NOT NULL DEFAULT 0.0 COMMENT 'rating',

`disabled_flag` int(11) NOT NULL DEFAULT 0 COMMENT 'enabled/disabled flag',

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 27 CHARACTER SET = utf8 COLLATE = utf8_unicode_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of seller

-- ----------------------------

INSERT INTO `seller` VALUES (1, '江苏和府餐饮管理有限公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 2.5, 0);

INSERT INTO `seller` VALUES (2, '北京烤鸭有限公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 2.0, 0);

INSERT INTO `seller` VALUES (3, '合肥食品制造有限公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 2.6, 0);

INSERT INTO `seller` VALUES (4, '青岛啤酒厂', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 0.9, 0);

INSERT INTO `seller` VALUES (5, '杭州轻食有限公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 3.0, 0);

INSERT INTO `seller` VALUES (6, '九竹食品加工公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 5.0, 0);

INSERT INTO `seller` VALUES (7, '奔潮食品加工公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 2.7, 0);

INSERT INTO `seller` VALUES (8, '百沐食品加工公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 2.0, 0);

INSERT INTO `seller` VALUES (9, '韩蒂衣食品加工公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 1.5, 0);

INSERT INTO `seller` VALUES (10, '城外食品加工公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 1.8, 0);

INSERT INTO `seller` VALUES (11, '雪兔食品加工公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 4.6, 0);

INSERT INTO `seller` VALUES (12, '琳德食品公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 5.0, 0);

INSERT INTO `seller` VALUES (13, '深圳市盛华莲蓉食品厂', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 0.7, 0);

INSERT INTO `seller` VALUES (14, '桂林聚德苑食品有限公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 5.0, 0);

INSERT INTO `seller` VALUES (15, '天津达瑞仿真蛋糕模型厂', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 1.7, 0);

INSERT INTO `seller` VALUES (16, '上海镭德杰喷码技术有限公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 5.0, 0);

INSERT INTO `seller` VALUES (17, '凯悦饭店集团', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 3.0, 0);

INSERT INTO `seller` VALUES (18, '卡尔森环球酒店公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 3.1, 0);

INSERT INTO `seller` VALUES (19, '喜达屋酒店集团', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 0.2, 0);

INSERT INTO `seller` VALUES (20, '最佳西方国际集团', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 3.8, 0);

INSERT INTO `seller` VALUES (21, '精品国际饭店公司', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 0.2, 0);

INSERT INTO `seller` VALUES (22, '希尔顿集团', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 1.7, 0);

INSERT INTO `seller` VALUES (23, '雅高集团', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 1.8, 0);

INSERT INTO `seller` VALUES (24, '万豪国际集团', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 4.1, 0);

INSERT INTO `seller` VALUES (25, '胜腾酒店集团', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 3.0, 0);

INSERT INTO `seller` VALUES (26, '洲际酒店集团', '2020-02-24 00:04:30', '2020-02-24 00:04:30', 2.8, 0);

-- ----------------------------

-- Table structure for shop

-- ----------------------------

DROP TABLE IF EXISTS `shop`;

CREATE TABLE `shop` (

`id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'auto-increment id',

`created_at` datetime(0) NOT NULL COMMENT 'creation time',

`updated_at` datetime(0) NOT NULL COMMENT 'update time',

`name` varchar(80) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'shop name',

`remark_score` decimal(2, 1) NOT NULL DEFAULT 0.0 COMMENT 'rating',

`price_per_man` int(11) NOT NULL DEFAULT 0 COMMENT 'average price per person',

`latitude` decimal(10, 6) NOT NULL DEFAULT 0.000000 COMMENT 'latitude',

`longitude` decimal(10, 6) NOT NULL DEFAULT 0.000000 COMMENT 'longitude',

`category_id` int(11) NOT NULL DEFAULT 0 COMMENT 'category id',

`tags` varchar(2000) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'tags',

`start_time` varchar(200) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'shop opening time',

`end_time` varchar(200) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'shop closing time',

`address` varchar(200) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'shop address',

`seller_id` int(11) NOT NULL DEFAULT 0 COMMENT 'seller id',

`icon_url` varchar(100) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'shop image',

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 17 CHARACTER SET = utf8 COLLATE = utf8_unicode_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of shop

-- ----------------------------

INSERT INTO `shop` VALUES (1, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '和府捞面(正大乐城店)', 4.9, 156, 31.195341, 120.915855, 1, '新开业 人气爆棚', '10:00', '22:00', '船厂路36号', 1, '/static/image/shopcover/xchg.jpg');

INSERT INTO `shop` VALUES (2, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '和府捞面(飞洲国际店)', 0.4, 79, 31.189323, 121.443550, 1, '强烈推荐要点小食', '10:00', '22:00', '零陵路899号', 1, '/static/image/shopcover/zoocoffee.jpg');

INSERT INTO `shop` VALUES (3, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '和府捞面(百脑汇店)', 4.7, 101, 31.189323, 121.443550, 1, '有大桌 有WIFI', '10:00', '22:00', '漕溪北路339号', 1, '/static/image/shopcover/six.jpg');

INSERT INTO `shop` VALUES (4, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '花悦庭果木烤鸭', 2.0, 152, 31.306419, 121.524878, 1, '落地大窗 有WIFI', '11:00', '21:00', '翔殷路1099号', 2, '/static/image/shopcover/yjbf.jpg');

INSERT INTO `shop` VALUES (5, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '德泰丰北京烤鸭', 2.3, 187, 31.305236, 121.519875, 1, '五花肉味道', '11:00', '21:00', '邯郸路国宾路路口', 2, '/static/image/shopcover/jbw.jpg');

INSERT INTO `shop` VALUES (6, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '烧肉居酒屋', 2.3, 78, 31.306419, 121.524878, 1, '有包厢', '11:00', '21:00', '翔殷路1099号', 4, '/static/image/shopcover/mwsk.jpg');

INSERT INTO `shop` VALUES (7, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '西界', 4.7, 100, 31.309411, 121.515074, 1, '帅哥多', '11:00', '21:00', '大学路246号', 4, '/static/image/shopcover/lsy.jpg');

INSERT INTO `shop` VALUES (8, '2020-02-24 00:04:30', '2020-02-24 00:04:30', 'LAVA酒吧', 2.0, 152, 31.308370, 121.521360, 1, '帅哥多', '11:00', '21:00', '淞沪路98号', 4, '/static/image/shopcover/jtyjj.jpg');

INSERT INTO `shop` VALUES (9, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '凯悦酒店', 2.2, 176, 31.306172, 121.525843, 2, '落地大窗', '11:00', '21:00', '国定东路88号', 17, '/static/image/shopcover/dfjzw.jpg');

INSERT INTO `shop` VALUES (10, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '凯悦嘉轩酒店', 0.5, 182, 31.196742, 121.322846, 2, '自助餐', '11:00', '21:00', '申虹路9号', 17, '/static/image/shopcover/secretroom09.jpg');

INSERT INTO `shop` VALUES (11, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '新虹桥凯悦酒店', 1.0, 74, 31.156899, 121.238362, 2, '自助餐', '11:00', '21:00', '沪青平公路2799弄', 17, '/static/image/shopcover/secretroom08.jpg');

INSERT INTO `shop` VALUES (12, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '凯悦咖啡(新建西路店)', 2.0, 71, 30.679819, 121.651921, 1, '有包厢', '11:00', '21:00', '南桥环城西路665号', 17, '/static/image/shopcover/secretroom07.jpg');

INSERT INTO `shop` VALUES (13, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '上海虹桥元希尔顿酒店', 4.5, 96, 31.193517, 121.401270, 2, '2019年上海必住酒店', '11:00', '21:00', '红松东路1116号', 22, '/static/image/shopcover/secretroom06.jpg');

INSERT INTO `shop` VALUES (14, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '国家会展中心希尔顿欢朋酒店', 1.7, 176, 30.953049, 121.053774, 2, '高档', '11:00', '21:00', '华漕镇盘阳路59弄', 22, '/static/image/shopcover/secretroom05.jpg');

INSERT INTO `shop` VALUES (15, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '上海绿地万豪酒店', 4.1, 187, 31.197688, 121.479098, 2, '高档', '11:00', '21:00', '江滨路99号', 23, '/static/image/shopcover/secretroom04.jpg');

INSERT INTO `shop` VALUES (16, '2020-02-24 00:04:30', '2020-02-24 00:04:30', '上海宝华万豪酒店', 3.0, 163, 31.285934, 121.452481, 2, '高档', '11:00', '21:00', '广中西路333号', 23, '/static/image/shopcover/secretroom03.jpg');

-- ----------------------------

-- Table structure for user

-- ----------------------------

DROP TABLE IF EXISTS `user`;

CREATE TABLE `user` (

`id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'auto-increment id',

`create_at` datetime(0) NOT NULL COMMENT 'creation time',

`update_at` datetime(0) NOT NULL COMMENT 'update time',

`telphone` varchar(40) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'phone number',

`password` varchar(200) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'password',

`nick_name` varchar(40) CHARACTER SET utf8 COLLATE utf8_unicode_ci NOT NULL DEFAULT '' COMMENT 'nickname',

`gender` int(11) NOT NULL DEFAULT 0 COMMENT 'gender',

PRIMARY KEY (`id`) USING BTREE,

UNIQUE INDEX `telphone_unique_index`(`telphone`) USING BTREE COMMENT 'unique index on telphone'

) ENGINE = InnoDB AUTO_INCREMENT = 7 CHARACTER SET = utf8 COLLATE = utf8_unicode_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of user

-- ----------------------------

INSERT INTO `user` VALUES (4, '2020-02-23 12:24:11', '2020-02-23 12:24:11', '17764776377', '4QrcOUm6Wau+VuBX8g+IPg==', 'xiaoer', 1);

INSERT INTO `user` VALUES (6, '2020-02-23 14:08:01', '2020-02-23 14:08:01', '13891496906', '4QrcOUm6Wau+VuBX8g+IPg==', 'xiaoer', 2);

SET FOREIGN_KEY_CHECKS = 1;

Use logstash-input-jdbc to import all of the data in MySQL into Elasticsearch and build the index.

# Go into logstash-7.3.0/bin and create a mysql directory to hold the SQL statement and the MySQL import configuration

[yangqi@yankee bin]$ mkdir mysql

# Copy the mysql-connector-java jar into the mysql directory

# Create jdbc.conf with the following content:

[yangqi@yankee mysql]$ vi jdbc.conf

========================================================================

input {

jdbc {

# MySQL connection string; recommendedsystem is the database name

jdbc_connection_string => "jdbc:mysql://localhost:3306/recommendedsystem"

# Username and password

jdbc_user => "root"

jdbc_password => "xiaoer"

# JDBC driver jar

jdbc_driver_library => "/opt/apps/logstash-7.3.0/bin/mysql/mysql-connector-java-5.1.34.jar"

# Driver class name

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_paging_enabled => "true"

jdbc_page_size => "50000"

# Path and file name of the SQL file to execute

statement_filepath => "/opt/apps/logstash-7.3.0/bin/mysql/jdbc.sql"

# Polling schedule in cron syntax; fields from left to right: minute, hour, day of month, month, day of week. All * means run every minute

schedule => "* * * * *"

}

}

output {

elasticsearch {

# Elasticsearch IP address and port

hosts => ["192.168.21.89:9200"]

# Index name

index => "shop"

document_type => "_doc"

# Document ID: the query result must include an id column, which is used as the document id in the index

document_id => "%{id}"

}

stdout {

# Output as JSON lines

codec => json_lines

}

}

========================================================================
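Because `schedule` re-runs the full query every minute, the same rows are sent to Elasticsearch again and again. Setting `document_id => "%{id}"` keeps this safe: a document indexed under an existing id replaces the earlier one rather than adding a duplicate. The sketch below imitates that behavior with an in-memory dict; `FakeIndex` and `run_full_import` are hypothetical stand-ins for illustration, not an Elasticsearch client or part of Logstash.

```python
class FakeIndex:
    """Hypothetical stand-in for an index: documents keyed by their id."""

    def __init__(self):
        self.docs = {}

    def index(self, doc_id, source):
        # Same id -> the stored document is replaced, not duplicated.
        self.docs[doc_id] = dict(source)


def run_full_import(index, rows):
    """Send every row, using the row's id column as the document id."""
    for row in rows:
        index.index(str(row["id"]), row)


rows = [
    {"id": 1, "name": "和府捞面(正大乐城店)", "remark_score": 4.9},
    {"id": 9, "name": "凯悦酒店", "remark_score": 2.2},
]

shop = FakeIndex()
run_full_import(shop, rows)  # first scheduled run
run_full_import(shop, rows)  # second run, one minute later
print(len(shop.docs))        # still 2 documents
```

Without a stable document id, each run would add a fresh copy of every row, which is why the query has to select an `id` column.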

# Create jdbc.sql with the following content:

[yangqi@yankee mysql]$ vi jdbc.sql

========================================================================

select a.id,a.name,a.tags,concat(a.latitude,',',a.longitude) as location,a.remark_score,a.price_per_man,a.category_id,b.name as category_name,a.seller_id,c.remark_score as seller_remark_score,c.disabled_flag as seller_disabled_flag from shop a inner join category b on a.category_id = b.id inner join seller c on c.id = a.seller_id

========================================================================
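To see what each imported document looks like, the join can be tried on a tiny sample. The sketch below is an illustration under stated assumptions: it uses Python's built-in `sqlite3` instead of MySQL, replaces `concat()` with SQLite's `||` operator, stores latitude and longitude as text to keep the output exact, and keeps only the columns the query reads. The point is the `location` column, which comes out as a `"lat,lon"` string, one of the formats a `geo_point` field accepts.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE category (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE seller (id INTEGER PRIMARY KEY, remark_score REAL, disabled_flag INTEGER);
    -- latitude/longitude are TEXT here only to keep the printed value exact.
    CREATE TABLE shop (
        id INTEGER PRIMARY KEY, name TEXT, tags TEXT,
        latitude TEXT, longitude TEXT, remark_score REAL,
        price_per_man INTEGER, category_id INTEGER, seller_id INTEGER
    );
    INSERT INTO category VALUES (1, '美食');
    INSERT INTO seller VALUES (1, 2.5, 0);
    INSERT INTO shop VALUES (1, '和府捞面(正大乐城店)', '新开业 人气爆棚',
                             '31.195341', '120.915855', 4.9, 156, 1, 1);
    """
)
conn.row_factory = sqlite3.Row

QUERY = """
SELECT a.id, a.name, a.tags,
       a.latitude || ',' || a.longitude AS location,  -- SQLite form of concat()
       a.remark_score, a.price_per_man, a.category_id,
       b.name AS category_name, a.seller_id,
       c.remark_score AS seller_remark_score,
       c.disabled_flag AS seller_disabled_flag
FROM shop a
INNER JOIN category b ON a.category_id = b.id
INNER JOIN seller c ON c.id = a.seller_id
"""

row = dict(conn.execute(QUERY).fetchone())
print(row["location"])       # 31.195341,120.915855
print(row["category_name"])  # 美食
```

The column aliases match the property names in the index mapping, so each result row maps onto one document without any renaming step in between.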

# Start logstash

[yangqi@yankee logstash-7.3.0]$ ./bin/logstash -f bin/mysql/jdbc.conf

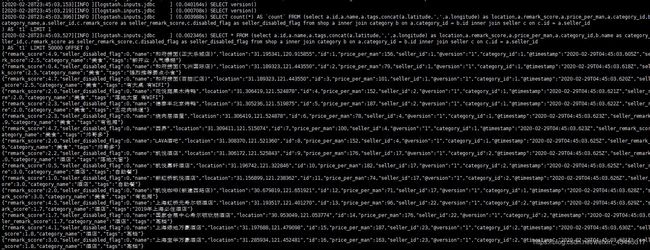

Log output like the following indicates that the import succeeded:

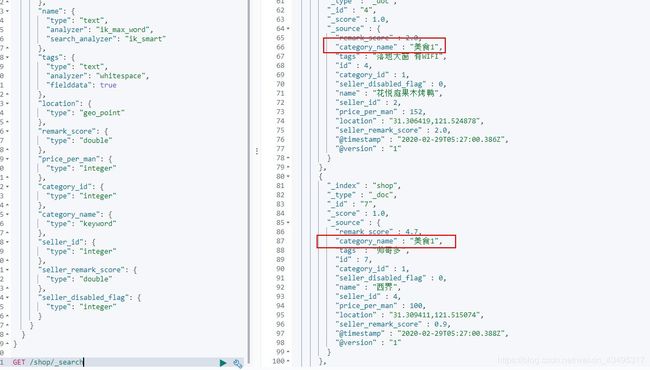

View the contents of the shop index in Kibana:

GET /shop/_search

Workflow diagram of an incremental update with logstash-input-jdbc:

Incremental index build

Change jdbc.conf in the logstash-7.3.0/bin/mysql directory to the following:

input {

jdbc {

# MySQL connection string; recommendedsystem is the database name

jdbc_connection_string => "jdbc:mysql://localhost:3306/recommendedsystem"

# Username and password

jdbc_user => "root"

jdbc_password => "xiaoer"

# JDBC driver jar

jdbc_driver_library => "/opt/apps/logstash-7.3.0/bin/mysql/mysql-connector-java-5.1.34.jar"

# Driver class name

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_paging_enabled => "true"

jdbc_page_size => "50000"

last_run_metadata_path => "/opt/apps/logstash-7.3.0/bin/mysql/last_value_meta"

# Path and file name of the SQL file to execute

statement_filepath => "/opt/apps/logstash-7.3.0/bin/mysql/jdbc.sql"

# Polling schedule in cron syntax; fields from left to right: minute, hour, day of month, month, day of week. All * means run every minute

schedule => "* * * * *"

}

}

output {

elasticsearch {

# Elasticsearch IP address and port

hosts => ["192.168.21.89:9200"]

# Index name

index => "shop"

document_type => "_doc"

# Document ID: the query result must include an id column, which is used as the document id in the index

document_id => "%{id}"

}

stdout {

# Output as JSON lines

codec => json_lines

}

}
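The `schedule` value in the configuration above is a cron expression with five fields. As a quick reading aid, the sketch below labels each field of a standard five-field expression; `describe_schedule` is a hypothetical helper written for this note and is not part of Logstash.

```python
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_schedule(expression):
    """Label the five fields of a standard cron expression."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError("expected 5 fields, got %d" % len(parts))
    return dict(zip(CRON_FIELDS, parts))


# Every field is a wildcard: the query runs every minute.
print(describe_schedule("* * * * *"))
# In standard cron syntax a minute field of "*/5" means every five minutes.
print(describe_schedule("*/5 * * * *"))
```

For a large table, a wider interval than every minute reduces how often the join is executed against MySQL.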

Change jdbc.sql in the logstash-7.3.0/bin/mysql directory to the following:

select a.id,a.name,a.tags,concat(a.latitude,',',a.longitude) as location,a.remark_score,a.price_per_man,a.category_id,b.name as category_name,a.seller_id,c.remark_score as seller_remark_score,c.disabled_flag as seller_disabled_flag from shop a inner join category b on a.category_id = b.id inner join seller c on c.id = a.seller_id where a.updated_at > :sql_last_value or b.updated_at > :sql_last_value or c.updated_at > :sql_last_value

Create a last_value_meta file in the logstash-7.3.0/bin/mysql directory with this initial content:

# Since this is the first test of the incremental index, start with a sufficiently early value

2011-11-11 11:11:11
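The incremental cycle can be sketched as follows. This is a simplified illustration, not the plugin's implementation: it assumes that `:sql_last_value` holds the time of the previous run and is refreshed after every run, it uses Python's built-in `sqlite3` with a single `shop` table instead of the three-table join, and the `state` dict plays the role of the `last_value_meta` file. `run_once` is a hypothetical name.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE shop (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT);
    INSERT INTO shop VALUES (1, 'shop-1', '2020-02-24 00:04:30');
    INSERT INTO shop VALUES (2, 'shop-2', '2020-02-24 00:04:30');
    """
)

QUERY = "SELECT id FROM shop WHERE updated_at > :sql_last_value ORDER BY id"


def run_once(state, now):
    """Run the query with the stored value, then record this run's time."""
    params = {"sql_last_value": state["sql_last_value"]}
    rows = conn.execute(QUERY, params).fetchall()
    state["sql_last_value"] = now
    return [r[0] for r in rows]


# Initial content of last_value_meta: early enough that every row qualifies.
state = {"sql_last_value": "2011-11-11 11:11:11"}

first = run_once(state, "2020-02-29 13:00:00")   # [1, 2]: everything is newer
second = run_once(state, "2020-02-29 13:01:00")  # []: nothing changed since
conn.execute(
    "UPDATE shop SET name = 'renamed', updated_at = '2020-02-29 13:01:30' WHERE id = 2"
)
third = run_once(state, "2020-02-29 13:02:00")   # [2]: only the modified row
print(first, second, third)
```

The sketch also shows why a change is only picked up when `updated_at` is modified along with the data: a row whose timestamp stays in the past never passes the filter.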

Restart logstash-7.3.0:

[yangqi@yankee logstash-7.3.0]$ ./bin/logstash -f ./bin/mysql/jdbc.conf

Manually modify a row in MySQL together with its updated_at field, then check the result again:

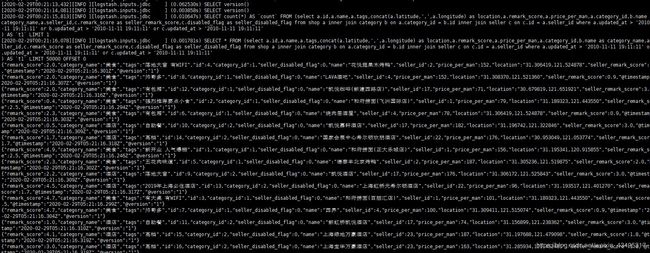

Console log output:

Check in Kibana whether the update succeeded:

Drawbacks

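Building the index with logstash-input-jdbc does propagate updated values from the MySQL database to Elasticsearch, which then builds the index. When the data volume is very large, however, both the synchronization and the index build take a long time.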