Python TensorFlow: Predicting Stock Prices with Gradient Descent

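The neural network in this example has three layers: an input layer, a hidden layer, and an output layer.

Input layer: feeds data into the network. This is where the inputs x and the targets y are prepared.

Hidden layer: transforms the input using weights w and biases b, followed by an activation function. Training adjusts w and b step by step to reduce the error between the prediction and the true value, so more training iterations generally lower the error on the training data.

Output layer: as the name suggests, it produces the network's result, here the predicted (normalized) closing price.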
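As a rough illustration of how data flows through these three layers, the NumPy sketch below runs one forward pass through a network with the same shape as the one built later: 1 input, 10 hidden ReLU units, and 1 output. The function name `forward` and the random initial weights are assumptions made for this example. No training happens here.

```python
import numpy as np

def forward(x, w1, b1, w2, b2):
    """Hypothetical helper: input layer -> hidden layer (ReLU) -> output layer (ReLU)."""
    hidden = np.maximum(0.0, x @ w1 + b1)      # hidden layer, shape [N, 10]
    return np.maximum(0.0, hidden @ w2 + b2)   # output layer, shape [N, 1]

rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 15).reshape(15, 1)  # 15 normalized dates, shape [15, 1]
w1 = rng.uniform(0.0, 1.0, size=(1, 10))       # illustrative random weights
b1 = np.zeros((1, 10))
w2 = rng.uniform(0.0, 1.0, size=(10, 1))
b2 = np.zeros((1, 1))

out = forward(x, w1, b1, w2, b2)
print(out.shape)  # (15, 1)
```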

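```python
import numpy as np
import matplotlib.pyplot as plt
# The script uses the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
# Importing through tf.compat.v1 keeps it runnable on TensorFlow 2.x as well.
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

date = np.linspace(1, 15, 15)  # trading days 1..15
endPrice = np.array([2511.90, 2538.26, 2510.68, 2591.66, 2732.98, 2701.69,
                     2701.29, 2678.67, 2726.50, 2681.50, 2739.17, 2715.07,
                     2823.58, 2864.90, 2919.08])    # closing prices
beginPrice = np.array([2438.71, 2500.88, 2534.95, 2512.52, 2594.04, 2743.26,
                       2697.47, 2695.24, 2678.23, 2722.13, 2674.93, 2744.13,
                       2717.46, 2832.73, 2877.40])  # opening prices

# Check the dates
print(date)

# Draw a simple candlestick-style chart
plt.figure()
for i in range(15):  # one bar per day, 15 days in total
    dayOne = np.zeros([2])  # each bar has two points: close and open
    dayOne[0] = date[i]     # same x values (1..15) as the prediction curve
    dayOne[1] = date[i]
    price = np.zeros([2])
    price[0] = endPrice[i]
    price[1] = beginPrice[i]
    if endPrice[i] > beginPrice[i]:
        plt.plot(dayOne, price, 'r', lw=8)  # red: closed above the open
    else:
        plt.plot(dayOne, price, 'g', lw=8)  # green: closed at or below the open
# plt.show()
```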
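The loop above decides each bar's color one day at a time. A vectorized sketch of the same rule (red when the close is above the open, green otherwise) using `np.where` is shown below. It only computes the colors from the same price data and does not draw anything.

```python
import numpy as np

endPrice = np.array([2511.90, 2538.26, 2510.68, 2591.66, 2732.98, 2701.69,
                     2701.29, 2678.67, 2726.50, 2681.50, 2739.17, 2715.07,
                     2823.58, 2864.90, 2919.08])
beginPrice = np.array([2438.71, 2500.88, 2534.95, 2512.52, 2594.04, 2743.26,
                       2697.47, 2695.24, 2678.23, 2722.13, 2674.93, 2744.13,
                       2717.46, 2832.73, 2877.40])

# 'r' where the close is above the open, 'g' otherwise (same rule as the loop)
colors = np.where(endPrice > beginPrice, 'r', 'g')
print(colors.tolist())
print(int((colors == 'r').sum()), "up days,", int((colors == 'g').sum()), "down days")
# 10 up days, 5 down days
```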

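```python
# A. Input layer
# Scale dates and prices to roughly [0, 1] so gradient descent behaves well
dateNormal = np.zeros([15, 1])
priceNormal = np.zeros([15, 1])
for i in range(15):
    dateNormal[i, 0] = i / 14.0               # day index 0..14 -> 0..1
    priceNormal[i, 0] = endPrice[i] / 3000.0  # closing price -> about 0.84..0.97
x = tf.placeholder(tf.float32, [None, 1])  # input: normalized date
y = tf.placeholder(tf.float32, [None, 1])  # target: normalized closing price
```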
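The normalization loop can also be written without a loop. The sketch below builds the same `dateNormal` and `priceNormal` values with vectorized NumPy. It then checks that multiplying by 3000, as the script does after prediction, recovers the original prices.

```python
import numpy as np

endPrice = np.array([2511.90, 2538.26, 2510.68, 2591.66, 2732.98, 2701.69,
                     2701.29, 2678.67, 2726.50, 2681.50, 2739.17, 2715.07,
                     2823.58, 2864.90, 2919.08])

# Same values the loop produces: day index 0..14 scaled to [0, 1], price divided by 3000
dateNormal = (np.arange(15) / 14.0).reshape(15, 1)
priceNormal = (endPrice / 3000.0).reshape(15, 1)

# Undo the price scaling, as the script does with pred * 3000 after training
restored = priceNormal * 3000.0

print(dateNormal[0, 0], dateNormal[-1, 0])    # 0.0 1.0
print(np.allclose(restored[:, 0], endPrice))  # True
```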

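```python
# B. Hidden layer: 1 input -> 10 neurons
w1 = tf.Variable(tf.random_uniform([1, 10], 0, 1))
b1 = tf.Variable(tf.zeros([1, 10]))
wb1 = tf.matmul(x, w1) + b1
layer1 = tf.nn.relu(wb1)  # activation function

# C. Output layer: 10 neurons -> 1 output
w2 = tf.Variable(tf.random_uniform([10, 1], 0, 1))
b2 = tf.Variable(tf.zeros([1, 1]))  # one bias for the single output unit, broadcast over the batch
wb2 = tf.matmul(layer1, w2) + b2
layer2 = tf.nn.relu(wb2)
```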
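An output bias of shape [15, 1] holds one value per training row. It therefore only fits a batch of exactly 15 rows and lets each day keep its own offset. A bias of shape [1, 1] is broadcast across any batch size, following the NumPy-style broadcasting that TensorFlow's `+` also uses. The NumPy check below illustrates the difference with a hypothetical batch of 3 rows.

```python
import numpy as np

layer1 = np.ones((3, 10))    # hypothetical hidden-layer output for a batch of 3 rows
w2 = np.ones((10, 1))
b2_ok = np.zeros((1, 1))     # one bias for the single output unit
b2_bad = np.zeros((15, 1))   # one bias per training row

ok_shape = (layer1 @ w2 + b2_ok).shape
print(ok_shape)  # (3, 1)

try:
    layer1 @ w2 + b2_bad     # (3, 1) + (15, 1): 3 and 15 cannot be broadcast
    bad_failed = False
except ValueError as err:
    bad_failed = True
    print("broadcast error:", err)
```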

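```python
# Loss: y is the true value, layer2 is the network's prediction (mean squared error)
loss = tf.reduce_mean(tf.square(y - layer2))
# Use gradient descent (learning rate 0.1) to minimize the loss
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)
```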
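On each `train_step`, `GradientDescentOptimizer(0.1)` updates every trainable variable θ with the rule θ ← θ − 0.1 × ∂loss/∂θ. The toy NumPy example below applies that rule by hand to a single weight w in the model y ≈ w·x, using the same mean-squared-error loss. The data (y = 2x) is made up for illustration, so w should approach 2.

```python
import numpy as np

# Toy data, assumed for illustration: y = 2x exactly, so the best weight is w = 2
x = np.array([0.0, 0.5, 1.0])
y = 2.0 * x

w = 0.0    # initial weight
lr = 0.1   # learning rate, same value as GradientDescentOptimizer(0.1)
losses = []
for step in range(200):
    pred = w * x
    losses.append(np.mean((y - pred) ** 2))  # mean squared error
    grad = np.mean(-2.0 * x * (y - pred))    # d(loss)/dw
    w -= lr * grad                           # gradient descent update
print(round(w, 4))  # 2.0
```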

```python
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for i in range(0, 10001):
        sess.run(train_step, feed_dict={x: dateNormal, y: priceNormal})
    # With the trained w1, b1, w2, b2, run the input through the network to get layer2
    pred = sess.run(layer2, feed_dict={x: dateNormal})

predPrice = pred * 3000  # undo the /3000 scaling to get prices back, shape [15, 1]
plt.plot(date, predPrice, 'b', lw=1)
plt.show()
```

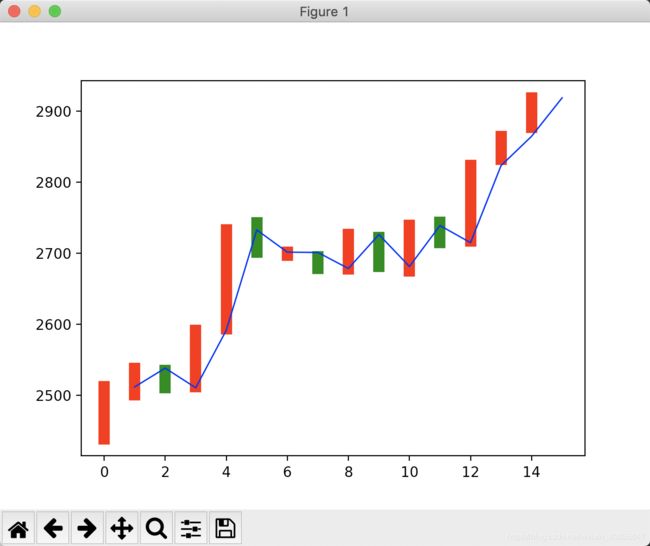

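Result

The blue line is the closing-price curve predicted by the trained neural network. The red and green bars are the daily candlesticks drawn from the opening and closing prices.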
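For readers without a TensorFlow 1.x setup, the same idea can be sketched in plain NumPy. The sketch uses:

- the same normalization;
- a 1-10-1 network with ReLU activations;
- mean-squared-error loss;
- full-batch gradient descent with learning rate 0.1 for 10001 steps.

The backward pass is written out by hand. This is an illustrative re-implementation, not the original code. The random seed and helper variables such as `losses` are assumptions for this sketch, and its fitted curve will not match the figure exactly.

```python
import numpy as np

endPrice = np.array([2511.90, 2538.26, 2510.68, 2591.66, 2732.98, 2701.69,
                     2701.29, 2678.67, 2726.50, 2681.50, 2739.17, 2715.07,
                     2823.58, 2864.90, 2919.08])

# Same normalization as the TensorFlow script
x = (np.arange(15) / 14.0).reshape(15, 1)   # normalized dates, shape [15, 1]
y = (endPrice / 3000.0).reshape(15, 1)      # normalized closing prices

# Same initialization scheme: uniform [0, 1) weights, zero biases (seed is an assumption)
rng = np.random.default_rng(0)
w1 = rng.uniform(0.0, 1.0, size=(1, 10))
b1 = np.zeros((1, 10))
w2 = rng.uniform(0.0, 1.0, size=(10, 1))
b2 = np.zeros((1, 1))
lr = 0.1

losses = []
for step in range(10001):
    # Forward pass: input -> hidden (ReLU) -> output (ReLU)
    z1 = x @ w1 + b1
    h = np.maximum(0.0, z1)
    z2 = h @ w2 + b2
    pred = np.maximum(0.0, z2)
    losses.append(float(np.mean((y - pred) ** 2)))

    # Backward pass: gradients of the mean squared error
    d_z2 = 2.0 * (pred - y) / len(x) * (z2 > 0)   # through the output ReLU
    d_w2 = h.T @ d_z2
    d_b2 = d_z2.sum(axis=0, keepdims=True)
    d_z1 = (d_z2 @ w2.T) * (z1 > 0)               # through the hidden ReLU
    d_w1 = x.T @ d_z1
    d_b1 = d_z1.sum(axis=0, keepdims=True)

    # Gradient descent update
    w1 -= lr * d_w1
    b1 -= lr * d_b1
    w2 -= lr * d_w2
    b2 -= lr * d_b2

predPrice = pred * 3000.0   # back to price units (from the last forward pass)
print(f"loss: first {losses[0]:.5f}, last {losses[-1]:.5f}")
print(np.round(predPrice[:, 0], 2))
```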