Java Crawler Project (Part 1: Crawling) (Scraping and Displaying Job Postings) WebMagic+MySQL+Echarts+IDEA

Part 1: Scraping job postings from 51job (前程无忧) with Jsoup+HttpClient

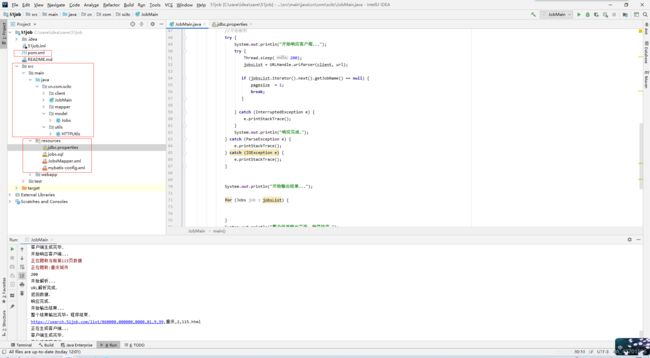

1. Project structure

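Create a Maven project in IDEA and then build it up following the steps below. Alternatively, unzip my source package and drag its contents into the directory of the project you just created.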

2. Add the dependencies to pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <packaging>war</packaging>
    <name>51job</name>
    <groupId>cn.com.scitc</groupId>
    <artifactId>51job</artifactId>
    <version>1.0-SNAPSHOT</version>

    <build>
        <plugins>
            <plugin>
                <groupId>org.mortbay.jetty</groupId>
                <artifactId>maven-jetty-plugin</artifactId>
                <version>6.1.7</version>
                <configuration>
                    <connectors>
                        <connector implementation="org.mortbay.jetty.nio.SelectChannelConnector">
                            <port>8888</port>
                            <maxIdleTime>30000</maxIdleTime>
                        </connector>
                    </connectors>
                    <webAppSourceDirectory>${project.build.directory}/${project.artifactId}-${project.version}</webAppSourceDirectory>
                    <contextPath>/</contextPath>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>8</source>
                    <target>8</target>
                </configuration>
            </plugin>
        </plugins>
    </build>

    <dependencies>
        <dependency>
            <groupId>commons-codec</groupId>
            <artifactId>commons-codec</artifactId>
            <version>1.11</version>
        </dependency>
        <dependency>
            <groupId>commons-logging</groupId>
            <artifactId>commons-logging</artifactId>
            <version>1.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpclient</artifactId>
            <version>4.5.9</version>
        </dependency>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpcore</artifactId>
            <version>4.4.11</version>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.12</version>
        </dependency>
        <dependency>
            <groupId>org.jsoup</groupId>
            <artifactId>jsoup</artifactId>
            <version>1.12.1</version>
        </dependency>
        <dependency>
            <groupId>org.mybatis</groupId>
            <artifactId>mybatis</artifactId>
            <version>3.5.1</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>8.0.16</version>
        </dependency>
    </dependencies>
</project>

3. Create the packages and classes shown in the project structure diagram

JobMain class

package cn.com.scitc;

import cn.com.scitc.client.URLHandle;
import cn.com.scitc.model.Jobs;
import org.apache.http.ParseException;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClientBuilder;

import java.io.IOException;
import java.util.List;

public class JobMain {

    public static void main(String[] args) throws IOException {
        // City names and their 51job area codes; the same index means the same city
        String[] city = {"重庆", "西安"};
        String[] value = {"060000", "200200"};

        System.out.println("Creating client...");
        // One client is reused for all requests and closed automatically at the end
        try (CloseableHttpClient client = HttpClientBuilder.create().build()) {
            System.out.println("Client created.");
            // Loop over the cities that are actually defined (a fixed bound such as 410 overruns the arrays)
            for (int num = 0; num < city.length; num++) {
                int pagesize = 1;
                while (true) {
                    // In 000000,0000,01,9,99 the "01" is the computer category.
                    // Search on 51job and watch how the URL in the address bar changes.
                    String url = "https://search.51job.com/list/" + value[num] + ",000000,0000,01,9,99,"
                            + city[num] + ",2," + pagesize + ".html";
                    System.err.println("Crawling page " + pagesize + " of city " + city[num]);
                    System.out.println(url);

                    List<Jobs> jobsList;
                    try {
                        Thread.sleep(200); // throttle the requests a little
                        jobsList = URLHandle.urlParser(client, url);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    } catch (ParseException | IOException e) {
                        e.printStackTrace();
                        break; // skip the rest of this city
                    }
                    // An empty list or a job without a name means there are no more pages
                    if (jobsList.isEmpty() || jobsList.get(0).getJobName() == null) {
                        break;
                    }
                    for (Jobs job : jobsList) {
                        System.out.println(job.getJobName() + " | " + job.getCompanyName() + " | " + job.getSalary());
                    }
                    pagesize++;
                }
            }
        }
        System.out.println("All results printed, program finished.");
    }
}
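The URL concatenation in JobMain can be pulled into a small helper, which makes it easier to add cities or change the page range. A minimal sketch, assuming the `list/{area},000000,0000,01,9,99,{keyword},2,{page}.html` layout used above is still what 51job serves (the site may have changed since); the class `SearchUrlSketch` and its methods are hypothetical names, and the keyword is left unencoded exactly as JobMain does.

```java
import java.util.ArrayList;
import java.util.List;

public class SearchUrlSketch {

    // Same pattern as JobMain; "01" is the computer category code (per the article)
    static String buildUrl(String areaCode, String keyword, int page) {
        if (page < 1) {
            throw new IllegalArgumentException("page numbers start at 1");
        }
        return "https://search.51job.com/list/" + areaCode
                + ",000000,0000,01,9,99," + keyword + ",2," + page + ".html";
    }

    // Pairs each city with its area code by index, like city[] and value[] in JobMain
    static List<String> firstPages(String[] cities, String[] areaCodes) {
        if (cities.length != areaCodes.length) {
            throw new IllegalArgumentException("every city needs exactly one area code");
        }
        List<String> urls = new ArrayList<>();
        for (int i = 0; i < cities.length; i++) {
            urls.add(buildUrl(areaCodes[i], cities[i], 1));
        }
        return urls;
    }

    public static void main(String[] args) {
        String[] cities = {"重庆", "西安"};
        String[] codes = {"060000", "200200"};
        for (String url : firstPages(cities, codes)) {
            System.out.println(url);
        }
    }
}
```

Checking the array lengths up front turns a mismatched `city`/`value` pair into a clear error instead of an `ArrayIndexOutOfBoundsException` in the middle of a crawl.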

JobParse class

package cn.com.scitc.client;

import cn.com.scitc.mapper.JobsMapper;
import cn.com.scitc.model.Jobs;
import org.apache.ibatis.io.Resources;
import org.apache.ibatis.session.SqlSession;
import org.apache.ibatis.session.SqlSessionFactory;
import org.apache.ibatis.session.SqlSessionFactoryBuilder;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;

import java.io.IOException;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.List;

public class JobParse {

    // Read mybatis-config.xml once and build the SqlSessionFactory a single time
    private static final SqlSessionFactory SQL_SESSION_FACTORY;

    static {
        try (InputStream inputStream = Resources.getResourceAsStream("mybatis-config.xml")) {
            SQL_SESSION_FACTORY = new SqlSessionFactoryBuilder().build(inputStream);
        } catch (IOException e) {
            throw new ExceptionInInitializerError(e);
        }
    }

    public static List<Jobs> getData(String entity) {
        List<Jobs> data = new ArrayList<>();
        Document doc = Jsoup.parse(entity);
        // Each posting is one div.el row, so create a separate Jobs object per row
        for (Element row : doc.select("div.el")) {
            Element title = row.select("p.t1 span a").first();
            if (title == null) {
                continue; // header row or an unrelated div.el
            }
            Jobs jobs = new Jobs();
            jobs.setJobName(title.text());
            jobs.setCompanyName(row.select("span.t2 a").text());
            jobs.setWorkAddr(row.select("span.t3").text());
            jobs.setSalary(row.select("span.t4").text());
            jobs.setPushDate(row.select("span.t5").text());
            jobs.setJobKey(row.select("p.t1 input.checkbox").attr("value"));
            data.add(jobs);
        }
        // Insert every job of this page in one session, commit once, then close the session
        try (SqlSession sqlSession = SQL_SESSION_FACTORY.openSession()) {
            JobsMapper jobsMapper = sqlSession.getMapper(JobsMapper.class);
            for (Jobs jobs : data) {
                jobsMapper.insert(jobs);
            }
            sqlSession.commit();
        }
        return data;
    }
}
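Why JobParse needs one Jobs object per row: if a single object is created before the loop and every matched row is written into it, each iteration overwrites the previous one and only the last posting on the page reaches the database. The stdlib-only sketch below reproduces that effect with a hypothetical `Row` type standing in for `Jobs`; `OnePerRowSketch`, `sharedObject` and `onePerRow` are illustrative names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class OnePerRowSketch {

    // Hypothetical stand-in for Jobs, reduced to one field
    static class Row {
        String jobName;
    }

    // Faulty pattern: one shared object, every iteration overwrites the last value
    static List<Row> sharedObject(List<String> names) {
        List<Row> data = new ArrayList<>();
        Row row = new Row();
        for (String name : names) {
            row.jobName = name;
        }
        data.add(row);
        return data;
    }

    // Correct pattern: a fresh object for each parsed row
    static List<Row> onePerRow(List<String> names) {
        List<Row> data = new ArrayList<>();
        for (String name : names) {
            Row row = new Row();
            row.jobName = name;
            data.add(row);
        }
        return data;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("Java Developer", "QA Engineer", "Ops Engineer");
        System.out.println("shared: " + sharedObject(names).size() + " row(s), last = " + sharedObject(names).get(0).jobName);
        System.out.println("per row: " + onePerRow(names).size() + " row(s)");
    }
}
```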

URLHandle class

package cn.com.scitc.client;

import cn.com.scitc.model.Jobs;
import cn.com.scitc.utils.HTTPUtils;
import org.apache.http.HttpResponse;
import org.apache.http.client.HttpClient;
import org.apache.http.util.EntityUtils;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

public class URLHandle {

    public static List<Jobs> urlParser(HttpClient client, String url) throws IOException {
        List<Jobs> data = new ArrayList<Jobs>();
        // Send the request and get the response
        HttpResponse response = HTTPUtils.getHtml(client, url);
        // Read the response status code
        int statusCode = response.getStatusLine().getStatusCode();
        System.out.println(statusCode);
        if (statusCode == 200) {
            // Page encoding: the 51job list pages are decoded as GBK
            String entity = EntityUtils.toString(response.getEntity(), "gbk");
            System.out.println("Parsing...");
            data = JobParse.getData(entity);
            System.out.println("URL parsed.");
        } else {
            // Release the response entity so the connection can be reused
            EntityUtils.consume(response.getEntity());
        }
        System.out.println("Returning data.");
        return data;
    }
}
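URLHandle decodes the body with `EntityUtils.toString(response.getEntity(), "gbk")` because the pages were GBK-encoded. The sketch below uses only `java.nio.charset` to show what happens when GBK bytes are decoded with the wrong charset; it assumes the JDK includes the GBK charset (most desktop JDK builds do), and `CharsetSketch` is a hypothetical class name. The Chinese test string is written as Unicode escapes so the result does not depend on the source-file encoding.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class CharsetSketch {

    // Encode text the way a GBK page would be sent over the wire
    static byte[] asGbk(String text) {
        return text.getBytes(Charset.forName("GBK"));
    }

    // Decode a raw body with a given charset, like the second argument of EntityUtils.toString
    static String decode(byte[] body, Charset charset) {
        return new String(body, charset);
    }

    public static void main(String[] args) {
        String city = "\u91cd\u5e86"; // "重庆"
        byte[] body = asGbk(city);
        System.out.println("as GBK:   " + decode(body, Charset.forName("GBK")));
        System.out.println("as UTF-8: " + decode(body, StandardCharsets.UTF_8)); // garbled text
    }
}
```

If the site ever switches encodings, the charset passed to `EntityUtils.toString` has to change with it, otherwise every job name stored in MySQL is garbled.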

JobsMapper interface

package cn.com.scitc.mapper;

import cn.com.scitc.model.Jobs;

import java.util.List;

public interface JobsMapper {

    void insert(Jobs jobs);

    List<Jobs> findAll();
}

Jobs class (tip: in IDEA, press Alt+Insert to generate the getters and setters)

package cn.com.scitc.model;

public class Jobs {

    private Integer jobId;       // auto-increment primary key
    private String jobName;      // job title
    private String companyName;  // company name
    private String workAddr;     // work location
    private String salary;       // salary
    private String pushDate;     // publish date
    private String jobKey;       // 51job posting ID (value of the row's checkbox)

    public Integer getJobId() {
        return jobId;
    }

    public void setJobId(Integer jobId) {
        this.jobId = jobId;
    }

    public String getJobName() {
        return jobName;
    }

    public void setJobName(String jobName) {
        this.jobName = jobName;
    }

    public String getCompanyName() {
        return companyName;
    }

    public void setCompanyName(String companyName) {
        this.companyName = companyName;
    }

    public String getWorkAddr() {
        return workAddr;
    }

    public void setWorkAddr(String workAddr) {
        this.workAddr = workAddr;
    }

    public String getSalary() {
        return salary;
    }

    public void setSalary(String salary) {
        this.salary = salary;
    }

    public String getPushDate() {
        return pushDate;
    }

    public void setPushDate(String pushDate) {
        this.pushDate = pushDate;
    }

    public String getJobKey() {
        return jobKey;
    }

    public void setJobKey(String jobKey) {
        this.jobKey = jobKey;
    }
}

HTTPUtils class

package cn.com.scitc.utils;

import org.apache.http.HttpResponse;
import org.apache.http.client.HttpClient;
import org.apache.http.client.methods.HttpGet;

import java.io.IOException;

public class HTTPUtils {

    /**
     * Fetches the response (the HTML page) with a GET request.
     * I/O errors propagate to the caller: a placeholder "200 OK" response
     * without a body would only make EntityUtils.toString fail later.
     */
    public static HttpResponse getHtml(HttpClient client, String url) throws IOException {
        HttpGet getMethod = new HttpGet(url);
        // Execute the GET request through the client
        return client.execute(getMethod);
    }
}

4. Create the configuration files in the resources folder

jdbc.properties

driver=com.mysql.cj.jdbc.Driver
url=jdbc:mysql://localhost:3306/job51?serverTimezone=UTC&autoReconnect=true&useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=CONVERT_TO_NULL&useSSL=false
username=root
password=123456

JobsMapper.xml

<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE mapper
        PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
        "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="cn.com.scitc.mapper.JobsMapper">
    <resultMap id="JobsMapperMap" type="cn.com.scitc.model.Jobs">
        <id column="job_id" property="jobId" jdbcType="INTEGER"/>
        <result column="job_name" property="jobName" jdbcType="VARCHAR"/>
        <result column="company_name" property="companyName" jdbcType="VARCHAR"/>
        <result column="work_addr" property="workAddr" jdbcType="VARCHAR"/>
        <result column="salary" property="salary" jdbcType="VARCHAR"/>
        <result column="push_date" property="pushDate" jdbcType="VARCHAR"/>
        <result column="job_key" property="jobKey" jdbcType="VARCHAR"/>
    </resultMap>

    <!-- keyProperty receives the auto-generated job_id after the insert -->
    <insert id="insert" useGeneratedKeys="true" keyProperty="jobId" keyColumn="job_id" parameterType="cn.com.scitc.model.Jobs">
        insert into jobs (job_name,company_name,work_addr,salary,push_date,job_key)
        values (#{jobName},#{companyName},#{workAddr},#{salary},#{pushDate},#{jobKey})
    </insert>

    <select id="findAll" resultMap="JobsMapperMap">
        SELECT * FROM jobs
    </select>
</mapper>
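In the insert statement above, MyBatis turns each `#{property}` into a JDBC `?` bind parameter of a `PreparedStatement` and fills it from the matching Jobs getter, which is why scraped text cannot inject SQL. The simplified sketch below imitates only that rewriting step with a regular expression; it is an illustration, not MyBatis's actual parser, and `BindParamSketch`/`bind` are hypothetical names. Options such as `jdbcType=...` inside the braces are skipped.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class BindParamSketch {

    // Matches #{name} and #{name,jdbcType=...}; group 1 is the property name
    private static final Pattern PARAM = Pattern.compile("#\\{\\s*([^,}\\s]+)[^}]*\\}");

    // Rewritten SQL plus the property names in bind order
    static final class Bound {
        final String sql;
        final List<String> properties;

        Bound(String sql, List<String> properties) {
            this.sql = sql;
            this.properties = properties;
        }
    }

    static Bound bind(String mapperSql) {
        List<String> properties = new ArrayList<>();
        Matcher m = PARAM.matcher(mapperSql);
        StringBuffer sql = new StringBuffer();
        while (m.find()) {
            properties.add(m.group(1));
            m.appendReplacement(sql, "?");
        }
        m.appendTail(sql);
        return new Bound(sql.toString(), properties);
    }

    public static void main(String[] args) {
        Bound b = bind("insert into jobs (job_name,salary) values (#{jobName},#{salary})");
        System.out.println(b.sql);        // insert into jobs (job_name,salary) values (?,?)
        System.out.println(b.properties); // [jobName, salary]
    }
}
```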

mybatis-config.xml

<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE configuration
        PUBLIC "-//mybatis.org//DTD Config 3.0//EN"
        "http://mybatis.org/dtd/mybatis-3-config.dtd">
<configuration>
    <properties resource="jdbc.properties"/>
    <environments default="development">
        <environment id="development">
            <transactionManager type="JDBC"/>
            <dataSource type="POOLED">
                <property name="driver" value="${driver}"/>
                <property name="url" value="${url}"/>
                <property name="username" value="${username}"/>
                <property name="password" value="${password}"/>
            </dataSource>
        </environment>
    </environments>
    <mappers>
        <mapper resource="JobsMapper.xml"/>
    </mappers>
</configuration>
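mybatis-config.xml does not hard-code the connection settings: `<properties resource="jdbc.properties">` loads the file, and each `${key}` in the `dataSource` block is replaced by the matching value. Unlike `#{}`, this is plain text substitution. The sketch below imitates it with `java.util.Properties`; `PlaceholderSketch`, `load` and `resolve` are hypothetical names, and unknown keys are left untouched on the assumption that this mirrors MyBatis's default behaviour.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class PlaceholderSketch {

    // Matches ${key}; group 1 is the key
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    // Parse key=value lines exactly as a .properties file is read
    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // Replace each ${key} with its value; unknown keys stay as they are
    static String resolve(String template, Properties props) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String value = props.getProperty(m.group(1), m.group(0));
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Properties props = load("driver=com.mysql.cj.jdbc.Driver\nusername=root\npassword=123456\n");
        System.out.println(resolve("<property name=\"driver\" value=\"${driver}\"/>", props));
    }
}
```

Keeping the credentials in jdbc.properties means that switching databases only touches that one file, as section 6 suggests.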

5. Database setup

Create a new database named job51.

Then run the SQL statements below, or simply drag the 51job.sql file from my project package into your database client.

/*
 Navicat Premium Data Transfer

 Source Server         : localhost
 Source Server Type    : MySQL
 Source Server Version : 80016
 Source Host           : localhost:3306
 Source Schema         : job51

 Target Server Type    : MySQL
 Target Server Version : 80016
 File Encoding         : 65001

 Date: 08/07/2019 10:27:49
*/

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for jobs
-- ----------------------------
DROP TABLE IF EXISTS `jobs`;
CREATE TABLE `jobs` (
  `job_id` int(15) NOT NULL AUTO_INCREMENT,
  `job_name` text CHARACTER SET utf8 COLLATE utf8_general_ci,
  `company_name` text CHARACTER SET utf8 COLLATE utf8_general_ci,
  `work_addr` text CHARACTER SET utf8 COLLATE utf8_general_ci,
  `salary` text CHARACTER SET utf8 COLLATE utf8_general_ci,
  `push_date` text CHARACTER SET utf8 COLLATE utf8_general_ci,
  `job_key` text CHARACTER SET utf8 COLLATE utf8_general_ci,
  PRIMARY KEY (`job_id`)
) ENGINE=InnoDB AUTO_INCREMENT=2341 DEFAULT CHARSET=utf8;

SET FOREIGN_KEY_CHECKS = 1;

The resulting table is shown in the figure.


Run the JobMain class; the output is shown below.

Then check the crawled data in the database.

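6. Notes: search for the positions you want on 51job yourself, watch how the URL in the address bar changes, and then adjust the settings in JobMain and jdbc.properties as described in the comments.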
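To adapt JobMain, you can copy a search URL from the address bar and read off its comma-separated fields. A hedged sketch, assuming the URL keeps the layout used in JobMain (area code first, category fourth, keyword seventh, page number last before `.html`); these positions are inferred from that single example, not from any 51job documentation, and `SearchUrlFields`/`parse` are hypothetical names. Any query string is dropped before splitting.

```java
public class SearchUrlFields {

    // Hypothetical holder for the values JobMain hard-codes
    static final class Fields {
        final String areaCode; // goes into value[] in JobMain, e.g. "060000"
        final String category; // "01" is the computer category, per the article
        final String keyword;  // goes into city[] in JobMain
        final int page;

        Fields(String areaCode, String category, String keyword, int page) {
            this.areaCode = areaCode;
            this.category = category;
            this.keyword = keyword;
            this.page = page;
        }
    }

    static Fields parse(String url) {
        // Drop any query string before splitting
        int query = url.indexOf('?');
        String path = query >= 0 ? url.substring(0, query) : url;
        String marker = "/list/";
        int start = path.indexOf(marker);
        if (start < 0 || !path.endsWith(".html")) {
            throw new IllegalArgumentException("not a 51job list URL: " + url);
        }
        String[] parts = path.substring(start + marker.length(), path.length() - ".html".length()).split(",");
        if (parts.length != 9) {
            throw new IllegalArgumentException("expected 9 comma-separated fields, got " + parts.length);
        }
        return new Fields(parts[0], parts[3], parts[6], Integer.parseInt(parts[8]));
    }

    public static void main(String[] args) {
        Fields f = parse("https://search.51job.com/list/200200,000000,0000,01,9,99,java,2,5.html");
        System.out.println(f.areaCode + " / " + f.category + " / " + f.keyword + " / page " + f.page);
    }
}
```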

Project source code download link:

https://download.csdn.net/download/weixin_43701595/12457577

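7. Reading the database with Echarts and displaying the data will be demonstrated in the next article. That part is already finished and the article is being written; if you can't wait, feel free to research it yourself and let me know what you find.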

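Java Crawler Project (Part 2: Display) (Scraping and Displaying Job Postings) WebMagic+MySQL+Echarts+IDEA

Part 2: Displaying the data in the MySQL database with Echarts

Java Crawler Project (Part 3: Crawler) (Scraping and Displaying Job Postings) WebMagic+MySQL+Echarts+IDEA

Part 3: Scraping 51job job postings with WebMagic

Java Crawler Project (Part 4: Visualization) (Scraping and Displaying Job Postings) WebMagic+MySQL+Echarts+IDEA

Part 4: Visualizing the job postings scraped from 51job with Echarts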