MFS + Pacemaker + Corosync + iSCSI + Fence

## Hot standby

1. Install the software on server4

rpm -ivh moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

vim /etc/hosts

172.25.30.1 server1 mfsmaster
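Because the same hosts entry has to be present on several machines, it can help to add it with a small idempotent helper instead of editing the file by hand. The following is a minimal sketch; the function name `add_host_entry` is hypothetical and not part of the original steps.

```shell
# add_host_entry FILE IP NAME...
# Appends the line "IP NAME..." to FILE unless that exact line is already there.
add_host_entry() {
    file=$1; ip=$2; shift 2
    # -x matches the whole line, -F treats the dots in the IP literally.
    if grep -qxF "$ip $*" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$*" >> "$file"
}

# Assumed usage (as root):
#   add_host_entry /etc/hosts 172.25.30.1 server1 mfsmaster
```

Running the helper twice leaves a single entry, so it is safe to repeat on every node.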

2. Modify the master startup unit on server1

# vim /usr/lib/systemd/system/moosefs-master.service

[Unit]

Description=MooseFS Master server

Wants=network-online.target

After=network.target network-online.target

[Service]

Type=forking

# Changed to -a so that the master can be started directly after an unclean shutdown

ExecStart=/usr/sbin/mfsmaster -a

ExecStop=/usr/sbin/mfsmaster stop

ExecReload=/usr/sbin/mfsmaster reload

PIDFile=/var/lib/mfs/.mfsmaster.lock

TimeoutStopSec=1800

TimeoutStartSec=1800

Restart=no

[Install]

WantedBy=multi-user.target

# systemctl daemon-reload ## reload the unit files
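Editing the unit under /usr/lib/systemd/system works, but a package update may overwrite that file. One alternative is a systemd drop-in that overrides only `ExecStart`. This is a sketch under assumptions: the helper name `write_master_dropin` is hypothetical, and the drop-in directory follows the usual systemd convention rather than anything in the original steps.

```shell
# write_master_dropin DIR
# Writes DIR/override.conf, replacing the packaged ExecStart with "mfsmaster -a".
write_master_dropin() {
    dir=$1
    mkdir -p "$dir" || return 1
    cat > "$dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the packaged command before the new one is set.
ExecStart=
ExecStart=/usr/sbin/mfsmaster -a
EOF
}

# Assumed usage on server1 (as root):
#   write_master_dropin /etc/systemd/system/moosefs-master.service.d
#   systemctl daemon-reload
```

The empty `ExecStart=` line matters: a `Type=forking` service accepts only one start command, so the packaged one has to be reset first.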

3. Configure the yum repositories on server1 and server4

vim /etc/yum.repos.d/dvd.repo

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.30.250/rhel7.3/addons/HighAvailability

gpgcheck=0

[ResilientStorage]

name=ResilientStorage

baseurl=http://172.25.30.250/rhel7.3/addons/ResilientStorage

gpgcheck=0
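Since both nodes need identical repository definitions, the two sections can also be generated from a script. This is only a sketch: the helper name `write_ha_repo` and the separate file name `ha.repo` are assumptions, chosen so that an existing dvd.repo is not overwritten.

```shell
# write_ha_repo FILE BASEURL
# Writes the HighAvailability and ResilientStorage repo sections to FILE.
write_ha_repo() {
    file=$1; base=$2
    cat > "$file" <<EOF
[HighAvailability]
name=HighAvailability
baseurl=$base/addons/HighAvailability
gpgcheck=0

[ResilientStorage]
name=ResilientStorage
baseurl=$base/addons/ResilientStorage
gpgcheck=0
EOF
}

# Assumed usage on server1 and server4 (as root):
#   write_ha_repo /etc/yum.repos.d/ha.repo http://172.25.30.250/rhel7.3
#   yum clean all && yum repolist
```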

4. Install the hot-standby software (on both server1 and server4)

yum install -y pacemaker corosync pcs

5. Set up passwordless SSH between server1 and server4 and start the pcsd service

server1:

ssh-keygen

ssh-copy-id server4

systemctl start pcsd

systemctl enable pcsd

passwd hacluster # set the user's password to redhat

server4:

ssh-keygen

ssh-copy-id server1

systemctl start pcsd

systemctl enable pcsd

passwd hacluster # set the user's password to redhat

6. Create and start the cluster

server1:

# pcs cluster auth server1 server4 # authenticate the nodes

Username: hacluster

Password:

server4: Authorized

server1: Authorized

# pcs cluster setup --name mycluster server1 server4 # set up the cluster

# pcs cluster start --all # start the cluster on all nodes

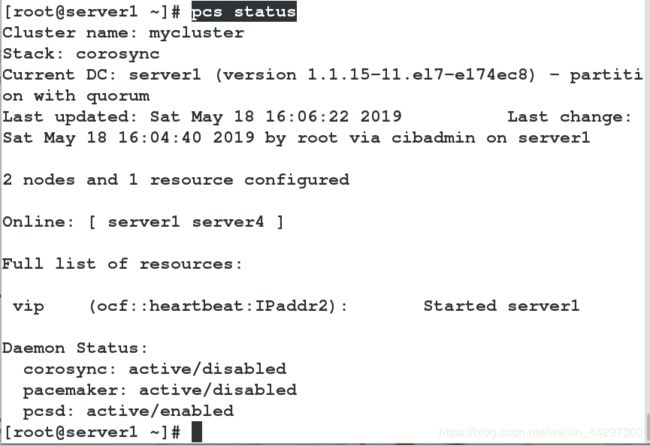

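# pcs status nodes # show all cluster nodes

# pcs status corosync # check the corosync status

7. Check for errors

server1 and server4:

# journalctl | grep -i error # filter errors from the journal

# crm_verify -L -V # run this as suggested by the error message

# pcs property set stonith-enabled=false # disable STONITH to clear the error (no fence device is configured yet)

# crm_verify -L -V # check again to confirm the error is resolved

# No error output means success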

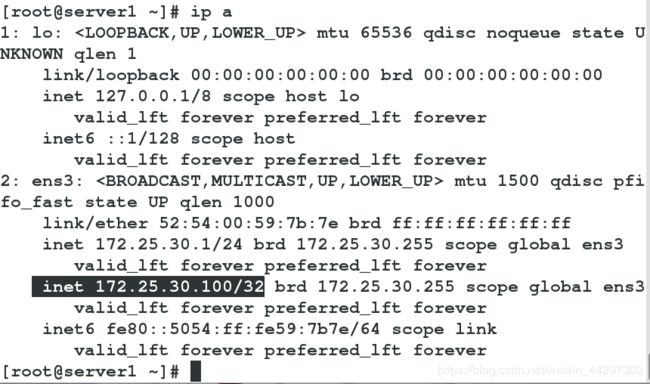

8. Add resources

server1:

pcs resource standards

pcs resource providers

pcs resource agents ocf:heartbeat

pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.30.100 cidr_netmask=32 op monitor interval=30s

# The command above creates the VIP

Monitoring:

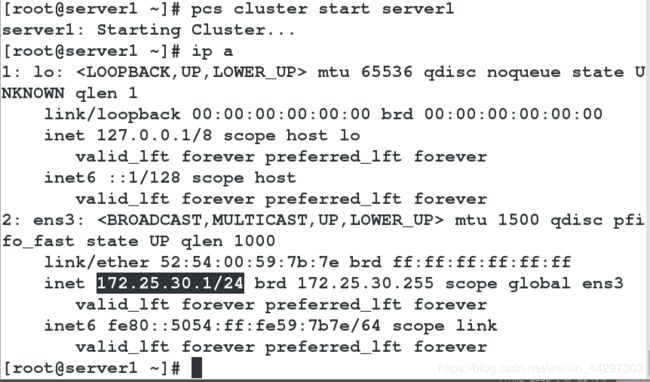
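Besides the cluster monitor, the node that currently holds the VIP can be identified from its interface addresses. The sketch below filters the output of `ip -o -4 addr show`; the helper name `has_ip` is hypothetical.

```shell
# has_ip ADDRESS
# Reads `ip -o -4 addr show` output on stdin; succeeds if ADDRESS is assigned.
has_ip() {
    # Matching " inet ADDRESS/" keeps 172.25.30.10 from matching 172.25.30.100.
    grep -qF " inet $1/"
}

# Assumed usage on either node:
#   ip -o -4 addr show | has_ip 172.25.30.100 && echo "VIP is on this node"
```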

9. Test

# pcs cluster stop server1 # stop the cluster services on server1

The VIP moves over to server4, which shows that high availability works.

# pcs cluster start server1 # start server1 again
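The VIP does not move instantly, so a scripted failover test usually has to poll for a short while. The following is a minimal retry loop for that purpose; the name `wait_until` and the one-second interval are assumptions.

```shell
# wait_until ATTEMPTS COMMAND [ARGS...]
# Runs COMMAND up to ATTEMPTS times, one second apart, until it succeeds.
wait_until() {
    remaining=$1; shift
    while ! "$@"; do
        remaining=$((remaining - 1))
        # Give up once the attempts are used up.
        [ "$remaining" -le 0 ] && return 1
        sleep 1
    done
    return 0
}

# Assumed usage on server4 after "pcs cluster stop server1":
#   wait_until 30 sh -c 'ip -o -4 addr show | grep -qF " inet 172.25.30.100/"' \
#       && echo "VIP arrived on server4"
```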

## iSCSI storage

1. Clean up the previous environment

Client:

umount /mnt/mfsmeta

Master:

systemctl stop moosefs-master

Chunkserver:

systemctl stop moosefs-chunkserver

2. Add name resolution on all hosts

# vim /etc/hosts

172.25.30.100 mfsmaster

3. Set up the iSCSI storage

server2:

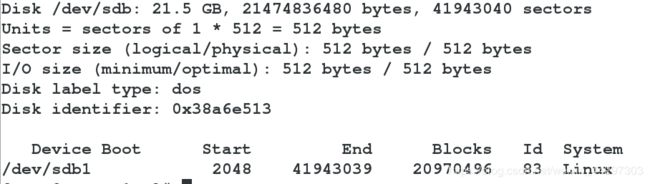

# fdisk -l

Disk /dev/vdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

# yum install targetcli -y

# targetcli

/> cd backstores/block

/backstores/block> create my_disk1 /dev/vdb

/backstores/block> cd /iscsi

/iscsi> create iqn.2019-04.com.example:mfs2

/iscsi> cd iqn.2019-04.com.example:mfs2/tpg1/luns

/iscsi/iqn.20...fs2/tpg1/luns> create /backstores/block/my_disk1

/iscsi/iqn.20...fs2/tpg1/luns> cd ../acls

/iscsi/iqn.20...fs2/tpg1/acls> create iqn.2019-04.com.example:client

/iscsi/iqn.20...fs2/tpg1/acls> exit

server1:

# yum install -y iscsi-*

# vim /etc/iscsi/initiatorname.iscsi # set the initiator name, which must match the ACL created on the target

InitiatorName=iqn.2019-04.com.example:client

# iscsiadm -m discovery -t st -p 172.25.30.2

172.25.30.2:3260,1 iqn.2019-04.com.example:mfs2

# systemctl restart iscsid

# iscsiadm -m node -l
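If the login fails, a common cause is that the initiator name on the client differs from the IQN entered under `acls` on the target. The following sketch compares the two; both function names are hypothetical.

```shell
# initiator_name FILE
# Prints the value of the InitiatorName= line in FILE.
initiator_name() {
    sed -n 's/^InitiatorName=//p' "$1"
}

# initiator_matches FILE EXPECTED_IQN
# Succeeds only when the configured name equals EXPECTED_IQN exactly.
initiator_matches() {
    [ "$(initiator_name "$1")" = "$2" ]
}

# Assumed usage on server1, with the IQN that was given to "acls> create":
#   initiator_matches /etc/iscsi/initiatorname.iscsi "$ACL_IQN" \
#       || echo "initiator name does not match the target ACL"
```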

Create a partition and format it

# fdisk /dev/sdb # create one partition

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p):

Using default response p

Partition number (1-4, default 1):

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039):

Using default value 41943039

Partition 1 of type Linux and of size 20 GiB is set

Command (m for help): w

# mkfs.xfs /dev/sdb1 # format the partition

[root@server1 ~]# mount /dev/sdb1 /mnt

[root@server1 ~]# cd /var/lib/mfs/

[root@server1 mfs]# cp -p * /mnt

[root@server1 mfs]# chown mfs:mfs /mnt

[root@server1 mfs]# umount /mnt

[root@server1 mfs]# cd

[root@server1 ~]# mount /dev/sdb1 /var/lib/mfs/

[root@server1 ~]# systemctl start moosefs-master
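If the master does not start from the iSCSI-backed directory, one thing to check is whether the metadata was actually copied onto the new filesystem. The sketch below assumes MooseFS keeps its metadata as `metadata.mfs` (after a clean stop) or `metadata.mfs.back` (while running or after a crash); the helper name `has_metadata` is hypothetical.

```shell
# has_metadata DIR
# Succeeds when DIR holds a MooseFS metadata file the master can load.
has_metadata() {
    [ -f "$1/metadata.mfs" ] || [ -f "$1/metadata.mfs.back" ]
}

# Assumed usage on server1 after mounting /dev/sdb1:
#   has_metadata /var/lib/mfs || echo "no metadata found in /var/lib/mfs"
```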