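Kubernetes: Binary Deployment with Two Master Nodes

Table of contents

- Preface

- 1. Dual-master binary cluster analysis

- 2. Lab environment

- 3. Deployment

- 3.1 Setting up the master2 node

- 3.2 Deploying the nginx load balancers

- 3.2.1 Verifying VIP failover

- 3.3 Updating the node configuration files

- 4. Testing

- 4.1 Operations on master1

- Summary

Preface:

- This post builds on the single-node environment from the previous post by adding a second master node, and it also sets up nginx load balancing with keepalived for high availability. For the single-node deployment, see: K8s single-node deployment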

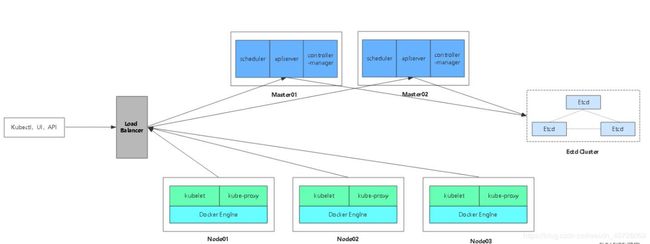

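1. Dual-master binary cluster analysis

- Binary cluster

- Compared with a single-master binary cluster, a dual-master cluster is highly available: when one master goes down, the load balancer forwards requests arriving at the VIP to the other master, so the control plane stays reachable.

- The key to the dual-master setup is a single shared entry address. In the single-node post, the VIP (192.168.226.100) was already written into the certificate-generation scripts. The VIP fronts the apiserver, and both masters listen for apiserver requests from the nodes. When a new node joins, it does not contact a master directly; it sends its apiserver request to the VIP, which dispatches the request to one of the masters. The master that receives the request then issues a certificate to the new node.

- The dual-master cluster also adds nginx load balancing, which spreads node requests across the masters and reduces the resource load on each master.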

2. Lab environment

- The roles in the dual-master deployment are as follows:

- master1, IP address: 192.168.226.128

  - Resources: CentOS 7, 2 CPUs, 4 GB RAM

  - Required components: kube-apiserver kube-controller-manager kube-scheduler etcd

- master2, IP address: 192.168.226.137

  - Resources: CentOS 7, 2 CPUs, 4 GB RAM

  - Required components: kube-apiserver kube-controller-manager kube-scheduler

- node1, IP address: 192.168.226.132

  - Resources: CentOS 7, 2 CPUs, 4 GB RAM

  - Required components: kubelet kube-proxy docker-ce flannel etcd

- node2, IP address: 192.168.226.133

  - Resources: CentOS 7, 2 CPUs, 4 GB RAM

  - Required components: kubelet kube-proxy docker-ce flannel etcd

- nginx_lbm, IP address: 192.168.226.148

  - Resources: CentOS 7, 2 CPUs, 4 GB RAM

  - Required components: nginx keepalived

- nginx_lbb, IP address: 192.168.226.167

  - Resources: CentOS 7, 2 CPUs, 4 GB RAM

  - Required components: nginx keepalived

- VIP, IP address: 192.168.226.100

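3. Deployment

- The environment in this post is built on the single-node cluster; see the link in the Preface.

# On all nodes, disable the firewall, SELinux, and NetworkManager

## Permanently disable the security features

[root@master2 ~]# systemctl stop firewalld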

[root@master2 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master2 ~]# setenforce 0

[root@master2 ~]# sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

## Disable NetworkManager so that the IP addresses do not change

systemctl stop NetworkManager

systemctl disable NetworkManager

3.1 Setting up the master2 node

- On the master1 node

[root@master ~]# scp -r /opt/kubernetes/ [email protected]:/opt

... (output omitted)

[root@master ~]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service [email protected]:/usr/lib/systemd/system/

... (output omitted)

- On the master2 node: edit the IP addresses in the kube-apiserver configuration file

# Disable the firewall and SELinux

[root@master2 ~]# systemctl stop firewalld

[root@master2 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master2 ~]# setenforce 0

[root@master2 ~]# sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

# kube-apiserver

[root@master2 cfg]# vim kube-apiserver

# Only --bind-address (the local IP) and --advertise-address (the externally advertised IP) change; both are set to master2's own IP

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.226.128:2379,https://192.168.226.132:2379,https://192.168.226.133:2379 \

--bind-address=192.168.226.137 \

--secure-port=6443 \

--advertise-address=192.168.226.137 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"
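Because the file was copied over from master1, only the two address flags differ on master2. The following is a minimal sketch of making that change non-interactively with sed instead of vim. The function name `set_apiserver_ip` is hypothetical, and the path in the usage comment assumes the file sits at /opt/kubernetes/cfg/kube-apiserver, as the shell prompt above suggests.

```shell
#!/bin/bash
# Rewrite --bind-address and --advertise-address in a kube-apiserver
# options file so that both carry the given IP. Other flags, such as
# --etcd-servers, are left untouched. Requires GNU sed (sed -i -E).
set_apiserver_ip() {
    local ip=$1 file=$2
    sed -i -E "s#(--(bind|advertise)-address=)[0-9.]+#\1${ip}#" "$file"
}

# Usage on master2 (path is an assumption):
#   set_apiserver_ip 192.168.226.137 /opt/kubernetes/cfg/kube-apiserver
```

Running `grep address /opt/kubernetes/cfg/kube-apiserver` afterwards is a quick way to confirm that both flags now show master2's IP.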

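- On the master1 node: copy the existing etcd certificates from master1 to master2

- Note: the new master also runs kube-apiserver, which has to talk to etcd while it works, so it also needs the etcd certificates for authentication.

[root@master ~]# scp -r /opt/etcd/ [email protected]:/opt/

- On master2, start the kube-apiserver, kube-controller-manager, and kube-scheduler services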

#apiserver

[root@master2 ~]# systemctl start kube-apiserver.service

[root@master2 ~]# systemctl enable kube-apiserver.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@master2 ~]# systemctl status kube-apiserver.service

active(running)

# controller-manager

[root@master2 ~]# systemctl start kube-controller-manager.service

[root@master2 ~]# systemctl enable kube-controller-manager.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@master2 ~]# systemctl status kube-controller-manager.service

active(running)

# scheduler

[root@master2 ~]# systemctl start kube-scheduler.service

[root@master2 ~]# systemctl enable kube-scheduler.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@master2 ~]# systemctl status kube-scheduler.service

- Add the Kubernetes binaries to the PATH environment variable

[root@master2 ~]# echo "export PATH=$PATH:/opt/kubernetes/bin/" >> /etc/profile

[root@master2 ~]# tail -2 /etc/profile

unset -f pathmunge

export PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/opt/kubernetes/bin/

[root@master2 ~]# source /etc/profile

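# View the cluster node information

[root@master2 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.226.132 Ready <none> 45h v1.12.3

192.168.226.133 Ready <none> 43h v1.12.3

3.2 Deploying the nginx load balancers

- On both nginx servers, set SELinux to permissive mode and flush the firewall rules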

setenforce 0

iptables -F

- On lb1 and lb2: add the official nginx yum repository and install nginx

[root@localhost ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

# Save and quit (:wq)

# Install from the official nginx repository

# Refresh the yum repository metadata

[root@localhost ~]# yum list

# Install nginx

[root@localhost ~]# yum install nginx -y

... (output omitted)

- nginx is used here mainly for its stream module, which provides layer-4 load balancing

- Add layer-4 forwarding to the nginx configuration file

[root@localhost ~]# vim /etc/nginx/nginx.conf

# Insert the stream block between the events block and the http block

stream {

    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

    access_log /var/log/nginx/k8s-access.log main; # access log file

    upstream k8s-apiserver { # address pool of the two master apiservers

        server 192.168.226.128:6443; # apiserver secure port 6443

        server 192.168.226.137:6443;

    }

    server {

        listen 6443;

        proxy_pass k8s-apiserver; # forward to the upstream pool

    }

}

# Save and quit (:wq)

# Check the configuration syntax

[root@localhost ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful
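The upstream block in the stream configuration above holds one `server` line per master apiserver, so adding a master later means adding one line. As a rough sketch, the block can be generated from a list of master IPs; the function name `render_upstream` is hypothetical and not part of nginx.

```shell
#!/bin/bash
# Print an nginx "upstream k8s-apiserver" block with one server line
# per master IP. First argument: apiserver port; remaining: master IPs.
render_upstream() {
    local port=$1 ip
    shift
    printf 'upstream k8s-apiserver {\n'
    for ip in "$@"; do
        printf '    server %s:%s;\n' "$ip" "$port"
    done
    printf '}\n'
}

# Usage with the two masters from this post:
#   render_upstream 6443 192.168.226.128 192.168.226.137
```

The output still has to be placed inside the `stream { }` block by hand, followed by `nginx -t` to check the syntax.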

# Start the service

[root@localhost ~]# systemctl start nginx

[root@localhost ~]# netstat -natp | grep nginx

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 29019/nginx: master

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 29019/nginx: master

- Deploy keepalived on both nginx nodes: install keepalived

[root@localhost ~]# yum install keepalived -y

- Copy the modified keepalived configuration file into place. keepalived configuration on the nginx_lbm node:

[root@localhost ~]# cp keepalived.conf /etc/keepalived/keepalived.conf

cp: overwrite ‘/etc/keepalived/keepalived.conf’? yes

[root@localhost ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

[email protected]

[email protected]

[email protected]

}

# Notification sender address

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx { # defines the check_nginx health check; this nginx check script is what ties keepalived to nginx

script "/etc/nginx/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51 # VRRP router ID; unique for each instance

priority 100 # priority; set 90 on the backup server

advert_int 1 # VRRP advertisement (heartbeat) interval; default is 1 second

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.226.100/24

}

track_script { # scripts that this instance tracks

check_nginx # name of the vrrp_script defined above

}

}

# Save and quit (:wq)

- Modify the keepalived configuration file on nginx_lbb

[root@localhost ~]# cp keepalived.conf /etc/keepalived/keepalived.conf

cp: overwrite ‘/etc/keepalived/keepalived.conf’? yes

[root@localhost ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

[email protected]

[email protected]

[email protected]

}

# Notification sender address

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP # changed to BACKUP

interface ens33

virtual_router_id 51

priority 90 # must be lower than the MASTER's priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.226.100/24

}

track_script {

check_nginx

}

}

# Save and quit (:wq)

- Create the check_nginx.sh script on both nginx nodes: create the nginx check script in the /etc/nginx/ directory as follows:

[root@localhost ~]# vim /etc/nginx/check_nginx.sh

#!/bin/bash

count=$(ps -ef |grep nginx |egrep -cv "grep|$$") # count the running nginx processes

# If nginx has stopped, stop keepalived as well

if [ "$count" -eq 0 ];then

    systemctl stop keepalived

fi

# Save and quit (:wq)
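The `ps | grep` pipeline in the script above counts any process line that mentions nginx, which can include unrelated commands. As an alternative sketch (not the script used in this post), the process count can be taken from /proc by exact command name. The function names `count_procs` and `nginx_healthy` are hypothetical, and the approach assumes a Linux /proc filesystem.

```shell
#!/bin/bash
# Count processes whose command name is exactly $1 by reading
# /proc/<pid>/comm (Linux only).
count_procs() {
    local name=$1 n=0 f
    for f in /proc/[0-9]*/comm; do
        # A process may exit while we iterate, so read errors are ignored.
        if [ "$(cat "$f" 2>/dev/null)" = "$name" ]; then
            n=$((n + 1))
        fi
    done
    echo "$n"
}

# Succeed (status 0) when at least one matching process is running.
# The process name defaults to nginx.
nginx_healthy() {
    [ "$(count_procs "${1:-nginx}")" -gt 0 ]
}
```

A check script could end with `nginx_healthy || exit 1` in place of `systemctl stop keepalived`. keepalived treats a non-zero exit status from a tracked `vrrp_script` as a failed check and gives up the VIP, and it can take the VIP back on its own once the check passes again.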

[root@localhost ~]# chmod +x /etc/nginx/check_nginx.sh

- Start the keepalived service

[root@localhost ~]# systemctl start keepalived.service

- On the nginx MASTER node, check whether the VIP (floating address) is present
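The check for the VIP can also be scripted rather than read off the `ip addr` output by eye. This is a small sketch under the assumption that the input is the output of `ip -4 -o addr show`; the function name `has_vip` is hypothetical.

```shell
#!/bin/bash
# Read "ip -4 -o addr show" output on stdin and succeed (status 0)
# when the given address is configured on some interface.
# The trailing "/" in the pattern keeps 192.168.226.10 from matching
# 192.168.226.100.
has_vip() {
    local vip=$1
    grep -Fq "inet ${vip}/"
}

# Usage on either load balancer node:
#   ip -4 -o addr show | has_vip 192.168.226.100 && echo "VIP is on this node"
```

Run on both nginx nodes, this should succeed on exactly one of them: the MASTER in normal operation, and the BACKUP after a failover.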

3.2.1 Verifying VIP failover

- Kill the nginx service on the nginx_lbm node with pkill, then check on nginx_lbb whether the VIP has moved over

[root@localhost ~]# pkill nginx

[root@localhost ~]# ip addr

# The VIP is gone

# Check the keepalived service

[root@localhost ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: inactive (dead)

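# Because the nginx process stopped, keepalived stopped as well

- Check for the VIP on the nginx_lbb (BACKUP) node

- Recovery: on the nginx MASTER node, start the nginx service first, then start the keepalived service

3.3 Updating the node configuration files

- Point the configuration files on both nodes at the VIP

The files to modify are bootstrap.kubeconfig, kubelet.kubeconfig, and kube-proxy.kubeconfig

Change every server address to the VIP (192.168.226.100)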

[root@node1 ~]# vim /opt/kubernetes/cfg/bootstrap.kubeconfig

server: https://192.168.226.100:6443

[root@node1 ~]# vim /opt/kubernetes/cfg/kubelet.kubeconfig

server: https://192.168.226.100:6443

[root@node1 ~]# vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

server: https://192.168.226.100:6443

# Make the same changes on node2

# Verify the result

[root@node1 cfg]# grep 100 *

bootstrap.kubeconfig: server: https://192.168.226.100:6443

kubelet.kubeconfig: server: https://192.168.226.100:6443

kube-proxy.kubeconfig: server: https://192.168.226.100:6443
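The three manual edits above make the same substitution, so they can be scripted. This is a minimal sketch assuming GNU sed and that each kubeconfig carries its address on a `server: https://<ip>:<port>` line, as the grep output shows. The function name `set_server_address` is hypothetical, and the service names in the final comment are assumptions carried over from the single-node setup.

```shell
#!/bin/bash
# Replace the host in every "server: https://<host>:<port>" line of the
# given kubeconfig files with the VIP, keeping the port unchanged.
set_server_address() {
    local vip=$1
    shift
    sed -i -E "s#(server: https://)[^:/]+(:[0-9]+)#\1${vip}\2#" "$@"
}

# Usage on each node:
#   cd /opt/kubernetes/cfg
#   set_server_address 192.168.226.100 \
#       bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig
# Then restart the node services so they reconnect through the VIP
# (service names assumed): systemctl restart kubelet kube-proxy
```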

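- Check the nginx k8s access log on nginx_lbm

Load-balancing entries are already present. Because the VIP sits on nginx_lbm, nginx_lbb has no access log entries.

The entries appear because the kubelet service was restarted: after a restart, kubelet connects to the VIP, which is held by nginx_lbm, and nginx_lbm proxies the requests to the two backend master nodes, which produces the log entries.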

[root@localhost ~]# tail /var/log/nginx/k8s-access.log

192.168.226.132 192.168.226.137:6443, 192.168.226.128:6443 - [04/May/2020:17:00:14 +0800] 200 0, 1121

192.168.226.132 192.168.226.128:6443 - [04/May/2020:17:00:14 +0800] 200 1121

192.168.226.133 192.168.226.128:6443 - [04/May/2020:17:00:35 +0800] 200 1122

192.168.226.133 192.168.226.137:6443, 192.168.226.128:6443 - [04/May/2020:17:00:35 +0800] 200 0, 1121

4. Testing

4.1 Operations on master1

- Create a pod

[root@master ~]# kubectl run nginx --image=nginx

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

deployment.apps/nginx created

# run: run the specified image in the cluster

# nginx: name given to the deployment

# --image=nginx: image name

# Check the status

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-nztm8 1/1 Running 0 111s

# The container takes a moment to create; STATUS shows ContainerCreating during creation and Running once it is done

- Check where the pod was created

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-nztm8 1/1 Running 0 4m33s 172.17.6.3 192.168.226.132 <none>

# -o wide: show additional columns, including the pod IP and the node

# 172.17.6.3: container (pod) IP

# 192.168.226.132: IP of node1

- List the containers on node1

[root@node1 cfg]# docker ps -a

# The first container listed below was created by K8s

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

554969363e7f nginx "nginx -g 'daemon of…" 5 minutes ago Up 5 minutes k8s_nginx_nginx-dbddb74b8-nztm8_default_3e5e6b63-8de7-11ea-aded-000c29e424dc_0

39cefc4b2584 registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 "/pause" 6 minutes ago Up 6 minutes k8s_POD_nginx-dbddb74b8-nztm8_default_3e5e6b63-8de7-11ea-aded-000c29e424dc_0

b3546847c994 centos:7 "/bin/bash" 4 days ago Up 4 days jolly_perlman

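- Log access problem (logs cannot be viewed)

Viewing the logs directly fails because the request is treated as the unprivileged system:anonymous user, so that user has to be granted permission first.

Grant the permission on a master node (this only needs to be done once per cluster). Binding cluster-admin to system:anonymous is acceptable only in a lab environment.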

[root@master ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

[root@master ~]# kubectl logs nginx-dbddb74b8-nztm8

[root@master ~]#

- Access the pod IP address from node1

[root@node1 cfg]# curl 172.17.6.3

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and working. Further configuration is required.

For online documentation and support please refer to nginx.org.

Commercial support is available at nginx.com.

Thank you for using nginx.

- Back on the master node, view the logs again

[root@master ~]# kubectl logs nginx-dbddb74b8-nztm8

172.17.6.1 - - [04/May/2020:09:26:42 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"

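# If the node1 VM itself accesses this container IP, the visitor IP shown in the access log is the docker0 address

Summary

- The multi-master binary cluster is now essentially complete; a private Harbor registry will be added later.

- A brief recap of what some of the cluster components do:

① Creating pod resources from a master node depends on the apiserver

② The apiserver address configured on the nodes points to the load balancer

③ The load balancer handles load balancing in the cluster

④ All kubectl commands can be run on either master

⑤ The load balancer also provides a floating address (VIP) and balances load across the two master nodes

⑥ When resources are created on node1 or node2, the Scheduler on the master scores the candidate nodes with its scheduling algorithm and creates the pod on the node with the highest score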