Kubernetes: Dual-Master Binary Cluster (Deploying the Web UI) + Managing with kubectl Commands

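Table of Contents

- Preface
- 1. kubectl Overview
  - 1.1 kubectl Command-Line Syntax
  - 1.2 Common kubectl Commands
    - 1.2.1 Common Subcommands
    - 1.2.2 Common Flags
    - 1.2.3 kubectl Output Options
- 2. Deployment Process
  - 2.1 Environment
  - 2.2 Creating and Loading All Files
  - 2.3 Self-Signed Certificate
- 3. Managing with kubectl Commands
  - 3.1 kubectl Help
  - 3.2 Managing an Application with kubectl
    - 3.2.1 The kubectl run Command
    - 3.2.2 The kubectl delete Command
    - 3.2.3 Application Lifecycle Walkthrough
  - 3.3 Detailed Information
- Summary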

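Preface:

- This post builds on the environment from the previous post (a binary deployment with multiple master nodes) and deploys the Web management UI on top of it. Previous post: Multi-master binary cluster

  Operations in the UI call the apiserver on the cluster's master nodes, for example to create Pod resources.

- kubectl is the command-line tool for Kubernetes clusters. With kubectl you can manage the cluster itself, control the Pod lifecycle (create, update, roll back, delete), and install and deploy containerized applications on the cluster.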

1. kubectl Overview

1.1 kubectl Command-Line Syntax

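- Syntax:

kubectl [command] [TYPE] [NAME] [flags]

Parameters:

- ① command:

The subcommand, i.e. the operation to perform on Kubernetes resource objects, such as create, delete, describe, get, or apply.

- ② TYPE:

The resource type. Resource types are case-insensitive, and you can use the singular, plural, or abbreviated form. For example, the following commands produce the same output:

kubectl get pod pod1

kubectl get pods pod1

kubectl get po pod1

- ③ NAME:

The name of the resource. Names are case-sensitive. If the name is omitted, details for all resources of that type are displayed.

For example: kubectl get pods

When operating on multiple resources, you can specify each resource by type and name, or specify one or more files:

Ⅰ Specifying resources by type and name:

- If the resources are all of the same type, group them:

Format: TYPE1 name1 name2 name<#>

Example: kubectl get pod pod1 example-pod2

- To specify several resource types separately:

Format: TYPE1/name1 TYPE1/name2 TYPE2/name3 TYPE<#>/name<#>

Example: kubectl get pod/example-pod1 replicationcontroller/example-rc1

Ⅱ Specifying resources with one or more files:

Format: -f file1 -f file2 -f file<#>

Example: kubectl get pod -f ./pod.yaml

YAML is generally preferred over JSON because it tends to be more readable, especially for configuration files.

- ④ flags:

Optional flags. For example, -s or --server specifies the address and port of the Kubernetes API server.

Note: flags specified on the command line override default values and any corresponding environment variables.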
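To make the TYPE and TYPE/name rules above concrete, here is a small bash sketch that normalizes a resource type (case-insensitive; singular, plural, or short form) and splits a TYPE/name argument. The alias table is a hand-picked illustrative subset, and the function names `normalize_type` and `split_ref` are hypothetical; kubectl's real list of short names comes from `kubectl api-resources`.

```bash
#!/usr/bin/env bash
# Illustration of the TYPE / NAME rules; the alias table is a small
# hand-written subset, not kubectl's real list (see `kubectl api-resources`).

# Map any accepted spelling of a type to one canonical plural form.
normalize_type() {
  local t
  t=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')  # types are case-insensitive
  case "$t" in
    po | pod | pods) echo pods ;;
    svc | service | services) echo services ;;
    deploy | deployment | deployments) echo deployments ;;
    rs | replicaset | replicasets) echo replicasets ;;
    *) echo "$t" ;;  # unknown types are passed through unchanged
  esac
}

# Split a TYPE/name argument such as pod/example-pod1.
# Only the type is normalized: names are case-sensitive and kept as-is.
split_ref() {
  printf '%s %s\n' "$(normalize_type "${1%%/*}")" "${1#*/}"
}
```

For example, `split_ref PO/Example-Pod1` prints `pods Example-Pod1`: the type is folded to lower case, the name is not.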

1.2 Kubectl 常用命令

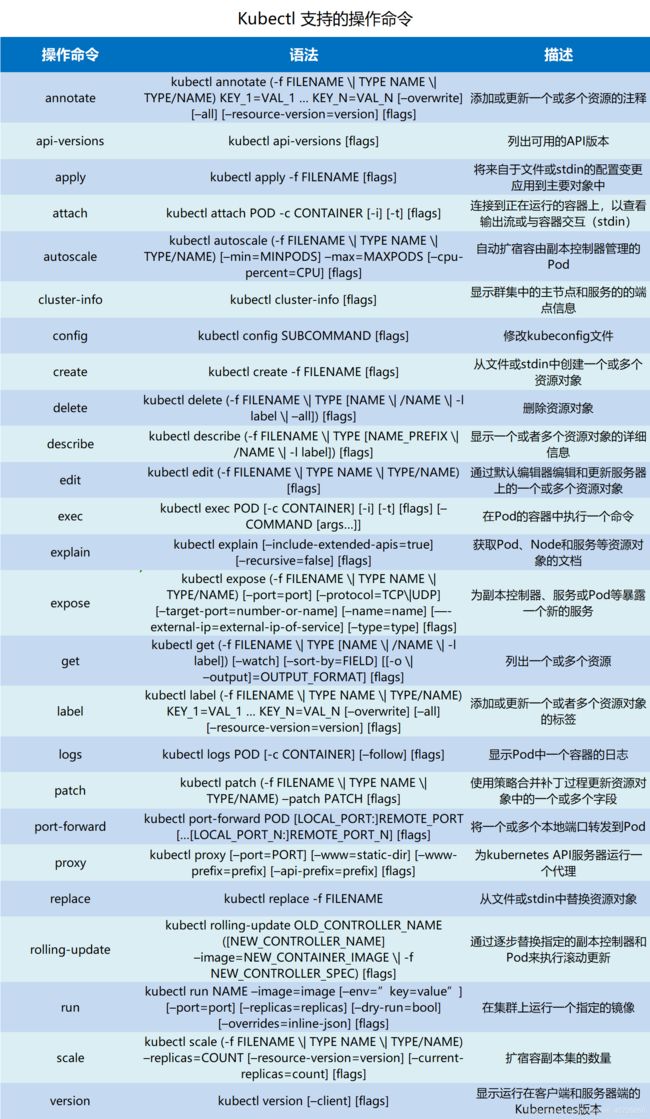

1.2.1 常用子命令

- kubectl作为kubernetes的命令行工具,主要的职责就是对集群中的资源的对象进行操作,这些操作包括对资源对象的创建、删除和查看等。下表中显示了kubectl支持的所有操作,以及这些操作的语法和描述信息

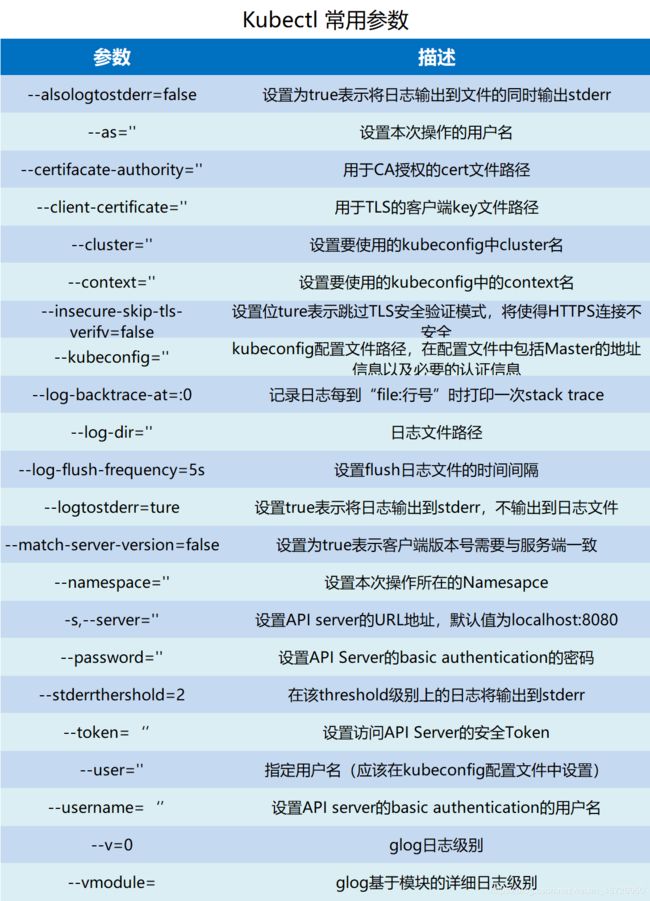

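1.2.2 Common Flags

1.2.3 kubectl Output Options

- kubectl prints plain text by default; use -o or --output to choose another output format.

Syntax:

kubectl [command] [TYPE] [NAME] -o=<output_format>

The available output formats are:

| Output format | Description |
|---|---|
| -o=custom-columns=<spec> | Print using a comma-separated list of custom columns |
| -o=custom-columns-file=<filename> | Print using the custom column definitions in <filename> |
| -o=json | Output in JSON format |
| -o=jsonpath=<template> | Print the fields defined by a jsonpath expression |
| -o=jsonpath-file=<filename> | Print the fields defined by a jsonpath expression read from <filename> |
| -o=name | Print only the resource name |
| -o=wide | Print additional information; for Pods, this includes the Node name |
| -o=yaml | Output in YAML format |

- Show additional Pod information, for example:

kubectl get pod -o wide

- Show Pod details in YAML, for example:

kubectl get pod -o yaml

- Show Pod information with custom columns, for example:

kubectl get pod -o=custom-columns=NAME:.metadata.name,RSRC:.metadata.resourceVersion

- Custom columns read from a file:

kubectl get pods -o=custom-columns-file=template.txt

- kubectl can also sort its output by a field: pass a jsonpath expression to --sort-by.

Format: kubectl [command] [TYPE] [NAME] --sort-by=<jsonpath_exp>

Example, sorting by name:

kubectl get pods --sort-by=.metadata.name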
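The custom-columns-file option expects a template whose first line holds the column headers and whose second line holds the matching field paths. A minimal sketch that writes such a `template.txt` (the file name used in the example above), reproducing the inline custom-columns example:

```bash
#!/usr/bin/env bash
# Template for -o=custom-columns-file: line 1 = column headers,
# line 2 = the field path for each column, in the same order.
# Equivalent to -o=custom-columns=NAME:.metadata.name,RSRC:.metadata.resourceVersion
cat > template.txt <<'EOF'
NAME          RSRC
metadata.name metadata.resourceVersion
EOF
```

The template can then be combined with sorting, e.g. `kubectl get pods -o=custom-columns-file=template.txt --sort-by=.metadata.name`.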

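2. Deployment Process

2.1 Environment

- Based on the multi-master cluster deployed in the previous post, we deploy the Web UI and then demonstrate kubectl commands.

- First, download the five dashboard YAML files from the official Kubernetes repository: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

- After downloading, create a dashboard working directory on master1 and upload the five files into it: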

[root@master ~]# mkdir dashboard

[root@master ~]# cd dashboard/

[root@master dashboard]# rz -E

[root@master dashboard]# ls

dashboard-configmap.yaml dashboard-rbac.yaml dashboard-service.yaml

dashboard-controller.yaml dashboard-secret.yaml k8s-admin.yaml

#k8s-admin.yaml is an administrator-account resource file created locally; it is explained below

- Creation order: rbac.yaml → secret.yaml → configmap.yaml → controller.yaml → service.yaml
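The creation order can be scripted. The following bash sketch runs `kubectl create -f` on the five files in that order and stops at the first failure. The function name `create_dashboard` and the `KUBECTL` variable (set `KUBECTL=echo` to print the commands instead of running them) are additions for illustration, not part of the original walkthrough.

```bash
#!/usr/bin/env bash
# Create the dashboard resources in dependency order:
# rbac -> secret -> configmap -> controller -> service.
# KUBECTL is an added convenience: KUBECTL=echo prints the commands instead.
create_dashboard() {
  local kubectl=${KUBECTL:-kubectl}
  local part
  for part in rbac secret configmap controller service; do
    # stop at the first failure so later objects are not created half-configured
    "$kubectl" create -f "dashboard-${part}.yaml" || return 1
  done
}
```

Run it from the dashboard directory: `KUBECTL=echo create_dashboard` previews the five commands, and `create_dashboard` executes them.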

2.2 Creating and Loading All Files

- ① dashboard-rbac.yaml: roles and access-control resources

kind: Role #role
apiVersion: rbac.authorization.k8s.io/v1 #API version (RBAC has its own versioned API group)
metadata: #metadata
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-minimal #name of the resource to create
  namespace: kube-system
rules: #permission rules
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system #namespace (default is used if omitted)

- Create the dashboard-rbac.yaml resources

#-f creates the resources defined in the given YAML file

[root@master dashboard]# kubectl create -f dashboard-rbac.yaml

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

- Use -n to list the Role resources in the kube-system namespace

[root@master dashboard]# kubectl get Role -n kube-system

NAME AGE

extension-apiserver-authentication-reader 5d18h

kubernetes-dashboard-minimal 9m43s #the resource just created

system::leader-locking-kube-controller-manager 5d18h

system::leader-locking-kube-scheduler 5d18h

system:controller:bootstrap-signer 5d18h

system:controller:cloud-provider 5d18h

system:controller:token-cleaner 5d18h

- ② dashboard-secret.yaml: security credentials (Secrets)

[root@master dashboard]# vim dashboard-secret.yaml

apiVersion: v1
kind: Secret #resource kind
metadata: #metadata
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-certs #resource name
  namespace: kube-system #namespace
type: Opaque
--- #separates multiple YAML documents in one file
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-key-holder #key holder
  namespace: kube-system
type: Opaque

- Create the dashboard-secret.yaml resources

[root@master dashboard]# kubectl create -f dashboard-secret.yaml

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-key-holder created

[root@master dashboard]# kubectl get Secret -n kube-system

NAME TYPE DATA AGE

default-token-xspc5 kubernetes.io/service-account-token 3 5d18h

kubernetes-dashboard-certs Opaque 0 23s

kubernetes-dashboard-key-holder Opaque 0 22s

#the last two entries are the certificate and key-holder Secrets just created

- ③ dashboard-configmap.yaml: configuration management

[root@master dashboard]# vim dashboard-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-settings
  namespace: kube-system

- Create the dashboard-configmap.yaml resources

[root@master dashboard]# kubectl create -f dashboard-configmap.yaml

configmap/kubernetes-dashboard-settings created

[root@master dashboard]# kubectl get Configmap -n kube-system

NAME DATA AGE

extension-apiserver-authentication 1 5d19h

kubernetes-dashboard-settings 0 2m24s

- ④ dashboard-controller.yaml: the controller

apiVersion: v1
kind: ServiceAccount #service account: the identity the dashboard Pod uses to access the API
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment #controller type
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      containers: #container name and image
      - name: kubernetes-dashboard
        image: siriuszg/kubernetes-dashboard-amd64:v1.8.3
        resources: #CPU and memory requests and limits
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443 #port the dashboard serves on (HTTPS)
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts: #volumes mounted into the container
        - name: kubernetes-dashboard-certs
          mountPath: /certs
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

- Create the dashboard-controller.yaml resources

[root@master dashboard]# kubectl create -f dashboard-controller.yaml

serviceaccount/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

[root@master dashboard]# kubectl get ServiceAccount -n kube-system

NAME SECRETS AGE

default 1 5d19h

kubernetes-dashboard 1 40s

[root@master dashboard]# kubectl get deployment -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

kubernetes-dashboard 1 1 1 1

- ⑤ dashboard-service.yaml: the Service

Once the Service is created, the dashboard is exposed and can be accessed.

[root@master dashboard]# vim dashboard-service.yaml

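apiVersion: v1
kind: Service #resource kind
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  type: NodePort #exposure type: clients reach the Service through a port opened on every node (the NodePort)
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443 #Service port inside the cluster (on the ClusterIP)
    targetPort: 8443 #container port inside the Pod
    nodePort: 30001 #port opened on each node (the mapped port)
#external clients reach the dashboard at <node IP>:30001
#this forwarding is implemented by kube-proxy on the node(s)
#the master nodes act as back-end managers and are not accessed by users here

- Create the dashboard-service.yaml resources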

[root@master dashboard]# kubectl create -f dashboard-service.yaml

service/kubernetes-dashboard created

[root@master dashboard]# kubectl get service -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.0.0.56 443:30001/TCP 35s

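#10.0.0.56 is the Service's cluster-internal address (ClusterIP)

#Note: port 443 speaks HTTPS, so use https:// when accessing it later

- All five resources are now created. Check the Pod and Service resources: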

[root@master dashboard]# kubectl get pods,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/kubernetes-dashboard-65f974f565-bh5zh 1/1 Running 1 37m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes-dashboard NodePort 10.0.0.56 443:30001/TCP 26m

#service can be abbreviated as svc

#use the following command to see which node the Pod was scheduled to

[root@master dashboard]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

kubernetes-dashboard-65f974f565-bh5zh 1/1 Running 1 38m 172.17.6.4 192.168.226.132

#192.168.226.132 is the IP of node1

- View the logs of this Pod:

[root@master dashboard]# kubectl logs kubernetes-dashboard-65f974f565-bh5zh -n kube-system

2020/05/08 04:11:35 Starting overwatch

2020/05/08 04:11:35 Using in-cluster config to connect to apiserver

2020/05/08 04:11:35 Using service account token for csrf signing

2020/05/08 04:11:35 No request provided. Skipping authorization

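......(output truncated)

2.3 Self-Signed Certificate

- Opening the dashboard in Google Chrome on the host machine runs into a problem.

- This problem is specific to Chrome (some older versions of Microsoft's browsers can open the page). To solve it, issue a certificate for the dashboard on the master node: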

[root@master dashboard]# vim dashboard-cert.sh

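cat > dashboard-csr.json <<EOF
{
  "CN": "Dashboard",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

#$1 is the directory holding the cluster CA files (ca.pem, ca-key.pem, ca-config.json)
K8S_CA=$1

#sign a dashboard certificate with the cluster CA (writes dashboard.pem, dashboard-key.pem and dashboard.csr)
cfssl gencert -ca=$K8S_CA/ca.pem -ca-key=$K8S_CA/ca-key.pem -config=$K8S_CA/ca-config.json -profile=kubernetes dashboard-csr.json | cfssljson -bare dashboard

#delete the original certificate Secret
kubectl delete secret kubernetes-dashboard-certs -n kube-system

#re-create the certificate Secret; --from-file=./ stores every file in the current directory (certificates, YAML files and scripts alike) as a key
kubectl create secret generic kubernetes-dashboard-certs --from-file=./ -n kube-system

(save and exit with :wq)

- Generate the certificate

#the argument is the directory holding the cluster CA; the new certificate and key are written to the current directory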

[root@master dashboard]# bash dashboard-cert.sh /root/k8s/k8s-cert/

2020/05/08 13:04:57 [INFO] generate received request

2020/05/08 13:04:57 [INFO] received CSR

2020/05/08 13:04:57 [INFO] generating key: rsa-2048

2020/05/08 13:04:57 [INFO] encoded CSR

2020/05/08 13:04:57 [INFO] signed certificate with serial number 551113815757827270782378002391446116439855285061

2020/05/08 13:04:57 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

secret "kubernetes-dashboard-certs" deleted

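secret/kubernetes-dashboard-certs created

- Edit dashboard-controller.yaml so the dashboard uses the new certificate and key:

[root@master dashboard]# vim dashboard-controller.yaml

#around line 47, below --auto-generate-certificates, add the TLS flags pointing to the certificate and key just generated

args:
  # PLATFORM-SPECIFIC ARGS HERE
  - --auto-generate-certificates
  - --tls-key-file=dashboard-key.pem
  - --tls-cert-file=dashboard.pem

(save with :wq)

- Redeploy

#redeploy with apply -f

#the resources were originally created with kubectl create, so apply still updates them but prints the warning below; creating them with kubectl create --save-config (or with kubectl apply) avoids it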

[root@master dashboard]# kubectl apply -f dashboard-controller.yaml

Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply

serviceaccount/kubernetes-dashboard configured

Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply

deployment.apps/kubernetes-dashboard configured

- Note that the Pod may be scheduled to a different node when it is redeployed. Check where it is running now:

[root@master dashboard]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

kubernetes-dashboard-7dffbccd68-gf6mg 1/1 Running 1 6m14s 172.17.54.2 192.168.226.133

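#the Pod has moved to node2 (192.168.226.133)

- Create k8s-admin.yaml, which defines an administrator account for logging in to the dashboard:

[root@master dashboard]# vim k8s-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin #the dashboard-admin ServiceAccount, effectively an administrator account
  namespace: kube-system
---
kind: ClusterRoleBinding #binds a cluster-wide role to the account
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: dashboard-admin #the binding grants the cluster-admin role below, i.e. full administrator rights
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

- First, list the Secrets in the kube-system namespace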

[root@master dashboard]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

default-token-xspc5 kubernetes.io/service-account-token 3 6d3h #the default token

kubernetes-dashboard-certs Opaque 11 7h30m

kubernetes-dashboard-key-holder Opaque 2 8h

kubernetes-dashboard-token-hrj96 kubernetes.io/service-account-token 3 8h

- Create the admin account (this generates its token)

[root@master dashboard]# kubectl create -f k8s-admin.yaml

serviceaccount/dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

#list the Secrets again

[root@master dashboard]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

dashboard-admin-token-dpjdk kubernetes.io/service-account-token 3 2m36s #the token generated for dashboard-admin

default-token-xspc5 kubernetes.io/service-account-token 3 6d3h

kubernetes-dashboard-certs Opaque 11 7h37m

kubernetes-dashboard-key-holder Opaque 2 8h

kubernetes-dashboard-token-hrj96 kubernetes.io/service-account-token 3 8h

- Show the token details

[root@master dashboard]# kubectl describe secret dashboard-admin-token-dpjdk -n kube-system

Name: dashboard-admin-token-dpjdk

Namespace: kube-system

Labels:

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 0696adb7-9129-11ea-aded-000c29e424dc

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1359 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tZHBqZGsiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMDY5NmFkYjctOTEyOS0xMWVhLWFkZWQtMDAwYzI5ZTQyNGRjIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.flITcRSrxIcaGPePNKAhURcyDK6q5mJ6YpXDUXtspLanGfgm13aH-4R57XMsloDb_mO6iOta5V-NGuKZmohCXbjn3oc77yQo9D4ivvNYSbivkXCEE1qY5S-Pv--EYJnZXs6G9lJc4bvSBm0nsTdqP2iVog911hFBvaZsWHHq1SZPrwsgYNxBO94tfbCAFlouOqneIk78UlYaHrFZgjbSrPlv-hkjLEp3yuR1IBJrNLnYAPh_wRlV5mLK5K8q79ICTAV8hdTQaZ-0EqiK6UAcxcKLfbgSRKfVB6oBDh8cX7zuxdv_yf4FJUx0tUbGqXwbk7IMjHSTXOTsgY3iQSqmWA

#token:以下为令牌码,复制以下令牌码 - 复制完令牌码之后,回到浏览器界面 选择“令牌” 粘贴令牌码,然后登录即可,如下:

-

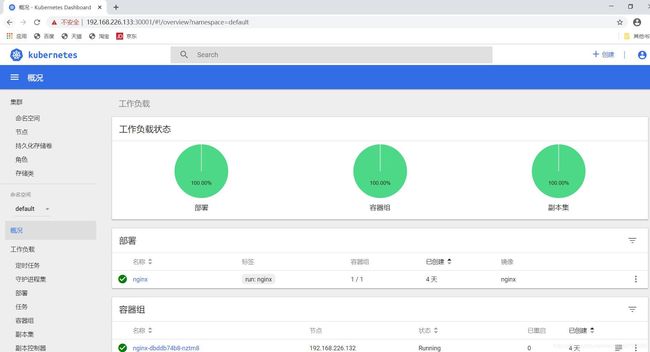

以下已进入K8s的Web界面

此处可以看到Nginx资源,命名空间、节点、标签等

也可以在Web上创建、删除、管理
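Instead of copying the randomly suffixed Secret name (here `dashboard-admin-token-dpjdk`) by hand, the lookup can be scripted. A bash sketch, assuming the cluster still auto-creates token Secrets for ServiceAccounts as in this post; `admin_token_secret`, `admin_token` and the optional `KUBECTL` variable (which replaces the kubectl binary, e.g. for testing) are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Pick the dashboard-admin token Secret out of `kubectl get secret` output on stdin.
# The random suffix (e.g. -dpjdk) differs on every cluster.
admin_token_secret() {
  awk '$1 ~ /^dashboard-admin-token-/ { print $1; exit }'
}

# Print the decoded token of that Secret (needs kubectl access to the cluster).
admin_token() {
  local kubectl=${KUBECTL:-kubectl} name
  name=$("$kubectl" -n kube-system get secret | admin_token_secret)
  [ -n "$name" ] || { echo "no dashboard-admin token Secret found" >&2; return 1; }
  # Secret data is base64-encoded; the token field decodes to the JWT used for login
  "$kubectl" -n kube-system get secret "$name" -o jsonpath='{.data.token}' | base64 -d
}
```

On newer clusters (Kubernetes v1.24 and later) token Secrets are no longer created automatically; there, `kubectl -n kube-system create token dashboard-admin` issues a short-lived token instead.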

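3. Managing with kubectl Commands

3.1 kubectl Help

- Command: kubectl --help

Common basic commands:

- create: create a resource from a file or from standard input

- expose: expose a resource as a new Service (publish it on a port)

- run: run a specified image

- set: set specific features on objects (for example the image version)

- explain: show the documentation of a resource type and its fields

- get: display one or more resources

- edit: edit a resource

- delete: delete resources

Deployment (update) commands:

- rollout: manage a rollout (status, history, undo/roll back)

- scale: set a new number of replicas (identical copies) for manual scaling

- autoscale: scale automatically (horizontal autoscaling)

Cluster management commands:

- certificate: modify certificate resources (for example, approve a node's request to join the cluster)

- cluster-info: display cluster information

- top: display resource (CPU/memory) usage

- cordon: mark a node as unschedulable

- uncordon: mark a node as schedulable

- taint: update the taints on one or more nodes

Troubleshooting and debugging commands:

- describe: show details of a specific resource or group of resources

- logs: print the logs of a container in a Pod

- attach: attach to a container that is already running

- exec: execute a command in a container (for example to get a shell inside a Pod)

- port-forward: forward one or more local ports to a Pod

- proxy: run a proxy to the Kubernetes apiserver

- cp: copy files and directories to and from containers

- auth: inspect authorization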

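3.2 Managing an Application with kubectl

- The complete lifecycle of an application is:

create → publish (expose) → update → roll back → delete

3.2.1 The kubectl run Command

- Format:

kubectl run NAME --image=image [--env="key=value"] [--port=port] [--replicas=replicas] [--dry-run=bool] [--overrides=inline-json] [--command] -- [COMMAND] [args...] [options]

- Parameters:

- NAME: the resource name

- --image=image: the container image to run

- [--env="key=value"]: environment variables to set in the container

- [--port=port]: the port the container exposes

- [--replicas=replicas]: the number of replicas

- [--dry-run=bool]: if true, only print the object that would be sent, without creating it

- [--overrides=inline-json]: an inline JSON override applied to the generated object

- [--command] -- [COMMAND] [args...] [options]: the command and arguments to run in the container instead of the image's default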

Example: create an nginx resource that exposes port 80, with 3 replicas:

[root@master ~]# kubectl run nginx-deployment --image=nginx --port=80 --replicas=3

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

deployment.apps/nginx-deployment created

#check the Pod status

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-nztm8 1/1 Running 0 4d4h

nginx-deployment-5477945587-8tt6c 1/1 Running 0 7s

nginx-deployment-5477945587-lfw8x 0/1 ContainerCreating 0 7s

nginx-deployment-5477945587-rssxn 0/1 ContainerCreating 0 7s

#the Pods are created automatically through the apiserver (one of the new Pods is already Running)

#check again after a moment

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-nztm8 1/1 Running 0 4d4h

nginx-deployment-5477945587-8tt6c 1/1 Running 0 33s

nginx-deployment-5477945587-lfw8x 1/1 Running 0 33s

nginx-deployment-5477945587-rssxn 1/1 Running 0 33s

#all Pods are now Running

- Check which nodes the Pods were created on

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-nztm8 1/1 Running 0 4d4h 172.17.6.3 192.168.226.132

nginx-deployment-5477945587-8tt6c 1/1 Running 0 3m18s 172.17.6.5 192.168.226.132

nginx-deployment-5477945587-lfw8x 1/1 Running 0 3m18s 172.17.54.3 192.168.226.133

nginx-deployment-5477945587-rssxn 1/1 Running 0 3m18s 172.17.6.4 192.168.226.132

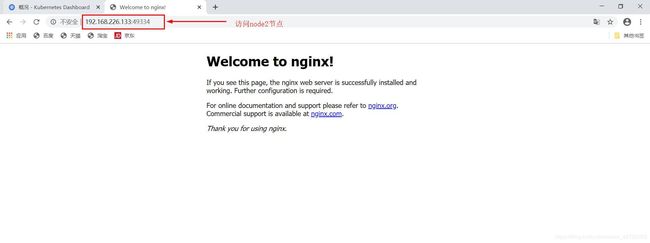

#node1 (192.168.226.132) received two of the replicas and node2 (192.168.226.133) one

- View all resources

[root@master ~]# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-dbddb74b8-nztm8 1/1 Running 0 4d4h

pod/nginx-deployment-5477945587-8tt6c 1/1 Running 0 4m27s

pod/nginx-deployment-5477945587-lfw8x 1/1 Running 0 4m27s

pod/nginx-deployment-5477945587-rssxn 1/1 Running 0 4m27s

#above: the three replica Pods just created, plus the existing nginx Pod

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 443/TCP 6d4h

#the built-in kubernetes Service, which exposes the apiserver inside the cluster at ClusterIP 10.0.0.1

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx 1 1 1 1 4d4h

deployment.apps/nginx-deployment 3 3 3 3 4m29s

#Deployments are controller resources: to create Pods this way, a controller is created first, and it then creates and manages the Pods

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-dbddb74b8 1 1 1 4d4h

replicaset.apps/nginx-deployment-5477945587 3 3 3 4m27s

#above: the ReplicaSets

- Summary: creating Pods with kubectl run also creates a controller (Deployment) and a ReplicaSet.
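The `kubectl run` output above warns that this generator is deprecated. A bash sketch of the equivalent with `kubectl create deployment` followed by `kubectl scale`, which produces the same Deployment → ReplicaSet → Pods chain. Unlike `--port=80`, it does not declare the container port, so pass the port explicitly when exposing the Deployment later. `create_nginx_deployment` and the optional `KUBECTL` variable (set `KUBECTL=echo` to print the commands instead) are illustrative additions.

```bash
#!/usr/bin/env bash
# Non-deprecated equivalent of:
#   kubectl run nginx-deployment --image=nginx --port=80 --replicas=3
# Creates a Deployment (which creates a ReplicaSet, which creates the Pods),
# then scales it to 3 replicas. KUBECTL=echo previews the commands.
create_nginx_deployment() {
  local kubectl=${KUBECTL:-kubectl}
  "$kubectl" create deployment nginx-deployment --image=nginx || return 1
  "$kubectl" scale deployment/nginx-deployment --replicas=3
}
```

`kubectl create deployment` labels its objects with `app=nginx-deployment`, so `kubectl get deploy,rs,pods -l app=nginx-deployment` shows the three related resources together.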

3.2.2 The kubectl delete Command

- Delete nginx

[root@master ~]# kubectl delete deploy/nginx

deployment.extensions "nginx" deleted

#check the Pods again

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-5477945587-8tt6c 1/1 Running 0 18h

nginx-deployment-5477945587-lfw8x 1/1 Running 0 18h

nginx-deployment-5477945587-rssxn 1/1 Running 0 18h

#the nginx resources have been deleted

- Delete nginx-deployment

[root@master ~]# kubectl delete deploy/nginx-deployment

deployment.extensions "nginx-deployment" deleted

#check the Pods again

[root@master ~]# kubectl get pods

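No resources found.

3.2.3 Application Lifecycle Walkthrough

- As described above, an application's lifecycle is create → publish → update → roll back → delete. The following demonstrates each stage.

- ① Create nginx

#create nginx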

[root@master ~]# kubectl run nginx --image=nginx:latest --port=80 --replicas=3

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

deployment.apps/nginx created

#check the status: creating the containers this way always creates a Deployment and a ReplicaSet as well

[root@master ~]# kubectl get pods,deployment,replicaset

NAME READY STATUS RESTARTS AGE

pod/nginx-7697996758-4flgt 1/1 Running 0 14m

pod/nginx-7697996758-h85tv 1/1 Running 0 14m

pod/nginx-7697996758-l7gb8 1/1 Running 0 14m

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.extensions/nginx 3 3 3 3 14m

NAME DESIRED CURRENT READY AGE

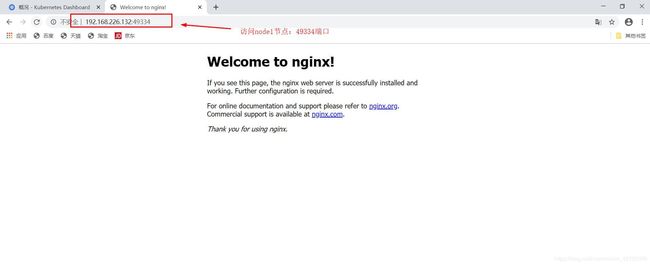

replicaset.extensions/nginx-7697996758 3 3 3 14m- ② 发布nginx(service提供负载均衡的功能)

[root@master ~]# kubectl expose deployment nginx --port=80 --target-port=80 --name=nginx-service --type=NodePort

service/nginx-service exposed

#check the Services

[root@master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 443/TCP 6d23h

nginx-service NodePort 10.0.0.249 80:49334/TCP 45s

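#TYPE is NodePort; CLUSTER-IP is the cluster IP; in PORT(S), 80 is the internal port and 49334 the port exposed on each node, over TCP

#any request to port 49334 on a node is load-balanced by kube-proxy across the backend Pods

- Check the endpoints (backend Pods) behind the Service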

[root@master ~]# kubectl get endpoints

NAME ENDPOINTS AGE

kubernetes 192.168.226.128:6443,192.168.226.137:6443 6d23h

nginx-service 172.17.54.3:80,172.17.6.3:80,172.17.6.4:80 10m
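Both NodePorts seen so far can be checked against the apiserver's node-port range. 30000-32767 is the default of the kube-apiserver flag `--service-node-port-range`; the dashboard's 30001 fits it, but 49334 does not, which suggests the apiserver in this cluster was started with a wider range. The post does not show that setting, so 30000-50000 below is only an assumed example. `in_nodeport_range` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Succeeds if a NodePort lies inside a node-port range given as "min-max".
# 30000-32767 is the kube-apiserver default for --service-node-port-range.
in_nodeport_range() {
  local port=$1 range=${2:-30000-32767}
  local lo=${range%-*} hi=${range#*-}
  (( port >= lo && port <= hi ))
}
```

For example, `in_nodeport_range 49334 30000-50000 && echo ok` prints `ok`, while `in_nodeport_range 49334` fails against the default range.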

- List resource types and their short names

[root@master ~]# kubectl api-resources

NAME SHORTNAMES APIGROUP NAMESPACED KIND

bindings true Binding

componentstatuses cs false ComponentStatus

configmaps cm true ConfigMap

endpoints ep true Endpoints

events ev true Event

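......(output truncated)

- On node1, verify that load balancing is in place and that the exposed port is 49334

kube-proxy supports three proxy modes: before v1.8 it offered iptables and userspace, and Kubernetes 1.8 introduced the ipvs mode.

- Install the ipvsadm tool

[root@node1 ~]# yum install ipvsadm -y

......(output truncated)

#ipvsadm is the user-space management tool for the kernel's LVS/IPVS load balancer

- Verify: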

[root@node1 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 127.0.0.1:30001 rr

-> 172.17.54.2:8443 Masq 1 0 0

TCP 127.0.0.1:49334 rr

-> 172.17.6.3:80 Masq 1 0 0

-> 172.17.6.4:80 Masq 1 0 0

-> 172.17.54.3:80 Masq 1 0 0

TCP 172.17.6.0:30001 rr

-> 172.17.54.2:8443 Masq 1 0 0

TCP 172.17.6.0:49334 rr

-> 172.17.6.3:80 Masq 1 0 0

-> 172.17.6.4:80 Masq 1 0 0

-> 172.17.54.3:80 Masq 1 0 0

TCP 172.17.6.1:30001 rr

-> 172.17.54.2:8443 Masq 1 0 0

TCP 172.17.6.1:49334 rr

-> 172.17.6.3:80 Masq 1 0 0

-> 172.17.6.4:80 Masq 1 0 0

-> 172.17.54.3:80 Masq 1 0 0

TCP 192.168.122.1:30001 rr

-> 172.17.54.2:8443 Masq 1 0 0

TCP 192.168.122.1:49334 rr

-> 172.17.6.3:80 Masq 1 0 0

-> 172.17.6.4:80 Masq 1 0 0

-> 172.17.54.3:80 Masq 1 0 0

TCP 192.168.226.132:30001 rr

-> 172.17.54.2:8443 Masq 1 0 0

TCP 192.168.226.132:49334 rr

-> 172.17.6.3:80 Masq 1 0 0

-> 172.17.6.4:80 Masq 1 0 0

-> 172.17.54.3:80 Masq 1 0 0

TCP 10.0.0.1:443 rr

-> 192.168.226.128:6443 Masq 1 0 0

-> 192.168.226.137:6443 Masq 1 0 0

TCP 10.0.0.56:443 rr

-> 172.17.54.2:8443 Masq 1 0 0

TCP 10.0.0.249:80 rr

-> 172.17.6.3:80 Masq 1 0 0

-> 172.17.6.4:80 Masq 1 0 0

-> 172.17.54.3:80 Masq 1 0 0

#check the Pods

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-7697996758-4flgt 1/1 Running 0 120m

nginx-7697996758-h85tv 1/1 Running 0 120m

nginx-7697996758-l7gb8 1/1 Running 0 120m

#查看日志

[root@master ~]# kubectl logs nginx-7697996758-4flgt

172.17.6.1 - - [09/May/2020:10:08:24 +0000] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.122 Safari/537.36" "-"

2020/05/09 10:08:24 [error] 6#6: *1 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: 172.17.6.1, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "192.168.226.132:49334", referrer: "http://192.168.226.132:49334/"

172.17.6.1 - - [09/May/2020:10:08:24 +0000] "GET /favicon.ico HTTP/1.1" 404 556 "http://192.168.226.132:49334/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.122 Safari/537.36" "-"

[root@master ~]# kubectl logs nginx-7697996758-l7gb8

172.17.54.1 - - [09/May/2020:10:08:51 +0000] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.122 Safari/537.36" "-"

2020/05/09 10:08:51 [error] 6#6: *1 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: 172.17.54.1, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "192.168.226.133:49334", referrer: "http://192.168.226.133:49334/"

172.17.54.1 - - [09/May/2020:10:08:51 +0000] "GET /favicon.ico HTTP/1.1" 404 556 "http://192.168.226.133:49334/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.122 Safari/537.36" "-"

[root@master ~]#

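#the client address is the docker0 gateway of each node (172.17.6.1 and 172.17.54.1)

#this also shows that kube-proxy distributes requests across the backend Pods in round-robin fashion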
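The `rr` scheduler listed by `ipvsadm` above hands new connections to the real servers in turn. A toy bash model of that behavior, for intuition only: it ignores weights, connection state, and the fact that each node's IPVS table schedules independently. `rr_schedule` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Toy model of IPVS "rr" scheduling: request i goes to backend i mod N.
# Ignores weights, connection state and per-node scheduling.
rr_schedule() {
  local requests=$1; shift
  (( $# > 0 )) || return 1            # need at least one backend
  local backends=("$@") i
  for (( i = 0; i < requests; i++ )); do
    echo "${backends[i % ${#backends[@]}]}"
  done
}
```

With the three nginx endpoints, `rr_schedule 4 172.17.6.3:80 172.17.6.4:80 172.17.54.3:80` prints the three addresses in order and then starts again with 172.17.6.3:80.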

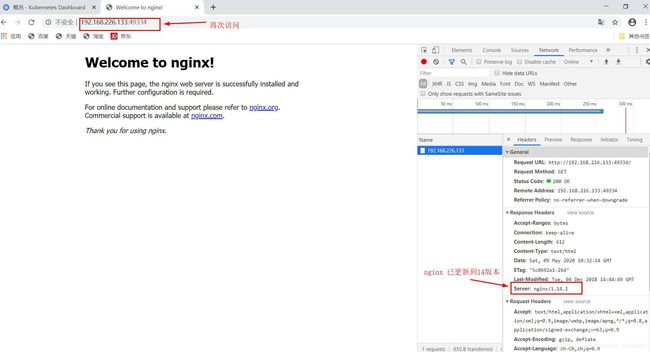

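- ③ Update the version

Visit the page again, open More tools → Developer tools in Chrome, click the address in the Name column, and check the response headers; the Server header shows the nginx version.

- Change the image and update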

#update nginx to version 1.14

[root@master ~]# kubectl set image deployment/nginx nginx=nginx:1.14

deployment.extensions/nginx image updated

#watch the update progress (-w keeps watching; stop it with Ctrl+C)

[root@master ~]# kubectl get pods -w

NAME READY STATUS RESTARTS AGE

nginx-6ff7c89c7c-vgjpf 0/1 ContainerCreating 0 14s

nginx-6ff7c89c7c-vxvr6 1/1 Running 0 49s

nginx-6ff7c89c7c-zwthn 1/1 Running 0 29s

nginx-7697996758-l7gb8 1/1 Running 0 140m

nginx-6ff7c89c7c-vgjpf 1/1 Running 0 16s

nginx-7697996758-l7gb8 1/1 Terminating 0 140m

nginx-7697996758-l7gb8 0/1 Terminating 0 140m

......(output truncated)

#wait a moment, then check the Pods again

^C[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-6ff7c89c7c-vgjpf 1/1 Running 0 106s

nginx-6ff7c89c7c-vxvr6 1/1 Running 0 2m21s

nginx-6ff7c89c7c-zwthn 1/1 Running 0 2m1s

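#three new Pods are now running

- Update process: a new Pod is created first; once it is running, an old Pod is stopped and deleted; then the next new Pod is created and the next old Pod deleted, and so on until all Pods run the new version.

- Summary: three Pods stay available throughout the update (the desired Pod count is maintained through the ReplicaSets).

- Visit the page again to verify the new version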
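Why the Pods are replaced one at a time can be worked out from the Deployment's RollingUpdate settings. Assuming the apps/v1 defaults (maxSurge 25%, rounded up; maxUnavailable 25%, rounded down; the legacy extensions/v1beta1 API defaulted both to 1 instead), a Deployment with 3 replicas may run at most 4 Pods and must keep all 3 available, which matches the watch output above. A bash sketch of that arithmetic (`rolling_bounds` is a hypothetical helper):

```bash
#!/usr/bin/env bash
# Rolling-update bounds for a Deployment, assuming a percentage (default 25%)
# for both maxSurge (rounded up) and maxUnavailable (rounded down).
rolling_bounds() {
  local replicas=$1 pct=${2:-25}
  local surge=$(( (replicas * pct + 99) / 100 ))   # ceil(replicas * pct / 100)
  local unavail=$(( replicas * pct / 100 ))        # floor(replicas * pct / 100)
  echo "maxSurge=$surge maxUnavailable=$unavail maxPods=$(( replicas + surge )) minAvailable=$(( replicas - unavail ))"
}
```

`rolling_bounds 3` prints `maxSurge=1 maxUnavailable=0 maxPods=4 minAvailable=3`; for 10 replicas the same defaults allow 3 extra Pods and 2 unavailable ones.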

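- ④ Roll back

Suppose that after the update, the business side reports that users can no longer open the page; this is when a rollback is needed.

- View the revision history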

[root@master ~]# kubectl rollout history deployment/nginx

deployment.extensions/nginx

REVISION CHANGE-CAUSE

1

2

#there are two revisions because the Deployment was updated once

- Roll back and check the rollback status

#roll back

[root@master ~]# kubectl rollout undo deployment/nginx

deployment.extensions/nginx

#check the rollback status

[root@master ~]# kubectl rollout status deployment/nginx

Waiting for deployment "nginx" rollout to finish: 1 out of 3 new replicas have been updated...

Waiting for deployment "nginx" rollout to finish: 1 out of 3 new replicas have been updated...

Waiting for deployment "nginx" rollout to finish: 2 out of 3 new replicas have been updated...

- Check the history again

[root@master ~]# kubectl rollout history deployment/nginx

deployment.extensions/nginx

REVISION CHANGE-CAUSE

2

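3

#rolling back to revision 1 re-creates it as the new revision 3, so revision 1 no longer appears in the history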
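The CHANGE-CAUSE column above is empty because no cause was recorded. A bash sketch that records one through the `kubernetes.io/change-cause` annotation when updating, and rolls back to an explicit revision with `--to-revision` rather than only to the previous one. The Deployment and container name `nginx` come from this walkthrough; the helper names and the optional `KUBECTL` variable (set `KUBECTL=echo` to print the commands instead) are illustrative.

```bash
#!/usr/bin/env bash
# Update the image and record why, so `kubectl rollout history` shows a CHANGE-CAUSE.
update_with_cause() {
  local kubectl=${KUBECTL:-kubectl} image=$1 cause=$2
  "$kubectl" set image deployment/nginx "nginx=$image" || return 1
  "$kubectl" annotate deployment/nginx "kubernetes.io/change-cause=$cause" --overwrite
}

# Roll back to a specific revision number (see `kubectl rollout history`)
# and wait until the rollout has finished.
rollback_to() {
  local kubectl=${KUBECTL:-kubectl}
  "$kubectl" rollout undo deployment/nginx "--to-revision=$1" || return 1
  "$kubectl" rollout status deployment/nginx
}
```

For example, `update_with_cause nginx:1.14 "update to 1.14"` followed later by `rollback_to 2` returns to whichever template revision 2 recorded.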

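- ⑤ Delete

Note: creating the application produced three kinds of resources (Pods, a Deployment, and a ReplicaSet), but publishing it also created a Service. When deleting the application, delete the Service as well so it does not keep occupying resources such as its NodePort.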
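Since `kubectl delete` accepts several TYPE/name arguments, the Deployment and its Service can be removed together. A bash sketch with a hypothetical `delete_app` helper; the optional `KUBECTL` variable (set `KUBECTL=echo` to print the command instead) is an illustrative addition:

```bash
#!/usr/bin/env bash
# Delete an application's Deployment (its ReplicaSets and Pods are garbage-collected
# with it) and the Service that exposed it, in a single kubectl call.
delete_app() {
  local kubectl=${KUBECTL:-kubectl} deploy=$1 svc=$2
  "$kubectl" delete "deployment/$deploy" "service/$svc"
}
```

`delete_app nginx nginx-service` runs `kubectl delete deployment/nginx service/nginx-service`.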

- Delete:

#delete nginx; the deployment is its controller

[root@master ~]# kubectl delete deployment/nginx

deployment.extensions "nginx" deleted

#check the status: the Pods are gone

[root@master ~]# kubectl get pods

No resources found.

#check the Services: nginx-service is still exposing its port, so it must be deleted as well

[root@master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 443/TCP 7d1h

nginx-service NodePort 10.0.0.249 80:49334/TCP 135m

#delete nginx-service

[root@master ~]# kubectl delete svc/nginx-service

service "nginx-service" deleted

#check the Services

[root@master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

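kubernetes ClusterIP 10.0.0.1 443/TCP 7d1h

3.3 Detailed Information

- If a Pod is stuck, inspect it with the following command and check the Events section at the end of its output:

kubectl describe pod nginx-793921923-w4t3a

Summary:

- kubectl's management commands, their flags, and the scheduling principles behind them are important. This post briefly introduced kubectl's management commands; a later post will cover creating Kubernetes resource objects from YAML and JSON.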