Scraping the Douban Top 100 Movies and Saving Them as a CSV File (with a Fix for Garbled CSV Text)

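The process breaks down roughly into the following steps:

- Fetch the web pages with the requests library

- Parse the pages with the BeautifulSoup library

- Save the data as a csv file with the pandas library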

1. First, import the third-party libraries:

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd
```

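If you visit the Douban Movie Top 250 list, you will see that each page shows 25 movies, so the top 100 movies span the first 4 pages.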
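The page address only differs in its `start` query parameter, which the code in this article fills with 0, 25, 50 and 75, the offset of the first movie on each page. A minimal sketch of that URL construction on its own, with no network access; `page_urls`, `PAGE_SIZE` and `TOTAL` are hypothetical names introduced here for illustration:

```python
from typing import List

PAGE_SIZE = 25  # movies per page on the Top 250 list
TOTAL = 100     # we only want the first 100 movies


def page_urls(total: int = TOTAL, page_size: int = PAGE_SIZE) -> List[str]:
    """Build one URL per page; `start` is the offset of the page's first movie."""
    return [
        f"https://movie.douban.com/top250?start={start}&filter="
        for start in range(0, total, page_size)  # 0, 25, 50, 75
    ]


for url in page_urls():
    print(url)
```

Changing `TOTAL` to 250 would, under the same assumption about the URL format, produce all 10 pages of the list.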

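2. Download the HTML of the 4 pages:

```python
page_indexs = range(0, 100, 25)  # build the page offsets: 0, 25, 50, 75
print(list(page_indexs))  # [0, 25, 50, 75]


def download_all_htmls():  # download the HTML of the 4 pages for later parsing
    htmls = []
    for idx in page_indexs:
        url = f"https://movie.douban.com/top250?start={idx}&filter="  # substitute the page offset
        print("crawl html:", url)
        r = requests.get(
            url,
            headers={"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)"},
            timeout=10,
        )
        # r is a Response object containing the server's reply
        if r.status_code != 200:
            # explicitly raise an exception if the request fails
            raise Exception(f"error: {url} returned status {r.status_code}")
        htmls.append(r.text)
    return htmls


# run the crawler
htmls = download_all_htmls()  # download the HTML of the 4 pages
```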
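The loop above sends its requests back to back. A slightly more defensive variant, sketched here under our own assumptions rather than as the author's method, pauses between requests and uses `Response.raise_for_status()` instead of a manual status check. The names `requests_fetch` and `polite_download` and the one-second delay are hypothetical; the fetch function is passed in as a parameter so the loop can be tried without network access:

```python
import time
from typing import Callable, Iterable, List

HEADERS = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)"}


def requests_fetch(url: str) -> str:
    """Fetch one page; raises requests.HTTPError on a 4xx/5xx response."""
    import requests  # imported lazily so polite_download can be used without requests installed

    r = requests.get(url, headers=HEADERS, timeout=10)
    r.raise_for_status()
    return r.text


def polite_download(
    urls: Iterable[str],
    fetch: Callable[[str], str] = requests_fetch,
    delay: float = 1.0,
) -> List[str]:
    """Download each URL in order, sleeping `delay` seconds between requests."""
    pages = []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay)  # hypothetical politeness delay; tune as needed
        pages.append(fetch(url))
    return pages
```

With the offsets from the article, usage would look like `htmls = polite_download(f"https://movie.douban.com/top250?start={idx}&filter=" for idx in range(0, 100, 25))`.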

3. Parse the HTML to extract the data:

```python
def parse_single_html(html):  # parse a single HTML page and extract the data
    soup = BeautifulSoup(html, "html.parser")  # parse the HTML
    article_items = (  # follows the page's HTML structure
        soup.find("div", class_="article")
        .find("ol", class_="grid_view")
        .find_all("div", class_="item")
    )
    datas = []  # collect the rows in a list
    for article_item in article_items:
        rank = article_item.find("div", class_="pic").find("em").get_text()
        info = article_item.find("div", class_="info")
        title = info.find("div", class_="hd").find("span", class_="title").get_text()
        stars = (
            info.find("div", class_="bd")
            .find("div", class_="star")
            .find_all("span")  # there are 4 spans, so use find_all
        )
        rating_star = stars[0]["class"][0]  # class can hold several values (returned as a list), so take the first
        rating_num = stars[1].get_text()
        comments = stars[3].get_text()
        datas.append({
            "排名": rank,  # rank
            "电影名称": title,  # movie title
            "评星": rating_star.replace("rating", "").replace("-t", ""),  # star rating
            "评分": rating_num,  # score
            "评价人数": comments.replace("人评价", ""),  # number of ratings, "人评价" suffix stripped
        })
    return datas


# parse all of the downloaded HTML pages
all_datas = []
for html in htmls:
    all_datas.extend(parse_single_html(html))
```
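The chain of `find`/`find_all` calls above can also be written with BeautifulSoup's CSS selector methods `select` and `select_one`. The sketch below runs that alternative against a small, made-up HTML snippet that only mimics the structure the original code navigates (it is not real Douban markup, and the title, score and count are invented); `parse_items_css` is a hypothetical name:

```python
from bs4 import BeautifulSoup

# Made-up sample that mirrors the div.article > ol.grid_view > div.item structure
SAMPLE_HTML = """
<div class="article">
  <ol class="grid_view">
    <li><div class="item">
      <div class="pic"><em>1</em></div>
      <div class="info">
        <div class="hd"><a href="#"><span class="title">示例电影</span></a></div>
        <div class="bd"><div class="star">
          <span class="rating45-t"></span>
          <span class="rating_num">8.9</span>
          <span></span>
          <span>12345人评价</span>
        </div></div>
      </div>
    </div></li>
  </ol>
</div>
"""


def parse_items_css(html):
    """Same fields as parse_single_html, located with CSS selectors."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for item in soup.select("div.article ol.grid_view div.item"):
        spans = item.select("div.info div.bd div.star > span")  # the 4 spans under div.star
        rows.append({
            "rank": item.select_one("div.pic em").get_text(strip=True),
            "title": item.select_one("div.info div.hd span.title").get_text(strip=True),
            "rating_star": spans[0]["class"][0].replace("rating", "").replace("-t", ""),
            "rating_num": spans[1].get_text(strip=True),
            "comments": spans[3].get_text(strip=True).replace("人评价", ""),
        })
    return rows


print(parse_items_css(SAMPLE_HTML))
```

Both styles depend on the page keeping these class names; if Douban changes its markup, the selectors (or the `find` chain) need updating.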

4. Save the data as a csv file:

```python
df = pd.DataFrame(all_datas)
df.to_csv("豆瓣电影Top100.csv")
```
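Every value extracted with `get_text()` is a string, so the rank, score and rating-count columns are stored as text. If you want to sort or compute on them, one option, offered as a suggestion rather than part of the original workflow, is `pd.to_numeric`. The helper name `convert_numeric` and the sample row are hypothetical:

```python
import pandas as pd


def convert_numeric(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy with the scraped numeric columns converted from str to numbers."""
    out = df.copy()
    for col in ["排名", "评分", "评价人数"]:  # rank, score, number of ratings
        out[col] = pd.to_numeric(out[col])  # raises ValueError if a value is not numeric
    return out


# made-up row in the same shape as parse_single_html's output
sample = pd.DataFrame([{"排名": "1", "电影名称": "示例电影", "评星": "45", "评分": "8.9", "评价人数": "12345"}])
converted = convert_numeric(sample)
print(converted.dtypes)
```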

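When the resulting CSV file is opened (for example in Excel), the Chinese text may appear garbled. To fix this: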

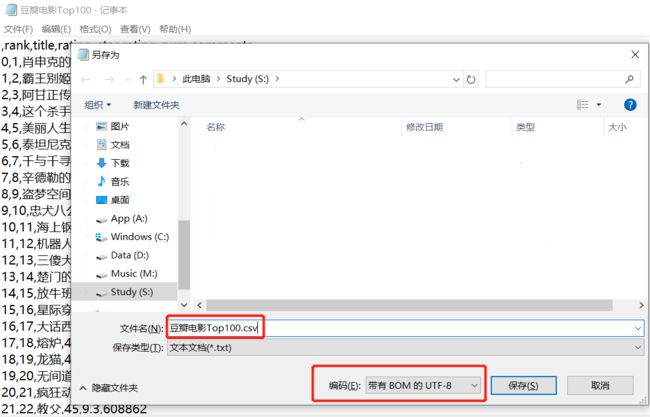

1. 用记事本打开该文件:

2.另存为csv文件(在文件名后面加.csv后缀),并把编码改为带有BOM的UTF-8

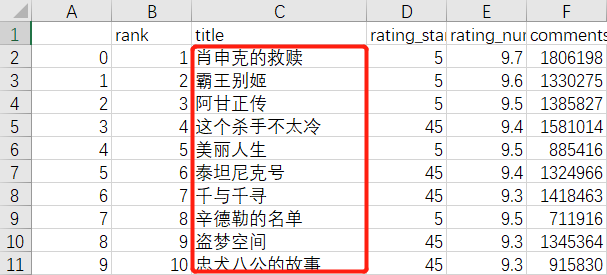

3.再次打开该csv文件,就不会出现乱码了:
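The same result can be reached without the manual Notepad step. Passing `encoding="utf-8-sig"` to `DataFrame.to_csv` makes Python write the UTF-8 byte order mark (bytes `EF BB BF`) at the start of the file, which is what the "UTF-8 with BOM" option adds and what Excel typically uses to recognize the file as UTF-8. This is a common alternative, not the method used in this article; `save_csv_for_excel` and the sample row are hypothetical, and `index=False` additionally drops the unnamed index column that `to_csv` writes by default:

```python
import pandas as pd


def save_csv_for_excel(df: pd.DataFrame, path: str) -> None:
    # "utf-8-sig" prepends the UTF-8 BOM before the data;
    # index=False omits pandas' default 0..n-1 index column.
    df.to_csv(path, index=False, encoding="utf-8-sig")


df = pd.DataFrame([{"排名": "1", "电影名称": "示例电影"}])  # made-up row for illustration
save_csv_for_excel(df, "豆瓣电影Top100.csv")

with open("豆瓣电影Top100.csv", "rb") as f:
    print(f.read(3))  # b'\xef\xbb\xbf', the BOM
```

When reading such a file back with pandas, `pd.read_csv(path, encoding="utf-8-sig")` strips the BOM again.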

Full source code:

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

page_indexs = range(0, 100, 25)  # build the page offsets: 0, 25, 50, 75
print(list(page_indexs))  # [0, 25, 50, 75]


def download_all_htmls():  # download the HTML of the 4 pages for later parsing
    htmls = []
    for idx in page_indexs:
        url = f"https://movie.douban.com/top250?start={idx}&filter="  # substitute the page offset
        print("crawl html:", url)
        r = requests.get(
            url,
            headers={"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)"},
            timeout=10,
        )
        # r is a Response object containing the server's reply
        if r.status_code != 200:
            # explicitly raise an exception if the request fails
            raise Exception(f"error: {url} returned status {r.status_code}")
        htmls.append(r.text)
    return htmls


# run the crawler
htmls = download_all_htmls()  # download the HTML of the 4 pages


def parse_single_html(html):  # parse a single HTML page and extract the data
    soup = BeautifulSoup(html, "html.parser")  # parse the HTML
    article_items = (  # follows the page's HTML structure
        soup.find("div", class_="article")
        .find("ol", class_="grid_view")
        .find_all("div", class_="item")
    )
    datas = []  # collect the rows in a list
    for article_item in article_items:
        rank = article_item.find("div", class_="pic").find("em").get_text()
        info = article_item.find("div", class_="info")
        title = info.find("div", class_="hd").find("span", class_="title").get_text()
        stars = (
            info.find("div", class_="bd")
            .find("div", class_="star")
            .find_all("span")  # there are 4 spans, so use find_all
        )
        rating_star = stars[0]["class"][0]  # class can hold several values (returned as a list), so take the first
        rating_num = stars[1].get_text()
        comments = stars[3].get_text()
        datas.append({
            "rank": rank,
            "title": title,
            "rating_star": rating_star.replace("rating", "").replace("-t", ""),
            "rating_num": rating_num,
            "comments": comments.replace("人评价", ""),  # strip the "人评价" ("ratings") suffix
        })
    return datas


# parse all of the downloaded HTML pages
all_datas = []
for html in htmls:
    all_datas.extend(parse_single_html(html))

df = pd.DataFrame(all_datas)
df.to_csv("豆瓣电影Top100.csv")
```