AI Practice: TensorFlow Notes (3): Neural Network Boilerplate

Contents

- 1. Network-building boilerplate: Sequential

- 2. Network-building boilerplate: class

- 3. The MNIST dataset

- 4. The FASHION dataset

1. Network-building boilerplate: Sequential

Building a network with the TensorFlow API tf.keras by following a fixed boilerplate

The six-step method:

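- `import`: import the relevant modules.

- `train, test`: specify the training and test sets to feed in. These are the input features `x_train` and `x_test` and the labels `y_train` and `y_test`.

- `model = tf.keras.models.Sequential`: build the network structure inside `Sequential()`, describing the network one layer at a time.

- `model.compile`: configure the training method in `compile()`. This sets the optimizer, the loss function and the evaluation metric.

- `model.fit`: run the training in `fit()`. Pass the input features and labels of the training and test sets, the batch size, and the number of epochs (passes over the dataset).

- `model.summary`: print the network structure and parameter counts with `summary()`.

`model = tf.keras.models.Sequential([network structure])` describes the network layer by layer.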

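Examples of network structures:

- Flatten layer: `tf.keras.layers.Flatten()`. It performs no computation and only changes the shape. It flattens each sample's input features into a one-dimensional array; the batch dimension is kept.

- Fully connected layer: `tf.keras.layers.Dense(number_of_neurons, activation="activation function", kernel_regularizer=regularizer)`

  - `activation` (given as a string): `relu`, `softmax`, `sigmoid`, `tanh`

  - `kernel_regularizer`: `tf.keras.regularizers.l1()`, `tf.keras.regularizers.l2()`

- Convolutional layer: `tf.keras.layers.Conv2D(filters=number_of_kernels, kernel_size=kernel_size, strides=stride, padding="valid" or "same")`

- LSTM layer: `tf.keras.layers.LSTM()`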
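The NumPy sketch below shows what `Flatten`, `Dense` and the `l2()` regularizer compute for one batch. It also shows the usual output-size rule for `Conv2D` with `padding="valid"` or `"same"`. It illustrates the layer list and is not Keras's implementation. The weights are random and the helper names (`flatten`, `dense`, `l2_penalty`, `conv_output_size`) are hypothetical. The `l2()` factor is assumed to be 0.01, the TF 2.x default.

```python
import math
import numpy as np

rng = np.random.default_rng(0)

def flatten(x):
    # Like Flatten(): keep the batch axis, merge all remaining axes into one
    return x.reshape(x.shape[0], -1)

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtract the row max for numerical stability; each row then sums to 1
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def dense(x, w, b, activation):
    # Like Dense(units): activation(x @ W + b), W has shape (in_features, units)
    return activation(x @ w + b)

def l2_penalty(w, factor=0.01):
    # Term that l2() adds to the loss for one kernel: factor * sum(W^2)
    # (factor 0.01 assumed to match the default)
    return factor * np.sum(np.square(w))

def conv_output_size(n, k, s, padding):
    # Spatial output size of a Conv2D layer along one axis
    if padding == "valid":
        return math.ceil((n - k + 1) / s)
    if padding == "same":
        return math.ceil(n / s)
    raise ValueError("padding must be 'valid' or 'same'")

x = rng.random((4, 28, 28))            # a batch of 4 fake 28x28 images
flat = flatten(x)                      # shape (4, 784)
w1, b1 = rng.normal(0.0, 0.05, (784, 128)), np.zeros(128)
w2, b2 = rng.normal(0.0, 0.05, (128, 10)), np.zeros(10)
probs = dense(dense(flat, w1, b1, relu), w2, b2, softmax)

print(flat.shape, probs.shape)         # (4, 784) (4, 10)
print(probs.sum(axis=1))               # each row sums to 1 (up to rounding)
print(l2_penalty(w2))
print(conv_output_size(28, 5, 1, "valid"), conv_output_size(28, 5, 2, "same"))  # 24 14
```

With `"valid"` the kernel only visits positions where it fits entirely inside the input. With `"same"` the input is padded, so with stride 1 the output keeps the input size.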

`model.compile(optimizer=optimizer, loss=loss_function, metrics=["accuracy metric"])`

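Optimizer options (a string name or an optimizer object):

- `'sgd'` or `tf.keras.optimizers.SGD(learning_rate=learning_rate, momentum=momentum)`

- `'adagrad'` or `tf.keras.optimizers.Adagrad(learning_rate=learning_rate)`

- `'adadelta'` or `tf.keras.optimizers.Adadelta(learning_rate=learning_rate)`

- `'adam'` or `tf.keras.optimizers.Adam(learning_rate=learning_rate, beta_1=0.9, beta_2=0.999)`

Older code often writes `lr=` instead of `learning_rate=`. `lr` is a deprecated alias that recent TensorFlow/Keras versions warn about or reject.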
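The optimizers differ only in how they turn a gradient into a weight update. The NumPy sketch below applies SGD with momentum and Adam to the toy loss (w - 3)^2, so the update rules can be read directly. It assumes the textbook update formulas. Keras's implementations add details such as where epsilon is placed and optional Nesterov momentum. The function names are hypothetical.

```python
import numpy as np

def grad(w):
    # Gradient of the toy loss (w - 3)^2, which is minimized at w = 3
    return 2.0 * (w - 3.0)

def sgd_momentum(w, lr=0.1, momentum=0.9, steps=300):
    v = 0.0
    for _ in range(steps):
        v = momentum * v - lr * grad(w)   # velocity accumulates past gradients
        w = w + v
    return w

def adam(w, lr=0.1, beta_1=0.9, beta_2=0.999, eps=1e-7, steps=1000):
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta_1 * m + (1 - beta_1) * g        # first-moment (mean) estimate
        v = beta_2 * v + (1 - beta_2) * g * g    # second-moment estimate
        m_hat = m / (1 - beta_1 ** t)            # bias correction for early steps
        v_hat = v / (1 - beta_2 ** t)
        w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w

print(sgd_momentum(0.0))   # close to 3
print(adam(0.0))           # close to 3
```

Plain SGD is the special case `momentum=0`. Adam's division by `sqrt(v_hat)` gives each weight its own adaptive step size, which is the main difference from SGD.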

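Loss options:

- `'mse'` or `tf.keras.losses.MeanSquaredError()`

- `'sparse_categorical_crossentropy'` or `tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False)`. `from_logits` states whether the network output is raw scores (logits). Use `False` when the output has already passed through softmax and is a probability distribution. Use `True` when it has not.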
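The two losses and the meaning of `from_logits` can be checked numerically. The NumPy sketch below uses hypothetical helper names. It computes MSE and sparse categorical cross-entropy from their standard formulas. Raw logits with `from_logits=True` give the same value as softmax probabilities with `from_logits=False`. This illustrates the math and is not Keras's code, which may also clip probabilities for numerical safety.

```python
import numpy as np

def mse(y_true, y_pred):
    # Mean of the squared differences
    return np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2)

def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def sparse_categorical_crossentropy(labels, outputs, from_logits=False):
    # labels: integer class ids, shape (batch,)
    # outputs: probabilities (from_logits=False) or raw scores (from_logits=True)
    probs = softmax(outputs) if from_logits else outputs
    picked = probs[np.arange(len(labels)), labels]   # probability of the true class
    return np.mean(-np.log(picked))

labels = np.array([1, 0])
logits = np.array([[1.0, 3.0, 0.5],
                   [2.0, 0.1, 0.2]])

print(mse([1.0, 2.0], [1.5, 2.5]))   # 0.25
ce_logits = sparse_categorical_crossentropy(labels, logits, from_logits=True)
ce_probs = sparse_categorical_crossentropy(labels, softmax(logits), from_logits=False)
print(ce_logits, ce_probs)           # the same value twice
```

A mismatch causes trouble, for example `from_logits=False` on a layer without softmax. The loss is then computed on numbers that are not probabilities.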

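Metrics options (`y_` is the label, `y` is the network's prediction):

- `'accuracy'`: both `y_` and `y` are plain values, e.g. `y_=[1]`, `y=[1]`. When the string `'accuracy'` is passed to `compile()`, Keras picks the matching variant based on the loss and the output shape.

- `'categorical_accuracy'`: both `y_` and `y` are one-hot codes (probability distributions), e.g. `y_=[0,1,0]`, `y=[0.256,0.695,0.048]`.

- `'sparse_categorical_accuracy'`: `y_` is a value and `y` is a probability distribution, e.g. `y_=[1]`, `y=[0.256,0.695,0.048]`.

`model.fit(training_inputs, training_labels, batch_size=..., epochs=..., validation_data=(test_inputs, test_labels), validation_split=fraction_of_training_data_held_out_for_validation, validation_freq=validate_every_N_epochs)`

Pass either `validation_data` or `validation_split`, not both.

`model.summary()`

Iris classification with the six-step method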
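The Iris code uses integer labels with softmax outputs, the case `sparse_categorical_accuracy` is meant for. The NumPy sketch below is a rough illustration with hypothetical helper names, not Keras's code. It reduces the three metrics listed above to simple comparisons, using the example values from that list.

```python
import numpy as np

def accuracy(y_true, y_pred):
    # Both are plain class values: fraction of exact matches
    return np.mean(np.asarray(y_true) == np.asarray(y_pred))

def categorical_accuracy(y_true_onehot, y_prob):
    # Both are distributions: compare the positions of the largest entries
    return np.mean(np.argmax(y_true_onehot, axis=-1) == np.argmax(y_prob, axis=-1))

def sparse_categorical_accuracy(y_true, y_prob):
    # Label is a class id, prediction is a distribution: compare id with argmax
    return np.mean(np.asarray(y_true) == np.argmax(y_prob, axis=-1))

y_prob = np.array([[0.256, 0.695, 0.048]])
print(accuracy([1], [1]))                          # 1.0
print(categorical_accuracy([[0, 1, 0]], y_prob))   # 1.0
print(sparse_categorical_accuracy([1], y_prob))    # 1.0

two_preds = np.array([[0.7, 0.2, 0.1],
                      [0.5, 0.3, 0.2]])
print(sparse_categorical_accuracy([0, 2], two_preds))   # 0.5
```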

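```python
import tensorflow as tf
from sklearn import datasets
import numpy as np

# Steps 1-2: import modules and prepare the training data (features and labels)
x_train = datasets.load_iris().data
y_train = datasets.load_iris().target

# Shuffle features and labels with the same seed so that each sample stays paired
# with its label. The raw data is sorted by class, and validation_split takes the
# last 20% of the samples, so shuffling first matters.
np.random.seed(116)
np.random.shuffle(x_train)
np.random.seed(116)
np.random.shuffle(y_train)
tf.random.set_seed(116)

# Step 3: one Dense layer with 3 outputs, one per Iris class
model = tf.keras.models.Sequential([
    tf.keras.layers.Dense(3, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())
])

# Step 4: optimizer, loss and metric
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1),
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

# Step 5: hold out 20% of the data for validation, validate every 20 epochs
model.fit(x_train, y_train, batch_size=32, epochs=500, validation_split=0.2, validation_freq=20)

# Step 6: print the structure and parameter counts
model.summary()
```

2. Network-building boilerplate: class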

A neural network structure can also be wrapped in a class:

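```python
from tensorflow.keras import Model

class MyModel(Model):
    def __init__(self):
        super(MyModel, self).__init__()
        # Define the network building blocks (layers) here

    def call(self, x):
        # Call the building blocks to implement the forward pass
        y = x  # placeholder: replace with the actual layer calls
        return y

model = MyModel()
```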

- `__init__()`: defines the network building blocks that are needed.

- `call()`: writes out the forward pass.
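Splitting the work between `__init__()` and `call()` is a general pattern, not something specific to Keras. The sketch below is a framework-free analogy in plain NumPy. The class names `DenseBlock` and `IrisModelSketch` are hypothetical and the weights are random and untrained. Building blocks are created once in `__init__()`, and `call()` chains them into a forward pass. `__call__` forwards to `call()` to mimic calling a Keras model as `model(x)`. This simplifies what Keras actually does.

```python
import numpy as np

class DenseBlock:
    # Minimal stand-in for a fully connected layer with softmax: softmax(x @ W + b)
    def __init__(self, in_features, units, seed=0):
        rng = np.random.default_rng(seed)
        self.w = rng.normal(0.0, 0.1, (in_features, units))
        self.b = np.zeros(units)

    def __call__(self, x):
        z = x @ self.w + self.b
        e = np.exp(z - z.max(axis=1, keepdims=True))
        return e / e.sum(axis=1, keepdims=True)

class IrisModelSketch:
    def __init__(self):
        # __init__: define the building blocks (4 Iris features -> 3 classes)
        self.d1 = DenseBlock(4, 3)

    def call(self, x):
        # call: run the blocks in order to produce the output
        y = self.d1(x)
        return y

    def __call__(self, x):
        return self.call(x)

model = IrisModelSketch()
batch = np.array([[5.1, 3.5, 1.4, 0.2],
                  [6.2, 2.9, 4.3, 1.3]])   # two illustrative feature rows
out = model(batch)
print(out.shape)   # (2, 3)
```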

```python
from tensorflow.keras import Model
from tensorflow.keras.layers import Dense

class IrisModel(Model):
    def __init__(self):
        super(IrisModel, self).__init__()
        self.d1 = Dense(3)

    def call(self, x):
        y = self.d1(x)
        return y

model = IrisModel()
```

The complete Iris example written with a class:

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense
from tensorflow.keras import Model
from sklearn import datasets
import numpy as np

x_train = datasets.load_iris().data
y_train = datasets.load_iris().target

# Same-seed shuffles keep every feature row paired with its label
np.random.seed(116)
np.random.shuffle(x_train)
np.random.seed(116)
np.random.shuffle(y_train)
tf.random.set_seed(116)

class IrisModel(Model):
    def __init__(self):
        super(IrisModel, self).__init__()
        # Building block: one fully connected layer, softmax output, L2 regularization
        self.d1 = Dense(3, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

    def call(self, x):
        # Forward pass: input features -> Dense -> class probabilities
        y = self.d1(x)
        return y

model = IrisModel()

model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1),
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=500, validation_split=0.2, validation_freq=20)
model.summary()
```

3. The MNIST dataset

- MNIST数据集:

提供6万张28 * 28像素点的0~9手写数字图片和标签,用于训练。

提供1万张28 * 28像素点的0~9手写数字图片和标签,用于测试。 - 导入MNIST数据集:

mnist = tf.keras.datasets.mnist

(x_train,y_train),(x_test,y_test) = mnist.load_data()- 作为输入特征,输入神经网络时,将数据拉伸为一维数组:

tf.keras.layers.Flatten()

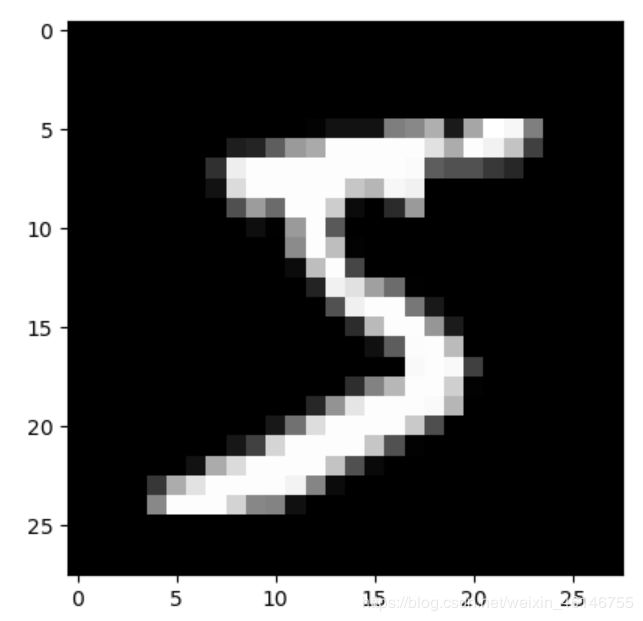

[ 0 0 0 48 238 252 252 ...... ....... 253 186 12 0 0 0 0 0 ]- 把训练集中的第一个样本x_train[0]可视化出来

plt.imshow(x_train[0],cmap='gray') #绘制灰度图

plt.show()- 用print函数把训练集中第一个样本的输入特征打印出来

print("x_train[0]:\n",x_train[0])- 用print把训练集中第一个样本的标签打印出来

print("y_train[0]:",y_train[0])

- 用print函数打印出测试集的形状

print("x_test.shape:",x_test.shape)import tensorflow as tf

from matplotlib import pyplot as plt

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

# 可视化训练集输入特征的第一个元素

plt.imshow(x_train[0], cmap='gray') # 绘制灰度图

plt.show()

# 打印出训练集输入特征的第一个元素

print("x_train[0]:\n", x_train[0])

# 打印出训练集标签的第一个元素

print("y_train[0]:\n", y_train[0])

# 打印出整个训练集输入特征形状

print("x_train.shape:\n", x_train.shape)

# 打印出整个训练集标签的形状

print("y_train.shape:\n", y_train.shape)

# 打印出整个测试集输入特征的形状

print("x_test.shape:\n", x_test.shape)

# 打印出整个测试集标签的形状

print("y_test.shape:\n", y_test.shape)报错:


- Download the MNIST dataset (`mnist.npz`) manually.

- Rewrite the `load_data()` method so that it reads the local file:

```python
import numpy as np

def load_data(path="D:/AI/class3/mnist.npz"):
    # Read the four arrays stored in the local mnist.npz archive
    with np.load(path, allow_pickle=True) as f:
        x_train, y_train = f['x_train'], f['y_train']
        x_test, y_test = f['x_test'], f['y_test']
    return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_data()
```

Training handwritten-digit recognition with Sequential
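Both scripts in this subsection load four arrays and divide the pixel values by 255.0. This turns uint8 values in [0, 255] into floats in [0, 1]. The sketch below checks the same `np.load` logic without the real dataset. It writes a tiny synthetic archive to a temporary directory, using the keys `x_train`, `y_train`, `x_test`, `y_test` and random placeholder data rather than MNIST. It then reads the archive back with a `load_data`-style function that takes the path as a parameter, and normalizes the result. The file name `tiny_mnist.npz` is hypothetical.

```python
import os
import tempfile
import numpy as np

def load_data(path):
    # Same reading logic as the rewritten loader, with the path as a parameter
    with np.load(path, allow_pickle=True) as f:
        x_train, y_train = f['x_train'], f['y_train']
        x_test, y_test = f['x_test'], f['y_test']
    return (x_train, y_train), (x_test, y_test)

rng = np.random.default_rng(0)
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "tiny_mnist.npz")   # hypothetical file name
    # Random placeholder arrays with MNIST-like shapes and dtypes (not real data)
    np.savez(path,
             x_train=rng.integers(0, 256, (6, 28, 28), dtype=np.uint8),
             y_train=rng.integers(0, 10, 6, dtype=np.uint8),
             x_test=rng.integers(0, 256, (2, 28, 28), dtype=np.uint8),
             y_test=rng.integers(0, 10, 2, dtype=np.uint8))
    (x_train, y_train), (x_test, y_test) = load_data(path)

# Normalize: uint8 values in [0, 255] become float64 values in [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0
print(x_train.shape, x_train.dtype, x_train.min(), x_train.max())
```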

```python
import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0  # normalize the input features

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)
model.summary()
```

The same training script, reading the data from the local `mnist.npz` with the rewritten `load_data()`:

```python
import tensorflow as tf
import numpy as np

def load_data(path="D:/AI/class3/mnist.npz"):
    with np.load(path, allow_pickle=True) as f:
        x_train, y_train = f['x_train'], f['y_train']
        x_test, y_test = f['x_test'], f['y_test']
    return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0  # normalize the input features

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),   # first layer
    tf.keras.layers.Dense(10, activation='softmax')  # second layer
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)
model.summary()
```

Training handwritten-digit recognition with a class

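Compared with the Sequential approach, only the way `model` is instantiated differs. `__init__()` defines the layers used in `call()`. `call()` runs one forward pass from the input `x` to the output `y` and returns `y`.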

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras import Model
import numpy as np

def load_data(path="D:/AI/class3/mnist.npz"):
    with np.load(path, allow_pickle=True) as f:
        x_train, y_train = f['x_train'], f['y_train']
        x_test, y_test = f['x_test'], f['y_test']
    return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

class MnistModel(Model):
    def __init__(self):
        super(MnistModel, self).__init__()
        # Building blocks used by call()
        self.flatten = Flatten()
        self.d1 = Dense(128, activation='relu')
        self.d2 = Dense(10, activation='softmax')

    def call(self, x):
        # Forward pass: flatten -> hidden layer -> output probabilities
        x = self.flatten(x)
        x = self.d1(x)
        y = self.d2(x)
        return y

model = MnistModel()

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)
model.summary()
```

4. The FASHION dataset

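- The FASHION dataset:

60,000 images of clothing and similar items, 28×28 pixels, with labels, for training.

10,000 images of clothing and similar items, 28×28 pixels, with labels, for testing.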

| Label | Description |
|---|---|
| 0 | T-shirt/top |
| 1 | Trouser |
| 2 | Pullover |
| 3 | Dress |
| 4 | Coat |
| 5 | Sandal |
| 6 | Shirt |
| 7 | Sneaker |
| 8 | Bag |
| 9 | Ankle boot |
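The labels are just the integers 0 to 9, so a prediction needs this table to become readable. The minimal sketch below assumes the model outputs one score row per image, as the softmax models in this note do. The score values are made up for illustration and the helper name `decode_predictions` is hypothetical.

```python
import numpy as np

# Class names in label order, taken from the table
CLASS_NAMES = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

def decode_predictions(probs):
    # probs: shape (batch, 10); pick the highest-scoring label for each row
    ids = np.argmax(probs, axis=1)
    return [CLASS_NAMES[i] for i in ids]

probs = np.zeros((2, 10))
probs[0, 9] = 0.9   # made-up output: highest score on label 9
probs[1, 1] = 0.8   # made-up output: highest score on label 1
print(decode_predictions(probs))   # ['Ankle boot', 'Trouser']
```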

- Import the FASHION dataset:

```python
fashion = tf.keras.datasets.fashion_mnist
(x_train, y_train), (x_test, y_test) = fashion.load_data()
```

- Import the local FASHION dataset. This loader is saved as `LoadData.py`, which the training scripts below import:

```python
from tensorflow.keras.utils import get_file
import gzip
import numpy as np

def load_data():
    # Local folder that holds the four downloaded .gz files
    base = "file:///D:/AI/class3/"
    files = ['train-labels-idx1-ubyte.gz', 'train-images-idx3-ubyte.gz',
             't10k-labels-idx1-ubyte.gz', 't10k-images-idx3-ubyte.gz']

    # get_file fetches (here: copies) each file into the Keras cache and returns its path
    paths = []
    for fname in files:
        paths.append(get_file(fname, origin=base + fname))

    # Label files: skip the 8-byte header; image files: skip the 16-byte header
    with gzip.open(paths[0], 'rb') as lbpath:
        y_train = np.frombuffer(lbpath.read(), np.uint8, offset=8)

    with gzip.open(paths[1], 'rb') as imgpath:
        x_train = np.frombuffer(
            imgpath.read(), np.uint8, offset=16).reshape(len(y_train), 28, 28)

    with gzip.open(paths[2], 'rb') as lbpath:
        y_test = np.frombuffer(lbpath.read(), np.uint8, offset=8)

    with gzip.open(paths[3], 'rb') as imgpath:
        x_test = np.frombuffer(
            imgpath.read(), np.uint8, offset=16).reshape(len(y_test), 28, 28)

    return (x_train, y_train), (x_test, y_test)
```

- Sequential approach:

```python
import tensorflow as tf
import LoadData  # LoadData.py: the local loader defined above

(x_train, y_train), (x_test, y_test) = LoadData.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)
model.summary()
```
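The `batch_size`, `epochs` and `validation_freq` arguments used in this note translate into simple counts. One epoch takes ceil(N / batch_size) steps, and the last batch may be smaller. With `validation_freq=k`, validation runs after every k-th epoch. The sketch below works these out with ordinary integer arithmetic. The helper names are hypothetical. The 120-sample figure assumes that `validation_split=0.2` leaves 120 of the 150 Iris samples for training.

```python
import math

def steps_per_epoch(num_samples, batch_size):
    # Number of batches needed to see every training sample once
    return math.ceil(num_samples / batch_size)

def validation_runs(epochs, validation_freq):
    # Validation happens after epochs validation_freq, 2 * validation_freq, ...
    return epochs // validation_freq

# Iris: 150 samples, 20% held out for validation -> 120 training samples
print(steps_per_epoch(120, 32), validation_runs(500, 20))   # 4 25
# MNIST / Fashion-MNIST: 60,000 training images, validated after every epoch
print(steps_per_epoch(60000, 32), validation_runs(5, 1))    # 1875 5
```

These step counts should match the progress counter Keras prints during `fit()`, such as 1875 steps per epoch for the MNIST and Fashion scripts.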

- Class approach:

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras import Model
import LoadData  # LoadData.py: the local loader defined above

(x_train, y_train), (x_test, y_test) = LoadData.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

class FashionModel(Model):
    def __init__(self):
        super(FashionModel, self).__init__()
        # Building blocks used by call()
        self.flatten = Flatten()
        self.d1 = Dense(128, activation='relu')
        self.d2 = Dense(10, activation='softmax')

    def call(self, x):
        # Forward pass: flatten -> hidden layer -> output probabilities
        x = self.flatten(x)
        x = self.d1(x)
        y = self.d2(x)
        return y

model = FashionModel()

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1)
model.summary()
```
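The `offset=8` and `offset=16` arguments in the local loader skip the headers of the IDX files. A label file starts with two big-endian 32-bit integers: the magic number and the item count. An image file starts with four: the magic number, image count, rows and columns. The sketch below builds a tiny gzipped IDX image file in memory with made-up pixel values. It parses the file two ways: it reads the header with `struct`, and it skips the header with `np.frombuffer(..., offset=16)` as the loader does. The magic number 2051 for image files comes from the MNIST file-format description, not from this note.

```python
import gzip
import struct
import numpy as np

# Build a tiny gzipped IDX image file in memory: 3 images of 28x28 pixels
num, rows, cols = 3, 28, 28
pixels = (np.arange(num * rows * cols) % 256).astype(np.uint8)   # made-up pixel values
header = struct.pack(">IIII", 2051, num, rows, cols)   # big-endian: magic, count, rows, cols
blob = gzip.compress(header + pixels.tobytes())

# Parse it the way the local loader does: skip the 16-byte header
raw = gzip.decompress(blob)
images = np.frombuffer(raw, np.uint8, offset=16).reshape(num, rows, cols)

# Read the header explicitly to confirm what was skipped
magic, n, r, c = struct.unpack(">IIII", raw[:16])
print(magic, n, r, c, images.shape)   # 2051 3 28 28 (3, 28, 28)
```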