Linear Regression: Detailed Algebraic Derivation of the Least Squares Method

Reference: 动手实战人工智能 AI By Doing

For the matrix-based solution, see: Detailed Matrix Derivation of the Least Squares Method

Basic definition:

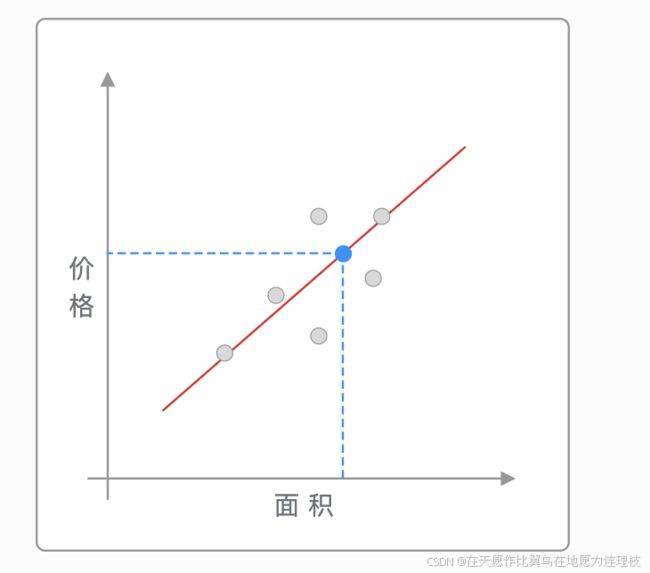

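As the figure above shows, linear regression is the process of finding a straight line that fits the overall trend of the data points. The "linear" in linear regression refers to a linear relationship, represented in the figure by the red line.

The goal of linear regression is therefore to find the red line that best fits the data.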

Simple linear regression (one input variable)

Let the linear function be $y(x, w) = w_0 + w_1x$. Different combinations of $w_0$ and $w_1$ give different fitted lines.

```python
import numpy as np

def f(x: np.ndarray, w0: float, w1: float) -> np.ndarray:
    """Univariate linear function: y = w0 + w1 * x"""
    y = w0 + w1 * x
    return y
```
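As a quick illustration, evaluating `f` with two different parameter pairs on the same inputs produces two different lines. The input values below are arbitrary and chosen only for this sketch.

```python
import numpy as np


def f(x: np.ndarray, w0: float, w1: float) -> np.ndarray:
    """Univariate linear function: y = w0 + w1 * x"""
    return w0 + w1 * x


# Hypothetical inputs, used only for illustration
x = np.array([0.0, 1.0, 2.0])

line_a = f(x, w0=1.0, w1=2.0)  # intercept 1, slope 2
line_b = f(x, w0=0.0, w1=1.0)  # intercept 0, slope 1
print(line_a)  # [1. 3. 5.]
print(line_b)  # [0. 1. 2.]
```

Each $(w_0, w_1)$ pair is one candidate line; the loss function below is what decides which candidate fits best.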

Squared loss function

- If a data point is $(x_i, y_i)$, its error (residual) is $y_i - (w_0 + w_1x_i)$.

- Summed over all $n$ data points, the total residual is:

$$\sum_{i=1}^{n}\left(y_i - (w_0 + w_1x_i)\right)$$

- In practice, the sum of squared residuals is used to measure the error over all sample points:

$$\sum_{i=1}^{n}\left(y_i - (w_0 + w_1x_i)\right)^2$$

Advantage: every term is non-negative, so the loss only accumulates, and positive and negative residuals cannot cancel each other out as they can in the plain sum.
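A two-point toy example (hypothetical residual values) makes the cancellation problem concrete: residuals of $+2$ and $-2$ sum to zero, which would wrongly suggest a perfect fit, while the sum of their squares is 8.

```python
import numpy as np

# Hypothetical residuals y_i - (w0 + w1 * x_i) for two data points
residuals = np.array([2.0, -2.0])

plain_sum = np.sum(residuals)                # +2 and -2 cancel out
squared_sum = np.sum(np.square(residuals))   # 4 + 4, no cancellation
print(plain_sum, squared_sum)  # 0.0 8.0
```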

```python
import numpy as np

def square_loss(x: np.ndarray, y: np.ndarray, w0: float, w1: float):
    """Squared loss: sum of squared residuals over all data points"""
    loss = np.sum(np.square(y - (w0 + w1 * x)))
    return loss
```
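To see how the loss ranks candidate lines, here is a small sketch on hypothetical data lying exactly on $y = 2x$: the line with $w_0 = 0, w_1 = 2$ has zero loss, while $w_0 = 1, w_1 = 1$ leaves residuals $0, 1, 2$ and a loss of $0 + 1 + 4 = 5$.

```python
import numpy as np


def square_loss(x: np.ndarray, y: np.ndarray, w0: float, w1: float) -> float:
    """Sum of squared residuals for the line y = w0 + w1 * x."""
    return float(np.sum(np.square(y - (w0 + w1 * x))))


# Hypothetical data lying exactly on y = 2x
x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 4.0, 6.0])

print(square_loss(x, y, w0=0.0, w1=2.0))  # 0.0
print(square_loss(x, y, w0=1.0, w1=1.0))  # 5.0
```

The smaller the loss, the better the line fits; least squares finds the pair $(w_0, w_1)$ that makes it as small as possible.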

Finding the $w_0$ and $w_1$ that minimize this loss calls for the following method:

Algebraic solution by least squares

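The "squares" in "least squares" refers to the squared loss function above: the least squares method is the method of finding the parameters that minimize the squared loss.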

$$f = \sum_{i=1}^{n}\left(y_i - (w_0 + w_1x_i)\right)^2$$

Its partial derivatives with respect to $w_0$ and $w_1$ are:

$$\frac{\partial f}{\partial w_0} = -2\left(\sum_{i=1}^{n} y_i - nw_0 - w_1\sum_{i=1}^{n} x_i\right)$$

$$\frac{\partial f}{\partial w_1} = -2\left(\sum_{i=1}^{n} x_iy_i - w_0\sum_{i=1}^{n} x_i - w_1\sum_{i=1}^{n} x_i^2\right)$$
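One way to sanity-check these two partial derivatives is to compare them with central finite differences of the loss. This is a minimal sketch assuming randomly generated data and an arbitrary evaluation point; `loss` and `grad_analytic` are hypothetical helper names. Because $f$ is quadratic in $(w_0, w_1)$, the central difference matches the analytic gradient up to floating-point rounding.

```python
import numpy as np


def loss(x, y, w0, w1):
    """Squared loss f(w0, w1) = sum((y - (w0 + w1 * x))**2)."""
    return np.sum((y - (w0 + w1 * x)) ** 2)


def grad_analytic(x, y, w0, w1):
    """Partial derivatives of f, using the formulas above."""
    n = x.shape[0]
    d_w0 = -2 * (np.sum(y) - n * w0 - w1 * np.sum(x))
    d_w1 = -2 * (np.sum(x * y) - w0 * np.sum(x) - w1 * np.sum(x * x))
    return d_w0, d_w1


# Hypothetical data and evaluation point
rng = np.random.default_rng(0)
x = rng.normal(size=20)
y = 3.0 + 1.5 * x + rng.normal(scale=0.1, size=20)
w0, w1, h = 0.5, -0.7, 1e-6

# Central differences: (f(w + h) - f(w - h)) / (2h)
num_w0 = (loss(x, y, w0 + h, w1) - loss(x, y, w0 - h, w1)) / (2 * h)
num_w1 = (loss(x, y, w0, w1 + h) - loss(x, y, w0, w1 - h)) / (2 * h)

ana_w0, ana_w1 = grad_analytic(x, y, w0, w1)
print(np.isclose(num_w0, ana_w0, rtol=1e-4), np.isclose(num_w1, ana_w1, rtol=1e-4))
```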

Setting $\frac{\partial f}{\partial w_0} = 0$ and $\frac{\partial f}{\partial w_1} = 0$ gives:

$$w_1 = \frac{n\sum x_iy_i - \sum x_i\sum y_i}{n\sum x_i^2 - \left(\sum x_i\right)^2}$$

$$w_0 = \frac{\sum x_i^2\sum y_i - \sum x_i\sum x_iy_i}{n\sum x_i^2 - \left(\sum x_i\right)^2}$$
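A tiny worked example helps check the arithmetic of these formulas. Take the hypothetical points $(1, 1)$, $(2, 2)$, $(3, 2)$:

$$\begin{align*}
n &= 3,\quad \sum x_i = 6,\quad \sum y_i = 5,\quad \sum x_iy_i = 1 + 4 + 6 = 11,\quad \sum x_i^2 = 14\\
n\sum x_i^2 - \left(\sum x_i\right)^2 &= 3 \cdot 14 - 6^2 = 6\\
w_1 &= \frac{3 \cdot 11 - 6 \cdot 5}{6} = \frac{3}{6} = 0.5\\
w_0 &= \frac{14 \cdot 5 - 6 \cdot 11}{6} = \frac{4}{6} = \frac{2}{3}
\end{align*}$$

The fitted line $y = \frac{2}{3} + 0.5x$ leaves residuals $-\frac{1}{6}, \frac{1}{3}, -\frac{1}{6}$, which sum to zero, as the condition $\frac{\partial f}{\partial w_0} = 0$ requires.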

```python
import numpy as np

def least_squares_algebraic(x: np.ndarray, y: np.ndarray):
    """Algebraic least squares solution for y = w0 + w1 * x"""
    n = x.shape[0]
    # Shared denominator: n * sum(x_i^2) - (sum(x_i))^2
    denom = n * np.sum(x * x) - np.sum(x) ** 2
    w1 = (n * np.sum(x * y) - np.sum(x) * np.sum(y)) / denom
    w0 = (np.sum(x * x) * np.sum(y) - np.sum(x) * np.sum(x * y)) / denom
    return w0, w1
```
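As a cross-check, the closed-form result can be compared with NumPy's general polynomial fit. This sketch assumes hypothetical noisy data around $y = 2 + 0.5x$; `np.polyfit(x, y, 1)` returns coefficients from the highest power down, i.e. `[slope, intercept]`.

```python
import numpy as np


def least_squares_algebraic(x: np.ndarray, y: np.ndarray):
    """Closed-form least squares fit of y = w0 + w1 * x."""
    n = x.shape[0]
    denom = n * np.sum(x * x) - np.sum(x) ** 2
    w1 = (n * np.sum(x * y) - np.sum(x) * np.sum(y)) / denom
    w0 = (np.sum(x * x) * np.sum(y) - np.sum(x) * np.sum(x * y)) / denom
    return w0, w1


# Hypothetical noisy data around y = 2 + 0.5x
rng = np.random.default_rng(42)
x = rng.uniform(0, 10, size=50)
y = 2.0 + 0.5 * x + rng.normal(scale=0.3, size=50)

w0, w1 = least_squares_algebraic(x, y)
# polyfit with deg=1 returns [slope, intercept]
slope, intercept = np.polyfit(x, y, 1)
print(np.allclose([w0, w1], [intercept, slope]))  # True
```

Note that the formulas divide by $n\sum x_i^2 - (\sum x_i)^2$, which is zero when all $x_i$ are equal; in that case no unique line exists.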

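```python
import matplotlib.pyplot as plt
import numpy as np

# Plotting: assumes x, y (the sample data arrays) are defined earlier, not shown here
w0, w1 = least_squares_algebraic(x, y)
# x_temp (assumed): evenly spaced points across the data range for drawing the line
x_temp = np.linspace(x.min(), x.max(), 100)
plt.scatter(x, y)
plt.plot(x_temp, x_temp * w1 + w0, "r")
plt.show()
```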

Detailed derivation

Partial derivative with respect to $w_0$

Write $u_i = y_i - (w_0 + w_1x_i)$. By the chain rule, $\frac{\partial (u_i^2)}{\partial w_0} = 2u_i\frac{\partial u_i}{\partial w_0} = -2u_i$, so:

$$\begin{align*}
\frac{\partial}{\partial w_0}\sum_{i=1}^{n}\left(y_i - (w_0 + w_1x_i)\right)^2 &= \sum_{i=1}^{n}\frac{\partial (u_i^2)}{\partial w_0}\\
&= \sum_{i=1}^{n} -2\left(y_i - (w_0 + w_1x_i)\right)\\
&= -2\sum_{i=1}^{n}\left(y_i - (w_0 + w_1x_i)\right)
\end{align*}$$

Partial derivative with respect to $w_1$

With $u_i = y_i - (w_0 + w_1x_i)$ as before, now $\frac{\partial u_i}{\partial w_1} = -x_i$, so $\frac{\partial (u_i^2)}{\partial w_1} = -2x_iu_i$:

$$\begin{align*}
\frac{\partial}{\partial w_1}\sum_{i=1}^{n}\left(y_i - (w_0 + w_1x_i)\right)^2 &= \sum_{i=1}^{n}\frac{\partial (u_i^2)}{\partial w_1}\\
&= \sum_{i=1}^{n} -2x_i\left(y_i - (w_0 + w_1x_i)\right)\\
&= -2\sum_{i=1}^{n}x_i\left(y_i - (w_0 + w_1x_i)\right)
\end{align*}$$

Setting $\frac{\partial f}{\partial w_0} = 0$ and $\frac{\partial f}{\partial w_1} = 0$ (the constant factor $-2$ can be dropped) gives:

$$w_0 = \frac{1}{n}\left(\sum_{i=1}^{n} y_i - w_1\sum_{i=1}^{n} x_i\right)$$

$$\sum_{i=1}^{n}x_iy_i - w_0\sum_{i=1}^{n}x_i - w_1\sum_{i=1}^{n}x_i^2 = 0$$

Substituting $w_0 = \frac{1}{n}\left(\sum_{i=1}^{n}y_i - w_1\sum_{i=1}^{n}x_i\right)$ into $\sum_{i=1}^{n}x_iy_i - w_0\sum_{i=1}^{n}x_i - w_1\sum_{i=1}^{n}x_i^2 = 0$:

$$\begin{align*}
\sum_{i=1}^{n}x_iy_i - \frac{1}{n}\left(\sum_{i=1}^{n}y_i - w_1\sum_{i=1}^{n}x_i\right)\sum_{i=1}^{n}x_i - w_1\sum_{i=1}^{n}x_i^2 &= 0\\
n\sum_{i=1}^{n}x_iy_i - \left(\sum_{i=1}^{n}y_i - w_1\sum_{i=1}^{n}x_i\right)\sum_{i=1}^{n}x_i - nw_1\sum_{i=1}^{n}x_i^2 &= 0\\
n\sum_{i=1}^{n}x_iy_i - \sum_{i=1}^{n}x_i\sum_{i=1}^{n}y_i + w_1\left(\sum_{i=1}^{n}x_i\right)^2 - nw_1\sum_{i=1}^{n}x_i^2 &= 0\\
w_1\left(n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2\right) &= n\sum_{i=1}^{n}x_iy_i - \sum_{i=1}^{n}x_i\sum_{i=1}^{n}y_i\\
w_1 &= \frac{n\sum_{i=1}^{n}x_iy_i - \sum_{i=1}^{n}x_i\sum_{i=1}^{n}y_i}{n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2}
\end{align*}$$
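As a numerical sanity check on this derivation, the sketch below (random hypothetical data) computes $w_1$ from the final expression, obtains $w_0$ from $w_0 = \frac{1}{n}\left(\sum y_i - w_1\sum x_i\right)$, and confirms that the second stationarity equation $\sum x_iy_i - w_0\sum x_i - w_1\sum x_i^2 = 0$ holds up to floating-point error.

```python
import numpy as np

# Hypothetical data
rng = np.random.default_rng(1)
x = rng.uniform(-5, 5, size=30)
y = -1.0 + 2.0 * x + rng.normal(size=30)
n = x.shape[0]

# w1 from the last line of the derivation
w1 = (n * np.sum(x * y) - np.sum(x) * np.sum(y)) / (n * np.sum(x**2) - np.sum(x) ** 2)
# w0 from the first stationarity condition
w0 = (np.sum(y) - w1 * np.sum(x)) / n

# Left-hand side of the second stationarity equation; should be ~0
residual_eq = np.sum(x * y) - w0 * np.sum(x) - w1 * np.sum(x**2)
print(abs(residual_eq) < 1e-8)
```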

Substituting this $w_1$ back into the expression for $w_0$:

$$\begin{align*}
w_0 &= \frac{1}{n}\left[\sum_{i=1}^{n}y_i - \frac{n\sum_{i=1}^{n}x_iy_i - \sum_{i=1}^{n}x_i\sum_{i=1}^{n}y_i}{n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2}\cdot\sum_{i=1}^{n}x_i\right]\\
&= \frac{1}{n}\cdot\frac{\left(n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2\right)\sum_{i=1}^{n}y_i - \left(n\sum_{i=1}^{n}x_iy_i - \sum_{i=1}^{n}x_i\sum_{i=1}^{n}y_i\right)\sum_{i=1}^{n}x_i}{n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2}\\
&= \frac{\left(n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2\right)\sum_{i=1}^{n}y_i - n\sum_{i=1}^{n}x_iy_i\sum_{i=1}^{n}x_i + \sum_{i=1}^{n}x_i\sum_{i=1}^{n}y_i\sum_{i=1}^{n}x_i}{n\left(n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2\right)}\\
&= \frac{n\sum_{i=1}^{n}x_i^2\sum_{i=1}^{n}y_i - \left(\sum_{i=1}^{n}x_i\right)^2\sum_{i=1}^{n}y_i - n\sum_{i=1}^{n}x_iy_i\sum_{i=1}^{n}x_i + \left(\sum_{i=1}^{n}x_i\right)^2\sum_{i=1}^{n}y_i}{n\left(n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2\right)}\\
&= \frac{n\sum_{i=1}^{n}x_i^2\sum_{i=1}^{n}y_i - n\sum_{i=1}^{n}x_iy_i\sum_{i=1}^{n}x_i}{n\left(n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2\right)}\\
&= \frac{\sum_{i=1}^{n}x_i^2\sum_{i=1}^{n}y_i - \sum_{i=1}^{n}x_i\sum_{i=1}^{n}x_iy_i}{n\sum_{i=1}^{n}x_i^2 - \left(\sum_{i=1}^{n}x_i\right)^2}
\end{align*}$$
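The final expression for $w_0$ should agree with the intermediate form $w_0 = \frac{1}{n}\left(\sum y_i - w_1\sum x_i\right)$, which can equivalently be read as $\bar{y} - w_1\bar{x}$ using sample means. A quick numerical comparison on hypothetical random data:

```python
import numpy as np

# Hypothetical data
rng = np.random.default_rng(7)
x = rng.normal(loc=3.0, size=25)
y = 4.0 - 0.8 * x + rng.normal(scale=0.5, size=25)
n = x.shape[0]

sx, sy = np.sum(x), np.sum(y)
sxx, sxy = np.sum(x * x), np.sum(x * y)
denom = n * sxx - sx**2

w1 = (n * sxy - sx * sy) / denom
w0_final = (sxx * sy - sx * sxy) / denom   # last line of the derivation
w0_intermediate = (sy - w1 * sx) / n       # w0 = (sum y - w1 * sum x) / n
w0_means = np.mean(y) - w1 * np.mean(x)    # same expression written with means

print(np.allclose([w0_final, w0_intermediate], w0_means))
```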

Therefore:

$$w_1 = \frac{n\sum x_iy_i - \sum x_i\sum y_i}{n\sum x_i^2 - \left(\sum x_i\right)^2}$$

$$w_0 = \frac{\sum x_i^2\sum y_i - \sum x_i\sum x_iy_i}{n\sum x_i^2 - \left(\sum x_i\right)^2}$$