Android: Optimizing ZXing-Based QR Code Scanning

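Our company's app recently needed QR code scanning for outdoor work: reading QR codes printed on A4 paper and stuck onto objects such as shipping containers at the docks. Usually, once a QR scanner is integrated into an app and can read codes, nobody looks at it again. After our integration shipped, however, users reported that outdoors many QR codes could not be read at all, or were read very slowly. We tried it ourselves and found that this was indeed the case.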

We traced the recognition failures to the following main causes:

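- The labels are printed on A4 paper, so print quality is poor to begin with, and some of the dots (modules) may not have been printed at all.

- The outdoor environment is changeable, with dirt and dust on the labels.

- Exposure to sun and rain, plus all kinds of scratches, leaves the codes damaged and stained.

- Android phones vary widely: some have good, high-resolution cameras, while others have low-resolution ones.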

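That's roughly it. Yet WeChat, which uses QBar (as we understand it, an engine optimized on top of ZXing), quickly recognizes QR codes affected by all of the above; Alipay and DingTalk, whose scanners we understood to integrate the libqrencode library (although libqrencode itself only generates QR codes), read them too; and iOS apps that simply call the system scanner read them as well. So why can't our Android app?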

Our boss didn't care about any of that. He simply said: other people's apps can do it, so why can't yours? That makes it your problem...

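We tried many third-party QR code libraries from the web, some with thousands of stars, but none of them met the requirements above, and for a while we honestly didn't know what to do.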

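The only one that worked reasonably well was https://github.com/vondear/RxTool, but it still couldn't read codes with even slight damage. The popular library by 一片枫叶 (yipianfengye), with thousands of stars, couldn't even get close with codes in these conditions.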

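Guo Lin also recommended https://github.com/al4fun/SimpleScanner. Although it came recommended by a well-known expert, it did noticeably worse than RxTool. Guo Lin's article recommending QR code scanning libraries: https://mp.weixin.qq.com/s/aPqSK1FlsPiENzSE48BVUA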

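With nothing suitable available online, we had no choice but to do it ourselves. Our modified scanner can now read basically everything except damaged codes. It is sometimes a bit slow and needs a few refocusing attempts, but it is still slightly better than the libraries above.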

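The code follows. (Many of these classes were modified from the original ZXing classes: the class names are the same, but some of the methods have changed!)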

build.gradle

dependencies{

api fileTree(include: ['*.jar'], dir: 'libs')

api files('libs/core-3.3.0.jar')

// provided 'com.android.support:appcompat-v7:26.1.0'

compileOnly 'com.android.support:design:26.1.0'

compileOnly 'com.android.support:support-vector-drawable:26.1.0'

}

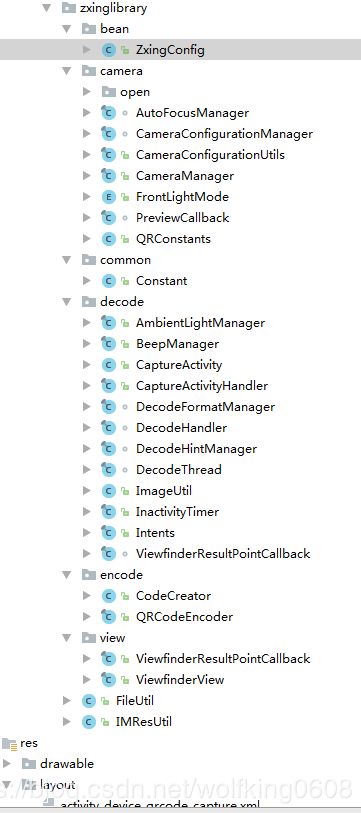

The file structure is as follows:

ZxingConfig.java

import java.io.Serializable;

public class ZxingConfig implements Serializable {

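/* Whether to play a beep */

private boolean isPlayBeep = true;

/* Whether to vibrate */

private boolean isShake = false;

/* Whether to show the bottom layout with the other functions */

private boolean isShowbottomLayout = true;

/* Whether to show the flashlight button */

private boolean isShowFlashLight = true;

/* Whether to show the album (gallery) button */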

private boolean isShowAlbum = true;

public boolean isPlayBeep() {

return isPlayBeep;

}

public void setPlayBeep(boolean playBeep) {

isPlayBeep = playBeep;

}

public boolean isShake() {

return isShake;

}

public void setShake(boolean shake) {

isShake = shake;

}

public boolean isShowbottomLayout() {

return isShowbottomLayout;

}

public void setShowbottomLayout(boolean showbottomLayout) {

isShowbottomLayout = showbottomLayout;

}

public boolean isShowFlashLight() {

return isShowFlashLight;

}

public void setShowFlashLight(boolean showFlashLight) {

isShowFlashLight = showFlashLight;

}

public boolean isShowAlbum() {

return isShowAlbum;

}

public void setShowAlbum(boolean showAlbum) {

isShowAlbum = showAlbum;

}

}
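Since ZxingConfig implements Serializable, it is presumably passed to the scanning Activity as an Intent extra through Android's `Intent.putExtra(String, Serializable)` overload, which copies the object with Java serialization when the Intent is parceled. The plain-Java sketch below illustrates that round trip with a trimmed, hypothetical copy named `ScanConfigSketch`; the class name and the explicit `serialVersionUID` are assumptions, not part of the original code.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical, trimmed copy of ZxingConfig used only to demonstrate the round trip
class ScanConfigSketch implements Serializable {
    // Assumption: an explicit serialVersionUID (the original class does not declare one)
    private static final long serialVersionUID = 1L;

    private boolean isPlayBeep = true;
    private boolean isShake = false;

    public boolean isPlayBeep() { return isPlayBeep; }
    public void setPlayBeep(boolean playBeep) { isPlayBeep = playBeep; }
    public boolean isShake() { return isShake; }
    public void setShake(boolean shake) { isShake = shake; }

    // Writes the object with Java serialization and reads it back as a new instance
    static ScanConfigSketch roundTrip(ScanConfigSketch config)
            throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(config);
        }
        try (ObjectInputStream in =
                     new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return (ScanConfigSketch) in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        ScanConfigSketch config = new ScanConfigSketch();
        config.setPlayBeep(false); // e.g. a silent scan screen
        config.setShake(true);
        ScanConfigSketch copy = roundTrip(config);
        System.out.println(copy.isPlayBeep() + " " + copy.isShake()); // prints "false true"
    }
}
```

Because the receiving side gets a copy, changes made to the config object after `startActivity()` are not visible to the scanning Activity.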

CameraFacing.java

public enum CameraFacing {

BACK, // must be value 0!

FRONT, // must be value 1!

}

OpenCamera.java

import android.hardware.Camera;

public final class OpenCamera {

private final int index;

private final Camera camera;

private final CameraFacing facing;

private final int orientation;

public OpenCamera(int index, Camera camera, CameraFacing facing, int orientation) {

this.index = index;

this.camera = camera;

this.facing = facing;

this.orientation = orientation;

}

public Camera getCamera() {

return camera;

}

public CameraFacing getFacing() {

return facing;

}

public int getOrientation() {

return orientation;

}

@Override

public String toString() {

return "Camera #" + index + " : " + facing + ',' + orientation;

}

}

OpenCameraInterface.java

import android.hardware.Camera;
import android.util.Log;

public final class OpenCameraInterface {

private static final String TAG = OpenCameraInterface.class.getName();

private OpenCameraInterface() {

}

/**

* For {@link #open(int)}, means no preference for which camera to open.

*/

public static final int NO_REQUESTED_CAMERA = -1;

/**

* Opens the requested camera with {@link Camera#open(int)}, if one exists.

*

* @param cameraId camera ID of the camera to use. A negative value

* or {@link #NO_REQUESTED_CAMERA} means "no preference", in which case a rear-facing

* camera is returned if possible or else any camera

* @return handle to {@link OpenCamera} that was opened

*/

public static OpenCamera open(int cameraId) {

int numCameras = Camera.getNumberOfCameras();

if (numCameras == 0) {

Log.w(TAG, "No cameras!");

return null;

}

boolean explicitRequest = cameraId >= 0;

Camera.CameraInfo selectedCameraInfo = null;

int index;

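if (explicitRequest) {

index = cameraId;

// Only query info for IDs that exist; an unknown ID is rejected by the index check below

if (index < numCameras) {

selectedCameraInfo = new Camera.CameraInfo();

Camera.getCameraInfo(index, selectedCameraInfo);

}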

} else {

index = 0;

while (index < numCameras) {

Camera.CameraInfo cameraInfo = new Camera.CameraInfo();

Camera.getCameraInfo(index, cameraInfo);

CameraFacing reportedFacing = CameraFacing.values()[cameraInfo.facing];

if (reportedFacing == CameraFacing.BACK) {

selectedCameraInfo = cameraInfo;

break;

}

index++;

}

}

Camera camera;

if (index < numCameras) {

Log.i(TAG, "Opening camera #" + index);

camera = Camera.open(index);

} else {

if (explicitRequest) {

Log.w(TAG, "Requested camera does not exist: " + cameraId);

camera = null;

} else {

Log.i(TAG, "No camera facing " + CameraFacing.BACK + "; returning camera #0");

camera = Camera.open(0);

selectedCameraInfo = new Camera.CameraInfo();

Camera.getCameraInfo(0, selectedCameraInfo);

}

}

if (camera == null) {

return null;

}

return new OpenCamera(index,

camera,

CameraFacing.values()[selectedCameraInfo.facing],

selectedCameraInfo.orientation);

}

}
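`open()` applies a three-step rule: an explicitly requested ID if it exists, otherwise the first rear-facing camera, otherwise camera #0. `android.hardware.Camera` cannot run off-device, so the sketch below models only that rule in plain Java over an array of facings; `CameraPickerSketch`, its nested `Facing` enum and the `-1` "not found" return value are hypothetical.

```java
// Hypothetical plain-Java model of the selection rule in OpenCameraInterface.open()
final class CameraPickerSketch {
    enum Facing { BACK, FRONT }

    static final int NO_REQUESTED_CAMERA = -1;

    /**
     * Returns the index of the camera to open, or -1 when there is no camera
     * or an explicitly requested camera does not exist.
     */
    static int pick(Facing[] facings, int requestedId) {
        int numCameras = facings.length;
        if (numCameras == 0) {
            return -1; // "No cameras!"
        }
        if (requestedId >= 0) {
            // Explicit request: honour it only if that ID exists
            return requestedId < numCameras ? requestedId : -1;
        }
        for (int i = 0; i < numCameras; i++) {
            if (facings[i] == Facing.BACK) {
                return i; // first rear-facing camera wins
            }
        }
        return 0; // no rear camera: fall back to camera #0
    }

    public static void main(String[] args) {
        Facing[] frontThenBack = {Facing.FRONT, Facing.BACK};
        System.out.println(pick(frontThenBack, NO_REQUESTED_CAMERA));               // 1
        System.out.println(pick(new Facing[] {Facing.FRONT}, NO_REQUESTED_CAMERA)); // 0
        System.out.println(pick(frontThenBack, 5));                                 // -1
    }
}
```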

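AutoFocusManager.java

import android.content.Context;
import android.content.SharedPreferences;
import android.hardware.Camera;
import android.os.AsyncTask;
import android.preference.PreferenceManager;
import android.util.Log;

import java.util.ArrayList;
import java.util.Collection;
import java.util.concurrent.RejectedExecutionException;

final class AutoFocusManager implements Camera.AutoFocusCallback {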

private static final String TAG = AutoFocusManager.class.getSimpleName();

private static final long AUTO_FOCUS_INTERVAL_MS = 2000L;

private static final Collection<String> FOCUS_MODES_CALLING_AF;

static {

FOCUS_MODES_CALLING_AF = new ArrayList<>(2);

FOCUS_MODES_CALLING_AF.add(Camera.Parameters.FOCUS_MODE_AUTO);

FOCUS_MODES_CALLING_AF.add(Camera.Parameters.FOCUS_MODE_MACRO);

}

private boolean stopped;

private boolean focusing;

private final boolean useAutoFocus;

private final Camera camera;

private AsyncTask<?, ?, ?> outstandingTask;

AutoFocusManager(Context context, Camera camera) {

this.camera = camera;

SharedPreferences sharedPrefs = PreferenceManager.getDefaultSharedPreferences(context);

String currentFocusMode = camera.getParameters().getFocusMode();

useAutoFocus =

sharedPrefs.getBoolean(QRConstants.KEY_AUTO_FOCUS, true) &&

FOCUS_MODES_CALLING_AF.contains(currentFocusMode);

Log.i(TAG, "Current focus mode '" + currentFocusMode + "'; use auto focus? " + useAutoFocus);

start();

}

@Override

public synchronized void onAutoFocus(boolean success, Camera theCamera) {

focusing = false;

autoFocusAgainLater();

}

private synchronized void autoFocusAgainLater() {

if (!stopped && outstandingTask == null) {

AutoFocusTask newTask = new AutoFocusTask();

try {

newTask.executeOnExecutor(AsyncTask.THREAD_POOL_EXECUTOR);

outstandingTask = newTask;

} catch (RejectedExecutionException ree) {

Log.w(TAG, "Could not request auto focus", ree);

}

}

}

synchronized void start() {

// if (useAutoFocus) {

outstandingTask = null;

if (!stopped && !focusing) {

try {

camera.autoFocus(this);

focusing = true;

} catch (RuntimeException re) {

// Have heard RuntimeException reported in Android 4.0.x+; continue?

Log.w(TAG, "Unexpected exception while focusing", re);

// Try again later to keep cycle going

autoFocusAgainLater();

}

}

// }

}

private synchronized void cancelOutstandingTask() {

if (outstandingTask != null) {

if (outstandingTask.getStatus() != AsyncTask.Status.FINISHED) {

outstandingTask.cancel(true);

}

outstandingTask = null;

}

}

synchronized void stop() {

stopped = true;

// if (useAutoFocus) {

cancelOutstandingTask();

// Doesn't hurt to call this even if not focusing

try {

camera.cancelAutoFocus();

} catch (RuntimeException re) {

// Have heard RuntimeException reported in Android 4.0.x+; continue?

Log.w(TAG, "Unexpected exception while cancelling focusing", re);

}

// }

}

private final class AutoFocusTask extends AsyncTask<Object, Object, Object> {

@Override

protected Object doInBackground(Object... voids) {

try {

Thread.sleep(AUTO_FOCUS_INTERVAL_MS);

} catch (InterruptedException e) {

// continue

}

start();

return null;

}

}

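}

CameraConfigurationManager.java

import android.content.Context;
import android.graphics.Point;
import android.hardware.Camera;
import android.util.Log;
import android.view.Display;
import android.view.Surface;
import android.view.WindowManager;

final class CameraConfigurationManager {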

private static final String TAG = "CameraConfiguration";

private final Context context;

private int cwNeededRotation;

private int cwRotationFromDisplayToCamera;

private Point screenResolution;

private Point cameraResolution;

private Point bestPreviewSize;

private Point previewSizeOnScreen;

CameraConfigurationManager(Context context) {

this.context = context;

}

/**

* Reads, one time, values from the camera that are needed by the app.

*/

void initFromCameraParameters(OpenCamera camera) {

Camera.Parameters parameters = camera.getCamera().getParameters();

WindowManager manager = (WindowManager) context.getSystemService(Context.WINDOW_SERVICE);

Display display = manager.getDefaultDisplay();

int displayRotation = display.getRotation();

int cwRotationFromNaturalToDisplay;

switch (displayRotation) {

case Surface.ROTATION_0:

cwRotationFromNaturalToDisplay = 0;

break;

case Surface.ROTATION_90:

cwRotationFromNaturalToDisplay = 90;

break;

case Surface.ROTATION_180:

cwRotationFromNaturalToDisplay = 180;

break;

case Surface.ROTATION_270:

cwRotationFromNaturalToDisplay = 270;

break;

default:

// Have seen this return incorrect values like -90

if (displayRotation % 90 == 0) {

cwRotationFromNaturalToDisplay = (360 + displayRotation) % 360;

} else {

throw new IllegalArgumentException("Bad rotation: " + displayRotation);

}

}

Log.i(TAG, "Display at: " + cwRotationFromNaturalToDisplay);

int cwRotationFromNaturalToCamera = camera.getOrientation();

Log.i(TAG, "Camera at: " + cwRotationFromNaturalToCamera);

// Still not 100% sure about this. But acts like we need to flip this:

if (camera.getFacing() == CameraFacing.FRONT) {

cwRotationFromNaturalToCamera = (360 - cwRotationFromNaturalToCamera) % 360;

Log.i(TAG, "Front camera overridden to: " + cwRotationFromNaturalToCamera);

}

/*

SharedPreferences prefs = PreferenceManager.getDefaultSharedPreferences(context);

String overrideRotationString;

if (camera.getFacing() == CameraFacing.FRONT) {

overrideRotationString = prefs.getString(PreferencesActivity.KEY_FORCE_CAMERA_ORIENTATION_FRONT, null);

} else {

overrideRotationString = prefs.getString(PreferencesActivity.KEY_FORCE_CAMERA_ORIENTATION, null);

}

if (overrideRotationString != null && !"-".equals(overrideRotationString)) {

Log.i(TAG, "Overriding camera manually to " + overrideRotationString);

cwRotationFromNaturalToCamera = Integer.parseInt(overrideRotationString);

}

*/

cwRotationFromDisplayToCamera =

(360 + cwRotationFromNaturalToCamera - cwRotationFromNaturalToDisplay) % 360;

Log.i(TAG, "Final display orientation: " + cwRotationFromDisplayToCamera);

if (camera.getFacing() == CameraFacing.FRONT) {

Log.i(TAG, "Compensating rotation for front camera");

cwNeededRotation = (360 - cwRotationFromDisplayToCamera) % 360;

} else {

cwNeededRotation = cwRotationFromDisplayToCamera;

}

Log.i(TAG, "Clockwise rotation from display to camera: " + cwNeededRotation);

Point theScreenResolution = new Point();

display.getSize(theScreenResolution);

screenResolution = theScreenResolution;

Log.i(TAG, "Screen resolution in current orientation: " + screenResolution);

cameraResolution = CameraConfigurationUtils.findBestPreviewSizeValue(parameters, screenResolution);

Log.i(TAG, "Camera resolution: " + cameraResolution);

bestPreviewSize = CameraConfigurationUtils.findBestPreviewSizeValue(parameters, screenResolution);

Log.i(TAG, "Best available preview size: " + bestPreviewSize);

boolean isScreenPortrait = screenResolution.x < screenResolution.y;

boolean isPreviewSizePortrait = bestPreviewSize.x < bestPreviewSize.y;

if (isScreenPortrait == isPreviewSizePortrait) {

previewSizeOnScreen = bestPreviewSize;

} else {

previewSizeOnScreen = new Point(bestPreviewSize.y, bestPreviewSize.x);

}

Log.i(TAG, "Preview size on screen: " + previewSizeOnScreen);

}

void setDesiredCameraParameters(OpenCamera camera, boolean safeMode) {

Camera theCamera = camera.getCamera();

Camera.Parameters parameters = theCamera.getParameters();

if (parameters == null) {

Log.w(TAG, "Device error: no camera parameters are available. Proceeding without configuration.");

return;

}

Log.i(TAG, "Initial camera parameters: " + parameters.flatten());

if (safeMode) {

Log.w(TAG, "In camera config safe mode -- most settings will not be honored");

}

// SharedPreferences prefs = PreferenceManager.getDefaultSharedPreferences(context);

initializeTorch(parameters, safeMode, QRConstants.disableExposure);

CameraConfigurationUtils.setFocus(

parameters,

// Whether to use auto focus

QRConstants.autoFocus,

true,

safeMode);

if (!safeMode) {

//

//CameraConfigurationUtils.setInvertColor(parameters);

CameraConfigurationUtils.setBarcodeSceneMode(parameters);

CameraConfigurationUtils.setVideoStabilization(parameters);

CameraConfigurationUtils.setFocusArea(parameters);

CameraConfigurationUtils.setMetering(parameters);

}

parameters.setPreviewSize(bestPreviewSize.x, bestPreviewSize.y);

theCamera.setParameters(parameters);

theCamera.setDisplayOrientation(cwRotationFromDisplayToCamera);

Camera.Parameters afterParameters = theCamera.getParameters();

Camera.Size afterSize = afterParameters.getPreviewSize();

if (afterSize != null && (bestPreviewSize.x != afterSize.width || bestPreviewSize.y != afterSize.height)) {

Log.w(TAG, "Camera said it supported preview size " + bestPreviewSize.x + 'x' + bestPreviewSize.y +

", but after setting it, preview size is " + afterSize.width + 'x' + afterSize.height);

bestPreviewSize.x = afterSize.width;

bestPreviewSize.y = afterSize.height;

}

}

Point getBestPreviewSize() {

return bestPreviewSize;

}

Point getPreviewSizeOnScreen() {

return previewSizeOnScreen;

}

Point getCameraResolution() {

return cameraResolution;

}

Point getScreenResolution() {

return screenResolution;

}

int getCWNeededRotation() {

return cwNeededRotation;

}

boolean getTorchState(Camera camera) {

if (camera != null) {

Camera.Parameters parameters = camera.getParameters();

if (parameters != null) {

String flashMode = parameters.getFlashMode();

return flashMode != null &&

(Camera.Parameters.FLASH_MODE_ON.equals(flashMode) ||

Camera.Parameters.FLASH_MODE_TORCH.equals(flashMode));

}

}

return false;

}

void setTorch(Camera camera, boolean newSetting) {

Camera.Parameters parameters = camera.getParameters();

doSetTorch(parameters, newSetting, false, QRConstants.disableExposure);

camera.setParameters(parameters);

}

private void initializeTorch(Camera.Parameters parameters, boolean safeMode, boolean disableExposure) {

boolean currentSetting = QRConstants.frontLightMode == FrontLightMode.ON;

doSetTorch(parameters, currentSetting, safeMode, disableExposure);

}

private void doSetTorch(Camera.Parameters parameters, boolean newSetting, boolean safeMode, boolean disableExposure) {

CameraConfigurationUtils.setTorch(parameters, newSetting);

if (!safeMode && !disableExposure) {

CameraConfigurationUtils.setBestExposure(parameters, newSetting);

}

}

}
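The orientation logic in `initFromCameraParameters()` boils down to modular arithmetic on clockwise angles. The plain-Java sketch below reproduces it so the numbers can be checked without a device; `RotationSketch` is a hypothetical name, and the display rotation is taken in degrees, i.e. after the `Surface.ROTATION_*` switch has been applied.

```java
import java.util.Arrays;

// Hypothetical plain-Java model of the rotation math in initFromCameraParameters()
final class RotationSketch {

    /**
     * @param displayDegrees    clockwise rotation from natural orientation to display (0/90/180/270)
     * @param cameraOrientation CameraInfo.orientation of the sensor
     * @param frontFacing       whether the camera is front-facing
     * @return {cwRotationFromDisplayToCamera, cwNeededRotation}
     */
    static int[] compute(int displayDegrees, int cameraOrientation, boolean frontFacing) {
        int naturalToCamera = cameraOrientation;
        if (frontFacing) {
            // The original code flips the angle for front cameras
            naturalToCamera = (360 - naturalToCamera) % 360;
        }
        // Value passed to Camera.setDisplayOrientation()
        int displayToCamera = (360 + naturalToCamera - displayDegrees) % 360;
        // Front cameras get the compensating rotation, rear cameras the same value
        int needed = frontFacing ? (360 - displayToCamera) % 360 : displayToCamera;
        return new int[] {displayToCamera, needed};
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(compute(0, 90, false)));  // [90, 90]
        System.out.println(Arrays.toString(compute(0, 270, true)));  // [90, 270]
        System.out.println(Arrays.toString(compute(90, 90, false))); // [0, 0]
    }
}
```

For a phone held upright with a rear sensor mounted at 90° (a common arrangement), both values come out as 90°, which is why the portrait preview needs `setDisplayOrientation(90)`.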

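CameraConfigurationUtils.java

import android.annotation.TargetApi;
import android.graphics.Point;
import android.graphics.Rect;
import android.hardware.Camera;
import android.os.Build;
import android.util.Log;

import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.Comparator;
import java.util.Iterator;
import java.util.List;
import java.util.regex.Pattern;

@TargetApi(Build.VERSION_CODES.ICE_CREAM_SANDWICH_MR1)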

public final class CameraConfigurationUtils {

private static final String TAG = "CameraConfiguration";

private static final Pattern SEMICOLON = Pattern.compile(";");

private static final int MIN_PREVIEW_PIXELS = 480 * 320; // normal screen

private static final float MAX_EXPOSURE_COMPENSATION = 1.5f;

private static final float MIN_EXPOSURE_COMPENSATION = 0.0f;

private static final double MAX_ASPECT_DISTORTION = 0.15;

private static final int MIN_FPS = 10;

private static final int MAX_FPS = 20;

private static final int AREA_PER_1000 = 400;

private CameraConfigurationUtils() {

}

public static void setFocus(Camera.Parameters parameters,

boolean autoFocus,

boolean disableContinuous,

boolean safeMode) {

List<String> supportedFocusModes = parameters.getSupportedFocusModes();

String focusMode = null;

if (autoFocus) {

if (safeMode || disableContinuous) {

focusMode = findSettableValue("focus mode",

supportedFocusModes,

Camera.Parameters.FOCUS_MODE_AUTO);

} else {

focusMode = findSettableValue("focus mode",

supportedFocusModes,

Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE,

Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO,

Camera.Parameters.FOCUS_MODE_AUTO);

}

}

// Maybe selected auto-focus but not available, so fall through here:

if (!safeMode && focusMode == null) {

focusMode = findSettableValue("focus mode",

supportedFocusModes,

Camera.Parameters.FOCUS_MODE_MACRO,

Camera.Parameters.FOCUS_MODE_EDOF);

}

if (focusMode != null) {

if (focusMode.equals(parameters.getFocusMode())) {

Log.i(TAG, "Focus mode already set to " + focusMode);

} else {

parameters.setFocusMode(focusMode);

}

}

}

public static void setTorch(Camera.Parameters parameters, boolean on) {

List<String> supportedFlashModes = parameters.getSupportedFlashModes();

String flashMode;

if (on) {

flashMode = findSettableValue("flash mode",

supportedFlashModes,

Camera.Parameters.FLASH_MODE_TORCH,

Camera.Parameters.FLASH_MODE_ON);

} else {

flashMode = findSettableValue("flash mode",

supportedFlashModes,

Camera.Parameters.FLASH_MODE_OFF);

}

if (flashMode != null) {

if (flashMode.equals(parameters.getFlashMode())) {

Log.i(TAG, "Flash mode already set to " + flashMode);

} else {

Log.i(TAG, "Setting flash mode to " + flashMode);

parameters.setFlashMode(flashMode);

}

}

}

public static void setBestExposure(Camera.Parameters parameters, boolean lightOn) {

int minExposure = parameters.getMinExposureCompensation();

int maxExposure = parameters.getMaxExposureCompensation();

float step = parameters.getExposureCompensationStep();

if ((minExposure != 0 || maxExposure != 0) && step > 0.0f) {

// Set low when light is on

float targetCompensation = lightOn ? MIN_EXPOSURE_COMPENSATION : MAX_EXPOSURE_COMPENSATION;

int compensationSteps = Math.round(targetCompensation / step);

float actualCompensation = step * compensationSteps;

// Clamp value:

compensationSteps = Math.max(Math.min(compensationSteps, maxExposure), minExposure);

if (parameters.getExposureCompensation() == compensationSteps) {

Log.i(TAG, "Exposure compensation already set to " + compensationSteps + " / " + actualCompensation);

} else {

Log.i(TAG, "Setting exposure compensation to " + compensationSteps + " / " + actualCompensation);

parameters.setExposureCompensation(compensationSteps);

}

} else {

Log.i(TAG, "Camera does not support exposure compensation");

}

}

public static void setBestPreviewFPS(Camera.Parameters parameters) {

setBestPreviewFPS(parameters, MIN_FPS, MAX_FPS);

}

public static void setBestPreviewFPS(Camera.Parameters parameters, int minFPS, int maxFPS) {

List<int[]> supportedPreviewFpsRanges = parameters.getSupportedPreviewFpsRange();

Log.i(TAG, "Supported FPS ranges: " + toString(supportedPreviewFpsRanges));

if (supportedPreviewFpsRanges != null && !supportedPreviewFpsRanges.isEmpty()) {

int[] suitableFPSRange = null;

for (int[] fpsRange : supportedPreviewFpsRanges) {

int thisMin = fpsRange[Camera.Parameters.PREVIEW_FPS_MIN_INDEX];

int thisMax = fpsRange[Camera.Parameters.PREVIEW_FPS_MAX_INDEX];

if (thisMin >= minFPS * 1000 && thisMax <= maxFPS * 1000) {

suitableFPSRange = fpsRange;

break;

}

}

if (suitableFPSRange == null) {

Log.i(TAG, "No suitable FPS range?");

} else {

int[] currentFpsRange = new int[2];

parameters.getPreviewFpsRange(currentFpsRange);

if (Arrays.equals(currentFpsRange, suitableFPSRange)) {

Log.i(TAG, "FPS range already set to " + Arrays.toString(suitableFPSRange));

} else {

Log.i(TAG, "Setting FPS range to " + Arrays.toString(suitableFPSRange));

parameters.setPreviewFpsRange(suitableFPSRange[Camera.Parameters.PREVIEW_FPS_MIN_INDEX],

suitableFPSRange[Camera.Parameters.PREVIEW_FPS_MAX_INDEX]);

}

}

}

}

public static void setFocusArea(Camera.Parameters parameters) {

if (parameters.getMaxNumFocusAreas() > 0) {

Log.i(TAG, "Old focus areas: " + toString(parameters.getFocusAreas()));

List<Camera.Area> middleArea = buildMiddleArea(AREA_PER_1000);

Log.i(TAG, "Setting focus area to : " + toString(middleArea));

parameters.setFocusAreas(middleArea);

} else {

Log.i(TAG, "Device does not support focus areas");

}

}

public static void setMetering(Camera.Parameters parameters) {

if (parameters.getMaxNumMeteringAreas() > 0) {

Log.i(TAG, "Old metering areas: " + toString(parameters.getMeteringAreas()));

List<Camera.Area> middleArea = buildMiddleArea(AREA_PER_1000);

Log.i(TAG, "Setting metering area to : " + toString(middleArea));

parameters.setMeteringAreas(middleArea);

} else {

Log.i(TAG, "Device does not support metering areas");

}

}

private static List<Camera.Area> buildMiddleArea(int areaPer1000) {

return Collections.singletonList(

new Camera.Area(new Rect(-areaPer1000, -areaPer1000, areaPer1000, areaPer1000), 1));

}

public static void setVideoStabilization(Camera.Parameters parameters) {

if (parameters.isVideoStabilizationSupported()) {

if (parameters.getVideoStabilization()) {

Log.i(TAG, "Video stabilization already enabled");

} else {

Log.i(TAG, "Enabling video stabilization...");

parameters.setVideoStabilization(true);

}

} else {

Log.i(TAG, "This device does not support video stabilization");

}

}

public static void setBarcodeSceneMode(Camera.Parameters parameters) {

if (Camera.Parameters.SCENE_MODE_BARCODE.equals(parameters.getSceneMode())) {

Log.i(TAG, "Barcode scene mode already set");

return;

}

String sceneMode = findSettableValue("scene mode",

parameters.getSupportedSceneModes(),

Camera.Parameters.SCENE_MODE_BARCODE);

if (sceneMode != null) {

parameters.setSceneMode(sceneMode);

}

}

public static void setZoom(Camera.Parameters parameters, double targetZoomRatio) {

if (parameters.isZoomSupported()) {

Integer zoom = indexOfClosestZoom(parameters, targetZoomRatio);

if (zoom == null) {

return;

}

if (parameters.getZoom() == zoom) {

Log.i(TAG, "Zoom is already set to " + zoom);

} else {

Log.i(TAG, "Setting zoom to " + zoom);

parameters.setZoom(zoom);

}

} else {

Log.i(TAG, "Zoom is not supported");

}

}

private static Integer indexOfClosestZoom(Camera.Parameters parameters, double targetZoomRatio) {

List<Integer> ratios = parameters.getZoomRatios();

Log.i(TAG, "Zoom ratios: " + ratios);

int maxZoom = parameters.getMaxZoom();

if (ratios == null || ratios.isEmpty() || ratios.size() != maxZoom + 1) {

Log.w(TAG, "Invalid zoom ratios!");

return null;

}

double target100 = 100.0 * targetZoomRatio;

double smallestDiff = Double.POSITIVE_INFINITY;

int closestIndex = 0;

for (int i = 0; i < ratios.size(); i++) {

double diff = Math.abs(ratios.get(i) - target100);

if (diff < smallestDiff) {

smallestDiff = diff;

closestIndex = i;

}

}

Log.i(TAG, "Chose zoom ratio of " + (ratios.get(closestIndex) / 100.0));

return closestIndex;

}

public static void setInvertColor(Camera.Parameters parameters) {

if (Camera.Parameters.EFFECT_NEGATIVE.equals(parameters.getColorEffect())) {

Log.i(TAG, "Negative effect already set");

return;

}

String colorMode = findSettableValue("color effect",

parameters.getSupportedColorEffects(),

Camera.Parameters.EFFECT_NEGATIVE);

if (colorMode != null) {

parameters.setColorEffect(colorMode);

}

}

public static Point findBestPreviewSizeValue(Camera.Parameters parameters, Point screenResolution) {

List<Camera.Size> rawSupportedSizes = parameters.getSupportedPreviewSizes();

if (rawSupportedSizes == null) {

Log.w(TAG, "Device returned no supported preview sizes; using default");

Camera.Size defaultSize = parameters.getPreviewSize();

if (defaultSize == null) {

throw new IllegalStateException("Parameters contained no preview size!");

}

return new Point(defaultSize.width, defaultSize.height);

}

// Sort by size, descending

List<Camera.Size> supportedPreviewSizes = new ArrayList<>(rawSupportedSizes);

Collections.sort(supportedPreviewSizes, new Comparator<Camera.Size>() {

@Override

public int compare(Camera.Size a, Camera.Size b) {

int aPixels = a.height * a.width;

int bPixels = b.height * b.width;

if (bPixels < aPixels) {

return -1;

}

if (bPixels > aPixels) {

return 1;

}

return 0;

}

});

if (Log.isLoggable(TAG, Log.INFO)) {

StringBuilder previewSizesString = new StringBuilder();

for (Camera.Size supportedPreviewSize : supportedPreviewSizes) {

previewSizesString.append(supportedPreviewSize.width).append('x')

.append(supportedPreviewSize.height).append(' ');

}

Log.i(TAG, "Supported preview sizes: " + previewSizesString);

}

double screenAspectRatio = (double) screenResolution.x / (double) screenResolution.y;

// Remove sizes that are unsuitable

Iterator<Camera.Size> it = supportedPreviewSizes.iterator();

while (it.hasNext()) {

Camera.Size supportedPreviewSize = it.next();

int realWidth = supportedPreviewSize.width;

int realHeight = supportedPreviewSize.height;

if (realWidth * realHeight < MIN_PREVIEW_PIXELS) {

it.remove();

continue;

}

boolean isCandidatePortrait = realWidth < realHeight;

int maybeFlippedWidth = isCandidatePortrait ? realHeight : realWidth;

int maybeFlippedHeight = isCandidatePortrait ? realWidth : realHeight;

double aspectRatio = (double) maybeFlippedWidth / (double) maybeFlippedHeight;

double distortion = Math.abs(aspectRatio - screenAspectRatio);

if (distortion > MAX_ASPECT_DISTORTION) {

it.remove();

continue;

}

if (maybeFlippedWidth == screenResolution.x && maybeFlippedHeight == screenResolution.y) {

Point exactPoint = new Point(realWidth, realHeight);

Log.i(TAG, "Found preview size exactly matching screen size: " + exactPoint);

return exactPoint;

}

}

// If no exact match, use largest preview size. This was not a great idea on older devices because

// of the additional computation needed. We're likely to get here on newer Android 4+ devices, where

// the CPU is much more powerful.

if (!supportedPreviewSizes.isEmpty()) {

Camera.Size largestPreview = supportedPreviewSizes.get(0);

Point largestSize = new Point(largestPreview.width, largestPreview.height);

Log.i(TAG, "Using largest suitable preview size: " + largestSize);

return largestSize;

}

// If there is nothing at all suitable, return current preview size

Camera.Size defaultPreview = parameters.getPreviewSize();

if (defaultPreview == null) {

throw new IllegalStateException("Parameters contained no preview size!");

}

Point defaultSize = new Point(defaultPreview.width, defaultPreview.height);

Log.i(TAG, "No suitable preview sizes, using default: " + defaultSize);

return defaultSize;

}

private static String findSettableValue(String name,

Collection<String> supportedValues,

String... desiredValues) {

Log.i(TAG, "Requesting " + name + " value from among: " + Arrays.toString(desiredValues));

Log.i(TAG, "Supported " + name + " values: " + supportedValues);

if (supportedValues != null) {

for (String desiredValue : desiredValues) {

if (supportedValues.contains(desiredValue)) {

Log.i(TAG, "Can set " + name + " to: " + desiredValue);

return desiredValue;

}

}

}

Log.i(TAG, "No supported values match");

return null;

}

private static String toString(Collection<int[]> arrays) {

if (arrays == null || arrays.isEmpty()) {

return "[]";

}

StringBuilder buffer = new StringBuilder();

buffer.append('[');

Iterator<int[]> it = arrays.iterator();

while (it.hasNext()) {

buffer.append(Arrays.toString(it.next()));

if (it.hasNext()) {

buffer.append(", ");

}

}

buffer.append(']');

return buffer.toString();

}

private static String toString(Iterable<Camera.Area> areas) {

if (areas == null) {

return null;

}

StringBuilder result = new StringBuilder();

for (Camera.Area area : areas) {

result.append(area.rect).append(':').append(area.weight).append(' ');

}

return result.toString();

}

public static String collectStats(Camera.Parameters parameters) {

return collectStats(parameters.flatten());

}

public static String collectStats(CharSequence flattenedParams) {

StringBuilder result = new StringBuilder(1000);

result.append("BOARD=").append(Build.BOARD).append('\n');

result.append("BRAND=").append(Build.BRAND).append('\n');

result.append("CPU_ABI=").append(Build.CPU_ABI).append('\n');

result.append("DEVICE=").append(Build.DEVICE).append('\n');

result.append("DISPLAY=").append(Build.DISPLAY).append('\n');

result.append("FINGERPRINT=").append(Build.FINGERPRINT).append('\n');

result.append("HOST=").append(Build.HOST).append('\n');

result.append("ID=").append(Build.ID).append('\n');

result.append("MANUFACTURER=").append(Build.MANUFACTURER).append('\n');

result.append("MODEL=").append(Build.MODEL).append('\n');

result.append("PRODUCT=").append(Build.PRODUCT).append('\n');

result.append("TAGS=").append(Build.TAGS).append('\n');

result.append("TIME=").append(Build.TIME).append('\n');

result.append("TYPE=").append(Build.TYPE).append('\n');

result.append("USER=").append(Build.USER).append('\n');

result.append("VERSION.CODENAME=").append(Build.VERSION.CODENAME).append('\n');

result.append("VERSION.INCREMENTAL=").append(Build.VERSION.INCREMENTAL).append('\n');

result.append("VERSION.RELEASE=").append(Build.VERSION.RELEASE).append('\n');

result.append("VERSION.SDK_INT=").append(Build.VERSION.SDK_INT).append('\n');

if (flattenedParams != null) {

String[] params = SEMICOLON.split(flattenedParams);

Arrays.sort(params);

for (String param : params) {

result.append(param).append('\n');

}

}

return result.toString();

}

}
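Two pieces of `CameraConfigurationUtils` are easy to check off-device: the preview-size filter in `findBestPreviewSizeValue()` and the exposure-compensation arithmetic in `setBestExposure()`. The plain-Java sketch below models both, with sizes as `{width, height}` int arrays instead of `Camera.Size` and the constants copied from the class above; `PreviewSizeSketch`, `findBest` and `exposureSteps` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Hypothetical plain-Java model of findBestPreviewSizeValue() and setBestExposure()
final class PreviewSizeSketch {
    static final int MIN_PREVIEW_PIXELS = 480 * 320;
    static final double MAX_ASPECT_DISTORTION = 0.15;
    static final float MAX_EXPOSURE_COMPENSATION = 1.5f;
    static final float MIN_EXPOSURE_COMPENSATION = 0.0f;

    /** Sizes are {width, height}; fallback stands in for parameters.getPreviewSize(). */
    static int[] findBest(List<int[]> supported, int screenX, int screenY, int[] fallback) {
        List<int[]> sizes = new ArrayList<>(supported);
        // Sort by pixel count, largest first
        sizes.sort((a, b) -> Integer.compare(b[0] * b[1], a[0] * a[1]));
        double screenAspectRatio = (double) screenX / screenY;
        Iterator<int[]> it = sizes.iterator();
        while (it.hasNext()) {
            int[] size = it.next();
            if (size[0] * size[1] < MIN_PREVIEW_PIXELS) {
                it.remove(); // below MIN_PREVIEW_PIXELS
                continue;
            }
            // Compare in landscape terms, as the original does
            int flippedW = Math.max(size[0], size[1]);
            int flippedH = Math.min(size[0], size[1]);
            double distortion = Math.abs((double) flippedW / flippedH - screenAspectRatio);
            if (distortion > MAX_ASPECT_DISTORTION) {
                it.remove(); // aspect ratio too far from the screen's
                continue;
            }
            if (flippedW == screenX && flippedH == screenY) {
                return size; // exact match with the screen
            }
        }
        // Otherwise the largest surviving size, or the default if none survived
        return sizes.isEmpty() ? fallback : sizes.get(0);
    }

    /** Returns the exposure-compensation index setBestExposure() would choose. */
    static int exposureSteps(int minExposure, int maxExposure, float step, boolean lightOn) {
        // Torch on: no extra exposure; torch off: aim for +1.5 EV
        float target = lightOn ? MIN_EXPOSURE_COMPENSATION : MAX_EXPOSURE_COMPENSATION;
        int steps = Math.round(target / step);
        // Clamp to the range the camera reports
        return Math.max(Math.min(steps, maxExposure), minExposure);
    }

    public static void main(String[] args) {
        List<int[]> sizes = Arrays.asList(
                new int[] {1920, 1080}, new int[] {1280, 720},
                new int[] {640, 480}, new int[] {320, 240});
        int[] fallback = {640, 480};
        System.out.println(Arrays.toString(findBest(sizes, 1920, 1080, fallback))); // [1920, 1080]
        System.out.println(Arrays.toString(findBest(sizes, 1776, 1080, fallback))); // [1920, 1080]
        System.out.println(Arrays.toString(findBest(sizes, 1080, 1920, fallback))); // [640, 480]
        System.out.println(exposureSteps(-4, 4, 0.5f, false)); // 3
        System.out.println(exposureSteps(-4, 4, 0.5f, true));  // 0
    }
}
```

The third call shows a consequence that is easy to miss: every candidate is flipped to landscape before its aspect ratio is compared with `screenResolution.x / screenResolution.y`. On a typical tall portrait screen such as 1080×1920 (aspect 0.5625), every candidate fails the distortion check and the method falls back to the camera's current preview size. If the scan Activity runs in portrait, comparing against a landscape-ordered screen size may be worth testing on your devices; that is a suggestion, not part of the original code.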

CameraManager.java

public final class CameraManager {

private static final String TAG = CameraManager.class.getSimpleName();

private static final int MIN_FRAME_WIDTH = 240;

private static final int MIN_FRAME_HEIGHT = 240;

private static final int MAX_FRAME_WIDTH = 1200; // = 5/8 * 1920

private static final int MAX_FRAME_HEIGHT = 675; // = 5/8 * 1080

private final Context context;

private final CameraConfigurationManager configManager;

private OpenCamera camera;

private AutoFocusManager autoFocusManager;

private Rect framingRect;

private Rect framingRectInPreview;

private boolean initialized;

private boolean previewing;

private int requestedCameraId = OpenCameraInterface.NO_REQUESTED_CAMERA;

private int requestedFramingRectWidth;

private int requestedFramingRectHeight;

/**

* Preview frames are delivered here, which we pass on to the registered handler. Make sure to

* clear the handler so it will only receive one message.

*/

private final PreviewCallback previewCallback;

public CameraManager(Context context) {

this.context = context;

this.configManager = new CameraConfigurationManager(context);

previewCallback = new PreviewCallback(configManager);

}

/**

* Opens the camera driver and initializes the hardware parameters.

*

* @param holder The surface object which the camera will draw preview frames into.

* @throws IOException Indicates the camera driver failed to open.

*/

public synchronized void openDriver(SurfaceHolder holder) throws IOException {

OpenCamera theCamera = camera;

if (theCamera == null) {

theCamera = OpenCameraInterface.open(requestedCameraId);

if (theCamera == null) {

throw new IOException("Camera.open() failed to return object from driver");

}

camera = theCamera;

}

if (!initialized) {

initialized = true;

configManager.initFromCameraParameters(theCamera);

if (requestedFramingRectWidth > 0 && requestedFramingRectHeight > 0) {

setManualFramingRect(requestedFramingRectWidth, requestedFramingRectHeight);

requestedFramingRectWidth = 0;

requestedFramingRectHeight = 0;

}

}

Camera cameraObject = theCamera.getCamera();

Camera.Parameters parameters = cameraObject.getParameters();

String parametersFlattened = parameters == null ? null : parameters.flatten(); // Save these, temporarily

try {

configManager.setDesiredCameraParameters(theCamera, false);

} catch (RuntimeException re) {

// Driver failed

Log.w(TAG, "Camera rejected parameters. Setting only minimal safe-mode parameters");

Log.i(TAG, "Resetting to saved camera params: " + parametersFlattened);

// Reset:

if (parametersFlattened != null) {

parameters = cameraObject.getParameters();

parameters.unflatten(parametersFlattened);

try {

cameraObject.setParameters(parameters);

configManager.setDesiredCameraParameters(theCamera, true);

} catch (RuntimeException re2) {

// Well, darn. Give up

Log.w(TAG, "Camera rejected even safe-mode parameters! No configuration");

}

}

}

cameraObject.setPreviewDisplay(holder);

}

public synchronized boolean isOpen() {

return camera != null;

}

/**

* Closes the camera driver if still in use.

*/

public synchronized void closeDriver() {

if (camera != null) {

camera.getCamera().release();

camera = null;

// Make sure to clear these each time we close the camera, so that any scanning rect

// requested by intent is forgotten.

framingRect = null;

framingRectInPreview = null;

}

}

/**

* Asks the camera hardware to begin drawing preview frames to the screen.

*/

public synchronized void startPreview() {

OpenCamera theCamera = camera;

if (theCamera != null && !previewing) {

theCamera.getCamera().startPreview();

previewing = true;

autoFocusManager = new AutoFocusManager(context, theCamera.getCamera());

}

}

/**

* Tells the camera to stop drawing preview frames.

*/

public synchronized void stopPreview() {

if (autoFocusManager != null) {

autoFocusManager.stop();

autoFocusManager = null;

}

if (camera != null && previewing) {

camera.getCamera().stopPreview();

previewCallback.setHandler(null, 0);

previewing = false;

}

}

/**

* Convenience method for {@link CaptureActivity}

*

* @param newSetting if {@code true}, light should be turned on if currently off. And vice versa.

*/

public synchronized void setTorch(boolean newSetting) {

OpenCamera theCamera = camera;

if (theCamera != null) {

if (newSetting != configManager.getTorchState(theCamera.getCamera())) {

boolean wasAutoFocusManager = autoFocusManager != null;

if (wasAutoFocusManager) {

autoFocusManager.stop();

autoFocusManager = null;

}

configManager.setTorch(theCamera.getCamera(), newSetting);

if (wasAutoFocusManager) {

autoFocusManager = new AutoFocusManager(context, theCamera.getCamera());

autoFocusManager.start();

}

}

}

}

/**

* A single preview frame will be returned to the handler supplied. The data will arrive as byte[]

* in the message.obj field, with width and height encoded as message.arg1 and message.arg2,

* respectively.

*

* @param handler The handler to send the message to.

* @param message The what field of the message to be sent.

*/

public synchronized void requestPreviewFrame(Handler handler, int message) {

OpenCamera theCamera = camera;

if (theCamera != null && previewing) {

previewCallback.setHandler(handler, message);

theCamera.getCamera().setOneShotPreviewCallback(previewCallback);

}

}

/**

* Calculates the framing rect which the UI should draw to show the user where to place the

* barcode. This target helps with alignment as well as forces the user to hold the device

* far enough away to ensure the image will be in focus.

*

* @return The rectangle to draw on screen in window coordinates.

*/

public synchronized Rect getFramingRect() {

if (framingRect == null) {

if (camera == null) {

return null;

}

Point screenResolution = configManager.getScreenResolution();

if (screenResolution == null) {

// Called early, before init even finished

return null;

}

int width = findDesiredDimensionInRange(screenResolution.x, MIN_FRAME_WIDTH, MAX_FRAME_WIDTH);

int height = findDesiredDimensionInRange(screenResolution.y, MIN_FRAME_HEIGHT, MAX_FRAME_HEIGHT);

// Keep the scan frame square (width == height)

int finalSize = height;

if (height > width) {

finalSize = width;

}

int leftOffset = (screenResolution.x - finalSize) / 2;

int topOffset = (screenResolution.y - finalSize) / 2;

framingRect = new Rect(leftOffset, topOffset, leftOffset + finalSize, topOffset + finalSize);

Log.d(TAG, "Calculated framing rect: " + framingRect + " width =" + width + " height = " + height +

" screenResolution.x = " + screenResolution.x + " screenResolution.y = " + screenResolution.y);

}

return framingRect;

}

private static int findDesiredDimensionInRange(int resolution, int hardMin, int hardMax) {

int dim = 5 * resolution / 8; // Target 5/8 of each dimension

if (dim < hardMin) {

return hardMin;

}

if (dim > hardMax) {

return hardMax;

}

return dim;

}

/**

* Like {@link #getFramingRect} but coordinates are in terms of the preview frame,

* not UI / screen.

*

* @return {@link Rect} expressing barcode scan area in terms of the preview size

*/

public synchronized Rect getFramingRectInPreview() {

if (framingRectInPreview == null) {

Rect framingRect = getFramingRect();

if (framingRect == null) {

return null;

}

Rect rect = new Rect(framingRect);

Point cameraResolution = configManager.getCameraResolution();

Point screenResolution = configManager.getScreenResolution();

if (cameraResolution == null || screenResolution == null) {

// Called early, before init even finished

return null;

}

rect.left = rect.left * cameraResolution.x / screenResolution.x;

rect.right = rect.right * cameraResolution.x / screenResolution.x;

rect.top = rect.top * cameraResolution.y / screenResolution.y;

rect.bottom = rect.bottom * cameraResolution.y / screenResolution.y;

framingRectInPreview = rect;

}

return framingRectInPreview;

}

/**

* Allows third party apps to specify the camera ID, rather than determine

* it automatically based on available cameras and their orientation.

*

* @param cameraId camera ID of the camera to use. A negative value means "no preference".

*/

public synchronized void setManualCameraId(int cameraId) {

requestedCameraId = cameraId;

}

/**

* Allows third party apps to specify the scanning rectangle dimensions, rather than determine

* them automatically based on screen resolution.

*

* @param width The width in pixels to scan.

* @param height The height in pixels to scan.

*/

public synchronized void setManualFramingRect(int width, int height) {

if (initialized) {

Point screenResolution = configManager.getScreenResolution();

if (width > screenResolution.x) {

width = screenResolution.x;

}

if (height > screenResolution.y) {

height = screenResolution.y;

}

int leftOffset = (screenResolution.x - width) / 2;

int topOffset = (screenResolution.y - height) / 2;

framingRect = new Rect(leftOffset, topOffset, leftOffset + width, topOffset + height);

Log.d(TAG, "Calculated manual framing rect: " + framingRect);

framingRectInPreview = null;

} else {

requestedFramingRectWidth = width;

requestedFramingRectHeight = height;

}

}

/**

* A factory method to build the appropriate LuminanceSource object based on the format

* of the preview buffers, as described by Camera.Parameters.

*

* @param data A preview frame.

* @param width The width of the image.

* @param height The height of the image.

* @return A PlanarYUVLuminanceSource instance.

*/

public PlanarYUVLuminanceSource buildLuminanceSource(byte[] data, int width, int height) {

Rect rect = getFramingRectInPreview();

if (rect == null) {

return null;

}

// Go ahead and assume it's YUV rather than die.

return new PlanarYUVLuminanceSource(data, width, height, rect.left, rect.top,

rect.width(), rect.height(), false);

}

public void openLight() {

if (camera != null && camera.getCamera() != null) {

Camera.Parameters parameter = camera.getCamera().getParameters();

parameter.setFlashMode(Camera.Parameters.FLASH_MODE_TORCH);

camera.getCamera().setParameters(parameter);

}

}

public void offLight() {

if (camera != null && camera.getCamera() != null) {

Camera.Parameters parameter = camera.getCamera().getParameters();

parameter.setFlashMode(Camera.Parameters.FLASH_MODE_OFF);

camera.getCamera().setParameters(parameter);

}

}

}
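
To make the framing-rect logic above easier to check, here is a plain-Java sketch of the same three steps. It uses no Android classes, so it runs anywhere:

1. Size a square scan box at 5/8 of the screen, clamped to limits.
2. Scale it into preview-frame coordinates, as `getFramingRectInPreview()` does.
3. Copy that region out of the Y (luminance) plane, which is the part of the frame `buildLuminanceSource()` hands to ZXing.

The `MIN_FRAME_*`/`MAX_FRAME_*` constants are not shown in this excerpt, so the values below are assumptions. An `int[] {left, top, right, bottom}` stands in for `android.graphics.Rect`, and the class name is hypothetical.

```java
import java.util.Arrays;

/** Plain-Java sketch of CameraManager's framing-rect math; not a library API. */
class FramingRectSketch {
    // Assumed limits: the real MIN/MAX_FRAME_* constants are not shown in this excerpt.
    static final int MIN_FRAME = 240;
    static final int MAX_FRAME_WIDTH = 1200;
    static final int MAX_FRAME_HEIGHT = 675;

    /** Target 5/8 of a dimension, clamped to [hardMin, hardMax]. */
    static int findDesiredDimensionInRange(int resolution, int hardMin, int hardMax) {
        int dim = 5 * resolution / 8;
        return Math.max(hardMin, Math.min(hardMax, dim));
    }

    /** Square scan box centred on the screen, as {left, top, right, bottom}. */
    static int[] squareFrame(int screenW, int screenH) {
        int w = findDesiredDimensionInRange(screenW, MIN_FRAME, MAX_FRAME_WIDTH);
        int h = findDesiredDimensionInRange(screenH, MIN_FRAME, MAX_FRAME_HEIGHT);
        int size = Math.min(w, h); // keep width == height
        int left = (screenW - size) / 2;
        int top = (screenH - size) / 2;
        return new int[] {left, top, left + size, top + size};
    }

    /** Scale a screen-space rect into preview-frame space (integer division truncates). */
    static int[] toPreview(int[] r, int camW, int camH, int screenW, int screenH) {
        return new int[] {
            r[0] * camW / screenW, r[1] * camH / screenH,
            r[2] * camW / screenW, r[3] * camH / screenH
        };
    }

    /** Copy the rect out of the Y plane, i.e. the first dataW * dataH bytes of an NV21 frame. */
    static byte[] cropLuminance(byte[] yuv, int dataW, int[] r) {
        int w = r[2] - r[0];
        int h = r[3] - r[1];
        byte[] out = new byte[w * h];
        for (int y = 0; y < h; y++) {
            System.arraycopy(yuv, (r[1] + y) * dataW + r[0], out, y * w, w);
        }
        return out;
    }

    public static void main(String[] args) {
        int[] screenRect = squareFrame(1080, 1920);
        int[] previewRect = toPreview(screenRect, 720, 1280, 1080, 1920);
        System.out.println(Arrays.toString(screenRect) + " -> " + Arrays.toString(previewRect));
    }
}
```

For a 1080x1920 screen and a 720x1280 preview, `main` prints `[202, 622, 877, 1297] -> [134, 414, 584, 864]`.

One thing worth checking on real devices: `Camera` preview sizes are normally reported in landscape orientation (width > height). In a portrait-locked scanner, the straight x-to-x, y-to-y scaling may therefore pick the wrong region, unless `CameraConfigurationManager` (not shown here) already swaps the camera resolution.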

FrontLightMode.java

public enum FrontLightMode {

/** Always on. */

ON,

/** On only when ambient light is low. */

AUTO,

/** Always off. */

OFF

/* private static FrontLightMode parse(String modeString) {

return modeString == null ? OFF : valueOf(modeString);

}

public static FrontLightMode readPref(SharedPreferences sharedPrefs) {

return parse(sharedPrefs.getString(PreferencesActivity.KEY_FRONT_LIGHT_MODE, OFF.toString()));

}*/

}

PreviewCallback.java

final class PreviewCallback implements Camera.PreviewCallback {

private static final String TAG = PreviewCallback.class.getSimpleName();

private final CameraConfigurationManager configManager;

private Handler previewHandler;

private int previewMessage;

PreviewCallback(CameraConfigurationManager configManager) {

this.configManager = configManager;

}

void setHandler(Handler previewHandler, int previewMessage) {

this.previewHandler = previewHandler;

this.previewMessage = previewMessage;

}

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

Point cameraResolution = configManager.getCameraResolution();

Handler thePreviewHandler = previewHandler;

if (cameraResolution != null && thePreviewHandler != null) {

// Send the frame to DecodeHandler

Message message = thePreviewHandler.obtainMessage(previewMessage, cameraResolution.x,

cameraResolution.y, data);

message.sendToTarget();

previewHandler = null;

} else {

Log.d(TAG, "Got preview callback, but no handler or resolution available");

}

}

}
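
`PreviewCallback` hands each frame to whichever handler was registered and then clears it. As a result, a frame only reaches the decoder when `requestPreviewFrame()` asked for one, so a slow decode never has a backlog of frames queued behind it.

Below is a minimal plain-Java sketch of that one-shot pattern. `java.util.function.Consumer` stands in for `android.os.Handler`, and the class name is hypothetical.

```java
import java.util.function.Consumer;

/** One-shot frame delivery, modelled on PreviewCallback; not an Android API. */
class OneShotFrameDispatcher {
    private Consumer<byte[]> target;

    /** Like PreviewCallback.setHandler(): register who gets the next frame (null cancels). */
    synchronized void setTarget(Consumer<byte[]> target) {
        this.target = target;
    }

    /** Like onPreviewFrame(): deliver to the current target once, then forget it. */
    boolean onFrame(byte[] data) {
        Consumer<byte[]> t;
        synchronized (this) {
            t = target;
            target = null; // clear so each request yields at most one frame
        }
        if (t == null) {
            return false; // nobody asked for a frame, so drop it
        }
        t.accept(data);
        return true;
    }

    public static void main(String[] args) {
        OneShotFrameDispatcher dispatcher = new OneShotFrameDispatcher();
        int[] received = {0};
        dispatcher.setTarget(frame -> received[0]++);
        dispatcher.onFrame(new byte[1]);
        dispatcher.onFrame(new byte[1]); // dropped: no new request was made
        System.out.println("frames delivered: " + received[0]);
    }
}
```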

QRConstants.java

public class QRConstants {

public static boolean vibrateEnable = true;

public static boolean beepEnable = true;

public static FrontLightMode frontLightMode = FrontLightMode.OFF;

public static boolean disableExposure = true;

public static boolean autoFocus = true;

public static final String KEY_AUTO_FOCUS = "preferences_auto_focus";

}

Constant.java

public class Constant {

public static final int DECODE = 1;

public static final int DECODE_FAILED = 2;

public static final int DECODE_SUCCEEDED = 3;

public static final int LAUNCH_PRODUCT_QUERY = 4;

public static final int QUIT = 5;

public static final int RESTART_PREVIEW = 6;

public static final int RETURN_SCAN_RESULT = 7;

public static final int FLASH_OPEN = 8;

public static final int FLASH_CLOSE = 9;

public static final int REQUEST_IMAGE = 10;

public static final String CODED_CONTENT = "codedContent";

public static final String CODED_BITMAP = "codedBitmap";

/* Intent extra key for the ZxingConfig passed in */

public static final String INTENT_ZXING_CONFIG = "zxingConfig";

}

AmbientLightManager.java

public final class AmbientLightManager implements SensorEventListener {

private static final float TOO_DARK_LUX = 45.0f;

private static final float BRIGHT_ENOUGH_LUX = 450.0f;

private final Context context;

private CameraManager cameraManager;

private Sensor lightSensor;

public AmbientLightManager(Context context) {

this.context = context;

}

public void start(CameraManager cameraManager) {

this.cameraManager = cameraManager;

SharedPreferences sharedPrefs = PreferenceManager.getDefaultSharedPreferences(context);

if (QRConstants.frontLightMode == FrontLightMode.AUTO) {

SensorManager sensorManager = (SensorManager) context.getSystemService(Context.SENSOR_SERVICE);

lightSensor = sensorManager.getDefaultSensor(Sensor.TYPE_LIGHT);

if (lightSensor != null) {

sensorManager.registerListener(this, lightSensor, SensorManager.SENSOR_DELAY_NORMAL);

}

}

}

public void stop() {

if (lightSensor != null) {

SensorManager sensorManager = (SensorManager) context.getSystemService(Context.SENSOR_SERVICE);

sensorManager.unregisterListener(this);

cameraManager = null;

lightSensor = null;

}

}

@Override

public void onSensorChanged(SensorEvent sensorEvent) {

float ambientLightLux = sensorEvent.values[0];

if (cameraManager != null) {

if (ambientLightLux <= TOO_DARK_LUX) {

cameraManager.setTorch(true);

} else if (ambientLightLux >= BRIGHT_ENOUGH_LUX) {

cameraManager.setTorch(false);

}

}

}

@Override

public void onAccuracyChanged(Sensor sensor, int accuracy) {

// do nothing

}

}
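
`onSensorChanged()` uses two thresholds rather than one:

- The torch turns on at or below `TOO_DARK_LUX` (45 lux).
- It turns off only at or above `BRIGHT_ENOUGH_LUX` (450 lux).
- Readings in between leave it as it is.

This keeps the light from flickering when the ambient level hovers around a single cut-off. Note that the listener is only registered when `QRConstants.frontLightMode` is `AUTO`, and the listing sets it to `OFF`.

Below is a plain-Java sketch of that hysteresis, with the Android sensor replaced by a method call. The class and method names are illustrative.

```java
/** Two-threshold (hysteresis) torch control, reusing AmbientLightManager's constants. */
class TorchHysteresis {
    static final float TOO_DARK_LUX = 45.0f;
    static final float BRIGHT_ENOUGH_LUX = 450.0f;

    private boolean torchOn;

    /** Feed one light-sensor reading; returns the resulting torch state. */
    boolean onLux(float lux) {
        if (lux <= TOO_DARK_LUX) {
            torchOn = true;
        } else if (lux >= BRIGHT_ENOUGH_LUX) {
            torchOn = false;
        }
        // Between the two thresholds: keep the previous state.
        return torchOn;
    }

    public static void main(String[] args) {
        TorchHysteresis h = new TorchHysteresis();
        for (float lux : new float[] {300f, 30f, 200f, 500f, 200f}) {
            System.out.println(lux + " lux -> torch " + (h.onLux(lux) ? "on" : "off"));
        }
    }
}
```

The same 200 lux reading leaves the torch on or off depending on which threshold was crossed last.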

BeepManager.java

public final class BeepManager implements MediaPlayer.OnCompletionListener,

MediaPlayer.OnErrorListener, Closeable {

private static final String TAG = BeepManager.class.getSimpleName();

private static final float BEEP_VOLUME = 0.10f;

private static final long VIBRATE_DURATION = 200L;

private final Activity activity;

private MediaPlayer mediaPlayer;

private boolean playBeep;

private boolean vibrate;

public BeepManager(Activity activity) {

this.activity = activity;

this.mediaPlayer = null;

updatePrefs();

}

public boolean isPlayBeep() {

return playBeep;

}

public void setPlayBeep(boolean playBeep) {

this.playBeep = playBeep;

}

public boolean isVibrate() {

return vibrate;

}

public void setVibrate(boolean vibrate) {

this.vibrate = vibrate;

}

public synchronized void updatePrefs() {

if (playBeep && mediaPlayer == null) {

// The volume on STREAM_SYSTEM is not adjustable, and users found it

// too loud,

// so we now play on the music stream.

// Let the activity's volume keys control the music stream

activity.setVolumeControlStream(AudioManager.STREAM_MUSIC);

mediaPlayer = buildMediaPlayer(activity);

}

}

/**

* Play the beep sound and vibrate

*/

@SuppressLint("MissingPermission")

public synchronized void playBeepSoundAndVibrate() {

if (playBeep && mediaPlayer != null) {

mediaPlayer.start();

}

if (vibrate) {

Vibrator vibrator = (Vibrator) activity

.getSystemService(Context.VIBRATOR_SERVICE);

vibrator.vibrate(VIBRATE_DURATION);

}

}

/**

* Create the MediaPlayer

*

* @param activity

* @return

*/

private MediaPlayer buildMediaPlayer(Context activity) {

MediaPlayer mediaPlayer = new MediaPlayer();

mediaPlayer.setAudioStreamType(AudioManager.STREAM_MUSIC);

// Listen for playback completion

mediaPlayer.setOnCompletionListener(this);

mediaPlayer.setOnErrorListener(this);

// Load the audio resource to play

try {

AssetFileDescriptor file = activity.getResources()

.openRawResourceFd(R.raw.beep);

try {

mediaPlayer.setDataSource(file.getFileDescriptor(),

file.getStartOffset(), file.getLength());

} finally {

file.close();

}

// Set the volume

mediaPlayer.setVolume(BEEP_VOLUME, BEEP_VOLUME);

mediaPlayer.prepare();

return mediaPlayer;

} catch (IOException ioe) {

Log.w(TAG, ioe);

mediaPlayer.release();

return null;

}

}

@Override

public void onCompletion(MediaPlayer mp) {

// When the beep has finished playing, rewind to queue up another one.

mp.seekTo(0);

}

@Override

public synchronized boolean onError(MediaPlayer mp, int what, int extra) {

if (what == MediaPlayer.MEDIA_ERROR_SERVER_DIED) {

// we are finished, so put up an appropriate error toast if required

// and finish

activity.finish();

} else {

// possibly media player error, so release and recreate

mp.release();

mediaPlayer = null;

updatePrefs();

}

return true;

}

@Override

public synchronized void close() {

if (mediaPlayer != null) {

mediaPlayer.release();

mediaPlayer = null;

}

}

}

CaptureActivity.java

public class CaptureActivity extends Activity implements SurfaceHolder.Callback, View.OnClickListener {

static {

AppCompatDelegate.setCompatVectorFromResourcesEnabled(true); // Enable vector drawables from resources on older (pre-Lollipop) devices

}

private static final String TAG = CaptureActivity.class.getSimpleName();

private ImageView mBackmImg;

private TextView mTitle;

public int REQ_ID_GALLERY = 0;

public static boolean isLightOn = false;

private IMResUtil mImResUtil;

public static void startAction(Activity activity, Bundle bundle, int requestCode) {

Intent intent = new Intent(activity, CaptureActivity.class);

intent.putExtras(bundle);

activity.startActivityForResult(intent, requestCode);

}

private CameraManager cameraManager;

private CaptureActivityHandler handler;

private ViewfinderView viewfinderView;

private boolean hasSurface;

private Collection<BarcodeFormat> decodeFormats;

private String characterSet;

private InactivityTimer inactivityTimer;

private BeepManager beepManager;

private AmbientLightManager ambientLightManager;

private LinearLayout bottomLayout;

private TextView flashLightTv;

private ImageView flashLightIv;

private LinearLayout flashLightLayout;

private LinearLayout albumLayout;

private ZxingConfig config;

private ImageView img_phone;

public ViewfinderView getViewfinderView() {

return viewfinderView;

}

public Handler getHandler() {

return handler;

}

public CameraManager getCameraManager() {

return cameraManager;

}

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

mImResUtil = new IMResUtil(this);

setContentView(mImResUtil.getLayout("activity_device_qrcode_capture"));

// Set an immersive (translucent) status bar

setColor(this, Color.BLACK);

hasSurface = false;

inactivityTimer = new InactivityTimer(this);

ambientLightManager = new AmbientLightManager(this);

/* Read the configuration first */

try {

config = (ZxingConfig) getIntent().getExtras().get(Constant.INTENT_ZXING_CONFIG);

} catch (Exception e) {

Log.i("config", e.toString());

}

if (config == null) {

config = new ZxingConfig();

}

beepManager = new BeepManager(this);

beepManager.setPlayBeep(config.isPlayBeep());

beepManager.setVibrate(config.isShake());

initView(getIntent().getExtras());

onEvent(getIntent().getExtras());

}

private void initView(Bundle bundle) {

// Check whether landscape orientation was requested (the extras bundle may be null)

if (bundle != null && "landscape".equals(bundle.getString("portraitOrLandscape"))) {

setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_LANDSCAPE);

}

mBackmImg = (ImageView) findViewById(mImResUtil.getId("iv_qr_back"));

mTitle = (TextView) findViewById(mImResUtil.getId("tv_qr_title"));

viewfinderView = (ViewfinderView) findViewById(mImResUtil.getId("vv_qr_viewfinderView"));

flashLightTv = (TextView) findViewById(mImResUtil.getId("flashLightTv"));

bottomLayout = (LinearLayout) findViewById(mImResUtil.getId("bottomLayout"));

flashLightIv = (ImageView) findViewById(mImResUtil.getId("flashLightIv"));

img_phone = (ImageView) findViewById(mImResUtil.getId("img_phone"));

flashLightLayout = (LinearLayout) findViewById(mImResUtil.getId("flashLightLayout"));

flashLightLayout.setOnClickListener(this);

albumLayout = (LinearLayout) findViewById(mImResUtil.getId("albumLayout"));

albumLayout.setOnClickListener(this);

}

private void onEvent(Bundle bundle) {

switchVisibility(bottomLayout, config.isShowbottomLayout());

switchVisibility(flashLightLayout, config.isShowFlashLight());

switchVisibility(albumLayout, config.isShowAlbum());

flashLightIv.setImageResource(R.drawable.device_qrcode_scan_flash_off);

img_phone.setImageResource(R.drawable.ic_photo);

/* Show the flashlight button only if the device has a camera flash */

if (isSupportCameraLedFlash(getPackageManager())) {

flashLightLayout.setVisibility(View.VISIBLE);

} else {

flashLightLayout.setVisibility(View.GONE);

}

/******************** Added: END *****************************/

mBackmImg.setOnClickListener(this);

if (bundle == null) {

return;

}

String titileText = bundle.getString("titileText");

if (titileText != null && !titileText.isEmpty()) {

mTitle.setText(titileText);

}

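String headColor = bundle.getString("headColor");

// Color.parseColor() throws if headColor is missing, so only apply a colour the caller passed

if (headColor != null && !headColor.isEmpty()) {

mTitle.setTextColor(Color.parseColor(headColor));

}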

float headSize = bundle.getFloat("headSize");

if (headSize > 0) {

mTitle.setTextSize(headSize);

}

}

/**

* Immersive status bar

*

* @param activity

* @param color

*/

public static void setColor(Activity activity, int color) {

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.KITKAT) {

// Make the status bar translucent

activity.getWindow().addFlags(WindowManager.LayoutParams.FLAG_TRANSLUCENT_STATUS);

// Create a view the size of the status bar

View statusView = createStatusView(activity, color);

// Add statusView to the layout

ViewGroup decorView = (ViewGroup) activity.getWindow().getDecorView();

decorView.addView(statusView);

// Configure the root layout

ViewGroup rootView = (ViewGroup) ((ViewGroup) activity.findViewById(android.R.id.content)).getChildAt(0);

rootView.setFitsSystemWindows(true);

rootView.setClipToPadding(true);

}

}

/**

* Create a view with the same height as the status bar

*

* @param activity

* @param color

*/

private static View createStatusView(Activity activity, int color) {

// Get the status bar height

int resourceId = activity.getResources().getIdentifier("status_bar_height", "dimen", "android");

int statusBarHeight = activity.getResources().getDimensionPixelSize(resourceId);

View statusView = new View(activity);

LinearLayout.LayoutParams params = new LinearLayout.LayoutParams(ViewGroup.LayoutParams.MATCH_PARENT, statusBarHeight);

statusView.setLayoutParams(params);

statusView.setBackgroundColor(color);

return statusView;

}

@Override

public void onClick(View v) {

int id = v.getId();

if (id == mImResUtil.getId("iv_qr_back")) {

this.finish();

} else if (id == mImResUtil.getId("albumLayout")) { // Open the photo album

// Intent intent = new Intent();

// intent.setType("image/*");

// intent.setAction(Intent.ACTION_GET_CONTENT);

// intent.addCategory(Intent.CATEGORY_OPENABLE);

// startActivityForResult(intent, REQ_ID_GALLERY);

/* Open the photo album */

Intent intent = new Intent();

intent.setAction(Intent.ACTION_PICK);

intent.setType("image/*");

startActivityForResult(intent, REQ_ID_GALLERY);

} else if (id == mImResUtil.getId("flashLightLayout")) { // Toggle the flashlight

if (isLightOn) {

isLightOn = false;

cameraManager.offLight();

switchFlashImg(Constant.FLASH_CLOSE);

} else {

isLightOn = true;

cameraManager.openLight();

switchFlashImg(Constant.FLASH_OPEN);

}

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

if (requestCode == REQ_ID_GALLERY) {

if (resultCode == RESULT_OK) {

Uri uri = data.getData();

if (uri != null) {

String path = FileUtil.checkPicturePath(CaptureActivity.this, uri);//Device.getActivity()

BitmapFactory.Options bmOptions = new BitmapFactory.Options();

bmOptions.inJustDecodeBounds = false;

bmOptions.inPurgeable = true;

Bitmap bmp = BitmapFactory.decodeFile(path, bmOptions);

decodeQRCode(bmp, this);

}

}

}

//-----------------------------------------------------

if (requestCode == Constant.REQUEST_IMAGE && resultCode == RESULT_OK) {

String path = ImageUtil.getImageAbsolutePath(this, data.getData());

Log.e(TAG, "onActivityResult: QR code image path: " + path);

BitmapFactory.Options bmOptions = new BitmapFactory.Options();

bmOptions.inJustDecodeBounds = false;

bmOptions.inPurgeable = true;

Bitmap bmp = BitmapFactory.decodeFile(path, bmOptions);

decodeQRCode(bmp, this);

}

}

public final Map<DecodeHintType, Object> HINTS = new EnumMap<>(DecodeHintType.class);

/**

* Decode a QR code image picked from the album.

*

* @param bitmap the QR code image to decode

*/

@SuppressLint("StaticFieldLeak")

public void decodeQRCode(final Bitmap bitmap, final Activity activity) {

new AsyncTask<Void, Void, String>() {

@Override

protected String doInBackground(Void... params) {

if (bitmap == null) {

return null; // BitmapFactory.decodeFile() could not read the image

}

try {

int width = bitmap.getWidth();

int height = bitmap.getHeight();

int[] pixels = new int[width * height];

bitmap.getPixels(pixels, 0, width, 0, 0, width, height);

RGBLuminanceSource source = new RGBLuminanceSource(width, height, pixels);

// decode() throws NotFoundException (caught below) when no code is found

Result result = new MultiFormatReader().decode(new BinaryBitmap(new HybridBinarizer(source)), HINTS);

return result.getText();

} catch (Exception e) {

return null;

}

}

@Override

protected void onPostExecute(String result) {

Log.d("CaptureActivity", "result=" + result);

if (result == null) {

// Only report failure when decoding actually failed

Toast.makeText(CaptureActivity.this, "Decoding failed, please try another image", Toast.LENGTH_LONG).show();

return;

}

// Return the result on the main thread, then close the scanner

Intent resultIntent = new Intent();

Bundle bundle = new Bundle();

bundle.putString(Constant.CODED_CONTENT, result);

resultIntent.putExtras(bundle);

activity.setResult(RESULT_OK, resultIntent);

CaptureActivity.this.finish();

}

}.execute();

}

@Override

protected void onResume() {

super.onResume();

cameraManager = new CameraManager(getApplication());

viewfinderView.setCameraManager(cameraManager);

handler = null;

beepManager.updatePrefs();

ambientLightManager.start(cameraManager);

inactivityTimer.onResume();

decodeFormats = null;

characterSet = null;

SurfaceView surfaceView = (SurfaceView) findViewById(mImResUtil.getId("device_qrcode_preview_view"));

SurfaceHolder surfaceHolder = surfaceView.getHolder();

if (hasSurface) {

initCamera(surfaceHolder);

} else {

surfaceHolder.addCallback(this);

}

}

@Override

protected void onPause() {

if (handler != null) {

handler.quitSynchronously();

handler = null;

}

inactivityTimer.onPause();

ambientLightManager.stop();

beepManager.close();

cameraManager.closeDriver();

if (!hasSurface) {

SurfaceView surfaceView = (SurfaceView) findViewById(mImResUtil.getId("device_qrcode_preview_view"));

SurfaceHolder surfaceHolder = surfaceView.getHolder();

surfaceHolder.removeCallback(this);

}

super.onPause();

}

@Override

protected void onDestroy() {

inactivityTimer.shutdown();

super.onDestroy();

}

private void initCamera(SurfaceHolder surfaceHolder) {

if (surfaceHolder == null) {

throw new IllegalStateException("No SurfaceHolder provided");

}

if (cameraManager.isOpen()) {

Log.w(TAG, "initCamera() while already open -- late SurfaceView callback?");

return;

}

try {

cameraManager.openDriver(surfaceHolder);

// Creating the handler starts the preview, which can also throw a RuntimeException.

if (handler == null) {

handler = new CaptureActivityHandler(this, decodeFormats, characterSet, cameraManager);

}

} catch (IOException ioe) {

Log.w(TAG, ioe);

} catch (RuntimeException e) {

// Barcode Scanner has seen crashes in the wild of this variety:

// java.?lang.?RuntimeException: Fail to connect to camera service

Log.e(TAG, "Unexpected error initializing camera", e);

}

}

public void drawViewfinder() {

viewfinderView.drawViewfinder();

}

public void handleDecode(Result rawResult, Bitmap barcode, float scaleFactor) {

Log.d("wxl", "rawResult=" + rawResult);

boolean fromLiveScan = barcode != null;

if (fromLiveScan) {

String resultString = rawResult.getText();

Log.e("wxl", "rawResult=" + rawResult.getText());

beepManager.playBeepSoundAndVibrate();

Intent resultIntent = new Intent();

Bundle bundle = new Bundle();

bundle.putString(Constant.CODED_CONTENT, resultString);

resultIntent.putExtras(bundle);

this.setResult(RESULT_OK, resultIntent);

} else {

// this.setResult(RESULT_OK, resultIntent);

Toast.makeText(CaptureActivity.this, "Scan failed", Toast.LENGTH_SHORT).show();

}

CaptureActivity.this.finish();

}

@Override

public void surfaceCreated(SurfaceHolder holder) {

if (holder == null) {

Log.e(TAG, "*** WARNING *** surfaceCreated() gave us a null surface!");

}

if (!hasSurface) {

hasSurface = true;

initCamera(holder);

}

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

hasSurface = false;

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {

}

/******************** Added code: START **************************/

private void switchVisibility(View view, boolean b) {

if (b) {

view.setVisibility(View.VISIBLE);

} else {

view.setVisibility(View.GONE);

}

}

/**

* @param pm the PackageManager to query

*

* @return whether the device has a camera flash

*/

public static boolean isSupportCameraLedFlash(PackageManager pm) {

if (pm != null) {

FeatureInfo[] features = pm.getSystemAvailableFeatures();

if (features != null) {

for (FeatureInfo f : features) {

if (f != null && PackageManager.FEATURE_CAMERA_FLASH.equals(f.name)) {

return true;

}

}

}

}

return false;

}

/**

* @param flashState {@link Constant#FLASH_OPEN} shows the "flash on" icon and label; any other value shows "flash off"

*/

public void switchFlashImg(int flashState) {

if (flashState == Constant.FLASH_OPEN) {

flashLightIv.setImageResource(R.drawable.device_qrcode_scan_flash_on);//ic_open

flashLightTv.setText("Turn off flash");

} else {

flashLightIv.setImageResource(R.drawable.device_qrcode_scan_flash_off);//ic_close

flashLightTv.setText("Turn on flash");

}

}

/********************新增代码 END*****************************/

}
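
Two things happen on the album path in `onActivityResult()` and `decodeQRCode()`:

1. The photo is decoded with `BitmapFactory` at full size.
2. `RGBLuminanceSource` turns its ARGB pixels into grayscale.

A full-resolution phone photo can take tens of megabytes as ARGB, so it may be worth downsampling first. Keep the target size large enough that each QR module still spans several pixels.

The sketch below is plain Java:

- `calculateInSampleSize()` follows the power-of-two approach from the Android developer guide on loading large bitmaps. You would feed its result to `BitmapFactory.Options.inSampleSize` after a first decode pass with `inJustDecodeBounds = true`.
- `luminance()` reproduces the green-weighted average `(r + 2g + b) / 4` that ZXing 3.x's `RGBLuminanceSource` appears to use; check the source of the core version you ship.

The class name and the 1000x1000 target are assumptions.

```java
/** Plain-Java helpers for the album path; not part of Android or ZXing. */
class GalleryDecodeSketch {

    /** Largest power-of-two sample size that keeps both sides >= the requested size. */
    static int calculateInSampleSize(int width, int height, int reqWidth, int reqHeight) {
        int inSampleSize = 1;
        if (height > reqHeight || width > reqWidth) {
            int halfHeight = height / 2;
            int halfWidth = width / 2;
            while (halfHeight / inSampleSize >= reqHeight && halfWidth / inSampleSize >= reqWidth) {
                inSampleSize *= 2;
            }
        }
        return inSampleSize;
    }

    /** Green-weighted grey value (r + 2g + b) / 4 of one ARGB pixel, in 0..255. */
    static int luminance(int argb) {
        int r = (argb >> 16) & 0xff;
        int g2 = (argb >> 7) & 0x1fe; // 2 * green
        int b = argb & 0xff;
        return (r + g2 + b) / 4;
    }

    public static void main(String[] args) {
        // A 4000x3000 photo with a (hypothetical) 1000x1000 target
        System.out.println("inSampleSize = " + calculateInSampleSize(4000, 3000, 1000, 1000));
        System.out.println("white = " + luminance(0xFFFFFFFF) + ", green = " + luminance(0xFF00FF00));
    }
}
```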

CaptureActivityHandler.java

public final class CaptureActivityHandler extends Handler {

private static final String TAG = CaptureActivityHandler.class.getSimpleName();

private final CaptureActivity activity;

private final DecodeThread decodeThread;

private State state;

private final CameraManager cameraManager;

private final IMResUtil mImResUtil;

// private RelativeLayout mLightLinearLay; // LinearLayout for the flashlight

private enum State {

PREVIEW, SUCCESS, DONE

}

public CaptureActivityHandler(CaptureActivity activity, Collection<BarcodeFormat> decodeFormats, String characterSet, CameraManager cameraManager) {

this.activity = activity;

mImResUtil = new IMResUtil(activity);

decodeThread = new DecodeThread(activity, decodeFormats, characterSet, new ViewfinderResultPointCallback(activity.getViewfinderView()));

decodeThread.start();

state = State.SUCCESS;

// Start ourselves capturing previews and decoding.

this.cameraManager = cameraManager;

cameraManager.startPreview();

Log.d(TAG, "CaptureActivityHandler " + CaptureActivityHandler.class.toString());

restartPreviewAndDecode();

}

@Override

public void handleMessage(Message message) {

int what = message.what;

if (what == mImResUtil.getId("device_qrcode_restart_preview")) {

restartPreviewAndDecode();

} else if (what == mImResUtil.getId("device_qrcode_decode_succeeded")) {

state = State.SUCCESS;

Bundle bundle = message.getData();

Bitmap barcode = null;

float scaleFactor = 1.0f;

if (bundle != null) {

byte[] compressedBitmap = bundle.getByteArray(DecodeThread.BARCODE_BITMAP);

if (compressedBitmap != null) {

barcode = BitmapFactory.decodeByteArray(compressedBitmap, 0, compressedBitmap.length, null);

// Mutable copy:

barcode = barcode.copy(Bitmap.Config.ARGB_8888, true);

}

scaleFactor = bundle.getFloat(DecodeThread.BARCODE_SCALED_FACTOR);

}

activity.handleDecode((Result) message.obj, barcode, scaleFactor);

} else if (what == mImResUtil.getId("device_qrcode_decode_failed")) {

state = State.PREVIEW;

cameraManager.requestPreviewFrame(decodeThread.getHandler(), mImResUtil.getId("device_qrcode_decode"));

} else if (what == mImResUtil.getId("device_qrcode_return_scan_result")) {

activity.setResult(Activity.RESULT_OK, (Intent) message.obj);

activity.finish();

} else if (what == mImResUtil.getId("device_qrcode_launch_product_query")) {

String url = (String) message.obj;

Intent intent = new Intent(Intent.ACTION_VIEW);

intent.addFlags(Intent.FLAG_ACTIVITY_CLEAR_WHEN_TASK_RESET);

intent.setData(Uri.parse(url));

ResolveInfo resolveInfo = activity.getPackageManager().resolveActivity(intent, PackageManager.MATCH_DEFAULT_ONLY);

String browserPackageName = null;

if (resolveInfo != null && resolveInfo.activityInfo != null) {

browserPackageName = resolveInfo.activityInfo.packageName;

Log.d(TAG, "Using browser in package " + browserPackageName);

}

// Needed for default Android browser / Chrome only apparently

if ("com.android.browser".equals(browserPackageName) || "com.android.chrome".equals(browserPackageName)) {

intent.setPackage(browserPackageName);

intent.addFlags(Intent.FLAG_ACTIVITY_NEW_TASK);

intent.putExtra(Browser.EXTRA_APPLICATION_ID, browserPackageName);

}

try {

activity.startActivity(intent);

} catch (ActivityNotFoundException ignored) {

Log.w(TAG, "Can't find anything to handle VIEW of URI " + url);

}

}

}

public void quitSynchronously() {

state = State.DONE;

cameraManager.stopPreview();

Message quit = Message.obtain(decodeThread.getHandler(), mImResUtil.getId("device_qrcode_quit"));

quit.sendToTarget();

try {

// Wait at most half a second; should be enough time, and onPause() will timeout quickly

decodeThread.join(500L);

} catch (InterruptedException e) {

// continue

}

// Be absolutely sure we don't send any queued up messages

removeMessages(mImResUtil.getId("device_qrcode_decode_succeeded"));

removeMessages(mImResUtil.getId("device_qrcode_decode_failed"));

}

private void restartPreviewAndDecode() {

if (state == State.SUCCESS) {

state = State.PREVIEW;

// decodeThread.getHandler() returns the DecodeHandler

cameraManager.requestPreviewFrame(decodeThread.getHandler(), mImResUtil.getId("device_qrcode_decode"));

activity.drawViewfinder();

}

}

}
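
`CaptureActivityHandler` drives scanning as a small loop:

1. Request one preview frame.
2. Let `DecodeThread` try it.
3. On `decode_failed`, request the next frame.
4. On `decode_succeeded`, stop and call `handleDecode()`.

Below is a plain-Java simulation of that loop. A list of frames and a `Predicate` stand in for the camera and the ZXing decoder; all names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

/** Simulates CaptureActivityHandler's request-frame / decode / retry loop. */
class ScanLoopSketch {
    enum State { PREVIEW, SUCCESS }

    /** Returns the 1-based index of the frame that decoded, or -1 if none did. */
    static int run(List<String> frames, Predicate<String> decoder) {
        State state = State.PREVIEW;
        int attempt = 0;
        while (state == State.PREVIEW && attempt < frames.size()) {
            String frame = frames.get(attempt++); // requestPreviewFrame()
            if (decoder.test(frame)) {
                state = State.SUCCESS;            // decode_succeeded -> handleDecode()
            }
            // Otherwise decode_failed: loop round and request another frame.
        }
        // Ran out of frames; the real handler keeps requesting until quitSynchronously().
        return state == State.SUCCESS ? attempt : -1;
    }

    public static void main(String[] args) {
        List<String> frames = Arrays.asList("blurry", "blurry", "sharp");
        System.out.println("decoded on frame " + run(frames, f -> f.equals("sharp")));
    }
}
```

Because every failed decode immediately triggers another frame request, the frame rate and the per-frame decode time together set how quickly a hard-to-read code is picked up.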

DecodeFormatManager.java

final class DecodeFormatManager {

private static final Pattern COMMA_PATTERN = Pattern.compile(",");

// static final Set<BarcodeFormat> PRODUCT_FORMATS;

// static final Set<BarcodeFormat> INDUSTRIAL_FORMATS;

// private static final Set<BarcodeFormat> ONE_D_FORMATS;

static final Set<BarcodeFormat> QR_CODE_FORMATS = EnumSet.of(BarcodeFormat.QR_CODE);

// static final Set<BarcodeFormat> DATA_MATRIX_FORMATS = EnumSet.of(BarcodeFormat.DATA_MATRIX);

// static final Set<BarcodeFormat> AZTEC_FORMATS = EnumSet.of(BarcodeFormat.AZTEC);

// static final Set<BarcodeFormat> PDF417_FORMATS = EnumSet.of(BarcodeFormat.PDF_417);

/*static {

PRODUCT_FORMATS = EnumSet.of(BarcodeFormat.UPC_A,

BarcodeFormat.UPC_E,

BarcodeFormat.EAN_13,

BarcodeFormat.EAN_8,

BarcodeFormat.RSS_14,

BarcodeFormat.RSS_EXPANDED);

INDUSTRIAL_FORMATS = EnumSet.of(BarcodeFormat.CODE_39,

BarcodeFormat.CODE_93,

BarcodeFormat.CODE_128,

BarcodeFormat.ITF,

BarcodeFormat.CODABAR);

ONE_D_FORMATS = EnumSet.copyOf(PRODUCT_FORMATS);

ONE_D_FORMATS.addAll(INDUSTRIAL_FORMATS);

}*/

private static final Map<String, Set<BarcodeFormat>> FORMATS_FOR_MODE;

static {

FORMATS_FOR_MODE = new HashMap<>();

// FORMATS_FOR_MODE.put(Intents.Scan.ONE_D_MODE, ONE_D_FORMATS);

// FORMATS_FOR_MODE.put(Intents.Scan.PRODUCT_MODE, PRODUCT_FORMATS);

FORMATS_FOR_MODE.put(Intents.Scan.QR_CODE_MODE, QR_CODE_FORMATS);

// FORMATS_FOR_MODE.put(Intents.Scan.DATA_MATRIX_MODE, DATA_MATRIX_FORMATS);

// FORMATS_FOR_MODE.put(Intents.Scan.AZTEC_MODE, AZTEC_FORMATS);

// FORMATS_FOR_MODE.put(Intents.Scan.PDF417_MODE, PDF417_FORMATS);

}

private DecodeFormatManager() {}

static Set<BarcodeFormat> parseDecodeFormats(Intent intent) {

Iterable<String> scanFormats = null;

CharSequence scanFormatsString = intent.getStringExtra(Intents.Scan.FORMATS);

if (scanFormatsString != null) {

scanFormats = Arrays.asList(COMMA_PATTERN.split(scanFormatsString));

}

return parseDecodeFormats(scanFormats, intent.getStringExtra(Intents.Scan.MODE));

}

static Set<BarcodeFormat> parseDecodeFormats(Uri inputUri) {

List<String> formats = inputUri.getQueryParameters(Intents.Scan.FORMATS);

if (formats != null && formats.size() == 1 && formats.get(0) != null) {

formats = Arrays.asList(COMMA_PATTERN.split(formats.get(0)));

}

return parseDecodeFormats(formats, inputUri.getQueryParameter(Intents.Scan.MODE));

}

private static Set<BarcodeFormat> parseDecodeFormats(Iterable<String> scanFormats, String decodeMode) {

if (scanFormats != null) {

Set<BarcodeFormat> formats = EnumSet.noneOf(BarcodeFormat.class);

try {

for (String format : scanFormats) {

formats.add(BarcodeFormat.valueOf(format));

}

return formats;

} catch (IllegalArgumentException iae) {

// ignore it then

}

}

if (decodeMode != null) {

return FORMATS_FOR_MODE.get(decodeMode);

}

return null;

}

}
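`parseDecodeFormats` tries the comma-separated `Intents.Scan.FORMATS` list first. It falls back to the `MODE` table only when that list is absent or contains a name that `valueOf` rejects. Only `QR_CODE_MODE` is registered in this trimmed version, so any other mode yields `null`. The stand-alone sketch below reproduces that logic. It assumes a hypothetical `Format` enum in place of ZXing's `BarcodeFormat` so that it compiles without the ZXing jar.

```java
import java.util.EnumSet;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.regex.Pattern;

// Stand-alone sketch of DecodeFormatManager.parseDecodeFormats(Iterable, String).
// ASSUMPTION: Format is a hypothetical stand-in for ZXing's BarcodeFormat, and the
// "QR_CODE_MODE" key stands in for Intents.Scan.QR_CODE_MODE.
final class FormatParsingSketch {
    enum Format { QR_CODE, DATA_MATRIX, CODE_128 }

    private static final Pattern COMMA_PATTERN = Pattern.compile(",");
    private static final Map<String, Set<Format>> FORMATS_FOR_MODE = new HashMap<>();

    static {
        // Only the QR mode is registered, mirroring the trimmed class above.
        FORMATS_FOR_MODE.put("QR_CODE_MODE", EnumSet.of(Format.QR_CODE));
    }

    static Set<Format> parse(String formatsExtra, String mode) {
        if (formatsExtra != null) {
            Set<Format> formats = EnumSet.noneOf(Format.class);
            try {
                for (String name : COMMA_PATTERN.split(formatsExtra)) {
                    formats.add(Format.valueOf(name)); // throws on an unknown name
                }
                return formats;
            } catch (IllegalArgumentException iae) {
                // One bad name discards the whole list; fall back to the mode.
            }
        }
        return mode != null ? FORMATS_FOR_MODE.get(mode) : null;
    }

    public static void main(String[] args) {
        System.out.println(parse("QR_CODE,CODE_128", null));            // [QR_CODE, CODE_128]
        System.out.println(parse("QR_CODE, CODE_128", "QR_CODE_MODE")); // [QR_CODE]
        System.out.println(parse(null, "ONE_D_MODE"));                  // null
    }
}
```

Look at the second call. The space after the comma makes `valueOf(" CODE_128")` throw, so the whole explicit list is discarded and the mode's QR-only set is used instead.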

DecodeHandler.java

final class DecodeHandler extends Handler {

private static final String TAG = DecodeHandler.class.getSimpleName();

public static boolean isWeakLight = false;

private final CaptureActivity activity;

private final MultiFormatReader multiFormatReader;

private boolean running = true;

private final IMResUtil mImResUtil;

DecodeHandler(CaptureActivity activity, Map<DecodeHintType, Object> hints) {

multiFormatReader = new MultiFormatReader();

multiFormatReader.setHints(hints);

this.activity = activity;

mImResUtil = new IMResUtil(activity);

}

@Override

public void handleMessage(Message message) {

if (!running) {

return;

}

int what = message.what;

if (what == mImResUtil.getId("device_qrcode_decode")) {

decode((byte[]) message.obj, message.arg1, message.arg2);

} else if (what == mImResUtil.getId("device_qrcode_quit")) {

isWeakLight = false;

CaptureActivity.isLightOn = false;

running = false;

Looper.myLooper().quit();

}

}

/**

* Decode the data within the viewfinder rectangle, and time how long it took. For efficiency,

* reuse the same reader objects from one decode to the next.

*

* @param data The YUV preview frame.

* @param width The width of the preview frame.

* @param height The height of the preview frame.

*/

private void decode(byte[] data, int width, int height) {

Log.i(TAG, "decode");

// Weak-light detection

analysisColor(data, width, height);

long start = System.currentTimeMillis();

Result rawResult = null;

PlanarYUVLuminanceSource source = activity.getCameraManager().buildLuminanceSource(data, width, height);

if (source != null) {

BinaryBitmap bitmap = new BinaryBitmap(new HybridBinarizer(source));

try {

rawResult = multiFormatReader.decodeWithState(bitmap);

} catch (ReaderException re) {

// continue

} finally {

multiFormatReader.reset();

}

}

Handler handler = activity.getHandler(); // the CaptureActivityHandler

Log.d(TAG, "Found handler " + handler);

if (rawResult != null) {

// Don't log the barcode contents for security.

long end = System.currentTimeMillis();

Log.d(TAG, "Found barcode in " + (end - start) + " ms");

if (handler != null) {

// Send the result to CaptureActivityHandler

Message message = Message.obtain(handler, mImResUtil.getId("device_qrcode_decode_succeeded"), rawResult);

Bundle bundle = new Bundle();

bundleThumbnail(source, bundle);

message.setData(bundle);

message.sendToTarget();

}

} else {

if (handler != null) {

Message message = Message.obtain(handler, mImResUtil.getId("device_qrcode_decode_failed"));

message.sendToTarget();

}

}

}

private static void bundleThumbnail(PlanarYUVLuminanceSource source, Bundle bundle) {

int[] pixels = source.renderThumbnail();

int width = source.getThumbnailWidth();

int height = source.getThumbnailHeight();

Bitmap bitmap = Bitmap.createBitmap(pixels, 0, width, width, height, Bitmap.Config.ARGB_8888);

ByteArrayOutputStream out = new ByteArrayOutputStream();

bitmap.compress(Bitmap.CompressFormat.JPEG, 50, out);

bundle.putByteArray(DecodeThread.BARCODE_BITMAP, out.toByteArray());

bundle.putFloat(DecodeThread.BARCODE_SCALED_FACTOR, (float) width / source.getWidth());

}

private int[] decodeYUV420SP(byte[] yuv420sp, int width, int height) {

final int frameSize = width * height;

int[] rgb = new int[frameSize];

for (int j = 0, yp = 0; j < height; j++) {

int uvp = frameSize + (j >> 1) * width, u = 0, v = 0;

for (int i = 0; i < width; i++, yp++) {

int y = (0xff & ((int) yuv420sp[yp])) - 16;

if (y < 0) y = 0;

if ((i & 1) == 0) {