Real-time log analysis of per-IP access counts with Flume + Kafka + Spark Streaming + Redis + MySQL

I am new to this stack, so please point out any mistakes. Thanks!

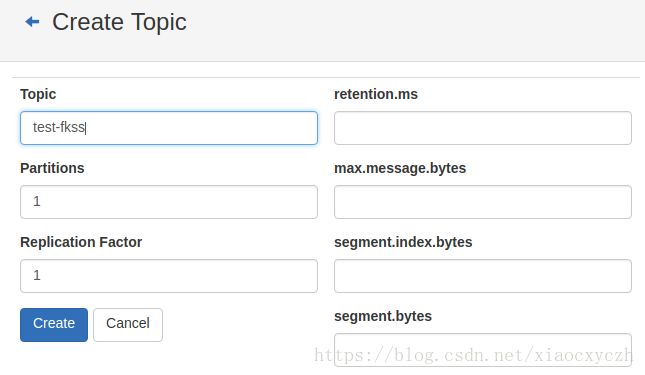

1. Start ZooKeeper and Kafka, and create a topic named test-fkss. I added it through kafka-manager so that it is easier to observe.

2. Configure and start Flume. It watches the file /home/czh/docker-public-file/testflume.log and sends each new line to Kafka.

a1.sources = r1
a1.sinks = k1
a1.channels = c1

a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /home/czh/docker-public-file/testflume.log
a1.sources.r1.channels = c1

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.k1.topic = test-fkss
a1.sinks.k1.brokerList = 172.17.0.2:9092
a1.sinks.k1.requiredAcks = 1
a1.sinks.k1.batchSize = 2
a1.sinks.k1.channel = c1

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

Run the start command from the Flume directory:

bin/flume-ng agent --conf conf --conf-file conf/flume-conf.properties.template --name a1 -Dflume.root.logger=INFO,console

If the bottom line in the following screenshot appears, Flume has started successfully.

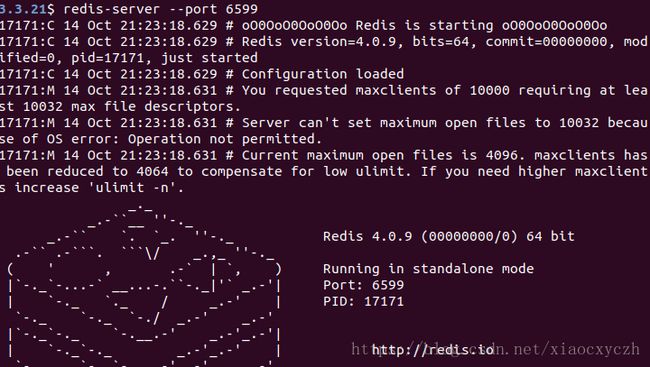
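A Flume agent only moves data if every source and sink is bound to a channel that is actually declared. The following is a minimal sketch in plain Python (no Flume dependency) that checks this wiring for a properties file such as the one in step 2. The helper names `parse_properties` and `check_flume_wiring` are hypothetical, not part of Flume, and the check only looks at the `channels` and `channel` keys.

```python
def parse_properties(text):
    """Parse 'key = value' lines into a dict, skipping blanks and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def check_flume_wiring(props, agent):
    """Return a list of wiring problems for the given agent (empty if none)."""
    channels = set(props.get(agent + ".channels", "").split())
    problems = []
    # A source may feed several channels (space-separated "channels" key).
    for source in props.get(agent + ".sources", "").split():
        bound = props.get("%s.sources.%s.channels" % (agent, source), "").split()
        if not bound:
            problems.append("source %s has no channels" % source)
        for ch in bound:
            if ch not in channels:
                problems.append("source %s uses undeclared channel %s" % (source, ch))
    # A sink reads from exactly one channel (singular "channel" key).
    for sink in props.get(agent + ".sinks", "").split():
        ch = props.get("%s.sinks.%s.channel" % (agent, sink))
        if ch is None:
            problems.append("sink %s has no channel" % sink)
        elif ch not in channels:
            problems.append("sink %s uses undeclared channel %s" % (sink, ch))
    return problems


# The wiring-related lines of the configuration from step 2.
SAMPLE = """
a1.sources = r1
a1.sinks = k1
a1.channels = c1
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
"""

print(check_flume_wiring(parse_properties(SAMPLE), "a1"))
```

An empty list means the source r1 and the sink k1 are both attached to the declared channel c1. Note the asymmetry the sketch relies on: sources use the plural key `channels`, sinks the singular `channel`.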

3. Start Redis.

4. Start the Spark Streaming log-processing program. The code is as follows.

Analysis program, SparkStreamDemo.scala

import kafka.serializer.StringDecoder
import org.apache.spark.streaming.kafka.KafkaUtils
import org.apache.spark.streaming.{Seconds, StreamingContext}
import org.apache.spark.{SparkConf, SparkContext}

object SparkStreamDemo {

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf()
      .setAppName("flume_spark_streaming")
      .setMaster("local[4]")
    val sc = new SparkContext(conf)
    sc.setCheckpointDir("/home/czh/IdeaProjects/SparkDemo/out/artifacts")
    sc.setLogLevel("ERROR")
    // Process the stream in 3-second micro-batches.
    val ssc = new StreamingContext(sc, Seconds(3))

    val kafkaParams = Map[String, String](
      "bootstrap.servers" -> "172.17.0.2:9092",
      "group.id" -> "test-fkss",
      "auto.offset.reset" -> "smallest"
    )
    val topics = Set("test-fkss")
    val stream = createStream(ssc, kafkaParams, topics)
    // Each Kafka record is a (key, value) pair; the log line is the value.
    val lines = stream.map(_._2)

    lines.foreachRDD { rdd =>
      rdd.foreachPartition { partitionOfRecords =>
        // Borrow one Redis connection per partition and always return it to the pool.
        val jedis = RedisClient.pool.getResource
        try {
          jedis.select(0)
          val c = new JDBCCommend()
          partitionOfRecords.foreach { line =>
            val (time, ip) = parseLog(line)
            if (ip.nonEmpty) { // skip lines that could not be parsed
              println(time + " " + ip)
              // INCR creates a missing key with value 1, so no GET/SET check is needed.
              val at = jedis.incr(ip).intValue()
              c.add(time, ip, at)
            }
          }
        } finally {
          jedis.close()
        }
      }
    }

    ssc.start()
    ssc.awaitTermination()
  }

  def createStream(ssc: StreamingContext, kafkaParams: Map[String, String], topics: Set[String]) = {
    KafkaUtils.createDirectStream[String, String, StringDecoder, StringDecoder](ssc, kafkaParams, topics)
  }

  // Expected line format: "<time> <ip>". A malformed line yields ("", "").
  def parseLog(log: String): (String, String) = {
    val fields = log.split(" ")
    if (fields.length >= 2) (fields(0), fields(1)) else ("", "")
  }

}

Redis connection pool, RedisClient.scala

import org.apache.commons.pool2.impl.GenericObjectPoolConfig
import redis.clients.jedis.JedisPool

object RedisClient extends Serializable {
  val redisHost = "127.0.0.1"
  val redisPort = 6599
  val redisTimeout = 30000

  // Created lazily, so the pool is built on first use in the JVM that needs it.
  lazy val pool = new JedisPool(new GenericObjectPoolConfig(), redisHost, redisPort, redisTimeout)

  // Destroy the pool when the JVM shuts down.
  lazy val hook = new Thread {
    override def run(): Unit = {
      println("Execute hook thread: " + this)
      pool.destroy()
    }
  }

  sys.addShutdownHook(hook.run())

}

JDBC connection, JDBCConnection.scala

import java.sql.{Connection, DriverManager}

object JDBCConnection {
  val IP = "172.17.0.2"
  val Port = "3306"
  val DBType = "mysql"
  val DBName = "st"
  val username = "root"
  val password = "root"
  val url = "jdbc:" + DBType + "://" + IP + ":" + Port + "/" + DBName

  // Load the MySQL JDBC driver so that it registers itself with DriverManager.
  Class.forName("com.mysql.jdbc.Driver")

  def getConnection(): Connection = {
    DriverManager.getConnection(url, username, password)
  }

  def close(conn: Connection): Unit = {
    try {
      // Check for null before calling isClosed().
      if (conn != null && !conn.isClosed()) {
        conn.close()
      }
    } catch {
      case ex: Exception => ex.printStackTrace()
    }
  }
}

JDBC operations, JDBCCommend.scala

class JDBCCommend {
  case class Ip(time: String, ip: String, access_times: Int)

  // Insert one access record; returns true if a row was written.
  def add(time: String, ip: String, access_times: Int): Boolean = {
    val conn = JDBCConnection.getConnection()
    try {
      val sql = "INSERT INTO ip_table (time, ip, access_times) VALUES (?, ?, ?)"
      val ps = conn.prepareStatement(sql)
      // Typed setters avoid passing a Scala Int where java.lang.Object is expected.
      ps.setString(1, time)
      ps.setString(2, ip)
      ps.setInt(3, access_times)
      ps.executeUpdate() > 0
    } finally {
      // Closing the connection also releases the statement.
      conn.close()
    }
  }

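}

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com</groupId>
  <artifactId>czh</artifactId>
  <version>1.0-SNAPSHOT</version>
  <inceptionYear>2008</inceptionYear>
  <properties>
    <scala.version>2.11.0</scala.version>
  </properties>

  <repositories>
    <repository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </repository>
  </repositories>

  <pluginRepositories>
    <pluginRepository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </pluginRepository>
  </pluginRepositories>

  <dependencies>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>org.specs</groupId>
      <artifactId>specs</artifactId>
      <version>1.2.5</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.11</artifactId>
      <version>2.3.2</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-sql_2.11</artifactId>
      <version>2.3.2</version>
    </dependency>
    <dependency>
      <groupId>mysql</groupId>
      <artifactId>mysql-connector-java</artifactId>
      <version>5.1.12</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.11</artifactId>
      <version>2.3.2</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming-kafka_2.11</artifactId>
      <version>1.6.3</version>
    </dependency>
    <dependency>
      <groupId>redis.clients</groupId>
      <artifactId>jedis</artifactId>
      <version>2.9.0</version>
    </dependency>
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>fastjson</artifactId>
      <version>1.2.47</version>
    </dependency>
  </dependencies>

  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
          </execution>
        </executions>
        <configuration>
          <scalaVersion>${scala.version}</scalaVersion>
          <args>
            <arg>-target:jvm-1.5</arg>
          </args>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-eclipse-plugin</artifactId>
        <configuration>
          <downloadSources>true</downloadSources>
          <buildcommands>
            <buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand>
          </buildcommands>
          <additionalProjectnatures>
            <projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature>
          </additionalProjectnatures>
          <classpathContainers>
            <classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>
            <classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer>
          </classpathContainers>
        </configuration>
      </plugin>
    </plugins>
  </build>

  <reporting>
    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <configuration>
          <scalaVersion>${scala.version}</scalaVersion>
        </configuration>
      </plugin>
    </plugins>
  </reporting>
</project>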

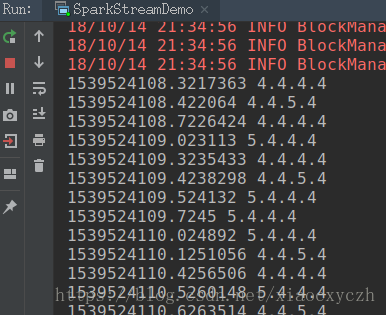
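To make the data flow of step 4 easier to follow, here is a small Python sketch of the same per-record logic: parse a line, increment the counter for its IP, and insert one row with the new count. It is only an illustration under stated assumptions, not the Spark job itself. A plain dict stands in for Redis, an in-memory SQLite database stands in for the MySQL database st, and the column types of ip_table are guesses because the schema is not shown in this post.

```python
import sqlite3


def parse_log(line):
    """Split a '<time> <ip>' line; return None for malformed input."""
    fields = line.split()
    if len(fields) < 2:
        return None
    return fields[0], fields[1]


def process(lines, counters, conn):
    """Count each IP (like Redis INCR) and insert one row per access."""
    for line in lines:
        parsed = parse_log(line)
        if parsed is None:
            continue  # skip lines that are not '<time> <ip>'
        time_str, ip = parsed
        # INCR semantics: a missing key is treated as 0 before incrementing.
        counters[ip] = counters.get(ip, 0) + 1
        conn.execute(
            "INSERT INTO ip_table (time, ip, access_times) VALUES (?, ?, ?)",
            (time_str, ip, counters[ip]),
        )
    conn.commit()


# Stand-in for MySQL; the column types are assumptions.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ip_table (time TEXT, ip TEXT, access_times INTEGER)")
counters = {}  # stand-in for Redis db 0

process(["1.0 4.4.4.4", "2.0 5.4.4.4", "bad", "3.0 4.4.4.4"], counters, conn)
rows = conn.execute(
    "SELECT time, ip, access_times FROM ip_table ORDER BY time"
).fetchall()
print(rows)
```

Each access produces its own row, so ip_table holds the running count at the time of every access, and the latest row for an IP carries its current total.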

5. Start the program that simulates IP accesses and generates the log.

write-flumelog.pyimport time

import random

for i in range(10):

f = open('/home/czh/docker-public-file/testflume.log', 'a')

time.sleep(random.randint(4, 9) * 0.1)

current_time = time.time()

ip = str(random.randint(4, 5)) + "." + str(random.randint(4, 4))\

+ "." + str(random.randint(4, 5)) + "." + str(random.randint(4, 4))

print(str(current_time) + " " + ip + "\n")

f.write(current_time.__str__() + " " + ip + "\n")

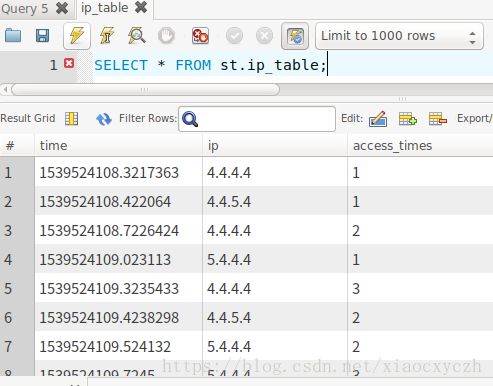

f.close()整体运行结果如下:

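The Python program writes IPs and access times to the log file.

The Spark Streaming program reads the data forwarded by Flume in real time, computes the access counts, and writes them to MySQL.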
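One way to sanity-check the result is to compare the counts implied by the log file with the highest access_times stored per IP. The sketch below assumes that Redis was empty before the run and that every log line was processed exactly once; the sample lines and rows are hypothetical, and in practice they would come from testflume.log and from a SELECT on ip_table.

```python
from collections import Counter


def expected_counts(log_lines):
    """Count accesses per IP from '<time> <ip>' lines, ignoring malformed ones."""
    counts = Counter()
    for line in log_lines:
        fields = line.split()
        if len(fields) >= 2:
            counts[fields[1]] += 1
    return dict(counts)


def final_counts(rows):
    """Reduce (time, ip, access_times) rows to the highest access_times per IP."""
    latest = {}
    for _time, ip, access_times in rows:
        latest[ip] = max(access_times, latest.get(ip, 0))
    return latest


# Hypothetical sample data for illustration only.
log_lines = ["1.0 4.4.4.4", "2.0 5.4.4.4", "3.0 4.4.4.4"]
rows = [("1.0", "4.4.4.4", 1), ("2.0", "5.4.4.4", 1), ("3.0", "4.4.4.4", 2)]

print(expected_counts(log_lines) == final_counts(rows))
```

If the two dictionaries differ, a likely cause is that counters from an earlier run are still in Redis, or that the job re-read old messages from the topic after a restart.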