Tracking down a problem with Spark SQL reading a Hive partitioned table

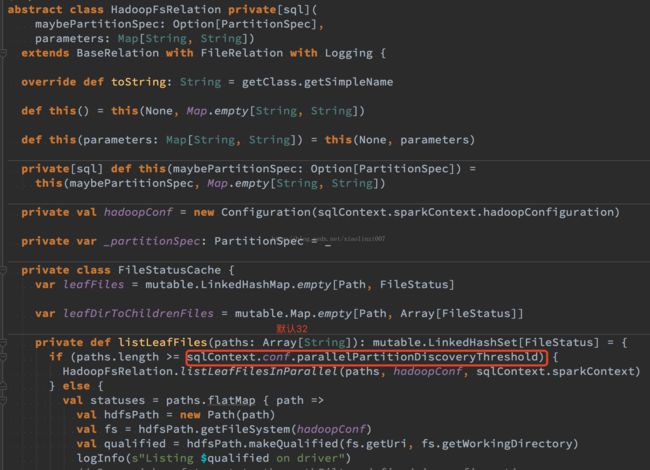

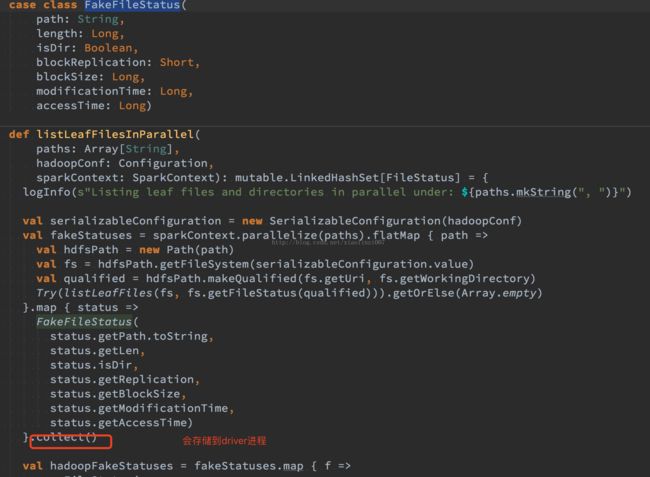

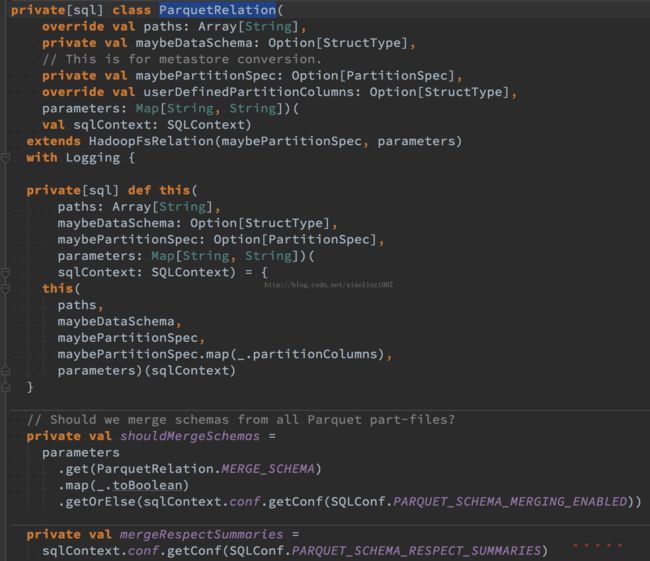

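The table read in this example is stored in Parquet format. On every read, Spark scans all directories and files under the table's root directory and builds a FakeFileStatus entry for each file. These entries are used to derive the table's schema. Every query against the table also checks whether its schema has changed. If it has, Spark regenerates the schema from the Parquet metadata files, or from the footers of the data files when no metadata file exists.

All of the generated FakeFileStatus entries are cached in the driver process. When there are too many files, the cache takes up too much driver memory and leads to GC/OOM exceptions. The cache is also only valid within the Spark job that built it: when the job ends, the driver process exits and the cache disappears with it. As I understand it, this design improves efficiency for streaming jobs, or for a single submitted job that queries the same table many times, but it lowers efficiency for a job that queries the table only once. And why does Spark walk the files instead of fetching the table's schema from the Hive metastore?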
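On the closing question, one setting that may be worth trying is `spark.sql.hive.convertMetastoreParquet`. It defaults to `true`, which makes Spark SQL read metastore Parquet tables through its built-in Parquet support. Setting it to `false` makes Spark SQL use the Hive SerDe for those tables instead. The sketch below is a minimal, untested example for a Spark 1.x `HiveContext` (the stack trace suggests a 1.x release, but that is an assumption). The object name, `example_db.example_table`, and the partition column `dt` are hypothetical placeholders, not names taken from the job above.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.hive.HiveContext

// Hypothetical driver program; names are placeholders for illustration only.
object ReadPartitionedTable {
  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(new SparkConf().setAppName("read-partitioned-table"))
    val hiveContext = new HiveContext(sc)

    // Read metastore Parquet tables through the Hive SerDe
    // rather than Spark SQL's built-in Parquet support.
    hiveContext.setConf("spark.sql.hive.convertMetastoreParquet", "false")

    // Assumed table name and partition column; filter on the partition
    // so that only the needed partition is read.
    val df = hiveContext.sql(
      "SELECT * FROM example_db.example_table WHERE dt = '2017-05-25'")
    df.show(10)

    sc.stop()
  }
}
```

Whether this avoids the file listing and the driver-side cache for this particular table has not been verified here. Reading through the Hive SerDe also gives up the optimizations of the built-in Parquet reader, so it is a trade-off to measure rather than a guaranteed fix.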

Exception stack trace:

java.lang.OutOfMemoryError: GC overhead limit exceeded

at java.lang.StringBuilder. at java.io.ObjectStreamClass.getClassSignature(ObjectStreamClass.java:1458)

at java.io.ObjectStreamClass$MemberSignature. at java.io.ObjectStreamClass.computeDefaultSUID(ObjectStreamClass.java:1701)

at java.io.ObjectStreamClass.access$100(ObjectStreamClass.java:69)

at java.io.ObjectStreamClass$1.run(ObjectStreamClass.java:247)

at java.io.ObjectStreamClass$1.run(ObjectStreamClass.java:245)

at java.security.AccessController.doPrivileged(Native Method)

at java.io.ObjectStreamClass.getSerialVersionUID(ObjectStreamClass.java:244)

at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1601)

at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1514)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1750)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1347)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:1964)

at java.io.ObjectInputStream.defaultReadObject(ObjectInputStream.java:498)

at org.apache.spark.rpc.netty.NettyRpcEndpointRef.readObject(NettyRpcEnv.scala:499)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:601)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1866)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1771)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:1964)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1888)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1347)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:369)

at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:76)

Exception in thread "dispatcher-event-loop-23" java.lang.OutOfMemoryError: GC overhead limit exceeded

at scala.collection.mutable.MapLike$class.getOrElseUpdate(MapLike.scala:187)

at scala.collection.mutable.AbstractMap.getOrElseUpdate(Map.scala:91)

at org.apache.spark.scheduler.TaskSchedulerImpl$$anonfun$resourceOffers$1$$anonfun$apply$5.apply(TaskSchedulerImpl.scala:293)

at scala.Option.foreach(Option.scala:236)

at org.apache.spark.scheduler.TaskSchedulerImpl$$anonfun$resourceOffers$1.apply(TaskSchedulerImpl.scala:284)

at scala.collection.immutable.List.foreach(List.scala:318)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend$DriverEndpoint.org$apache$spark$scheduler$cluster$CoarseGrainedSchedulerBackend$DriverEndpoint$$makeOffers(CoarseGrainedSchedulerBackend.scala:200)

at org.apache.spark.rpc.netty.Inbox$$anonfun$process$1.apply$mcV$sp(Inbox.scala:116)

at org.apache.spark.rpc.netty.Inbox.safelyCall(Inbox.scala:204)

at org.apache.spark.rpc.netty.Dispatcher$MessageLoop.run(Dispatcher.scala:215)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

at java.lang.Thread.run(Thread.java:722)

java.lang.OutOfMemoryError: GC overhead limit exceeded

at akka.actor.LightArrayRevolverScheduler$$anon$8.nextTick(Scheduler.scala:409)

at java.lang.Thread.run(Thread.java:722)

17/05/25 12:14:43 ERROR ActorSystemImpl: Uncaught fatal error from thread [sparkDriverActorSystem-scheduler-1] shutting down ActorSystem [sparkDriverActorSystem]

java.lang.OutOfMemoryError: GC overhead limit exceeded

at akka.actor.LightArrayRevolverScheduler$$anon$8.run(Scheduler.scala:375)

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(5,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(1,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(4,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(8,WrappedArray())

17/05/25 12:14:43 INFO RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(20,WrappedArray())

java.nio.channels.ClosedChannelException

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(16,WrappedArray())

java.nio.channels.ClosedChannelException

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(7,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(15,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(12,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(5,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(20,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(7,WrappedArray())

17/05/25 12:14:43 INFO YarnClientSchedulerBackend: Shutting down all executors

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(1,WrappedArray())

17/05/25 12:14:43 ERROR YarnScheduler: Lost executor 8 on hdp42.car.bj2.yongche.com: Executor heartbeat timed out after 143220 ms

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(3,WrappedArray())

17/05/25 12:14:43 ERROR YarnScheduler: Lost executor 11 on hdp46.car.bj2.yongche.com: Executor heartbeat timed out after 153371 ms

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(4,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(11,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(6,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(6,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(14,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(18,WrappedArray())

java.nio.channels.ClosedChannelException

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(16,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(9,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(14,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(9,WrappedArray())

17/05/25 12:14:43 ERROR LiveListenerBus: SparkListenerBus has already stopped! Dropping event SparkListenerExecutorMetricsUpdate(4,WrappedArray())

17/05/25 12:14:43 WARN YarnSchedulerBackend$YarnSchedulerEndpoint: Container marked as failed: container_e2304_1488635601234_1323367_01_000009 on host: hdp42.car.bj2.yongche.com. Exit status: 1. Diagnostics: Exception from container-launch.

Exit code: 1

at org.apache.hadoop.util.Shell.runCommand(Shell.java:545)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:722)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:334)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.lang.Thread.run(Thread.java:722)

org.apache.spark.rpc.RpcTimeoutException: Futures timed out after [120 seconds]. This timeout is controlled by spark.rpc.askTimeout

at org.apache.spark.rpc.RpcTimeout.org$apache$spark$rpc$RpcTimeout$$createRpcTimeoutException(RpcTimeout.scala:48)

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:63)

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:59)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:76)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:101)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:77)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.stopExecutors(CoarseGrainedSchedulerBackend.scala:335)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.stop(YarnClientSchedulerBackend.scala:190)

at org.apache.spark.scheduler.TaskSchedulerImpl.stop(TaskSchedulerImpl.scala:446)

at org.apache.spark.SparkContext$$anonfun$stop$9.apply$mcV$sp(SparkContext.scala:1740)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1219)

at com.yongche.App$.etl$1(App.scala:111)

at com.yongche.App.main(App.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at java.lang.reflect.Method.invoke(Method.java:601)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)

Caused by: java.util.concurrent.TimeoutException: Futures timed out after [120 seconds]

at scala.concurrent.impl.Promise$DefaultPromise.result(Promise.scala:223)

at scala.concurrent.BlockContext$DefaultBlockContext$.blockOn(BlockContext.scala:53)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

Container id: container_e2304_1488635601234_1323367_01_000009

Exit code: 1

at org.apache.hadoop.util.Shell.run(Shell.java:456)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:722)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:334)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

Container exited with a non-zero exit code 1

org.apache.spark.rpc.RpcTimeoutException: Futures timed out after [120 seconds]. This timeout is controlled by spark.rpc.askTimeout

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:63)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:33)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:101)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.removeExecutor(CoarseGrainedSchedulerBackend.scala:370)

at org.apache.spark.rpc.netty.Inbox$$anonfun$process$1.apply$mcV$sp(Inbox.scala:116)

at org.apache.spark.rpc.netty.Dispatcher$MessageLoop.run(Dispatcher.scala:215)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

Caused by: java.util.concurrent.TimeoutException: Futures timed out after [120 seconds]

at scala.concurrent.impl.Promise$DefaultPromise.result(Promise.scala:223)

at scala.concurrent.BlockContext$DefaultBlockContext$.blockOn(BlockContext.scala:53)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

org.apache.spark.rpc.RpcTimeoutException: Futures timed out after [120 seconds]. This timeout is controlled by spark.rpc.askTimeout

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:63)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:33)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:101)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:77)

at org.apache.spark.SparkContext.killAndReplaceExecutor(SparkContext.scala:1499)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1219)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

at java.util.concurrent.FutureTask.run(FutureTask.java:166)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

Caused by: java.util.concurrent.TimeoutException: Futures timed out after [120 seconds]

at scala.concurrent.impl.Promise$DefaultPromise.result(Promise.scala:223)

at scala.concurrent.BlockContext$DefaultBlockContext$.blockOn(BlockContext.scala:53)

... 14 more

17/05/25 12:20:49 ERROR Utils: Uncaught exception in thread kill-executor-thread

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:77)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.killExecutors(CoarseGrainedSchedulerBackend.scala:511)

at org.apache.spark.HeartbeatReceiver$$anonfun$org$apache$spark$HeartbeatReceiver$$expireDeadHosts$3$$anon$3$$anonfun$run$3.apply$mcV$sp(HeartbeatReceiver.scala:206)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1219)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

at java.util.concurrent.FutureTask.run(FutureTask.java:166)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

Caused by: org.apache.spark.rpc.RpcTimeoutException: Futures timed out after [120 seconds]. This timeout is controlled by spark.rpc.askTimeout

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:63)

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:59)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:76)

... 13 more

at scala.concurrent.impl.Promise$DefaultPromise.ready(Promise.scala:219)

at scala.concurrent.Await$$anonfun$result$1.apply(package.scala:107)

at scala.concurrent.BlockContext$DefaultBlockContext$.blockOn(BlockContext.scala:53)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

17/05/25 12:20:49 ERROR Utils: Uncaught exception in thread main

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.stop(CoarseGrainedSchedulerBackend.scala:344)

at org.apache.spark.scheduler.TaskSchedulerImpl.stop(TaskSchedulerImpl.scala:446)

at org.apache.spark.SparkContext$$anonfun$stop$9.apply$mcV$sp(SparkContext.scala:1740)

at org.apache.spark.SparkContext.stop(SparkContext.scala:1739)

at com.yongche.App$.main(App.scala:116)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:731)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:118)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.stopExecutors(CoarseGrainedSchedulerBackend.scala:335)

Caused by: org.apache.spark.rpc.RpcTimeoutException: Futures timed out after [120 seconds]. This timeout is controlled by spark.rpc.askTimeout

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:63)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:33)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:101)

Caused by: java.util.concurrent.TimeoutException: Futures timed out after [120 seconds]

at scala.concurrent.impl.Promise$DefaultPromise.result(Promise.scala:223)

at scala.concurrent.BlockContext$DefaultBlockContext$.blockOn(BlockContext.scala:53)

... 22 more

17/05/25 12:20:49 ERROR Inbox: Ignoring error

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.removeExecutor(CoarseGrainedSchedulerBackend.scala:373)

at org.apache.spark.rpc.netty.Inbox$$anonfun$process$1.apply$mcV$sp(Inbox.scala:116)

at org.apache.spark.rpc.netty.Inbox.process(Inbox.scala:100)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.lang.Thread.run(Thread.java:722)

Container id: container_e2304_1488635601234_1323367_01_000009

Stack trace: ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.run(Shell.java:456)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:211)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

Container exited with a non-zero exit code 1

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:118)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend.removeExecutor(CoarseGrainedSchedulerBackend.scala:370)

Caused by: org.apache.spark.rpc.RpcTimeoutException: Futures timed out after [120 seconds]. This timeout is controlled by spark.rpc.askTimeout

at org.apache.spark.rpc.RpcTimeout$$anonfun$addMessageIfTimeout$1.applyOrElse(RpcTimeout.scala:63)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:33)

at org.apache.spark.rpc.RpcEndpointRef.askWithRetry(RpcEndpointRef.scala:101)

Caused by: java.util.concurrent.TimeoutException: Futures timed out after [120 seconds]

at scala.concurrent.Await$$anonfun$result$1.apply(package.scala:107)

at scala.concurrent.Await$.result(package.scala:107)

... 11 more

17/05/25 12:20:50 WARN NettyRpcEnv: Ignored message: true

17/05/25 12:20:50 WARN YarnSchedulerBackend$YarnSchedulerEndpoint: Container marked as failed: container_e2304_1488635601234_1323367_01_000034 on host: hdp42.car.bj2.yongche.com. Exit status: 1. Diagnostics: Exception from container-launch.

Exit code: 1

Stack trace: ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.run(Shell.java:456)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:211)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.FutureTask.run(FutureTask.java:166)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

Container exited with a non-zero exit code 1

17/05/25 12:20:50 WARN NettyRpcEnv: Ignored message: true

17/05/25 12:20:51 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

17/05/25 12:20:51 INFO MemoryStore: MemoryStore cleared

17/05/25 12:20:51 INFO BlockManager: BlockManager stopped

17/05/25 12:20:51 INFO BlockManagerMaster: BlockManagerMaster stopped

17/05/25 12:20:52 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp33.car.bj2.yongche.com:39572) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp50.car.bj2.yongche.com:61481) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp56.car.bj2.yongche.com:22771) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp22.car.bj2.yongche.com:18239) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at java.lang.Thread.run(Thread.java:722)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp31.car.bj2.yongche.com:24195) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp30.car.bj2.yongche.com:29503) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp33.car.bj2.yongche.com:39572) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp31.car.bj2.yongche.com:24195) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp34.car.bj2.yongche.com:18375) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at java.lang.Thread.run(Thread.java:722)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp34.car.bj2.yongche.com:18375) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp28.car.bj2.yongche.com:27463) dropped.

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp30.car.bj2.yongche.com:29503) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 INFO SparkContext: Successfully stopped SparkContext

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp28.car.bj2.yongche.com:27463) dropped.

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp34.car.bj2.yongche.com:18375) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postToAll(Dispatcher.scala:109)

at org.apache.spark.network.server.TransportRequestHandler.channelUnregistered(TransportRequestHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:328)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

java.lang.IllegalStateException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.DefaultChannelPipeline.fireChannelUnregistered(DefaultChannelPipeline.java:739)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:362)

at java.lang.Thread.run(Thread.java:722)

17/05/25 12:20:52 WARN Dispatcher: Message RemoteProcessDisconnected(hdp22.car.bj2.yongche.com:18239) dropped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcHandler.connectionTerminated(NettyRpcEnv.scala:630)

at org.apache.spark.network.server.TransportChannelHandler.channelUnregistered(TransportChannelHandler.java:89)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelUnregistered(AbstractChannelHandlerContext.java:158)

at io.netty.channel.ChannelInboundHandlerAdapter.channelUnregistered(ChannelInboundHandlerAdapter.java:53)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelUnregistered(AbstractChannelHandlerContext.java:144)

at io.netty.channel.AbstractChannel$AbstractUnsafe$8.run(AbstractChannel.java:659)

at io.netty.util.concurrent.SingleThreadEventExecutor.confirmShutdown(SingleThreadEventExecutor.java:627)

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)

Exception in thread "main" java.lang.OutOfMemoryError: GC overhead limit exceeded

at java.lang.String.<init>

at java.net.URI.toString(URI.java:1926)

at org.apache.hadoop.fs.Path.<init>

at org.apache.spark.sql.sources.HadoopFsRelation$$anonfun$23.apply(interfaces.scala:918)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:244)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:108)

at scala.collection.mutable.ArrayOps$ofRef.map(ArrayOps.scala:108)

at org.apache.spark.sql.sources.HadoopFsRelation$FileStatusCache.listLeafFiles(interfaces.scala:445)

at org.apache.spark.sql.sources.HadoopFsRelation.org$apache$spark$sql$sources$HadoopFsRelation$$fileStatusCache$lzycompute(interfaces.scala:489)

at org.apache.spark.sql.sources.HadoopFsRelation.cachedLeafStatuses(interfaces.scala:494)

at org.apache.spark.sql.execution.datasources.parquet.ParquetRelation.org$apache$spark$sql$execution$datasources$parquet$ParquetRelation$$metadataCache$lzycompute(ParquetRelation.scala:145)

at org.apache.spark.sql.execution.datasources.parquet.ParquetRelation$$anonfun$6.apply(ParquetRelation.scala:202)

at scala.Option.getOrElse(Option.scala:120)

at org.apache.spark.sql.sources.HadoopFsRelation.schema$lzycompute(interfaces.scala:636)

at org.apache.spark.sql.execution.datasources.LogicalRelation.<init>

at org.apache.spark.sql.hive.HiveMetastoreCatalog$$anonfun$12.apply(HiveMetastoreCatalog.scala:481)

17/05/25 12:20:52 INFO ShutdownHookManager: Deleting directory /home/y/var/spark/local/spark-94b24edc-668e-4de0-84a9-ca7b9e7a642a

17/05/25 12:20:52 ERROR Utils: Uncaught exception in thread driver-revive-thread

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcEnv.send(NettyRpcEnv.scala:192)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend$DriverEndpoint$$anon$1$$anonfun$run$1$$anonfun$apply$mcV$sp$1.apply(CoarseGrainedSchedulerBackend.scala:102)

at scala.Option.foreach(Option.scala:236)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1219)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend$DriverEndpoint$$anon$1.run(CoarseGrainedSchedulerBackend.scala:101)

at java.util.concurrent.FutureTask$Sync.innerRunAndReset(FutureTask.java:351)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:178)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

17/05/25 12:20:54 ERROR Utils: Uncaught exception in thread driver-revive-thread

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:159)

at org.apache.spark.rpc.netty.NettyRpcEnv.send(NettyRpcEnv.scala:192)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend$DriverEndpoint$$anon$1$$anonfun$run$1$$anonfun$apply$mcV$sp$1.apply(CoarseGrainedSchedulerBackend.scala:102)

at scala.Option.foreach(Option.scala:236)

at org.apache.spark.scheduler.cluster.CoarseGrainedSchedulerBackend$DriverEndpoint$$anon$1.run(CoarseGrainedSchedulerBackend.scala:101)

at java.util.concurrent.FutureTask$Sync.innerRunAndReset(FutureTask.java:351)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:178)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

at java.lang.Thread.run(Thread.java:722)