FFmpeg Audio Player (8): Creating the FFmpeg Player

Original article: https://www.jianshu.com/p/73b0a0a9bb0d


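FFmpeg Audio Player (1): Introduction

FFmpeg Audio Player (2): Building the shared libraries

FFmpeg Audio Player (3): Adding FFmpeg to Android

FFmpeg Audio Player (4): Decoding MP3 to PCM

FFmpeg Audio Player (5): Single-input filters (volume, atempo)

FFmpeg Audio Player (6): Multi-input filter (amix)

FFmpeg Audio Player (7): Playing audio with OpenSL ES

FFmpeg Audio Player (8): Creating the FFmpeg player

FFmpeg Audio Player (9): Playback control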

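With the groundwork from the previous parts in place, we can now build the FFmpeg audio player. The main requirements are: mixing and playing several audio files together, controlling the volume of each track, and changing the playback speed of the mixed audio. Pause, the progress bar, and stop are left for the next part.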

The AudioPlayer class

First, create a C++ class named AudioPlayer. To decode, filter, and play audio, it needs member variables for decoding, filtering, the frame queue, PCM output, multithreading, and OpenSL ES:

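```cpp
// Decoding
int fileCount;                      // number of input audio files
AVFormatContext **fmt_ctx_arr;      // array of FFmpeg format contexts
AVCodecContext **codec_ctx_arr;     // array of decoder contexts
int *stream_index_arr;              // array of audio stream indices

// Filtering
AVFilterGraph *graph;
AVFilterContext **srcs;             // input filters (abuffer)
AVFilterContext *sink;              // output filter (abuffersink)
char **volumes;                     // volume of each track
char *tempo;                        // playback speed, 0.5~2.0

// AVFrame queue
std::vector<AVFrame *> queue;       // holds the decoded and filtered AVFrames

// Input/output formats
SwrContext *swr_ctx;                // resampler, converts AVFrames to PCM data
uint64_t in_ch_layout;              // input channel layout
int in_sample_rate;                 // input sample rate
int in_ch_layout_nb;                // number of input channels, used with swr_ctx
enum AVSampleFormat in_sample_fmt;  // input sample format
uint64_t out_ch_layout;             // output channel layout
int out_sample_rate;                // output sample rate
int out_ch_layout_nb;               // number of output channels, used with swr_ctx
int max_audio_frame_size;           // maximum PCM buffer size in bytes
enum AVSampleFormat out_sample_fmt; // output sample format

// Progress
AVRational time_base;               // time base, used to compute progress
double total_time;                  // total duration (seconds)
double current_time;                // current position (seconds)
int isPlay = 0;                     // playback state, 1 = playing

// Multithreading
pthread_t decodeId;                 // decoding thread id
pthread_t playId;                   // playback thread id
pthread_mutex_t mutex;              // lock protecting the queue
pthread_cond_t not_full;            // "not full" condition, waited on when producing AVFrames
pthread_cond_t not_empty;           // "not empty" condition, waited on when consuming AVFrames

// OpenSL ES
SLObjectItf engineObject;           // engine object
SLEngineItf engineItf;              // engine interface
SLObjectItf mixObject;              // output mix object
SLObjectItf playerObject;           // player object
SLPlayItf playItf;                  // play interface
SLAndroidSimpleBufferQueueItf bufferQueueItf; // buffer queue interface
```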

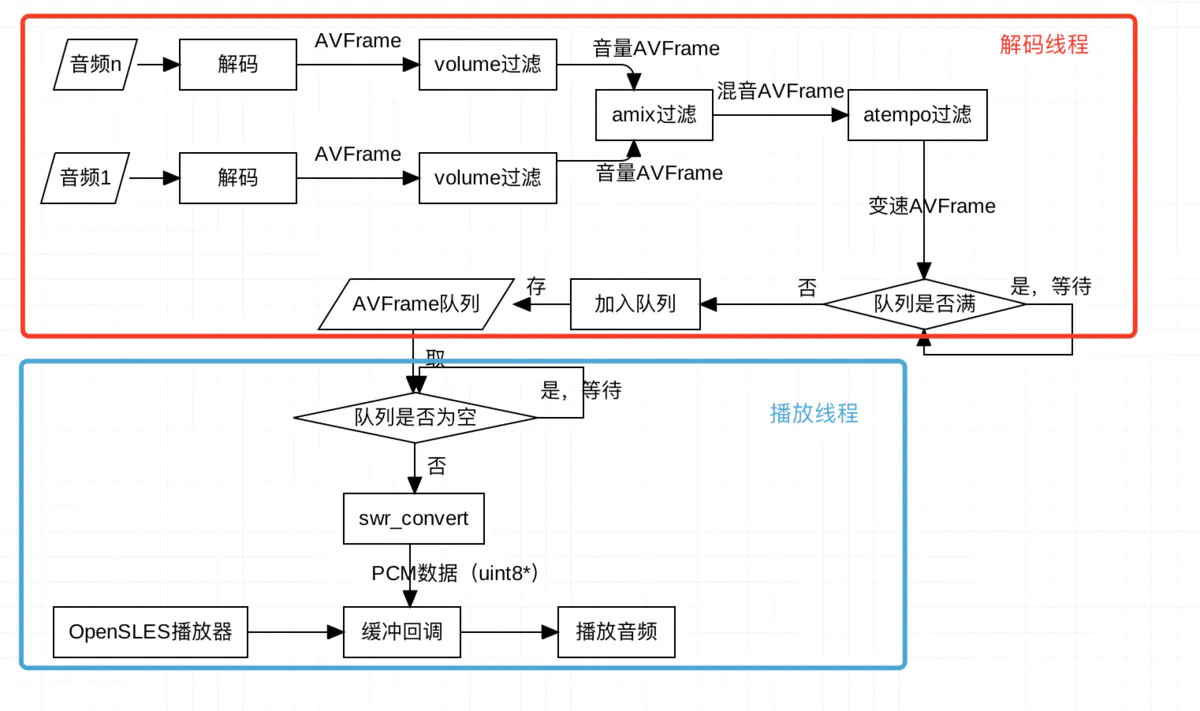

Decoding and playback flow

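The figure above shows the overall processing and playback flow. It uses two threads: a decoding thread and a playback thread. The decoding thread decodes and filters the audio files and adds the results to the queue. The playback thread takes the processed AVFrames from the queue, converts them to PCM, and plays them through the buffer queue callback.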

To initialize these members, we define one initialization method for each group of members:

```cpp
int createPlayer();             // create the OpenSL ES player
int initCodecs(char **pathArr); // initialize the decoders
int initSwrContext();           // initialize the SwrContext
int initFilters();              // initialize the filters
```

The constructor takes the array of audio file paths and the number of files, and calls the initialization methods:

```cpp
AudioPlayer::AudioPlayer(char **pathArr, int len) {
    fileCount = len;
    // default volume 1.0, default speed 1.0
    volumes = (char **) malloc(fileCount * sizeof(char *));
    for (int i = 0; i < fileCount; i++) {
        volumes[i] = (char *) "1.0"; // string literals are const in C++, hence the cast
    }
    tempo = (char *) "1.0";
    // lock and condition variables for the producer/consumer queue
    pthread_mutex_init(&mutex, NULL);
    pthread_cond_init(&not_full, NULL);
    pthread_cond_init(&not_empty, NULL);
    initCodecs(pathArr);
    avfilter_register_all();
    initSwrContext();
    initFilters();
    createPlayer();
}
```

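Besides calling the init methods, the constructor sets the volume of each track and the playback speed, and initializes the mutex and the condition variables that control the producer/consumer queue.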
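The volume and speed defaults are kept as strings because they are later passed as filter option values via `av_dict_set`. Below is a minimal sketch of turning a numeric speed into such a string, clamped to the 0.5~2.0 range noted on the `tempo` member. `format_tempo` is a hypothetical helper, not part of the project.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper (not from the project): clamp a playback speed to the
// 0.5~2.0 range used in this article, then format it as the string value
// that would be handed to av_dict_set() for the atempo "tempo" option.
std::string format_tempo(double speed) {
    double clamped = std::min(2.0, std::max(0.5, speed));
    char buf[16];
    std::snprintf(buf, sizeof(buf), "%.2f", clamped);
    return std::string(buf);
}
```

For example, `format_tempo(3.0)` returns `"2.00"` and `format_tempo(0.1)` returns `"0.50"`. Holding such values in `std::string` objects owned by the player would also avoid pointing a `char *` at a string literal.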

Initializing the decoder arrays

```cpp
int AudioPlayer::initCodecs(char **pathArr) {
    LOGI("init codecs");
    av_register_all();
    fmt_ctx_arr = (AVFormatContext **) malloc(fileCount * sizeof(AVFormatContext *));
    codec_ctx_arr = (AVCodecContext **) malloc(fileCount * sizeof(AVCodecContext *));
    stream_index_arr = (int *) malloc(fileCount * sizeof(int));
    for (int n = 0; n < fileCount; n++) {
        AVFormatContext *fmt_ctx = avformat_alloc_context();
        const char *path = pathArr[n];
        if (avformat_open_input(&fmt_ctx, path, NULL, NULL) < 0) {// open the file
            LOGE("could not open file:%s", path);
            return -1;
        }
        fmt_ctx_arr[n] = fmt_ctx; // store only after a successful open (a failed open frees the context)
        if (avformat_find_stream_info(fmt_ctx, NULL) < 0) {// read the stream information
            LOGE("find stream info error");
            return -1;
        }
        // find the audio stream index
        int audio_stream_index = -1;
        for (unsigned int i = 0; i < fmt_ctx->nb_streams; i++) {
            if (fmt_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_AUDIO) {
                audio_stream_index = i;
                LOGI("find audio stream index:%d", audio_stream_index);
                break;
            }
        }
        if (audio_stream_index < 0) {
            LOGE("error find stream index");
            return -1;
        }
        stream_index_arr[n] = audio_stream_index;
        // find the decoder
        AVCodecContext *codec_ctx = avcodec_alloc_context3(NULL);
        codec_ctx_arr[n] = codec_ctx;
        AVStream *stream = fmt_ctx->streams[audio_stream_index];
        avcodec_parameters_to_context(codec_ctx, stream->codecpar);
        AVCodec *codec = avcodec_find_decoder(codec_ctx->codec_id);
        if (!codec) {
            LOGE("could not find decoder");
            return -1;
        }
        if (n == 0) {// record the input format from the first file
            in_sample_fmt = codec_ctx->sample_fmt;
            in_ch_layout = codec_ctx->channel_layout;
            in_sample_rate = codec_ctx->sample_rate;
            in_ch_layout_nb = av_get_channel_layout_nb_channels(in_ch_layout);
            max_audio_frame_size = in_sample_rate * in_ch_layout_nb;
            time_base = stream->time_base;
            int64_t duration = stream->duration;
            total_time = av_q2d(stream->time_base) * duration;
            LOGI("total time:%lf", total_time);
        } else {// with several files, check that the formats match (sample rate, sample format, channel layout)
            if (in_ch_layout != codec_ctx->channel_layout
                || in_sample_fmt != codec_ctx->sample_fmt
                || in_sample_rate != codec_ctx->sample_rate) {
                LOGE("input file formats do not match");
                return -1;
            }
        }
        // open the decoder
        if (avcodec_open2(codec_ctx, codec, NULL) < 0) {
            LOGE("could not open codec");
            return -1;
        }
    }
    return 1;
}
```

The input format is saved here so that it can be used to initialize the SwrContext and the filters.
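Because `initFilters()` builds every `abuffer` source from one shared format string, all inputs must share the format recorded from the first file. The comparison done in `initCodecs` can be sketched without FFmpeg types as below. `InputFormat` and `formats_match` are hypothetical names, and the sample format is kept as a plain `int` instead of `AVSampleFormat`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the fields initCodecs() reads from each
// AVCodecContext: sample_rate, sample_fmt and channel_layout.
struct InputFormat {
    int sample_rate;
    int sample_fmt;               // an AVSampleFormat value, kept as int here
    std::uint64_t channel_layout; // channel-layout bit mask
};

// Returns true when every input matches the first one, mirroring the check
// that rejects files whose format differs from file 0.
bool formats_match(const std::vector<InputFormat> &inputs) {
    for (std::size_t i = 1; i < inputs.size(); i++) {
        if (inputs[i].sample_rate != inputs[0].sample_rate ||
            inputs[i].sample_fmt != inputs[0].sample_fmt ||
            inputs[i].channel_layout != inputs[0].channel_layout) {
            return false;
        }
    }
    return true;
}
```

A single file, or an empty list, trivially passes. Files whose formats differ would need resampling (for example one SwrContext per input) before they could feed the same `abuffer` settings.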

Initializing the filters

```cpp
int AudioPlayer::initFilters() {
    LOGI("init filters");
    graph = avfilter_graph_alloc();
    srcs = (AVFilterContext **) malloc(fileCount * sizeof(AVFilterContext *));
    char args[128];
    AVDictionary *dic = NULL;
    // mixing filter
    AVFilter *amix = avfilter_get_by_name("amix");
    AVFilterContext *amix_ctx = avfilter_graph_alloc_filter(graph, amix, "amix");
    snprintf(args, sizeof(args), "inputs=%d:duration=first:dropout_transition=3", fileCount);
    if (avfilter_init_str(amix_ctx, args) < 0) {
        LOGE("error init amix filter");
        return -1;
    }
    // format of the abuffer inputs, shared by all files
    const char *sample_fmt = av_get_sample_fmt_name(in_sample_fmt);
    snprintf(args, sizeof(args), "sample_rate=%d:sample_fmt=%s:channel_layout=0x%" PRIx64,
             in_sample_rate, sample_fmt, in_ch_layout);
    for (int i = 0; i < fileCount; i++) {
        // input filter
        AVFilter *abuffer = avfilter_get_by_name("abuffer");
        char name[50];
        snprintf(name, sizeof(name), "src%d", i);
        srcs[i] = avfilter_graph_alloc_filter(graph, abuffer, name);
        if (avfilter_init_str(srcs[i], args) < 0) {
            LOGE("error init abuffer filter");
            return -1;
        }
        // volume filter
        AVFilter *volume = avfilter_get_by_name("volume");
        AVFilterContext *volume_ctx = avfilter_graph_alloc_filter(graph, volume, "volume");
        av_dict_set(&dic, "volume", volumes[i], 0);
        if (avfilter_init_dict(volume_ctx, &dic) < 0) {
            LOGE("error init volume filter");
            return -1;
        }
        // link the input to the volume filter
        if (avfilter_link(srcs[i], 0, volume_ctx, 0) < 0) {
            LOGE("error link to volume filter");
            return -1;
        }
        // link the volume filter to input pad i of the amix filter
        if (avfilter_link(volume_ctx, 0, amix_ctx, i) < 0) {
            LOGE("error link to amix filter");
            return -1;
        }
        av_dict_free(&dic);
    }
    // speed filter atempo
    AVFilter *atempo = avfilter_get_by_name("atempo");
    AVFilterContext *atempo_ctx = avfilter_graph_alloc_filter(graph, atempo, "atempo");
    av_dict_set(&dic, "tempo", tempo, 0);
    if (avfilter_init_dict(atempo_ctx, &dic) < 0) {
        LOGE("error init atempo filter");
        return -1;
    }
    av_dict_free(&dic);
    // output format
    AVFilter *aformat = avfilter_get_by_name("aformat");
    AVFilterContext *aformat_ctx = avfilter_graph_alloc_filter(graph, aformat, "aformat");
    snprintf(args, sizeof(args), "sample_rates=%d:sample_fmts=%s:channel_layouts=0x%" PRIx64,
             in_sample_rate, sample_fmt, in_ch_layout);
    if (avfilter_init_str(aformat_ctx, args) < 0) {
        LOGE("error init aformat filter");
        return -1;
    }
    // output buffer
    AVFilter *abuffersink = avfilter_get_by_name("abuffersink");
    sink = avfilter_graph_alloc_filter(graph, abuffersink, "sink");
    if (avfilter_init_str(sink, NULL) < 0) {
        LOGE("error init abuffersink filter");
        return -1;
    }
    // link amix -> atempo -> aformat -> abuffersink
    if (avfilter_link(amix_ctx, 0, atempo_ctx, 0) < 0) {
        LOGE("error link to atempo filter");
        return -1;
    }
    if (avfilter_link(atempo_ctx, 0, aformat_ctx, 0) < 0) {
        LOGE("error link to aformat filter");
        return -1;
    }
    if (avfilter_link(aformat_ctx, 0, sink, 0) < 0) {
        LOGE("error link to abuffersink filter");
        return -1;
    }
    if (avfilter_graph_config(graph, NULL) < 0) {
        LOGE("error config graph");
        return -1;
    }
    return 1;
}
```

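The input format obtained while initializing the decoders is used to initialize the abuffer input filters. Their sample rate, sample format, and channel layout must match the decoded frames. The abuffer filters are then linked through the volume, amix, and atempo filters, which gives per-track volume, mixing, and speed change.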
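For reference, the chain linked by hand above (abuffer → volume → amix → atempo → aformat → abuffersink) can also be written in FFmpeg's textual filtergraph syntax. This is the same syntax used by `-filter_complex` and parsed by `avfilter_graph_parse_ptr()`. The helper below is a hypothetical sketch that only builds that string. The pad labels `in0`, `v0`, and `out` are names chosen here, and the project itself links the filters manually as shown.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: build a filtergraph description equivalent to the
// chain wired up by initFilters():
//   [in_i] -> volume -> amix -> atempo -> aformat -> [out]
std::string describe_graph(const std::vector<std::string> &volumes,
                           const std::string &tempo,
                           const std::string &aformat_args) {
    std::string desc;
    std::string amix_inputs;
    for (std::size_t i = 0; i < volumes.size(); i++) {
        std::string n = std::to_string(i);
        // one volume filter per input track
        desc += "[in" + n + "]volume=" + volumes[i] + "[v" + n + "];";
        amix_inputs += "[v" + n + "]";
    }
    // all volume outputs feed amix, whose output runs through atempo and aformat
    desc += amix_inputs + "amix=inputs=" + std::to_string(volumes.size()) +
            ":duration=first:dropout_transition=3";
    desc += ",atempo=" + tempo + ",aformat=" + aformat_args + "[out]";
    return desc;
}
```

With two tracks at volumes 1.0 and 0.5, the result starts with `[in0]volume=1.0[v0];[in1]volume=0.5[v1];[v0][v1]amix=inputs=2`. Logging such a string can make the hand-built topology easier to inspect.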

Initializing the SwrContext

```cpp
int AudioPlayer::initSwrContext() {
    LOGI("init swr context");
    swr_ctx = swr_alloc();
    out_sample_fmt = AV_SAMPLE_FMT_S16;
    out_ch_layout = AV_CH_LAYOUT_STEREO;
    out_ch_layout_nb = 2;
    out_sample_rate = in_sample_rate;
    max_audio_frame_size = out_sample_rate * 2;
    swr_alloc_set_opts(swr_ctx, out_ch_layout, out_sample_fmt, out_sample_rate, in_ch_layout,
                       in_sample_fmt, in_sample_rate, 0, NULL);
    if (swr_init(swr_ctx) < 0) {
        LOGE("error init SwrContext");
        return -1;
    }
    return 1;
}
```

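OpenSL ES needs to be able to play the decoded AVFrames, so the output format is fixed to 16-bit samples (AV_SAMPLE_FMT_S16) and stereo (AV_CH_LAYOUT_STEREO, 2 channels). The sample rate stays the same as the input. The PCM buffer filled in the buffer callback is at most sample rate * 2 bytes.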
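The buffer arithmetic behind these choices can be checked in a few lines. For packed S16 stereo, one sample per channel takes 2 channels × 2 bytes = 4 bytes. Note that `swr_convert()` counts its output capacity (`out_count`) in samples per channel, not in bytes. The helper names below are hypothetical.

```cpp
#include <cassert>

// Bytes of interleaved (packed) PCM for nb_samples samples per channel; this is
// what av_samples_get_buffer_size() returns for packed formats with align = 1.
int pcm_bytes(int nb_samples, int channels, int bytes_per_sample) {
    return nb_samples * channels * bytes_per_sample;
}

// How many samples per channel fit in a buffer of buffer_bytes bytes,
// i.e. the unit swr_convert() expects for its out_count argument.
int samples_capacity(int buffer_bytes, int channels, int bytes_per_sample) {
    return buffer_bytes / (channels * bytes_per_sample);
}
```

At 44100 Hz the `out_sample_rate * 2` buffer is 88200 bytes, which holds 22050 samples per channel (half a second). One MPEG-1 Layer III frame of 1152 samples needs 4608 bytes, so the buffer comfortably holds one frame. Its byte size should still be divided by 4 before it is passed as `out_count`.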

Initializing the OpenSL ES player

```cpp
int AudioPlayer::createPlayer() {
    // create and realize the engine object
    slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);
    (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);
    // get the engine interface
    (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineItf);
    // create the output mix through the engine interface
    (*engineItf)->CreateOutputMix(engineItf, &mixObject, 0, 0, 0);
    (*mixObject)->Realize(mixObject, SL_BOOLEAN_FALSE);
    // player parameters: an Android simple buffer queue with 2 buffers
    SLDataLocator_AndroidSimpleBufferQueue
            android_queue = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};
    SLuint32 samplesPerSec = (SLuint32) out_sample_rate * 1000; // OpenSL ES uses milliHertz
    // PCM format, must match the SwrContext output
    SLDataFormat_PCM pcm = {SL_DATAFORMAT_PCM,
                            2, // two channels
                            samplesPerSec,
                            SL_PCMSAMPLEFORMAT_FIXED_16, // bits per sample
                            SL_PCMSAMPLEFORMAT_FIXED_16, // container size
                            SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT, // stereo
                            SL_BYTEORDER_LITTLEENDIAN};
    SLDataSource slDataSource = {&android_queue, &pcm};
    // output sink
    SLDataLocator_OutputMix outputMix = {SL_DATALOCATOR_OUTPUTMIX, mixObject};
    SLDataSink audioSnk = {&outputMix, NULL};
    // numInterfaces is 1 below, so only SL_IID_BUFFERQUEUE is actually requested
    const SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND, SL_IID_VOLUME};
    const SLboolean req[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE};
    // create and realize the player object through the engine interface
    (*engineItf)->CreateAudioPlayer(engineItf, &playerObject, &slDataSource, &audioSnk, 1, ids,
                                    req);
    (*playerObject)->Realize(playerObject, SL_BOOLEAN_FALSE);
    // get the play interface
    (*playerObject)->GetInterface(playerObject, SL_IID_PLAY, &playItf);
    // get the buffer queue interface
    (*playerObject)->GetInterface(playerObject, SL_IID_BUFFERQUEUE, &bufferQueueItf);
    // register the buffer queue callback
    (*bufferQueueItf)->RegisterCallback(bufferQueueItf, _playCallback, this);
    return 1;
}
```

The PCM format here must match the parameters set on the SwrContext.
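Concretely, the fields must line up as follows:

- `numChannels` is 2, for `AV_CH_LAYOUT_STEREO`.
- `bitsPerSample` is 16, for `AV_SAMPLE_FMT_S16`.
- `samplesPerSec` is the sample rate in milliHertz, which is why `out_sample_rate` is multiplied by 1000.

A hypothetical mirror of those values, written without the OpenSL ES headers (`PcmParams` and `opensl_params` are illustrative names):

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical mirror of the values placed in SLDataFormat_PCM.
struct PcmParams {
    std::uint32_t numChannels;
    std::uint32_t samplesPerSec; // milliHertz, as OpenSL ES expects
    std::uint32_t bitsPerSample;
};

// Derive the OpenSL ES PCM parameters from the SwrContext output settings.
PcmParams opensl_params(int out_sample_rate, int out_channels, int out_bytes_per_sample) {
    PcmParams p;
    p.numChannels = static_cast<std::uint32_t>(out_channels);
    p.samplesPerSec = static_cast<std::uint32_t>(out_sample_rate) * 1000u;
    p.bitsPerSample = static_cast<std::uint32_t>(out_bytes_per_sample) * 8u;
    return p;
}
```

`opensl_params(44100, 2, 2)` gives `samplesPerSec == 44100000`, the same value as the `SL_SAMPLINGRATE_44_1` constant in the OpenSL ES headers. Passing 44100 there instead would describe a 44.1 Hz stream.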

Starting the playback and decoding threads

```cpp
// thread entry points: pthread_create needs plain functions, so `this` is passed as args
void *_decodeAudio(void *args) {
    AudioPlayer *p = (AudioPlayer *) args;
    p->decodeAudio();
    pthread_exit(0);
}

void *_play(void *args) {
    AudioPlayer *p = (AudioPlayer *) args;
    p->setPlaying();
    pthread_exit(0);
}

void AudioPlayer::setPlaying() {
    // set the player to the playing state
    (*playItf)->SetPlayState(playItf, SL_PLAYSTATE_PLAYING);
    // call the callback once by hand to enqueue the first buffer
    _playCallback(bufferQueueItf, this);
}

void AudioPlayer::play() {
    LOGI("play...");
    isPlay = 1;
    pthread_create(&decodeId, NULL, _decodeAudio, this);
    pthread_create(&playId, NULL, _play, this);
}
```

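In play(), pthread_create starts the playback thread and the decoding thread. The playback thread sets the player to the playing state through the play interface and then calls the buffer queue callback once by hand. In the callback, an AVFrame is taken from the queue, converted to PCM, and played with Enqueue. Each time that buffer has been played, OpenSL ES invokes the callback again. Meanwhile, the decoding thread decodes and filters AVFrames and adds them to the queue.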
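`pthread_create()` accepts only a plain function pointer, which is why `_decodeAudio` and `_play` exist. They receive `this` through the `void *` argument and call the member function. Below is a self-contained sketch of the same trampoline pattern. `Worker` is a hypothetical class whose `decodeAudio()` only stands in for the real loop. Unlike `play()`, it also joins the thread.

```cpp
#include <cassert>
#include <pthread.h>

// Hypothetical class illustrating the pattern used by play(): a static member
// function acts as the trampoline, casting the void* argument back to the
// object and calling the member function on it.
class Worker {
public:
    int decoded = 0;
    void decodeAudio() { decoded = 42; } // stands in for the real decode loop
    void start() { pthread_create(&tid, nullptr, &Worker::trampoline, this); }
    void join() { pthread_join(tid, nullptr); }

private:
    pthread_t tid;
    static void *trampoline(void *arg) {
        static_cast<Worker *>(arg)->decodeAudio();
        return nullptr; // returning ends the thread, like pthread_exit(nullptr)
    }
};
```

Calling `start()` and then `join()` leaves `decoded == 42`. Joining, or detaching, the threads lets their resources be reclaimed when playback stops.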

The buffer queue callback

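```cpp
void _playCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {
    AudioPlayer *player = (AudioPlayer *) context;
    // Enqueue does not copy the data, so the buffer must stay valid while it plays.
    // Only one buffer is in flight at a time, so a single buffer allocated once can be
    // reused (this assumes a single AudioPlayer instance).
    static uint8_t *outBuffer = NULL;
    if (!outBuffer) outBuffer = (uint8_t *) av_malloc(player->max_audio_frame_size);
    AVFrame *frame = player->get();
    if (frame) {
        // swr_convert counts its output capacity in samples per channel, not bytes
        int out_capacity = player->max_audio_frame_size /
                (player->out_ch_layout_nb * av_get_bytes_per_sample(player->out_sample_fmt));
        int nb = swr_convert(player->swr_ctx, &outBuffer, out_capacity,
                             (const uint8_t **) frame->data, frame->nb_samples);
        // size in bytes of the samples actually converted
        int size = av_samples_get_buffer_size(NULL, player->out_ch_layout_nb, nb,
                                              player->out_sample_fmt, 1);
        if (size > 0) {
            (*bq)->Enqueue(bq, outBuffer, size);
        }
        av_frame_free(&frame); // the frame returned by get() belongs to the caller
    }
}
```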

Decoding and filtering

```cpp
void AudioPlayer::decodeAudio() {
    LOGI("start decode...");
    AVFrame *frame = av_frame_alloc();
    AVPacket *packet = av_packet_alloc();
    int ret, got_frame;
    int index = 0;
    int64_t pts = AV_NOPTS_VALUE; // pts of the latest audio packet of the first file
    while (isPlay) {
        LOGI("decode frame:%d", index);
        for (int i = 0; i < fileCount; i++) {
            AVFormatContext *fmt_ctx = fmt_ctx_arr[i];
            ret = av_read_frame(fmt_ctx, packet);
            if (ret < 0) {// check the result before using the packet
                LOGE("decode finish");
                goto end;
            }
            if (packet->stream_index != stream_index_arr[i]) {// skip non-audio packets
                av_packet_unref(packet);
                continue;
            }
            if (i == 0) pts = packet->pts; // progress uses file 0, whose time_base was saved
            ret = avcodec_decode_audio4(codec_ctx_arr[i], frame, &got_frame, packet);
            av_packet_unref(packet); // release the packet before the next read
            if (ret < 0) {
                LOGE("error decode packet");
                goto end;
            }
            if (!got_frame) continue; // the decoder may need more packets before it outputs a frame
            ret = av_buffersrc_add_frame(srcs[i], frame);
            if (ret < 0) {
                LOGE("error add frame to filter");
                goto end;
            }
        }
        while (av_buffersink_get_frame(sink, frame) >= 0) {
            frame->pts = pts; // replace the filtered pts with the decoding position
            LOGI("put frame:%d,%lld", index, (long long) frame->pts);
            put(frame);
            av_frame_unref(frame); // put() keeps its own reference
        }
        index++;
    }
end:
    av_packet_free(&packet);
    av_frame_free(&frame);
}
```

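One point to note: a packet read with av_read_frame does not necessarily belong to the audio stream (an MP3 file may, for example, also contain a cover-art picture stream), so packets are filtered by the audio stream index. In the AVFrames obtained from av_buffersink_get_frame, the pts is replaced with the packet's pts so that the playback position can be tracked. After filtering, the pts no longer reflects the current decoding position.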
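The pts saved from the packet is later turned into seconds in `get()` with `av_q2d(time_base) * pts`. Below is the same arithmetic without FFmpeg. `Rational` is a hypothetical stand-in for `AVRational`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for AVRational: numerator / denominator.
struct Rational {
    int num;
    int den;
};

// Same computation as av_q2d(time_base) * pts: convert a timestamp expressed
// in time_base units into seconds.
double pts_to_seconds(std::int64_t pts, Rational time_base) {
    return static_cast<double>(pts) * time_base.num / time_base.den;
}
```

With a time base of 1/44100, a pts of 88200 corresponds to 2.0 seconds. This is why the pts and the `time_base` must come from the same stream.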

Storing and retrieving AVFrames

```cpp
/**
 * Adds an AVFrame to the queue; blocks while the queue already holds 5 frames.
 * @param frame the frame to add (a new reference is stored, the caller keeps its own)
 * @return 1 on success, -1 on failure
 */
int AudioPlayer::put(AVFrame *frame) {
    AVFrame *out = av_frame_alloc();
    if (av_frame_ref(out, frame) < 0) {// new reference to the frame data
        av_frame_free(&out);
        return -1;
    }
    pthread_mutex_lock(&mutex);
    while (queue.size() >= 5) {// while, not if: re-check after spurious wakeups
        LOGI("queue is full,wait for put frame:%d", (int) queue.size());
        pthread_cond_wait(&not_full, &mutex);
    }
    queue.push_back(out);
    pthread_cond_signal(&not_empty);
    pthread_mutex_unlock(&mutex);
    return 1;
}

/**
 * Takes an AVFrame from the queue; blocks while the queue is empty.
 * @return the frame (the caller frees it with av_frame_free), or NULL once playback stops
 */
AVFrame *AudioPlayer::get() {
    pthread_mutex_lock(&mutex);
    while (isPlay) {
        if (queue.empty()) {
            pthread_cond_wait(&not_empty, &mutex);
        } else {
            AVFrame *out = queue.front();   // hand the queued frame over to the caller
            queue.erase(queue.begin());     // remove it from the queue
            pthread_cond_signal(&not_full); // the queue now has room
            int remaining = (int) queue.size();
            pthread_mutex_unlock(&mutex);
            current_time = av_q2d(time_base) * out->pts;
            LOGI("get frame:%d,time:%lf", remaining, current_time);
            return out;
        }
    }
    pthread_mutex_unlock(&mutex);
    return NULL;
}
```

The two condition variables implement a producer/consumer model with a buffer of 5 frames, used to store and retrieve AVFrames.
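The same bounded producer/consumer pattern can also be written with the C++ standard library, which may be easier to reason about than raw pthread calls. This is an alternative sketch, not the project's code, with three substitutions:

- `std::mutex` and `std::condition_variable` replace the pthread primitives.
- A `std::deque` replaces the `std::vector`, so removing from the front takes constant time.
- The predicate form of `wait()` re-checks the condition after every wakeup.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <utility>

// Bounded queue with the same shape as put()/get(): put() blocks while the
// queue is full, get() blocks while it is empty.
template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t capacity) : capacity_(capacity) {}

    void put(T value) {
        std::unique_lock<std::mutex> lock(mutex_);
        // the predicate is re-checked after each wakeup, so spurious wakeups
        // cannot push the queue past its capacity
        not_full_.wait(lock, [this] { return items_.size() < capacity_; });
        items_.push_back(std::move(value));
        not_empty_.notify_one();
    }

    T get() {
        std::unique_lock<std::mutex> lock(mutex_);
        not_empty_.wait(lock, [this] { return !items_.empty(); });
        T value = std::move(items_.front());
        items_.pop_front();
        not_full_.notify_one();
        return value;
    }

private:
    std::size_t capacity_;
    std::deque<T> items_;
    std::mutex mutex_;
    std::condition_variable not_full_;
    std::condition_variable not_empty_;
};
```

Unlike `get()` above, this sketch has no `isPlay` flag, so a blocked consumer cannot be released when playback stops. Adding a stop flag to both predicates and calling `notify_all()` on stop would cover that case.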

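With the code above, several audio files can be played together at volume 1 and speed 1. The actual playback controls are covered in the next part.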

Project repository

Before playing, copy the audio files from assets to the corresponding directory on the SD card.

https://github.com/iamyours/FFmpegAudioPlayer

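Author: 星星y

Link: https://www.jianshu.com/p/73b0a0a9bb0d

Source: Jianshu (简书)

Copyright belongs to the author. For commercial reproduction, please contact the author for permission; for non-commercial reproduction, please credit the source.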