[Docker Basics] A roundup of Docker pitfalls

1. Intermittent error: starting container process caused "process_linux.go:301: running exec setns process for init caused \"exit status 40\"": unknown

Solution: see https://github.com/opencontainers/runc/issues/1740

Most of the memory was being consumed by the page cache. Dropping it works around the error: echo 1 > /proc/sys/vm/drop_caches
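
Before dropping caches it can help to confirm that the page cache really is what fills memory. The sketch below assumes the usual /proc/meminfo layout (MemTotal and Cached lines, values in kB). The function name cached_percent is made up for illustration.

```shell
# cached_percent: read /proc/meminfo-style text on stdin and print the
# page cache ("Cached") as an integer percentage of MemTotal.
cached_percent() {
    awk '/^MemTotal:/ { total = $2 }
         /^Cached:/   { cached = $2 }
         END { if (total > 0) printf "%d\n", cached * 100 / total }'
}

# Usage on a live host (the drop_caches write needs root):
#   cached_percent < /proc/meminfo
#   sync && echo 1 > /proc/sys/vm/drop_caches
```

Running sync first is a common precaution so that dirty pages are written back before the cache is dropped.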

2. Rpmdb checksum is invalid: dCDPT(pkg checksums):

Description:

The rpm database is corrupted and needs to be rebuilt. Run rpm --rebuilddb to rebuild it before yum install …

Solution:

RUN rpm --rebuilddb && yum install -y ...

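3. The agetty process on the Docker host uses 100% CPU

Description:

The container was started with "docker run" using /sbin/init and the --privileged flag.

Solution:

Run the following commands on the host and inside the container: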

systemctl stop [email protected]

systemctl mask [email protected]

4. Failed to get D-Bus connection: Operation not permitted

Description:

Occurs when running the systemctl command inside a CentOS 7.2 container.

Solution:

docker run --privileged -d centos:7.2.1511 /usr/sbin/init

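5. Fixing slow SSH logins

sshd does a reverse DNS lookup: on each login it tries to resolve the connecting IP back to a domain name, and if DNS has no record for that IP, the login waits until the lookup times out.

Solution: in /etc/ssh/sshd_config,

set UseDNS no and restart sshd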
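
A minimal sketch of applying that setting from a script. It assumes a standard sshd_config where the first occurrence of a keyword wins, so the line is written at the top of the file rather than appended (an appended line could land inside a Match block). The function name set_usedns_no is hypothetical.

```shell
# set_usedns_no: rewrite the given sshd_config so that it contains exactly
# one "UseDNS no" line, placed first; other UseDNS lines (commented or not)
# are removed and everything else is kept as-is.
set_usedns_no() {
    conf="$1"
    tmp="$(mktemp)" || return 1
    {
        printf 'UseDNS no\n'
        grep -Ev '^[#[:space:]]*UseDNS([[:space:]]|$)' "$conf" || true
    } > "$tmp"
    # Overwrite in place so the original owner and permissions are kept.
    cat "$tmp" > "$conf" && rm -f "$tmp"
}

# Usage (as root), followed by a restart of the SSH daemon:
#   set_usedns_no /etc/ssh/sshd_config
#   systemctl restart sshd
```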

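6. /etc/hosts, /etc/resolv.conf and /etc/hostname are volatile

Description:

These three files in a container are not part of the image. They live under /var/lib/docker/containers/ on the host, so edits made inside the container are lost when it is restarted.

Solution:

Use the --add-host option of docker run to add a host-to-IP mapping to the container

Or append the entry at startup (IP address first, then the host name): echo "10.10.10.10 aaa.com" >> /etc/hosts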
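
The two approaches can be sketched as follows. The docker run form uses --add-host with a host:ip pair. The helper add_host_entry is a hypothetical name for a small function that appends an entry only when the host name is not already mapped, which keeps an entrypoint script from adding duplicates on every start.

```shell
# add_host_entry IP NAME [FILE]: append "IP NAME" to a hosts-format file
# unless NAME already appears as a host name on a non-comment line.
add_host_entry() {
    ip="$1"; name="$2"; file="${3:-/etc/hosts}"
    if awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage:
#   docker run --add-host aaa.com:10.10.10.10 ...    # at container creation
#   add_host_entry 10.10.10.10 aaa.com               # inside an entrypoint script
```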

7. Enabling Chinese locale support in a CentOS 7.2 container image

sudo localedef -c -f UTF-8 -i zh_CN zh_CN.utf8

export LC_ALL="zh_CN.UTF-8"

8. Container time is UTC, 8 hours off from the host

cp -a /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

9. overlayfs: Can't delete file moved from base layer to newly created dir even on ext4

This is caused by a compatibility problem between XFS, the newer file system that CentOS ships, and overlay. The fix is in kernel 4.4.6 and later (https://github.com/moby/moby/issues/9572).

Fixed in Linux 4.5, to be backported into the next 4.4.y and other stable branches. A simple test sequence is in the commit message (https://git.kernel.org/cgit/linux/kernel/git/torvalds/linux.git/commit/?id=45d11738969633ec07ca35d75d486bf2d8918df6)

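Solution:

1. Stop the intermediate services,

stop the containers (docker stop $(docker ps -qa))

systemctl stop docker

Back up the data under /srv, and check that nothing still has files open there: lsof | grep srv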

2. Check the disk partitions with fdisk -l and mount | grep srv

umount /dev/mapper/centos-srv

Format: mkfs.xfs -f -n ftype=1 /dev/mapper/centos-srv

Remount: mount /dev/mapper/centos-srv /srv/

Check that ftype is set to 1: xfs_info /srv | grep ftype

3. Restore the data to /srv

systemctl start docker

docker start $(docker ps -qa)

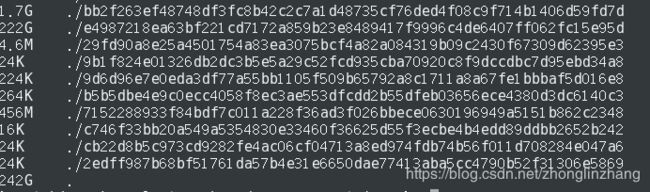
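
To decide whether a given volume needs this treatment at all, the ftype value can be pulled out of the xfs_info output in a script. This is a small sketch that assumes the usual xfs_info "naming" line containing ftype=0 or ftype=1. The function name xfs_ftype is hypothetical.

```shell
# xfs_ftype: read xfs_info output on stdin and print the ftype value
# (0 or 1). Prints nothing if no ftype field is present.
xfs_ftype() {
    sed -n 's/.*ftype=\([01]\).*/\1/p' | head -n 1
}

# Usage on a mounted XFS file system:
#   if [ "$(xfs_info /srv | xfs_ftype)" != "1" ]; then
#       echo "/srv lacks ftype=1, overlay is not safe on it"
#   fi
```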

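10. /var/lib/docker/overlay2 takes up a huge amount of space, hundreds of GB

Description: this is most likely data or logs produced by an application inside a container.

Solution:

Go into /var/lib/docker/overlay2 and run du -h --max-depth=1 to see which container takes the most space. To my surprise, one of them was using 450G.

A closer look showed that logs made up most of it. What kind of application logs this much? It was a container left over from debugging that kept spewing error logs. After docker kill and docker rm, more than 450G was freed at once.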
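
The du step is easier to read when the output is sorted. A minimal sketch, assuming GNU du and sort; the function name largest_dirs is made up, and the docker inspect template in the comment relies on the GraphDriver.Data fields that the overlay2 driver reports.

```shell
# largest_dirs DIR [N]: print the N largest entries one level below DIR
# (sizes in KiB, largest first). The total for DIR itself is included.
largest_dirs() {
    du -k --max-depth=1 "$1" 2>/dev/null | sort -rn | head -n "${2:-5}"
}

# Usage:
#   largest_dirs /var/lib/docker/overlay2 10
# To map an overlay2 directory back to a container, compare its name with:
#   docker inspect --format '{{.Name}} {{.GraphDriver.Data.MergedDir}}' $(docker ps -qa)
```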

11. Error starting daemon: error initializing graphdriver: driver not supported

Description: the Docker daemon fails to start with the overlay2 storage driver.

Solution: add the following configuration:

cat /etc/docker/daemon.json

{

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

Or pass it as daemon startup flags:

/usr/bin/dockerd --storage-driver=overlay2 --storage-opt overlay2.override_kernel_check=1

11. Changing the maximum number of open files for Docker containers

Editing /etc/security/limits.conf inside the Docker container has no effect.

Do the following on the host instead:

[lin@node1 ~]$ cat /etc/sysconfig/docker

ulimit -HSn 999999

Restart the Docker daemon process: systemctl restart docker

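12. /var/lib/docker/containers takes up too much space

Description: container logs are normally stored under /var/lib/docker/containers/container_id/, and the files ending in json.log (the application logs) can grow very large.

If the container is running and you delete its log with rm -rf, df -h shows that the disk space has not been released. On Linux and Unix, deleting a file with rm -rf or a file manager only unlinks it from the directory structure. If the file is still open (some process is using it), that process can keep reading and writing it, and the disk space stays allocated.

Solution 1: truncate each log in place instead of deleting it (cat /dev/null > the *-json.log file), for example with this script: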

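#!/bin/sh

# Truncate every container JSON log in place. The pattern is quoted so the shell does not expand it.

find /var/lib/docker/containers/ -name '*-json.log' | while IFS= read -r log

do

echo "clean logs : $log"

cat /dev/null > "$log"

done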
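
A variant of the same idea that only touches logs above a size threshold, so small logs that may still be useful are left alone. This is a sketch under assumptions: GNU find semantics for -size, and a hypothetical function name truncate_large_logs.

```shell
# truncate_large_logs DIR MIN_KB: truncate, in place, every *-json.log
# under DIR that is larger than MIN_KB KiB; smaller logs are untouched.
truncate_large_logs() {
    dir="$1"; min_kb="$2"
    find "$dir" -name '*-json.log' -size +"${min_kb}"k |
    while IFS= read -r log; do
        : > "$log"              # truncate without unlinking the open file
        echo "truncated: $log"
    done
}

# Usage (as root), for logs larger than roughly 1 GiB:
#   truncate_large_logs /var/lib/docker/containers 1048576
```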

Solution 2: cap the log size through the dockerd configuration in /etc/docker/daemon.json

{

"registry-mirrors": ["https://registry.docker-cn.com"],

"max-concurrent-downloads": 6,

"insecure-registries":["harbor.master.online.local", "harbor.local.com"],

"log-driver":"json-file",

"log-opts": {"max-size":"2G", "max-file":"10"}

}

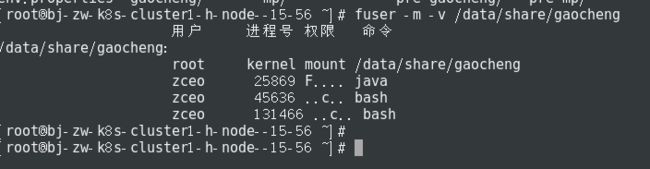

13. umount.nfs device is busy

Description:

[root@localhost /]# umount /data/share/gaocheng

umount.nfs: /data/share/gaocheng: device is busy

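Solution: list the processes that are using the mount point with fuser (for example fuser -mv /data/share/gaocheng), where:

- -v means verbose mode. Processes are shown in ps style, with the PID, USER, COMMAND and ACCESS fields

- -m names a file on a mounted file system or a mounted block device. All processes accessing that file system are listed.

If processes are holding the mount, kill them and then unmount again: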

- kill -9 25869 45636 131466

- umount /data/share/gaocheng
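
kill -9 gives the processes no chance to clean up. A gentler sketch, under the assumption that the PIDs have already been collected (for instance from fuser), sends SIGTERM first and falls back to SIGKILL only for processes that are still alive after a grace period. The function name stop_pids and the GRACE_SECONDS variable are made up for illustration.

```shell
# stop_pids PID...: ask each process to exit with SIGTERM, wait
# GRACE_SECONDS (default 2), then SIGKILL whatever is still running.
stop_pids() {
    for pid in "$@"; do
        kill -TERM "$pid" 2>/dev/null || true
    done
    sleep "${GRACE_SECONDS:-2}"
    for pid in "$@"; do
        if kill -0 "$pid" 2>/dev/null; then
            kill -KILL "$pid" 2>/dev/null || true
        fi
    done
}

# Usage with the PIDs from the example above:
#   stop_pids 25869 45636 131466 && umount /data/share/gaocheng
```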

14. docker hang

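Debugging: curl --unix-socket /var/run/docker.sock 'http://./debug/pprof/goroutine?debug=2'

The dump shows the stuck goroutines only in docker/container/state.go: they try to acquire the lock and simply never get it, so everything else ends up waiting. I have not yet found what is holding the lock.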

func (s *State) IsRunning() bool {

s.Lock()

res := s.Running

s.Unlock()

return res

}

goroutine 68266990 [semacquire, 1350 minutes]:

sync.runtime_SemacquireMutex(0xc421004e04, 0x11f0900)

/usr/local/go/src/runtime/sema.go:71 +0x3d

sync.(*Mutex).Lock(0xc421004e00)

/usr/local/go/src/sync/mutex.go:134 +0xee

github.com/docker/docker/container.(*State).IsRunning(0xc421004e00, 0xc42995c2b7)

/root/rpmbuild/BUILD/src/engine/.gopath/src/github.com/docker/docker/container/state.go:250 +0x2d

github.com/docker/docker/daemon.(*Daemon).ContainerStop(0xc420260000, 0xc42995c2b7, 0x40, 0xc421c3cac0, 0xc428235298, 0xc42e5fac01)

/root/rpmbuild/BUILD/src/engine/.gopath/src/github.com/docker/docker/daemon/stop.go:23 +0x84

github.com/docker/docker/api/server/router/container.(*containerRouter).postContainersStop(0xc420b4bfc0, 0x7f85ac36f560, 0xc42844d3e0, 0x38b7d00, 0xc42b8aaa80, 0xc42d85be00, 0xc42844d290, 0x2a4e3dd, 0x5)

/root/rpmbuild/BUILD/src/engine/.gopath/src/github.com/docker/docker/api/server/router/container/container_routes.go:186 +0xf0

github.com/docker/docker/api/server/router/container.(*containerRouter).(github.com/docker/docker/api/server/router/container.postContainersStop)-fm(0x7f85ac36f560, 0xc42844d3e0, 0x38b7d00, 0xc42b8aaa80, 0xc42d85be00, 0xc42844d290, 0x7f85ac36f560, 0xc42844d3e0)

/root/rpmbuild/BUILD/src/engine/.gopath/src/github.com/docker/docker/api/server/router/container/container.go:67 +0x69

github.com/docker/docker/api/server/middleware.ExperimentalMiddleware.WrapHandler.func1(0x7f85ac36f560, 0xc42844d3e0, 0x38b7d00, 0xc42b8aaa80, 0xc42d85be00, 0xc42844d290, 0x7f85ac36f560, 0xc42844d3e0)
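
Full goroutine dumps are long, so it helps to pull out just the goroutines that have been blocked for a long time. A minimal sketch, assuming the header format shown above ("goroutine ID [state, N minutes]:"); the function name long_blocked and the file name dump.txt are hypothetical.

```shell
# long_blocked [MIN]: read a goroutine dump on stdin and print
# "ID STATE MINUTES" for every goroutine blocked at least MIN minutes
# (default 60). Goroutines without a duration are skipped.
long_blocked() {
    min="${1:-60}"
    sed -n 's/^goroutine \([0-9]*\) \[\([^,]*\), \([0-9]*\) minutes]:$/\1 \2 \3/p' |
        awk -v min="$min" '$NF >= min'
}

# Usage:
#   curl --unix-socket /var/run/docker.sock 'http://./debug/pprof/goroutine?debug=2' > dump.txt
#   long_blocked 600 < dump.txt
```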

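15. Four fixes for Too many open files

(1) A single process has too many open file handles

Via “cat /proc/

(2) The operating system as a whole has too many open file handles

/proc/sys/fs/file-max” command can change this value dynamically; it can also be changed permanently by editing the "/etc/sysctl.conf" file

(3) systemd has put a limit on the process

LimitNOFILE=

(4) inotify has reached its limit

inotify is a monitoring mechanism that can watch for changes in the file system. It is governed by two kernel parameters: “fs.inotify.max_user_instances” and “fs.inotify.max_user_watches”. “fs.inotify.max_user_instances” is the maximum number of inotify instances each user can create, and “fs.inotify.max_user_watches” is the number of watches each user can have at the same time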
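
The four causes can each be checked from a shell. The sketch below is not from the referenced article. It assumes the standard Linux /proc/<pid>/limits layout, and the function name open_file_limits and the docker unit name in the comments are only examples.

```shell
# open_file_limits: read /proc/<pid>/limits-style text on stdin and
# print the soft and hard "Max open files" limits.
open_file_limits() {
    awk '/^Max open files/ { print $4, $5 }'
}

# One check per cause (left as comments so the block has no side effects):
#   open_file_limits < /proc/self/limits     # (1) per-process limit
#   cat /proc/sys/fs/file-nr                 # (2) allocated, unused, system-wide maximum
#   systemctl show docker -p LimitNOFILE     # (3) limit systemd applies to a unit
#   sysctl fs.inotify.max_user_instances fs.inotify.max_user_watches   # (4)
```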

Reference: Four fixes for Too many open files

https://bbs.huaweicloud.com/blogs/7c4c0324a5da11e89fc57ca23e93a89f

Reference: Setting the maximum number of open files on Linux: nofile, nr_open and file-max

https://www.cnblogs.com/zengkefu/p/5635153.html

16. Hard-deleting images on Docker Harbor

docker-compose stop

docker run -it --name gc --rm --volumes-from registry vmware/registry:2.6.2-photon garbage-collect --dry-run /etc/registry/config.yml

docker run -it --name gc --rm --volumes-from registry vmware/registry:2.6.2-photon garbage-collect /etc/registry/config.yml

docker-compose start

17. "failed to disable IPv6 forwarding for container's interface all" in Docker daemon logs

Issue

If IPv6 networking has been disabled in the kernel, log entries of the following format may be seen in the Docker daemon logs:

Aug 21 11:05:39 ucpworker-0 dockerd[16657]: time="2018-08-21T11:05:39Z" level=error msg="failed to disable IPv6 forwarding for container's interface all: open /proc/sys/net/ipv6/conf/all/disable_ipv6: no such file or directory"

Aug 21 11:05:39 ucpworker-0 dockerd[16657]: time="2018-08-21T11:05:39.599992614Z" level=warning msg="Failed to disable IPv6 on all interfaces on network namespace \"/var/run/docker/netns/de1d0ed4fae9\": reexec to set IPv6 failed: exit status 4"

Root Cause

These log entries are displayed when the Docker daemon fails to disable IPv6 forwarding on a container's interfaces, because IPv6 networking has been disabled at the kernel level (i.e. the ipv6.disable=1 parameter has been added to your kernel boot configuration).

In this situation, the log entries are expected and can be ignored.

Resolution

In a future release of the Docker engine the log level will be updated, so that these entries are only warning messages, as this does not indicate an actionable error case.

Reference: https://success.docker.com/article/failed-to-disable-ipv6-forwarding-for-containers-interface-all-error-in-docker-daemon-logs
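
To confirm that a host is in the situation described above, it is enough to look for the boot parameter on the kernel command line. A minimal sketch, assuming /proc/cmdline is a single space-separated line; the function name ipv6_disabled_on_cmdline is hypothetical.

```shell
# ipv6_disabled_on_cmdline: read a kernel command line on stdin and
# succeed (exit 0) if it contains the exact parameter ipv6.disable=1.
ipv6_disabled_on_cmdline() {
    tr -s ' ' '\n' | grep -qxF 'ipv6.disable=1'
}

# Usage:
#   if ipv6_disabled_on_cmdline < /proc/cmdline; then
#       echo "IPv6 is disabled at boot; these log entries are expected"
#   fi
```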

18. iptables -I FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

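For packets on the host that are destined for the docker0 bridge: if a packet belongs to a connection that is already established, it is accepted unconditionally and the Linux kernel delivers it over the original connection, that is, back into the Docker container.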

19. malformed module missing dot in first path element

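Go 1.13 modules require the first element of the path after import to follow domain name conventions, for example code.be.mingbai.com/tools/soa

go mod requires the import paths of all dependencies to begin with a domain name. When converting an existing project to Go 1.13 module mode, any path that does not begin with a domain name has to be changed.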

go mod init csi.storage.com/storage-csi
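
A quick pre-check for module paths before running go mod init can be sketched in shell. It tests only the condition the error message names, a dot in the first path element, and is not a full validation of Go module paths. The function name first_element_has_dot is made up.

```shell
# first_element_has_dot PATH: succeed if the first "/"-separated
# element of the module path contains a dot.
first_element_has_dot() {
    case "${1%%/*}" in
        *.*) return 0 ;;
        *)   return 1 ;;
    esac
}

# Usage:
#   first_element_has_dot csi.storage.com/storage-csi && go mod init csi.storage.com/storage-csi
```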

20. Error: “write /proc/self/attr/keycreate: permission denied”

Solution: vi /etc/selinux/config, change the SELINUX setting to disabled, then save and restart the service
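
To check what the configuration file currently says before and after the edit, the SELINUX value can be read back with a short function. This sketch assumes the usual KEY=value layout of /etc/selinux/config; the function name selinux_config_mode is hypothetical. A change to this file normally takes effect only after a reboot, while getenforce reports the mode that is active right now.

```shell
# selinux_config_mode [FILE]: print the SELINUX= value (enforcing,
# permissive or disabled) from an selinux config file. Comment lines
# and the SELINUXTYPE= line are ignored.
selinux_config_mode() {
    sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "${1:-/etc/selinux/config}" | tail -n 1
}

# Usage:
#   selinux_config_mode     # value that applies from the next boot
#   getenforce              # mode currently in effect
```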