Shakespeare-Style Text Generation

日萌社


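Learning Objectives

- Understand the text generation task and its dataset.

- Master the process of implementing text generation with a GRU model.

Task Description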

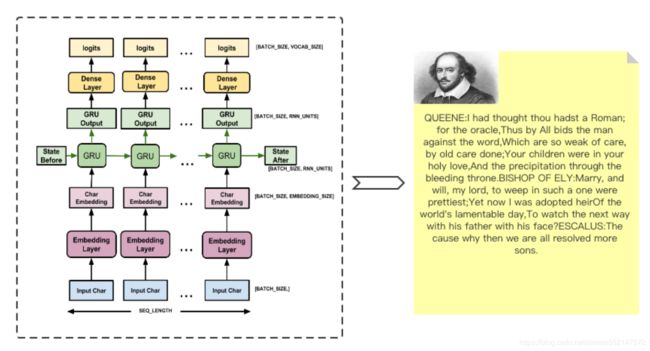

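- This is a text generation task built on a GRU model. Text generation is one of the most challenging tasks in NLP: given a piece of text or a few characters as input, the model predicts the text likely to come next, and we want that text to be grammatical and semantically coherent. This is still hard to achieve, so in practice most attempts target artistic text, as in the current case study, which uses Shakespeare's plays as the raw data.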

Dataset Description

- Dataset name: Shakespeare works dataset

- Download URL: https://storage.googleapis.com/download.tensorflow.org/data/shakespeare.txt

- Dataset preview:

QUEENE:

I had thought thou hadst a Roman; for the oracle,

Thus by All bids the man against the word,

Which are so weak of care, by old care done;

Your children were in your holy love,

And the precipitation through the bleeding throne.

BISHOP OF ELY:

Marry, and will, my lord, to weep in such a one were prettiest;

Yet now I was adopted heir

Of the world's lamentable day,

To watch the next way with his father with his face?

ESCALUS:

The cause why then we are all resolved more sons.

VOLUMNIA:

O, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, no, it is no sin it should be dead,

And love and pale as any will to that word.

QUEEN ELIZABETH:

But how long have I heard the soul for this world,

And show his hands of life be proved to stand.

PETRUCHIO:

I say he look'd on, if I must be content

To stay him from the fatal of our country's bliss.

His lordship pluck'd from this sentence then for prey,

And then let us twain, being the moon,

were she such a case as fills m

Steps for Implementing Text Generation with a GRU Model

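- Step 1: Download the dataset and preprocess the text

- Step 2: Build and train the model

- Step 3: Generate text with the model

Step 1: Download the dataset and preprocess the text

- Download the data: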

from __future__ import absolute_import, division, print_function, unicode_literals

import tensorflow as tf

# Print the TensorFlow version

print("Tensorflow Version:", tf.__version__)

import numpy as np

import os

import time

# Download the data from the given URL with tf.keras.utils.get_file, which returns the local path of the raw file

path_to_file = tf.keras.utils.get_file('shakespeare.txt', 'https://storage.googleapis.com/download.tensorflow.org/data/shakespeare.txt')

- Output:

Tensorflow Version: 2.1.0-rc2

Downloading data from https://storage.googleapis.com/download.tensorflow.org/data/shakespeare.txt

1122304/1115394 [==============================] - 0s 0us/step

- Read the data:

# Open the raw data file and read its text content

text = open(path_to_file, 'rb').read().decode(encoding='utf-8')

# Count the characters and show the first 250

print('Length of text: {} characters'.format(len(text)))

print(text[:250])

# Count the distinct characters in the text

vocab = sorted(set(text))

print('{} unique characters'.format(len(vocab)))

- Output:

Length of text: 1115394 characters

First Citizen:

Before we proceed any further, hear me speak.

All:

Speak, speak.

First Citizen:

You are all resolved rather to die than to famish?

All:

Resolved. resolved.

First Citizen:

First, you know Caius Marcius is chief enemy to the people.

65 unique characters

- Map the text to integers:

# Build two lookup tables: character -> integer and integer -> character

char2idx = {u:i for i, u in enumerate(vocab)}

idx2char = np.array(vocab)

# Represent the whole text using the character-to-integer mapping

text_as_int = np.array([char2idx[c] for c in text])

# Show part of the mapping table

print('{')

for char, _ in zip(char2idx, range(20)):

    print(' {:4s}: {:3d},'.format(repr(char), char2idx[char]))

print(' ...\n}')

- Output:

{

'\n': 0,

' ' : 1,

'!' : 2,

'$' : 3,

'&' : 4,

"'" : 5,

',' : 6,

'-' : 7,

'.' : 8,

'3' : 9,

':' : 10,

';' : 11,

'?' : 12,

'A' : 13,

'B' : 14,

'C' : 15,

'D' : 16,

'E' : 17,

'F' : 18,

'G' : 19,

...

}

# Show the first 13 characters of the corpus and their integer mapping

print('{} ---- characters mapped to int ---- > {}'.format(repr(text[:13]), text_as_int[:13]))

- Output:

'First Citizen' ---- characters mapped to int ---- > [18 47 56 57 58 1 15 47 58 47 64 43 52]

- Generate the training data

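- Training data definition:

- For the raw text, we choose an input sequence length seq_length by hand. Each input sequence has a target sequence of the same length, shifted one character to the right. For example, with seq_length = 4 and the text "hello", the input sequence is "hell" and the target sequence is "ello".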
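The windowing just described can be sketched in plain Python, without tf.data. `make_examples` is a hypothetical helper written for illustration: it cuts the text into chunks of seq_length + 1 characters, drops an incomplete final chunk (as `batch(seq_length+1, drop_remainder=True)` does in the code below), and splits each chunk with the same slicing as `split_input_target`. The last lines apply the same arithmetic to the 1,115,394-character corpus reported in this article.

```python
def make_examples(text, seq_length):
    """Cut text into (input, target) pairs; the target is the input shifted by one."""
    chunk_len = seq_length + 1              # one extra character for the shift
    n_chunks = len(text) // chunk_len       # an incomplete final chunk is dropped
    examples = []
    for k in range(n_chunks):
        chunk = text[k * chunk_len:(k + 1) * chunk_len]
        examples.append((chunk[:-1], chunk[1:]))  # same slicing as split_input_target
    return examples

print(make_examples("hello", 4))        # [('hell', 'ello')]
print(make_examples("hello world", 4))  # [('hell', 'ello'), (' wor', 'worl')]

# Same arithmetic for the Shakespeare corpus (1,115,394 characters, seq_length = 100)
num_sequences = 1115394 // (100 + 1)
print(num_sequences)                    # 11043
```

Each sequence consumes 101 characters, not 100, which is why the count divides by seq_length + 1.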

- Building the training data:

# Set the input sequence length

seq_length = 100

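# Number of training sequences: each sequence consumes seq_length + 1 characters (the extra one provides the shifted target)

examples_per_epoch = len(text)//(seq_length+1)

# Convert the integer-encoded text into a tf.data.Dataset for further processing

char_dataset = tf.data.Dataset.from_tensor_slices(text_as_int)

# Show the first 5 characters using the dataset's take method and the lookup table

for i in char_dataset.take(5):

    print(idx2char[i.numpy()])

- Output: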

F

i

r

s

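t

# Use the dataset's batch method to cut the text into chunks of seq_length + 1 characters (one extra position for the one-character shift)

# drop_remainder=True drops the final chunk if it is shorter than seq_length + 1

sequences = char_dataset.batch(seq_length+1, drop_remainder=True)

# Show the text of the first 5 chunks

for item in sequences.take(5):

    print(repr(''.join(idx2char[item.numpy()])))

- Output: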

'First Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou '

'are all resolved rather to die than to famish?\n\nAll:\nResolved. resolved.\n\nFirst Citizen:\nFirst, you k'

"now Caius Marcius is chief enemy to the people.\n\nAll:\nWe know't, we know't.\n\nFirst Citizen:\nLet us ki"

"ll him, and we'll have corn at our own price.\nIs't a verdict?\n\nAll:\nNo more talking on't; let it be d"

'one: away, away!\n\nSecond Citizen:\nOne word, good citizens.\n\nFirst Citizen:\nWe are accounted poor citi'

def split_input_target(chunk):

    """Split a chunk into an input sequence and a target sequence."""

    # The first 100 characters form the input sequence; the characters from the second to the last form the target sequence

    input_text = chunk[:-1]

    target_text = chunk[1:]

    return input_text, target_text

# Split every chunk by applying the function with map

dataset = sequences.map(split_input_target)

# Show the result for the first example

for input_example, target_example in dataset.take(1):

    print('Input data: ', repr(''.join(idx2char[input_example.numpy()])))

    print('Target data:', repr(''.join(idx2char[target_example.numpy()])))

- Output:

Input data: 'First Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou'

Target data: 'irst Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou '

# Look at the input and expected output at each time step fed to the model (first five steps)

# Loop over the characters and print the input and expected output for each time step

for i, (input_idx, target_idx) in enumerate(zip(input_example[:5], target_example[:5])):

    print("Step {:4d}".format(i))

    print(" input: {} ({:s})".format(input_idx, repr(idx2char[input_idx])))

    print(" expected output: {} ({:s})".format(target_idx, repr(idx2char[target_idx])))

- Output:

Step 0

input: 18 ('F')

expected output: 47 ('i')

Step 1

input: 47 ('i')

expected output: 56 ('r')

Step 2

input: 56 ('r')

expected output: 57 ('s')

Step 3

input: 57 ('s')

expected output: 58 ('t')

Step 4

input: 58 ('t')

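expected output: 1 (' ')

- Create the batches:

# Set the batch size to 64

BATCH_SIZE = 64

# Buffer size used when shuffling the dataset

# A larger buffer shuffles the data more thoroughly but needs more memory

BUFFER_SIZE = 10000

# Shuffle the data and group it into batches

dataset = dataset.shuffle(BUFFER_SIZE).batch(BATCH_SIZE, drop_remainder=True)

# Print the dataset object to inspect the tensor shapes

print(dataset)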
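The shuffle buffer can be pictured with a small pure-Python sketch. `buffered_shuffle` is a hypothetical helper that follows the behavior described in the tf.data documentation: fill a buffer with buffer_size elements, emit a randomly chosen one, and replace it with the next input element. With buffer_size=1 nothing moves, and small buffers only reorder elements locally; BUFFER_SIZE = 10000 covers most of the 11,043 sequences here. The last lines count the full batches of 64 that `batch(BATCH_SIZE, drop_remainder=True)` keeps, which lines up with the 172 steps per epoch in the model.fit log later in this article.

```python
import random

def buffered_shuffle(items, buffer_size, seed=0):
    """Approximate a shuffle buffer: emit a random buffered element, then refill its slot."""
    rng = random.Random(seed)
    it = iter(items)
    buffer = []
    for x in it:                        # fill the buffer first
        buffer.append(x)
        if len(buffer) == buffer_size:
            break
    for x in it:                        # then emit one random element per new input
        j = rng.randrange(len(buffer))
        yield buffer[j]
        buffer[j] = x
    rng.shuffle(buffer)                 # input exhausted: emit what is left in random order
    yield from buffer

print(list(buffered_shuffle(range(10), 1)))   # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(list(buffered_shuffle(range(10), 3)))   # only locally mixed

num_sequences, BATCH_SIZE = 11043, 64
print(num_sequences // BATCH_SIZE, num_sequences % BATCH_SIZE)  # 172 35
```

The 35 leftover sequences are the ones `drop_remainder=True` discards each epoch.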

Step 2: Build and train the model

# Vocabulary size

vocab_size = len(vocab)

# Embedding dimension

embedding_dim = 256

# Number of hidden units in the GRU layer

rnn_units = 1024

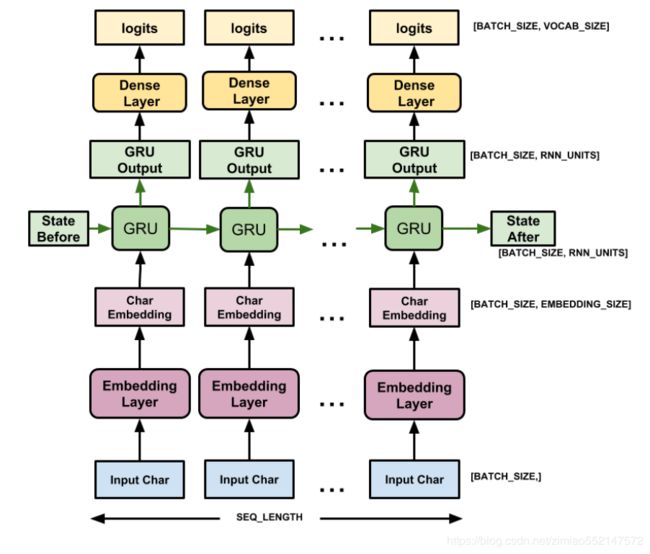

# The model has three layers: an Embedding input layer, a GRU middle layer, and a fully connected (Dense) output layer

def build_model(vocab_size, embedding_dim, rnn_units, batch_size):

    """Build the model."""

    # Define the model with tf.keras.Sequential

    # return_sequences=True makes the GRU return its output at every time step, not only at the last one

    # stateful=True keeps the final state of each batch as the initial state for the next batch

    # recurrent_initializer='glorot_uniform' initializes the GRU's recurrent kernel from the Glorot (Xavier) uniform distribution

    # The final Dense layer outputs one unnormalized score (logit) per possible character; a softmax over these gives the probability distribution

    model = tf.keras.Sequential([

        tf.keras.layers.Embedding(vocab_size, embedding_dim,

                                  batch_input_shape=[batch_size, None]),

        tf.keras.layers.GRU(rnn_units,

                            return_sequences=True,

                            stateful=True,

                            recurrent_initializer='glorot_uniform'),

        tf.keras.layers.Dense(vocab_size)

    ])

    return model

# Build the model with the hyperparameters

model = build_model(

    vocab_size=len(vocab),

    embedding_dim=embedding_dim,

    rnn_units=rnn_units,

    batch_size=BATCH_SIZE)

- Model architecture diagram:

- Try the model before training:

# Feed one batch of data through the model

# and check that the output shape matches expectations

for input_example_batch, target_example_batch in dataset.take(1):

    example_batch_predictions = model(input_example_batch)

    print(example_batch_predictions.shape, "# (batch_size, sequence_length, vocab_size)")

- Output

(64, 100, 65) # (batch_size, sequence_length, vocab_size)

# Show the model's parameters

model.summary()

- Output

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

embedding (Embedding) (64, None, 256) 16640

_________________________________________________________________

gru (GRU) (64, None, 1024) 3938304

_________________________________________________________________

dense (Dense) (64, None, 65) 66625

=================================================================

Total params: 4,021,569

Trainable params: 4,021,569

Non-trainable params: 0

_________________________________________________________________
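The parameter counts in the summary above can be checked by hand. This sketch assumes the TF 2.x Keras GRU default reset_after=True, under which each of the three gates has an input kernel, a recurrent kernel, and two bias vectors.

```python
vocab_size, embedding_dim, rnn_units = 65, 256, 1024

# Embedding: one embedding_dim-sized vector per character
embedding_params = vocab_size * embedding_dim

# GRU with reset_after=True: 3 gates (update, reset, candidate), each with an input kernel,
# a recurrent kernel, and two bias vectors (one for the input part, one for the recurrent part)
gru_params = 3 * (embedding_dim * rnn_units + rnn_units * rnn_units + 2 * rnn_units)

# Dense: a weight matrix plus one bias per output character
dense_params = rnn_units * vocab_size + vocab_size

print(embedding_params, gru_params, dense_params)     # 16640 3938304 66625
print(embedding_params + gru_params + dense_params)   # 4021569
```

Almost all of the roughly 4 million parameters sit in the GRU's 1024 × 1024 recurrent kernels.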

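- Next we introduce a new technique that replaces the familiar greedy approach of taking the most likely predicted ID from the output distribution.

- random categorical:

- In theory, if the model were accurate enough, we would only need to choose the index with the highest probability in the distribution; this is the greedy approach. In practice the model's predictions are imperfect, and always choosing the most probable value easily gets stuck in repetitive loops. Instead, each value's probability in the distribution is used as its chance of being selected, so every value in the distribution can be picked. TensorFlow implements this with tf.random.categorical.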
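A NumPy sketch contrasting greedy decoding with sampling. `sample_categorical` is a hypothetical stand-in that follows the documented contract of tf.random.categorical (logits of shape [batch_size, num_classes] in, integer samples of shape [batch_size, num_samples] out); it is not TensorFlow's implementation. Its temperature argument mirrors the `predictions / temperature` step in `generate_text` later in this article.

```python
import numpy as np

def softmax(logits):
    """Softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def greedy(logits):
    """Greedy decoding: the index of the largest score in each row."""
    return logits.argmax(axis=-1)

def sample_categorical(logits, num_samples, rng, temperature=1.0):
    """Draw num_samples indices per row, each with probability softmax(logits / temperature).
    Shapes: [batch_size, num_classes] -> [batch_size, num_samples]."""
    probs = softmax(np.asarray(logits, dtype=np.float64) / temperature)
    return np.stack([rng.choice(probs.shape[1], size=num_samples, p=row) for row in probs])

rng = np.random.default_rng(0)
logits = np.array([[2.0, 1.0, 0.5]])  # hypothetical scores for a 3-character vocabulary

print(greedy(logits))                  # [0], and the same answer on every call
draws = sample_categorical(logits, 1000, rng)
print(draws.shape)                     # (1, 1000)
print(np.bincount(draws[0], minlength=3) / 1000)   # close to softmax(logits)[0]

# Lower temperature sharpens the distribution, higher temperature flattens it
print(softmax(logits / 0.5)[0, 0], softmax(logits)[0, 0], softmax(logits / 2.0)[0, 0])

# Same shape flow as the article: (100, 65) scores -> (100, 1) samples -> squeeze -> (100,)
preds = rng.normal(size=(100, 65))
print(sample_categorical(preds, 1, rng).squeeze(axis=-1).shape)   # (100,)
```

Greedy decoding is deterministic, so a model that favors a loop keeps repeating it; sampling occasionally picks a less likely character and can break out.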

# Sample with random categorical

sampled_indices = tf.random.categorical(example_batch_predictions[0], num_samples=1)

# squeeze removes the trailing dimension of size 1

sampled_indices = tf.squeeze(sampled_indices, axis=-1).numpy()

print(sampled_indices)

- Output

array([63, 38, 7, 1, 59, 11, 25, 46, 36, 46, 59, 43, 52, 21, 48, 41, 39,

53, 59, 6, 63, 13, 1, 39, 3, 18, 30, 23, 29, 29, 38, 37, 42, 10,

37, 7, 63, 25, 37, 55, 62, 54, 8, 13, 25, 56, 50, 64, 48, 62, 34,

33, 25, 48, 39, 38, 3, 16, 25, 37, 31, 19, 1, 21, 30, 18, 2, 6,

0, 55, 56, 13, 5, 63, 44, 27, 12, 34, 54, 30, 38, 36, 24, 43, 62,

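61, 23, 14, 43, 19, 30, 58, 6, 21, 56, 6, 54, 48, 2, 54])

# Also map the input back to text

print("Input: \n", repr("".join(idx2char[input_example_batch[0]])))

print()

# Map the sampled indices to text

# Before training, the predicted text has no discernible pattern

print("Next Char Predictions: \n", repr("".join(idx2char[sampled_indices])))

- Output

Input:

"ght me craft\nTo counterfeit oppression of such grief\nThat words seem'd buried in my sorrow's grave.\n"

Next Char Predictions:

"yZ- u;MhXhuenIjcaou,yA a$FRKQQZYd:Y-yMYqxp.AMrlzjxVUMjaZ$DMYSG IRF!,\nqrA'yfO?VpRZXLexwKBeGRt,Ir,pj!p"

- Add the loss function

- The generation problem can now be treated as a standard classification problem: given the RNN state and the input at the current time step, predict the class of the next character (selecting exactly one from the distribution). The number of classes equals the number of distinct characters, and each target is a single class index, so the labels are sparse.

- Sparse labels: when there are many classes (hundreds to thousands) and each sample belongs to only a few of them, as in single-label multi-class classification where each sample has exactly one class, the one-hot label matrix is almost all zeros, i.e. sparse. Storing each label as an integer class index instead is what the "sparse" loss variants expect.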
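A NumPy check of what the sparse loss computes, written for illustration rather than taken from Keras. Integer labels and one-hot labels give the same per-position cross-entropy, and a model that assigns equal scores to all 65 characters has loss ln 65 ≈ 4.174, which makes the untrained model's scalar_loss of about 4.175 reported below a reasonable sanity check.

```python
import numpy as np

def log_softmax(logits):
    """log(softmax(logits)) over the last axis, computed stably."""
    z = logits - logits.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def sparse_ce_from_logits(labels, logits):
    """labels: integer class ids, shape (...); logits: shape (..., num_classes)."""
    logp = log_softmax(logits)
    # pick log p[label] at every position
    return -np.take_along_axis(logp, labels[..., None], axis=-1)[..., 0]

def categorical_ce_from_logits(one_hot, logits):
    """one_hot: dense one-hot labels with the same shape as logits."""
    return -(one_hot * log_softmax(logits)).sum(axis=-1)

vocab_size = 65
rng = np.random.default_rng(0)
labels = rng.integers(0, vocab_size, size=(2, 5))   # (batch_size, sequence_length)
logits = rng.normal(size=(2, 5, vocab_size))        # (batch_size, sequence_length, vocab_size)
one_hot = np.eye(vocab_size)[labels]                # the same labels, one-hot encoded

# Sparse (integer) labels and one-hot labels give the same per-position loss
print(np.allclose(sparse_ce_from_logits(labels, logits),
                  categorical_ce_from_logits(one_hot, logits)))   # True

# Equal scores for all 65 characters (an uninformed model) give a loss of ln(65)
uniform_loss = sparse_ce_from_logits(labels, np.zeros_like(logits)).mean()
print(round(float(uniform_loss), 4), round(float(np.log(vocab_size)), 4))   # 4.1744 4.1744
```

An initial loss far above ln(vocab_size) would suggest something is off, for example passing probabilities where logits are expected.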

# Use Keras's built-in sparse categorical cross-entropy loss (for labels given as integer class indices)

def loss(labels, logits):

    return tf.keras.losses.sparse_categorical_crossentropy(labels, logits, from_logits=True)

# Apply the loss function

example_batch_loss = loss(target_example_batch, example_batch_predictions)

print("Prediction shape: ", example_batch_predictions.shape, " # (batch_size, sequence_length, vocab_size)")

print("scalar_loss: ", example_batch_loss.numpy().mean())

- Output

Prediction shape: (64, 100, 65) # (batch_size, sequence_length, vocab_size)

scalar_loss: 4.175118

- Add the optimizer

# Use 'adam' as the optimizer

model.compile(optimizer='adam', loss=loss)

- Configure checkpoints:

# Directory where checkpoints are saved

checkpoint_dir = './training_checkpoints'

# Checkpoint file name

checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt_{epoch}")

# Create the callback that saves checkpoints

checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(

    filepath=checkpoint_prefix,

    save_weights_only=True)

- Train the model and print the log:

EPOCHS = 10

history = model.fit(dataset, epochs=EPOCHS, callbacks=[checkpoint_callback])

- Output

Epoch 1/10

172/172 [==============================] - 8s 49ms/step - loss: 2.6956

Epoch 2/10

172/172 [==============================] - 7s 39ms/step - loss: 1.9793

Epoch 3/10

172/172 [==============================] - 7s 38ms/step - loss: 1.7066

Epoch 4/10

172/172 [==============================] - 6s 37ms/step - loss: 1.5544

Epoch 5/10

172/172 [==============================] - 7s 38ms/step - loss: 1.4630

Epoch 6/10

172/172 [==============================] - 6s 38ms/step - loss: 1.4019

Epoch 7/10

172/172 [==============================] - 6s 37ms/step - loss: 1.3562

Epoch 8/10

172/172 [==============================] - 7s 38ms/step - loss: 1.3177

Epoch 9/10

172/172 [==============================] - 6s 37ms/step - loss: 1.2836

Epoch 10/10

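172/172 [==============================] - 6s 37ms/step - loss: 1.2513

Step 3: Generate text with the model

- Restore the model:

# Rebuild the model architecture, this time with batch size 1

model = build_model(vocab_size, embedding_dim, rnn_units, batch_size=1)

# Load the trained parameters from the latest checkpoint

model.load_weights(tf.train.latest_checkpoint(checkpoint_dir))

- Build the generation function

def generate_text(model, start_string):

    """

    :param model: the trained model

    :param start_string: any starting string

    """

    # Number of characters to generate

    num_generate = 1000

    # Convert the start string to integers (vectorize it)

    input_eval = [char2idx[s] for s in start_string]

    # Add a batch dimension to match the model's input shape

    input_eval = tf.expand_dims(input_eval, 0)

    # Empty list for the generated characters

    text_generated = []

    # The "temperature" divides the logits before they reach tf.random.categorical:

    # values below 1 sharpen the distribution (more predictable text), values above 1 flatten it (more surprising text)

    temperature = 1.0

    # Reset the GRU's hidden state (the trained weights are unaffected)

    model.reset_states()

    # Generation loop

    for i in range(num_generate):

        # Run the model

        predictions = model(input_eval)

        # Remove the batch dimension

        predictions = tf.squeeze(predictions, 0)

        # Apply the temperature and sample the next character index with tf.random.categorical;

        # [-1, 0] keeps the sample drawn for the last time step

        predictions = predictions / temperature

        predicted_id = tf.random.categorical(predictions, num_samples=1)[-1, 0].numpy()

        # Feed the predicted character back in (with a batch dimension) as the next input

        input_eval = tf.expand_dims([predicted_id], 0)

        # Map the prediction to a character and store it

        text_generated.append(idx2char[predicted_id])

    # Finally, join the start string and the generated characters

    return (start_string + ''.join(text_generated))

- Call it:

print(generate_text(model, start_string=u"ROMEO: "))

- Output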

ROMEO: it may be see, I say.

Elong where I have sea loved for such heart

As of all desperate in your colls?

On how much to purwed esumptrues as we,

But taker appearing our great Isabel,;

Of your brother's needs.

I cannot but one hour, by nimwo and ribs

After 't? O Pedur, break our manory,

The shadot bestering eyes write; onfility;

Indeed I am possips And feated with others and throw it?

CAPULET:

O, not the ut with mine own sort.

But, with your souls, sir, well we would he,

And videwith the sungesoy begins, revell;

Much it in secart.

PROSPERO:

Villain, I darry record;

In sea--lodies, nor that I do I were stir,

You appointed with that sed their o tailor and hope left fear'd,

I so; that your looks stand up,

Comes I truly see this last weok not the

sul us.

CAMILLO:

You did and ever sea,

Into these hours: awake! Ro with mine enemies,

Were werx'd in everlawacted man been to alter

As Lewis could smile to his.

Farthus:

Marry! I'll do lose a man see me

To no drinking often hat back on an illing mo

A More Advanced Approach

* To gain more control over the training process, we use the custom training loop recommended for TF 2.0.

- Build the model and the training function

# Build the model

model = build_model(

    vocab_size=len(vocab),

    embedding_dim=embedding_dim,

    rnn_units=rnn_units,

    batch_size=BATCH_SIZE)

# Choose the optimizer

optimizer = tf.keras.optimizers.Adam()

# Train with a function decorated with @tf.function

@tf.function

def train_step(inp, target):

    """

    :param inp: model input

    :param target: labels corresponding to the input

    """

    # Open a gradient tape to record operations

    with tf.GradientTape() as tape:

        # Run the model to get predictions

        predictions = model(inp)

        # Compute the mean loss with sparse_categorical_crossentropy

        loss = tf.reduce_mean(

            tf.keras.losses.sparse_categorical_crossentropy(

                target, predictions, from_logits=True))

    # Use the tape to compute gradients for all trainable parameters

    grads = tape.gradient(loss, model.trainable_variables)

    # Update the parameters with the gradients and the optimizer

    optimizer.apply_gradients(zip(grads, model.trainable_variables))

    # Return the mean loss

    return loss

- Train:

# Number of epochs

EPOCHS = 10

# Epoch loop

for epoch in range(EPOCHS):

    # Record the start time

    start = time.time()

    # Reset the GRU's hidden state at the start of every epoch

    model.reset_states()

    # Batch loop

    for (batch_n, (inp, target)) in enumerate(dataset):

        # Run one training step and get the batch loss

        loss = train_step(inp, target)

        # Every 100 batches, print the epoch, batch number, and loss

        if batch_n % 100 == 0:

            template = 'Epoch {} Batch {} Loss {}'

            print(template.format(epoch+1, batch_n, loss))

    # Save a checkpoint every 5 epochs

    if (epoch + 1) % 5 == 0:

        model.save_weights(checkpoint_prefix.format(epoch=epoch))

    # Print the epoch, the current loss, and the time taken

    print('Epoch {} Loss {:.4f}'.format(epoch+1, loss))

    print('Time taken for 1 epoch {} sec\n'.format(time.time() - start))

# Save the final checkpoint

model.save_weights(checkpoint_prefix.format(epoch=epoch))

- Output

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer.iter

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer.beta_1

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer.beta_2

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer.decay

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer.learning_rate

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'm' for (root).layer_with_weights-0.embeddings

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'm' for (root).layer_with_weights-2.kernel

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'm' for (root).layer_with_weights-2.bias

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'm' for (root).layer_with_weights-1.cell.kernel

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'm' for (root).layer_with_weights-1.cell.recurrent_kernel

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'm' for (root).layer_with_weights-1.cell.bias

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'v' for (root).layer_with_weights-0.embeddings

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'v' for (root).layer_with_weights-2.kernel

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'v' for (root).layer_with_weights-2.bias

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'v' for (root).layer_with_weights-1.cell.kernel

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'v' for (root).layer_with_weights-1.cell.recurrent_kernel

WARNING:tensorflow:Unresolved object in checkpoint: (root).optimizer's state 'v' for (root).layer_with_weights-1.cell.bias

WARNING:tensorflow:A checkpoint was restored (e.g. tf.train.Checkpoint.restore or tf.keras.Model.load_weights) but not all checkpointed values were used. See above for specific issues. Use expect_partial() on the load status object, e.g. tf.train.Checkpoint.restore(...).expect_partial(), to silence these warnings, or use assert_consumed() to make the check explicit. See https://www.tensorflow.org/alpha/guide/checkpoints#loading_mechanics for details.

Epoch 1 Batch 0 Loss 4.175624370574951

Epoch 1 Batch 100 Loss 2.3564367294311523

Epoch 1 Loss 2.1518

Time taken for 1 epoch 7.982784032821655 sec

Epoch 2 Batch 0 Loss 2.163119316101074

Epoch 2 Batch 100 Loss 1.9472384452819824

Epoch 2 Loss 1.8479

Time taken for 1 epoch 5.905952215194702 sec

Epoch 3 Batch 0 Loss 1.7995233535766602

Epoch 3 Batch 100 Loss 1.7234388589859009

Epoch 3 Loss 1.6408

Time taken for 1 epoch 6.035773515701294 sec

Epoch 4 Batch 0 Loss 1.5643184185028076

Epoch 4 Batch 100 Loss 1.5031523704528809

Epoch 4 Loss 1.4930

Time taken for 1 epoch 5.940794944763184 sec

Epoch 5 Batch 0 Loss 1.460329532623291

Epoch 5 Batch 100 Loss 1.4455183744430542

Epoch 5 Loss 1.4024

Time taken for 1 epoch 5.9728288650512695 sec

Epoch 6 Batch 0 Loss 1.3865368366241455

Epoch 6 Batch 100 Loss 1.4166384935379028

Epoch 6 Loss 1.4086

Time taken for 1 epoch 5.951516151428223 sec

Epoch 7 Batch 0 Loss 1.3148362636566162

Epoch 7 Batch 100 Loss 1.3581626415252686

Epoch 7 Loss 1.3357

Time taken for 1 epoch 5.936840057373047 sec

Epoch 8 Batch 0 Loss 1.2742923498153687

Epoch 8 Batch 100 Loss 1.2792794704437256

Epoch 8 Loss 1.3340

Time taken for 1 epoch 6.054928302764893 sec

Epoch 9 Batch 0 Loss 1.2506366968154907

Epoch 9 Batch 100 Loss 1.2806147336959839

Epoch 9 Loss 1.2853

Time taken for 1 epoch 6.035765886306763 sec

Epoch 10 Batch 0 Loss 1.2073233127593994

Epoch 10 Batch 100 Loss 1.2544529438018799

Epoch 10 Loss 1.2721

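Time taken for 1 epoch 6.2950661182403564 sec

"""

Task description

This is a text generation task built on a GRU model. Text generation is one of the most challenging tasks in NLP.

Given a piece of text or a few characters as input, the model predicts the text likely to come next; we want that text to be grammatical and semantically coherent.

This is still hard to achieve, so in practice most attempts target artistic text,

as in the current case study, which uses Shakespeare's plays as the raw data.

Steps for implementing text generation with a GRU model

Step 1: Download the dataset and preprocess the text

Step 2: Build and train the model

Step 3: Generate text with the model

"""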

#-----------------------------------Step 1: Download the dataset and preprocess the text--------------------------#

from __future__ import absolute_import, division, print_function, unicode_literals

import tensorflow as tf

# Print the TensorFlow version

# print("Tensorflow Version:", tf.__version__)

import numpy as np

import os

import time

# Download the data from the given URL with tf.keras.utils.get_file, which returns the local path of the raw file

# path_to_file = tf.keras.utils.get_file('shakespeare.txt', 'https://storage.googleapis.com/download.tensorflow.org/data/shakespeare.txt')

path_to_file = './shakespeare.txt'

# path_to_file = './jielun_song.txt'  # Jay Chou lyrics file

# Read the data:

# Open the raw data file and read its text content

text = open(path_to_file, 'rb').read().decode(encoding='utf-8')

# Count the characters and show the first 5

# print('Length of text: {} characters'.format(len(text)))  # 1115394 characters in the file

# print(text[:5])  # First

# Count the distinct characters in the text

vocab = sorted(set(text))

#vocab ['\n', ' ', '!', '$', '&', "'", ',', '-', '.', '3', ':', ';', '?', 'A', 'B', 'C', 'D', 'E', 'F',

# 'G', 'H', 'I', 'J', 'K', 'L', 'M', 'N', 'O', 'P', 'Q', 'R', 'S', 'T', 'U', 'V', 'W', 'X', 'Y', 'Z',

# 'a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'j', 'k', 'l', 'm', 'n', 'o', 'p', 'q', 'r', 's', 't', 'u', 'v', 'w', 'x', 'y', 'z']

# print("vocab",vocab)

# print('{} unique characters'.format(len(vocab)))  # 65 distinct characters

# Map the text to integers:

# Build two lookup tables: character -> integer and integer -> character

char2idx = {u: i for i, u in enumerate(vocab)}  # key: a distinct character; value: its index in vocab

#char2idx {'\n': 0, ' ': 1, '!': 2, '$': 3, '&': 4, "'": 5, ',': 6, '-': 7, '.': 8, '3': 9, ':': 10, ';': 11,

# '?': 12, 'A': 13, 'B': 14, 'C': 15, 'D': 16, 'E': 17, 'F': 18, 'G': 19, 'H': 20, 'I': 21, 'J': 22, 'K': 23,

# 'L': 24, 'M': 25, 'N': 26, 'O': 27, 'P': 28, 'Q': 29, 'R': 30, 'S': 31, 'T': 32, 'U': 33, 'V': 34, 'W': 35,

# 'X': 36, 'Y': 37, 'Z': 38, 'a': 39, 'b': 40, 'c': 41, 'd': 42, 'e': 43, 'f': 44, 'g': 45, 'h': 46, 'i': 47,

# 'j': 48, 'k': 49, 'l': 50, 'm': 51, 'n': 52, 'o': 53, 'p': 54, 'q': 55, 'r': 56, 's': 57, 't': 58, 'u': 59,

# 'v': 60, 'w': 61, 'x': 62, 'y': 63, 'z': 64}

# print("char2idx",char2idx)

idx2char = np.array(vocab)

#['\n' ' ' '!' '$' '&' "'" ',' '-' '.' '3' ':' ';' '?' 'A' 'B' 'C' 'D' 'E'

# 'F' 'G' 'H' 'I' 'J' 'K' 'L' 'M' 'N' 'O' 'P' 'Q' 'R' 'S' 'T' 'U' 'V' 'W'

# 'X' 'Y' 'Z' 'a' 'b' 'c' 'd' 'e' 'f' 'g' 'h' 'i' 'j' 'k' 'l' 'm' 'n' 'o'

# 'p' 'q' 'r' 's' 't' 'u' 'v' 'w' 'x' 'y' 'z']

# print("idx2char",idx2char)

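# text_as_int: every character of the full text converted to its index

# Represent the whole text using the character-to-integer mapping

text_as_int = np.array([char2idx[c] for c in text])

# Show part of the mapping table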

# print('{')

# for char,_ in zip(char2idx, range(20)):

# print(' {:4s}: {:3d},'.format(repr(char), char2idx[char]))

# print(' ...\n}')

# Show the first 5 characters of the corpus and their integer mapping

# print ('{} ---- characters mapped to int ---- > {}'.format(repr(text[:5]), text_as_int[:5]))

#'First' ---- characters mapped to int ---- > [18 47 56 57 58]
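The lookup `char2idx[c]` raises a KeyError for any character outside the vocabulary. That matters when swapping in another corpus, such as the commented-out lyrics file above, or when passing a start_string to the generate_text function in the first part of this article. A hypothetical `encode` helper that reports every unknown character up front might look like this; `build_vocab` and `decode` are likewise illustrative names, and the tiny vocabulary is built from the string "First Citizen:" only.

```python
def build_vocab(text):
    """Sorted distinct characters and a character -> index table, as in the article."""
    vocab = sorted(set(text))
    return vocab, {c: i for i, c in enumerate(vocab)}

def encode(s, char2idx):
    """Map characters to indices, reporting every unknown character at once."""
    unknown = sorted(set(s) - set(char2idx))
    if unknown:
        raise ValueError(f"characters not in the vocabulary: {unknown}")
    return [char2idx[c] for c in s]

def decode(ids, vocab):
    """Map indices back to a string."""
    return "".join(vocab[i] for i in ids)

vocab, char2idx = build_vocab("First Citizen:")   # tiny illustrative corpus
ids = encode("First", char2idx)
print(ids, decode(ids, vocab))   # [3, 5, 7, 8, 9] First

try:
    encode("ROMEO: ", char2idx)
except ValueError as err:
    print(err)                   # characters not in the vocabulary: ['E', 'M', 'O', 'R']
```

Because the vocabulary is sorted, indices depend on the whole corpus: the same character gets a different index here than in the 65-character Shakespeare vocabulary.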

"""

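Generate the training data

Training data definition:

For the raw text, we choose an input sequence length seq_length by hand. Each input sequence has a target sequence of the same length,

shifted one character to the right. For example, with seq_length = 4 and the text "hello",

the input sequence is "hell" and the target sequence is "ello".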

"""

# Set the input sequence length

seq_length = 100

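# len(text): the file contains 1115394 characters in total

# Number of training sequences: total characters // (seq_length + 1), because each sequence consumes 101 characters

examples_per_epoch = len(text)//(seq_length+1)

print("Number of training sequences:", examples_per_epoch)

# text_as_int: every character of the full text converted to its index

# Convert the integer-encoded text into a tf.data.Dataset for further processing

char_dataset = tf.data.Dataset.from_tensor_slices(text_as_int)

# # Show the first 5 characters using the dataset's take method and the lookup table

# for i in char_dataset.take(5):

#     # the character's index

#     print(i.numpy(), end="\t")  # 18 47 56 57 58

#     # the character for each index

#     print(idx2char[i.numpy()], end="\t")  # F i r s t

# char_dataset: every character of the full text.

# seq_length+1 = 101 is the length of one sample: characters 1-100 form the input sequence and characters 2-101 form the target sequence

# Use the dataset's batch method to cut the text into chunks of seq_length + 1 characters (one extra position for the shift). Here batch groups consecutive characters, not training examples.

# drop_remainder=True drops the final chunk if it has fewer than seq_length + 1 characters.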

sequences = char_dataset.batch(seq_length+1, drop_remainder=True)

# Show the text of the first 2 chunks

# for item in sequences.take(2):

#     print("Chunk:", repr(''.join(idx2char[item.numpy()])))

# Chunk: 'First Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou '

# Chunk: 'are all resolved rather to die than to famish?\n\nAll:\nResolved. resolved.\n\nFirst Citizen:\nFirst, you k'

def split_input_target(chunk):

    """Split a chunk into an input sequence and a target sequence."""

    # The first 100 characters form the input; characters from the second to the end form the target. Slicing [start:end] excludes end.

    input_text = chunk[:-1]  # characters 1-100 (the first 100) form the input sequence

    target_text = chunk[1:]  # characters 2-101 form the target sequence

    return input_text, target_text

"""

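Apply split_input_target to every sample in the dataset sequences with map:

each sample in sequences has 101 characters; characters 1-100 (the first 100) form the input sequence and characters 2-101 form the target sequence.

The input and target sequences both have 100 characters. Each character in the target sequence is the character that follows the one at the same index in the input sequence.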

"""

# Split every chunk by applying the function with map

dataset = sequences.map(split_input_target)

# # Show the result for the first example

# for input_example, target_example in dataset.take(1):

#     # 'First Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou'

#     print('Input data: ', repr(''.join(idx2char[input_example.numpy()])))

#     # 'irst Citizen:\nBefore we proceed any further, hear me speak.\n\nAll:\nSpeak, speak.\n\nFirst Citizen:\nYou '

#     print('Target data:', repr(''.join(idx2char[target_example.numpy()])))

# # Look at the input and expected output at each time step fed to the model (first five steps)

# # Loop over the characters and print the input and expected output for each time step

# for i, (input_idx, target_idx) in enumerate(zip(input_example[:5], target_example[:5])):

# print("Step {:4d}".format(i))

# print(" input: {} ({:s})".format(input_idx, repr(idx2char[input_idx])))

# print(" expected output: {} ({:s})".format(target_idx, repr(idx2char[target_idx])))

"""

Step 0

input: 18 ('F')

expected output: 47 ('i')

Step 1

input: 47 ('i')

expected output: 56 ('r')

Step 2

input: 56 ('r')

expected output: 57 ('s')

Step 3

input: 57 ('s')

expected output: 58 ('t')

Step 4

input: 58 ('t')

expected output: 1 (' ')

"""

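# Create the batches:

# Set the batch size to 64

BATCH_SIZE = 64

# Buffer size used when shuffling the dataset

# A larger buffer shuffles the data more thoroughly but needs more memory

BUFFER_SIZE = 10000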

"""

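dataset

contains many batches; each batch holds input sequences and target sequences.

Both have shape (64, 100), i.e. [batch size, sequence length in characters], where the batch size is the number of sequences.

The input and target sequences both have 100 characters. Each character in the target sequence is the character that follows the one at the same index in the input sequence.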

"""

# drop_remainder=True drops the final batch if it has fewer than BATCH_SIZE sequences.

# Shuffle the data and group it into batches

dataset = dataset.shuffle(BUFFER_SIZE).batch(BATCH_SIZE, drop_remainder=True)

# Print the dataset object to inspect the tensor shapes

# print(dataset)

#----------------------------------Step 2: Build and train the model-------------------------------#

# Vocabulary size

vocab_size = len(vocab)  # 65 distinct characters

print("vocab_size", vocab_size)

# Embedding dimension

embedding_dim = 256

# Number of hidden units (neurons) in the GRU layer

rnn_units = 1024

# The model has three layers: an Embedding input layer, a GRU middle layer, and a fully connected (Dense) output layer

def build_model(vocab_size, embedding_dim, rnn_units, batch_size):

    """Build the model."""

    # Define the model with tf.keras.Sequential

    # return_sequences=True makes the GRU return its output at every time step, not only at the last one

    # stateful=True keeps the final state of each batch as the initial state for the next batch

    # recurrent_initializer='glorot_uniform' initializes the GRU's recurrent kernel from the Glorot (Xavier) uniform distribution

    # The final Dense layer outputs one unnormalized score (logit) per possible character; a softmax over these gives the probability distribution

    model = tf.keras.Sequential([

        tf.keras.layers.Embedding(vocab_size, embedding_dim,

                                  batch_input_shape=[batch_size, None]),

        tf.keras.layers.GRU(rnn_units,

                            return_sequences=True,

                            stateful=True,

                            recurrent_initializer='glorot_uniform'),

        tf.keras.layers.Dense(vocab_size)  # output layer: vocab_size (65) units, one logit per distinct character

    ])

    return model

# Build the model with the hyperparameters

model = build_model(

    vocab_size=len(vocab),

    embedding_dim=embedding_dim,

    rnn_units=rnn_units,

    batch_size=BATCH_SIZE)

# Feed one batch of data through the model

# and check that the output shape matches expectations

# for input_example_batch, target_example_batch in dataset.take(1):

#     example_batch_predictions = model(input_example_batch)

#     # (64, 100, 65), i.e. [batch size, sequence length in characters, one score for each of the 65 distinct characters]

#     print(example_batch_predictions.shape, "# (batch_size, sequence_length, vocab_size)")

# Show the model's parameters

model.summary()

# Model: "sequential"

# Layer (type) Output Shape Param #

# =================================================================

# embedding (Embedding) (64, None, 256) 16640

# gru (GRU) (64, None, 1024) 3938304

# dense (Dense) (64, None, 65) 66625

# =================================================================

# Total params: 4,021,569

# Trainable params: 4,021,569

# Non-trainable params: 0

"""

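1. Next we introduce a new technique that replaces the familiar greedy approach of taking the most likely predicted ID from the output distribution.

The greedy approach takes the index of the largest value among the 65 character scores; that index identifies the predicted character.

2. tf.random.categorical

In theory, if the model were accurate enough, we would only need to choose the index with the highest probability in the distribution; this is the greedy approach.

In practice the model's predictions are imperfect, and always choosing the most probable value easily gets stuck in repetitive loops.

Instead, each value's probability in the distribution is used as its chance of being selected, so every value in the distribution can be picked.

TensorFlow implements this with tf.random.categorical.

3. tf.random.categorical(logits, num_samples, dtype=None, seed=None, name=None)

Arguments:

logits: a 2-D tensor of shape [batch_size, num_classes]. Each slice [i, :] holds the unnormalized log-probabilities for all classes.

num_samples: a 0-D value (a single number), the number of independent samples to draw for each row slice.

Returns: the drawn samples, with shape [batch_size, num_samples].

>>> tf.math.log([[0.5, 0.5]])

>>> # The result has shape [1, 5], i.e. [batch_size, num_samples]; each value is 0 or 1 with equal probability

>>> samples = tf.random.categorical(tf.math.log([[0.5, 0.5]]), 5)

>>> samples

>>> # The result has shape [1, 1], i.e. [batch_size, num_samples]; the value is 0 or 1 with equal probability

>>> samples = tf.random.categorical(tf.math.log([[0.5, 0.5]]), 1)

>>> samples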

"""

"""

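sampled_indices = tf.random.categorical(example_batch_predictions[0], num_samples=1)

1. This turns an input of shape [sequence length, 65 character scores] into an output of shape [sequence length, 1]:

at each position, one character index is drawn from the 65 character scores.

2. example_batch_predictions: (64, 100, 65), i.e. [batch size, sequence length, 65 character scores]

example_batch_predictions[0]: the first sequence in the batch, shape (100, 65), i.e. [sequence length, 65 character scores]

num_samples=1: a 0-D value (a single number), the number of independent samples drawn for each row slice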

"""

# print(example_batch_predictions[0].shape)  # (100, 65), i.e. [sequence length, 65 character scores]; 65 is the number of classes

# Sample with random categorical

# sampled_indices = tf.random.categorical(example_batch_predictions[0], num_samples=1)

# print(sampled_indices.shape)  # (100, 1), i.e. [sequence length, 1]

# squeeze removes the trailing dimension of size 1

# sampled_indices = tf.squeeze(sampled_indices, axis=-1).numpy()

# print(sampled_indices)

# [32 21 30 26 57 6 38 58 14 35 9 22 18 3 0 50 47 10 52 10 23 41 23 4

# 29 6 61 35 25 9 41 35 56 34 9 37 11 29 20 49 13 60 36 1 1 38 61 51

# 5 47 55 10 54 29 46 18 22 1 36 28 17 52 57 27 9 43 62 0 10 9 14 60

# 4 62 64 6 55 57 23 43 24 41 46 14 28 17 38 60 62 64 34 58 24 8 61 12

# 60 16 21 38]

"""

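input_example_batch[0]: the character indices of the first sequence in the batch

idx2char[input_example_batch[0]]: those indices mapped back to characters

sampled_indices: shape [sequence length] after squeeze, one character index sampled from the 65 scores at each position

idx2char[sampled_indices]: those sampled indices mapped back to characters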

"""

# Also map the input back to text

# print("Input: \n", repr("".join(idx2char[input_example_batch[0]])))

#"gn of truth, I kiss your highness' hand.\n\nKING HENRY VI:\nWell-minded Clarence, be thou fortunate!\n\nM"

# Map the sampled indices to text

# Before training, the predicted text has no discernible pattern

# print("Next Char Predictions: \n", repr("".join(idx2char[sampled_indices])))

#"L:!!!?lC:IeoykLCQyNkpY'?oMpsmFMXlCVfrZSKhScptRQqor?Voq$o&TE;snLbVW\nqTiMAQKlfVomlmJVRMrHetrCEDKu?TO;B"

"""

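Adding the loss function

1. The generation problem can now be treated as a standard classification problem: given the RNN state and the input at the current time step, predict the class of the next character (selecting exactly one from the distribution).

The number of classes equals the number of distinct characters, and each target is a single class index, so the labels are sparse.

2. Sparse labels: when there are many classes (hundreds to thousands) and each sample belongs to only a few of them, as in single-label multi-class classification where each sample has exactly one class,

the one-hot label matrix is almost all zeros, i.e. sparse.

3. The difference between sparse_categorical_crossentropy and categorical_crossentropy

sparse_categorical_crossentropy: takes integer class indices as labels; no manual one-hot encoding is needed

categorical_crossentropy: takes one-hot encoded labels

4. from_logits

import tensorflow as tf

help(tf.losses.categorical_crossentropy)

shows the default arguments and usage of categorical_crossentropy.

By default from_logits=False, which means the prediction y_pred must already be the output of a softmax.

With from_logits=True, y_pred must be the raw scores z that have not yet gone through softmax.

from_logits=True folds the softmax into the loss computation, so no separate softmax activation needs to be added to the model, and the computation is more numerically stable.

"Logits" are these raw, unnormalized scores, i.e. the values before a sigmoid or softmax is applied; from_logits says whether y_pred is still in that form.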

"""
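A small NumPy illustration of the numerical-stability point above. Applying softmax and then log to extreme logits overflows, while computing log-softmax directly from the logits (subtracting the maximum first) stays finite. This is plain NumPy, not Keras internals; Keras's own from_logits=False path clips probabilities with a small epsilon, so the inf here comes from the naive computation only.

```python
import numpy as np

logits = np.array([1000.0, 0.0, -1000.0])   # extreme but finite raw scores (hypothetical)
label = 1

# Naive route: softmax first, then log. exp(1000) overflows to inf, the probability
# of class 1 becomes 1/inf = 0, and -log(0) = inf.
with np.errstate(over="ignore", divide="ignore", invalid="ignore"):
    probs = np.exp(logits) / np.exp(logits).sum()
    naive_loss = -np.log(probs[label])

# Logits route: log-softmax computed directly, subtracting the max first so exp never overflows.
z = logits - logits.max()
log_probs = z - np.log(np.exp(z).sum())
stable_loss = -log_probs[label]

print(naive_loss, stable_loss)   # inf 1000.0
```

The true loss is logsumexp(logits) minus logits[1], which is 1000 here; only the logits route recovers it.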

# Use Keras's built-in sparse categorical cross-entropy loss (for labels given as integer class indices)

def loss(labels, logits):

    return tf.keras.losses.sparse_categorical_crossentropy(labels, logits, from_logits=True)

"""

tf.keras.losses.sparse_categorical_crossentropy(true values, predictions)

target_example_batch:

the true values, shape (64, 100), i.e. (batch_size, sequence_length) [batch size, sequence length].

They are integer class indices; there is no need to one-hot encode them first.

example_batch_predictions:

the predictions, shape (64, 100, 65), i.e. (batch_size, sequence_length, vocab_size) [batch size, sequence length, one score for each of the 65 distinct characters].

"""

# 使用损失函数

# example_batch_loss = loss(target_example_batch, example_batch_predictions)

# print(target_example_batch.shape) #(64, 100)

# print("Prediction shape: ", example_batch_predictions.shape, " # (batch_size, sequence_length, vocab_size)") # (64, 100, 65)

# print("scalar_loss: ", example_batch_loss.numpy().mean()) #4.175519

#添加优化器:

# 配置优化器为'adam'

model.compile(optimizer='adam', loss=loss)

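# Configure checkpoints:
# directory where checkpoints are saved
checkpoint_dir = './training_checkpoints'
# checkpoint file name pattern; {epoch} is filled in at save time
checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt_{epoch}")
# callback object that saves checkpoints during model.fit
checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_prefix, save_weights_only=True)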
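`checkpoint_prefix` is a format string whose `{epoch}` field is filled in when a checkpoint is written. The sketch below is plain Python that simulates, without any training, the saving logic of the custom training loop later in this section, and lists the file names it would produce for 10 epochs. Note that ModelCheckpoint (used with model.fit) fills `{epoch}` with a 1-based epoch number, whereas the custom loop passes its 0-based loop variable, so the two training styles name their files differently.

```python
import os

checkpoint_dir = './training_checkpoints'
checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt_{epoch}")

EPOCHS = 10
saved = []
for epoch in range(EPOCHS):
    # Same condition as the custom loop: save after every 5th epoch
    if (epoch + 1) % 5 == 0:
        saved.append(checkpoint_prefix.format(epoch=epoch))
# The final save after the loop reuses the last value of `epoch`
saved.append(checkpoint_prefix.format(epoch=epoch))

for path in saved:
    print(path)
```

With 10 epochs the last two names coincide, so the final save simply overwrites the checkpoint written after epoch 10, and `tf.train.latest_checkpoint(checkpoint_dir)` returns that prefix.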

# Train the model and print the logs:
EPOCHS = 10
# history = model.fit(dataset, epochs=EPOCHS, callbacks=[checkpoint_callback])

#------------------------------------------------------------------------------------------------------------#

"""
To gain finer control over the training process, we switch from model.fit to the custom training loop recommended in TF 2.0.
"""

# Build the model
model = build_model(
    vocab_size=len(vocab),
    embedding_dim=embedding_dim,
    rnn_units=rnn_units,
    batch_size=BATCH_SIZE)

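# Choose the optimizer
optimizer = tf.keras.optimizers.Adam()

# Training step decorated with @tf.function (traced into a graph for speed)
@tf.function
def train_step(inp, target):
    """
    :param inp: model input
    :param target: labels corresponding to the input
    """
    # Open a gradient tape to record the forward pass
    with tf.GradientTape() as tape:
        # Run the model to get predictions (logits)
        predictions = model(inp)
        # Compute the mean loss with sparse_categorical_crossentropy
        loss = tf.reduce_mean(
            tf.keras.losses.sparse_categorical_crossentropy(
                target, predictions, from_logits=True))
    # Use the tape to compute gradients for all trainable parameters
    grads = tape.gradient(loss, model.trainable_variables)
    # Update the parameters with the gradients and the optimizer
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    # Return the mean loss
    return loss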
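What `tape.gradient` computes at the output layer can be checked by hand: for softmax cross-entropy with integer label y, the gradient of the loss with respect to the logits z is softmax(z) - onehot(y). The plain-Python sketch below (not TensorFlow; the helper names are illustrative) verifies this against finite differences, then takes a few plain gradient-descent steps on the logits themselves. That last part is a simplified stand-in for what `optimizer.apply_gradients` does with Adam on the real model's weights.

```python
import math

def log_softmax(z):
    m = max(z)
    lse = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - lse for v in z]

def ce_loss(z, label):
    # Sparse categorical cross-entropy computed from logits
    return -log_softmax(z)[label]

def ce_grad(z, label):
    # Analytic gradient: softmax(z) - onehot(label)
    probs = [math.exp(v) for v in log_softmax(z)]
    return [p - (1.0 if i == label else 0.0) for i, p in enumerate(probs)]

def numeric_grad(z, label, eps=1e-6):
    # Central finite differences, one coordinate at a time
    grads = []
    for i in range(len(z)):
        up = list(z)
        up[i] += eps
        down = list(z)
        down[i] -= eps
        grads.append((ce_loss(up, label) - ce_loss(down, label)) / (2 * eps))
    return grads

z = [0.5, -1.0, 2.0, 0.0]
label = 1
analytic = ce_grad(z, label)
numeric = numeric_grad(z, label)
print(analytic)
print(numeric)

# A few plain gradient-descent steps (Adam would rescale these updates)
lr = 0.5
losses = [ce_loss(z, label)]
for step in range(5):
    g = ce_grad(z, label)
    z = [v - lr * gv for v, gv in zip(z, g)]
    losses.append(ce_loss(z, label))
print([round(value, 4) for value in losses])
```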

# Number of training epochs
EPOCHS = 10

# Epoch loop
for epoch in range(EPOCHS):
    # Record the start time
    start = time.time()
    # Reset the hidden state at the start of each epoch
    model.reset_states()
    # Batch loop
    for (batch_n, (inp, target)) in enumerate(dataset):
        # Run one training step and get the batch loss
        batch_loss = train_step(inp, target)
        # Every 100 batches, print the epoch, the batch number and its loss
        if batch_n % 100 == 0:
            template = 'Epoch {} Batch {} Loss {}'
            print(template.format(epoch + 1, batch_n, batch_loss))
    # Save a checkpoint every 5 epochs
    if (epoch + 1) % 5 == 0:
        model.save_weights(checkpoint_prefix.format(epoch=epoch))
    # Print the epoch, the loss of its last batch, and the time taken
    print('Epoch {} Loss {:.4f}'.format(epoch + 1, batch_loss))
    print('Time taken for 1 epoch {} sec\n'.format(time.time() - start))

# Save the final checkpoint
model.save_weights(checkpoint_prefix.format(epoch=epoch))

#------------------------------------------------------------------------------------------------------------#

"""
Step 3: Generate text with the model
"""

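# Build the generation function:
def generate_text(model, start_string):
    """
    :param model: the trained model
    :param start_string: any starting string
    """
    # Number of characters to generate
    num_generate = 1000

    # Convert the start string to indices (vectorize it)
    input_eval = [char2idx[s] for s in start_string]
    print("len(input_eval)", len(input_eval))  # 7: "ROMEO: " has 7 characters
    # Add a batch dimension to match the model's expected input
    input_eval = tf.expand_dims(input_eval, 0)
    print("input_eval.shape", input_eval.shape)  # (1, 7)

    # Empty list to collect the results
    text_generated = []

    # Set the "temperature": the logits are divided by it before tf.random.categorical samples from them.
    # Lower values sharpen the distribution (more predictable text); higher values flatten it (more random text)
    temperature = 1.0

    # Reset the model's hidden state (not its trained weights)
    model.reset_states()

    # Generation loop
    for i in range(num_generate):
        # Run the model
        predictions = model(input_eval)
        # print("predictions.shape", predictions.shape)  # (1, 7, 65) on the first step, then (1, 1, 65): [batch_size, sequence_length, logits for the 65 unique characters]

        # Remove the batch dimension
        predictions = tf.squeeze(predictions, 0)
        # print("predictions.shape", predictions.shape)  # (7, 65) on the first step, then (1, 65): [sequence_length, logits for the 65 unique characters]

        # Use the temperature and tf.random.categorical to sample the predicted character index
        # With temperature = 1.0, predictions / temperature leaves the logits unchanged
        predictions = predictions / temperature
        predicted_ids = tf.random.categorical(predictions, num_samples=1)
        # Keep only the sample drawn for the last time step
        predicted_id = predicted_ids[-1, 0].numpy()
        # print("predicted_ids.shape", predicted_ids.shape)  # (7, 1) on the first step, then (1, 1)
        # print("predicted_id.shape", predicted_id.shape)  # (): a single scalar
        # print("predicted_ids", predicted_ids)  # e.g. tf.Tensor([[21]], shape=(1, 1), dtype=int64): the index of the predicted character among the 65
        # print("predicted_id", predicted_id)  # e.g. 21: the index extracted from the tensor above

        # Add a batch dimension to the prediction and feed it back as the next input;
        # the model keeps its hidden state between calls, so only the new character is needed
        input_eval = tf.expand_dims([predicted_id], 0)
        # print("input_eval.shape", input_eval.shape)  # (1, 1)

        # Map the predicted index back to its character and store it
        text_generated.append(idx2char[predicted_id])

    # Finally, join the start string with the generated characters
    return (start_string + ''.join(text_generated))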
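The effect of the temperature parameter can be seen without a model. This plain-Python sketch uses made-up logits (an assumption, not real model output), divides them by several temperatures the same way generate_text does, and prints the resulting softmax probabilities. A temperature below 1 concentrates probability on the top character, and a temperature above 1 spreads it out.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for 4 candidate next characters
logits = [2.0, 1.0, 0.5, -1.0]

for temperature in (0.5, 1.0, 2.0):
    # Same scaling as `predictions / temperature` in generate_text
    probs = softmax([z / temperature for z in logits])
    print(temperature, [round(p, 3) for p in probs])
```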

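# Rebuild the model structure with batch_size=1 for generation
model = build_model(vocab_size, embedding_dim, rnn_units, batch_size=1)
# Load the trained weights from the latest checkpoint
model.load_weights(tf.train.latest_checkpoint(checkpoint_dir))
# Call the generation function:
print(generate_text(model, start_string=u"ROMEO: "))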
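The control flow of generate_text can be sketched without TensorFlow: predict logits, scale them by the temperature, sample one index, feed it back, and append the character. In this sketch the "model" is a hypothetical character-bigram count table built from a toy string, standing in for the trained GRU. Unlike the GRU, it conditions only on the previous character. `random.Random.choices` with a fixed seed plays the role of `tf.random.categorical`.

```python
import math
import random
from collections import defaultdict

# Toy corpus (assumption: a stand-in for shakespeare.txt)
corpus = "ROMEO: O, she doth teach the torches to burn bright!\n"
vocab = sorted(set(corpus))
char2idx = {ch: i for i, ch in enumerate(vocab)}
idx2char = vocab

# Hypothetical "model": bigram counts, turned into log-count logits
counts = defaultdict(lambda: [1e-3] * len(vocab))  # small floor avoids log(0)
for a, b in zip(corpus, corpus[1:]):
    counts[char2idx[a]][char2idx[b]] += 1.0

def next_char_logits(prev_id):
    return [math.log(c) for c in counts[prev_id]]

def generate(start_string, num_generate=40, temperature=1.0, seed=0):
    rng = random.Random(seed)
    ids = [char2idx[s] for s in start_string]
    text_generated = []
    # A bigram model only needs the last character of the prompt
    prev_id = ids[-1]
    for _ in range(num_generate):
        # Scale logits by the temperature, then sample one index
        logits = [z / temperature for z in next_char_logits(prev_id)]
        m = max(logits)
        weights = [math.exp(z - m) for z in logits]
        prev_id = rng.choices(range(len(vocab)), weights=weights, k=1)[0]
        # Map the sampled index back to a character
        text_generated.append(idx2char[prev_id])
    return start_string + "".join(text_generated)

print(generate("ROMEO: "))
```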