hive 常用统计分析函数

前言:

hive中提供了很多的的统计分析函数,实际中经常用来进行统计分析,如下笔者整理了常用的一些分析函数,并附以相关实例.

博客参考连接:http://lxw1234.com/archives/2015/07/367.htm

1.基础函数

window 子句 rows between

- preceding:往前

- following:往后

- current row:当前行

- unbounded:起点

- unbounded preceding:表示从前面的起点

- unbounded following:表示到后面的终点

注意

- 如果不指定rows betwwen,默认为从起点到当前行

- 如果不指定order by,则将分组内所有值累加

创建表,加载数据

create table tb_pv (

cookieid string,

dt string,

pv int

) row format delimited

fields terminated by ',';

-- 数据

'''

cookie1,2019-04-10,1

cookie1,2019-04-11,5

cookie1,2019-04-12,7

cookie1,2019-04-13,3

cookie1,2019-04-14,2

cookie1,2019-04-15,4

cookie1,2019-04-16,4

'''

load data local inpath '/data/cookie.txt' overwrite into table test_db.tb_pv;

1.1 sum 求和

- sql

select

cookieid,

dt,

pv,

sum(pv) over(partition by cookieid order by dt) as pv1,

sum(pv) over(partition by cookieid order by dt rows between unbounded preceding and current row) as pv2,

sum(pv) over(partition by cookieid) as pv3,

sum(pv) over(partition by cookieid order by dt rows between 3 preceding and current row) as pv4,

sum(pv) over(partition by cookieid order by dt rows between 3 preceding and 1 following) as pv5,

sum(pv) over(partition by cookieid order by dt rows between current row and unbounded following) as pv6

from tb_pv order by dt;

''' 运行结果

cookieid dt pv pv1 pv2 pv3 pv4 pv5 pv6

cookie1 2019-04-10 1 1 1 26 1 6 26

cookie1 2019-04-11 5 6 6 26 6 13 25

cookie1 2019-04-12 7 13 13 26 13 16 20

cookie1 2019-04-13 3 16 16 26 16 18 13

cookie1 2019-04-14 2 18 18 26 17 21 10

cookie1 2019-04-15 4 22 22 26 16 20 8

cookie1 2019-04-16 4 26 26 26 13 13 4

'''

- 说明

pv1:分组内从起点到当前行的pv累计相加,如

11号pv = 10号pv + 11号pv = 1 + 5 = 6

12号pv = 10号pv + 11号pv + 12号pv = 1 + 5 + 7 = 13

依此类推

pv2:同pv1

pv3:分组内(cookie1)所有pv累加,如

pv3 = 1 + 5 + 7 + 3 + 2 + 4 + 4 = 26

pv4:分组内,当前行 + 往前3行,如

11号pv = 10号pv + 11号pv = 1 + 5 = 6

12号pv = 12号pv + 10号pv + 11号pv = 7 + 1 + 5 = 13

13号pv = 13号pv + 10号pv + 11号pv + 12号pv = 3 + 1 + 5 + 7 = 16

14号pv = 14号pv + 11号pv + 12号pv + 13号pv = 2 + 5 + 7 + 3 = 17

pv5:分组内,当前行 + 往前3行 + 往后1行,如

10号pv = 10号pv + 往前3行没有 + 11号pv = 1 + 0 + 5 = 6

13号pv = 13号pv + 10号pv + 11号pv + 12号pv + 14号pv = 3 + 1 + 5 + 7 + 2 = 18

pv6:分组内当前行 + 往后所有行

10号pv = 10号pv + 11号pv + 12号pv + 13号pv + 14号pv + 15号pv + 16号pv = 1 + 5 + 7 + 3 + 2 + 4 + 4 = 26

13号pv = 13号pv + 14号pv + 15号pv + 16号pv = 3 + 2 + 4 + 4 = 13

1.2 avg 求平均数

同 1.1中 sum

select

cookieid,

dt,

pv,

avg(pv) over(partition by cookieid order by dt) as pv1,

avg(pv) over(partition by cookieid order by dt rows between unbounded preceding and current row) as pv2,

avg(pv) over(partition by cookieid) as pv3,

avg(pv) over(partition by cookieid order by dt rows between 3 preceding and current row) as pv4,

avg(pv) over(partition by cookieid order by dt rows between 3 preceding and 1 following) as pv5,

avg(pv) over(partition by cookieid order by dt rows between current row and unbounded following) as pv6

from tb_pv order by dt;

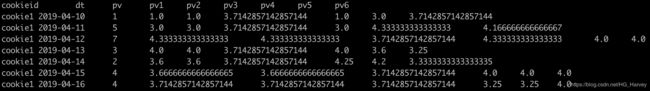

求平均数,运行的结果可以看到有很多小数位,我们使用cast函数来保留两位小数

语法:cast(column_name as decimal(10,2))

select

cookieid,

dt,

pv,

cast(avg(pv) over(partition by cookieid order by dt) as decimal(10,2)) as pv1,

cast(avg(pv) over(partition by cookieid order by dt rows between unbounded preceding and current row) as decimal(10,2)) as pv2,

cast(avg(pv) over(partition by cookieid) as decimal(10,2)) as pv3,

cast(avg(pv) over(partition by cookieid order by dt rows between 3 preceding and current row) as decimal(10,2)) as pv4,

cast(avg(pv) over(partition by cookieid order by dt rows between 3 preceding and 1 following) as decimal(10,2)) as pv5,

cast(avg(pv) over(partition by cookieid order by dt rows between current row and unbounded following) as decimal(10,2)) as pv6

from tb_pv order by dt;

运行结果

cookieid dt pv pv1 pv2 pv3 pv4 pv5 pv6

cookie1 2019-04-10 1 1.00 1.00 3.71 1.00 3.00 3.71

cookie1 2019-04-11 5 3.00 3.00 3.71 3.00 4.33 4.17

cookie1 2019-04-12 7 4.33 4.33 3.71 4.33 4.00 4.00

cookie1 2019-04-13 3 4.00 4.00 3.71 4.00 3.60 3.25

cookie1 2019-04-14 2 3.60 3.60 3.71 4.25 4.20 3.33

cookie1 2019-04-15 4 3.67 3.67 3.71 4.00 4.00 4.00

cookie1 2019-04-16 4 3.71 3.71 3.71 3.25 3.25 4.00

1.3 min 求最小值

同 1.1 sum 求和

select

cookieid,

dt,

pv,

min(pv) over(partition by cookieid order by dt) as pv1,

min(pv) over(partition by cookieid order by dt rows between unbounded preceding and current row) as pv2,

min(pv) over(partition by cookieid) as pv3,

min(pv) over(partition by cookieid order by dt rows between 3 preceding and current row) as pv4,

min(pv) over(partition by cookieid order by dt rows between 3 preceding and 1 following) as pv5,

min(pv) over(partition by cookieid order by dt rows between current row and unbounded following) as pv6

from tb_pv order by dt;

查询结果

cookieid dt pv pv1 pv2 pv3 pv4 pv5 pv6

cookie1 2019-04-10 1 1 1 1 1 1 1

cookie1 2019-04-11 5 1 1 1 1 1 2

cookie1 2019-04-12 7 1 1 1 1 1 2

cookie1 2019-04-13 3 1 1 1 1 1 2

cookie1 2019-04-14 2 1 1 1 2 2 2

cookie1 2019-04-15 4 1 1 1 2 2 4

cookie1 2019-04-16 4 1 1 1 2 2 4

1.4 max 最大值

同 1.1 sum 求和

select

cookieid,

dt,

pv,

max(pv) over(partition by cookieid order by dt) as pv1,

max(pv) over(partition by cookieid order by dt rows between unbounded preceding and current row) as pv2,

max(pv) over(partition by cookieid) as pv3,

max(pv) over(partition by cookieid order by dt rows between 3 preceding and current row) as pv4,

max(pv) over(partition by cookieid order by dt rows between 3 preceding and 1 following) as pv5,

max(pv) over(partition by cookieid order by dt rows between current row and unbounded following) as pv6

from tb_pv order by dt;

查询结果

cookieid dt pv pv1 pv2 pv3 pv4 pv5 pv6

cookie1 2019-04-10 1 1 1 7 1 5 7

cookie1 2019-04-11 5 5 5 7 5 7 7

cookie1 2019-04-12 7 7 7 7 7 7 7

cookie1 2019-04-13 3 7 7 7 7 7 4

cookie1 2019-04-14 2 7 7 7 7 7 4

cookie1 2019-04-15 4 7 7 7 7 7 4

cookie1 2019-04-16 4 7 7 7 4 4 4

2.序列函数

序列函数:ntile,row_number,rank,dense_rank

注意:序列函数不支持window子句

数据

cookie1,2019-04-10,1

cookie1,2019-04-11,5

cookie1,2019-04-12,7

cookie1,2019-04-13,3

cookie1,2019-04-14,2

cookie1,2019-04-15,4

cookie1,2019-04-16,4

cookie2,2019-04-10,2

cookie2,2019-04-11,3

cookie2,2019-04-12,5

cookie2,2019-04-13,6

cookie2,2019-04-14,3

cookie2,2019-04-15,9

cookie2,2019-04-16,7

2.1 ntile

-

ntile(n):用于将分组数据按照顺序切分成n片,返回当前切片值

ntile不支持rows between,比如 ntile(2) over(partition by cookieid order by createtime rows between 3 preceding and current row)

-

如果切片不均匀,默认增加第一个切片的分布

-

经常用来取前30% 带有百分之多少比例的记录什么的

select

cookieid,

dt,

pv,

ntile(2) over(partition by cookieid order by dt) as rn1,

ntile(3) over(partition by cookieid order by dt) as rn2,

ntile(4) over(order by dt) as rn3

from tb_pv

order by cookieid, dt;

查询结果

cookieid dt pv rn1 rn2 rn3

cookie1 2019-04-10 1 1 1 1

cookie1 2019-04-11 5 1 1 1

cookie1 2019-04-12 7 1 1 2

cookie1 2019-04-13 3 1 2 2

cookie1 2019-04-14 2 2 2 3

cookie1 2019-04-15 4 2 3 4

cookie1 2019-04-16 4 2 3 4

cookie2 2019-04-10 2 1 1 1

cookie2 2019-04-11 3 1 1 1

cookie2 2019-04-12 5 1 1 2

cookie2 2019-04-13 6 1 2 2

cookie2 2019-04-14 3 2 2 3

cookie2 2019-04-15 9 2 3 3

cookie2 2019-04-16 7 2 3 4

- 例1:统计一个cookie中,pv数最多的前三分之一天

select

cookieid,

dt,

pv,

ntile(3) over(partition by cookieid order by pv desc) as rn

from tb_pv;

运行结果(获取rn为1的记录,即为pv数最多的前三分之天)

cookieid dt pv rn

cookie1 2019-04-12 7 1

cookie1 2019-04-11 5 1

cookie1 2019-04-16 4 1

cookie1 2019-04-15 4 2

cookie1 2019-04-13 3 2

cookie1 2019-04-14 2 3

cookie1 2019-04-10 1 3

cookie2 2019-04-15 9 1

cookie2 2019-04-16 7 1

cookie2 2019-04-13 6 1

cookie2 2019-04-12 5 2

cookie2 2019-04-11 3 2

cookie2 2019-04-14 3 3

cookie2 2019-04-10 2 3

- 例2:统计用户消费排名前30%的平均消费和后70%的平均消费

创建表,加载数据

create table `tb_user_price`(

`user_id` string,

`price` double

) row format delimited

fields terminated by ',';

1001,100

1002,200

1003,10

1004,60

1005,20

1006,40

1007,1000

1008,220

1009,110

1010,190

1011,20

1012,80

1013,2000

1014,900

1015,26

load data local inpath '/Users/harvey/data/tb_user_price.txt' overwrite into table test_db.tb_user_price;

思路:将数据按照价格排序分为10分保存至临时表,基于临时表获取前30%和后70%求平均

-- 步骤一:将数据按照价格排序分为10分保存至临时表

drop table if exists tb_user_price_ntile_temp;

create table tb_user_price_ntile_temp as select user_id, price, ntile(10) over(order by price desc) as rn from tb_user_price;

-- 查询结果

user_id price rn

1013 2000.0 1

1007 1000.0 1

1014 900.0 2

1008 220.0 2

1002 200.0 3

1010 190.0 3

1009 110.0 4

1001 100.0 4

1012 80.0 5

1004 60.0 5

1006 40.0 6

1015 26.0 7

1011 20.0 8

1005 20.0 9

1003 10.0 10

-- 步骤二:基于临时表获取前30%和后70%求平均

select

a.rn as rn,

case when a.rn = 1 then 'avg_price_first_30%' when a.rn = 2 then 'avg_price_last_70%' end as avg_price_name,

avg( price ) as avg_price

from

( select user_id, price, case when rn in ( 1, 2, 3 ) then 1 else 2 end as rn from tb_user_price_ntile_temp ) a

group by a.rn;

-- 统计结果

rn avg_price_name avg_price

1 avg_price_first_30% 751.6666666666666

2 avg_price_last_70% 51.77777777777778

2.2 row_number

- 用于分组,比方说依照 date 分组

- 组内可以依照某个属性排序,比方说依照 score 分组,组内按照imei排序

- 语法:

row_number() over (partition by xxx order by xxx) rank,其中rank为分组的别名,可任意 - 取组内第一个数据使用 rank = 1

- 例1:求分组内pv名词

select cookieid, dt, pv, row_number() over(partition by cookieid order by pv desc) as rn from tb_pv;

查询结果

cookieid dt pv rn

cookie1 2019-04-12 7 1

cookie1 2019-04-11 5 2

cookie1 2019-04-16 4 3

cookie1 2019-04-15 4 4

cookie1 2019-04-13 3 5

cookie1 2019-04-14 2 6

cookie1 2019-04-10 1 7

cookie2 2019-04-15 9 1

cookie2 2019-04-16 7 2

cookie2 2019-04-13 6 3

cookie2 2019-04-12 5 4

cookie2 2019-04-11 3 5

cookie2 2019-04-14 3 6

cookie2 2019-04-10 2 7

- 例2:例1基础上获取排名为第一的

select * from (select cookieid, dt, pv, row_number() over(partition by cookieid order by pv desc) as rn from tb_pv) a where a.rn = 1;

查询结果

a.cookieid a.dt a.pv a.rn

cookie1 2019-04-12 7 1

cookie2 2019-04-15 9 1

2.3 rank 和 dense_rank

- rank() 生成数据项在分组中的排名,排名相同的名次一样,同一排名有几个,后面排名就会跳过几次

- dense_rank() 生成数据项在分组中的排名,排名相同的名次一样,且后面名次不跳跃

- row_number() 生成数据项在分组中的排名,不管排名是否有相同的,都按照顺序1,2,3……n

select

cookieid,

dt,

pv,

rank() over(partition by cookieid order by pv desc) as rn1,

dense_rank() over(partition by cookieid order by pv desc) as rn2,

row_number() over(partition by cookieid order by pv desc) as rn3

from tb_pv;

查询结果

cookieid dt pv rn1 rn2 rn3

cookie1 2019-04-12 7 1 1 1

cookie1 2019-04-11 5 2 2 2

cookie1 2019-04-16 4 3 3 3

cookie1 2019-04-15 4 3 3 4

cookie1 2019-04-13 3 5 4 5

cookie1 2019-04-14 2 6 5 6

cookie1 2019-04-10 1 7 6 7

cookie2 2019-04-15 9 1 1 1

cookie2 2019-04-16 7 2 2 2

cookie2 2019-04-13 6 3 3 3

cookie2 2019-04-12 5 4 4 4

cookie2 2019-04-11 3 5 5 5

cookie2 2019-04-14 3 5 5 6

cookie2 2019-04-10 2 7 6 7

2.4 cume_dist percent_rank

ps:这两个序列函数不常使用

创建表、导入数据

-- 数据

1201,Gopal,45000,TD

1202,Manisha,45000,HRD

1203,Masthanvali,40000,AD

1204,Kiran,40000,HRD

1205,Kranthi,30000,TD

-- 创建表

create table `emps`(

`eid` int,

`name` string,

`salary` string,

`dept` string

) row format delimited

fields terminated by ',';

-- 导入数据

load data local inpath '/Users/harvey/data/emps.txt' overwrite into table emps;

-- 查询

emps.eid emps.name emps.salary emps.dept

1201 Gopal 45000 TD

1202 Manisha 45000 HRD

1203 Masthanvali 40000 AD

1204 Kiran 40000 HRD

1205 Kranthi 30000 TD

2.4.1 cume_dist

- 小于等于当前值的行数/分组内总行数

例:统计小于等于当前薪水的人数,所占总人数的比例

select

eid,

name,

salary,

dept,

cume_dist() over(order by salary) as rn1,

cume_dist() over(partition by dept order by salary) as rn2

from emps;

查询结果

eid name salary dept rn1 rn2

1203 Masthanvali 40000 AD 0.6 1.0

1204 Kiran 40000 HRD 0.6 0.5

1202 Manisha 45000 HRD 1.0 1.0

1205 Kranthi 30000 TD 0.2 0.5

1201 Gopal 45000 TD 1.0 1.0

说明

rn1:没有 partition,所有数据均为1组,总行数为5

第一行:小于等于40000的行数为3,即 3 / 5 = 0.6

第三行:小于等于45000的行数为5,即 5 / 5 = 1.0

rn2:按照部门 dept 分组,dept 为 TD 的行数为2

第四行:小于等于30000的行数为1,即 1 2 = 0.5

2.4.2 percent_rank

- 分组内当前行的 RANK值 - 1 / 分组内总行数 - 1

select

eid,

name,

salary,

dept,

percent_rank() over(order by salary) as rn1, --分组内,总行数5

rank() over(order by salary) as rn11, --分组内 rank 值

percent_rank() over(partition by dept order by salary) as rn2, --按照部门 dept 分组

rank() over(partition by dept order by salary) as rn22 --按照部门 dept 分组内 rank 值

from emps;

查询结果

eid name salary dept rn1 rn11 rn2 rn22

1203 Masthanvali 40000 AD 0.25 2 0.0 1

1204 Kiran 40000 HRD 0.25 2 0.0 1

1202 Manisha 45000 HRD 0.75 4 1.0 2

1205 Kranthi 30000 TD 0.0 1 0.0 1

1201 Gopal 45000 TD 0.75 4 1.0 2

说明

rn1:没有 partition,所有数据均为1组,总行数为5

第一行:(2 - 1) / (5 - 1) = 1 / 4 = 0.25

第四行:(1 - 1) / (5 - 1) = 0 / 4 = 0.0

rn2:按照部门 dept 分组,salary 排序

第二行:HRD共两行,(1 - 1) / (2 - 1) = 0 / 1 = 0.0

第五行:TD共两行,(2 - 1) / (2 - 1) = 1 / 1 = 1.0

3.3 分析函数(窗口函数)

lag、lead、first_value、last_value

PS:这几个函数不支持window子句

创建表,加载数据

-- 数据

user1,2019-04-10 10:00:02,https://www.baidu.com/

user1,2019-04-10 10:00:00,https://www.baidu.com/

user1,2019-04-10 10:03:04,http://www.google.cn/

user1,2019-04-10 10:50:05,https://yq.aliyun.com/topic/list/

user1,2019-04-10 11:00:00,https://www.json.cn/

user1,2019-04-10 10:10:00,https://music.163.com/

user1,2019-04-10 10:50:01,https://www.guoguo-app.com/

user2,2019-04-10 10:00:02,http://www.google.cn/

user2,2019-04-10 10:00:00,https://www.baidu.com/

user2,2019-04-10 10:03:04,https://www.runoob.com/

user2,2019-04-10 10:50:05,http://fanyi.youdao.com/

user2,2019-04-10 11:00:00,https://www.csdn.net/

user2,2019-04-10 10:10:00,https://www.json.cn/

user2,2019-04-10 10:50:01,https://yq.aliyun.com/topic/list/

-- 创建表

create table tb_page_access (

userid string,

create_time string,

url string

) row format delimited

fields terminated by ',';

-- 加载数据

load data local inpath '/Users/harvey/data/tb_page_access.txt' overwrite into table tb_page_access;

3.1 lag

-

语法

LAG (scalar_expression [,offset] [,default]) OVER ([query_partition_clause] order_by_clause) -

lag(col, n, DEFAULT):用于统计窗口内往上第 n 行值

第一个参数为列名

第二个参数为往上第n行(可选,默认为1)

第三个参数为默认值(当往上第 n 行为 NULL 时候,取默认值,如不指定,则为 NULL)

select

userid,

create_time,

url,

row_number() over(partition by userid order by create_time) as rn,

lag(create_time, 1, '1970-01-01 00:00:00') over(partition by userid order by create_time) as last_1_time,

lag(create_time, 2) over(partition by userid order by create_time) as last_2_time

from tb_page_access;

rn:按照userid分组,create_time排序后的排名

last_1_time

第一行:往上一行,没有数据,则为 1970-01-01 00:00:00

第二行:往上一行,为 2019-04-10 10:00:00

依次类推

last_2_time

第一行:往上两行为空,未指定默认值,则为 NULL

第二行:同第一行

第三行:往上两行,为第一行的数据的 create_time,即 2019-04-10 10:00:00

依此类推

3.2 lead

-

语法

LEAD (scalar_expression [,offset] [,default]) OVER ([query_partition_clause] order_by_clause) -

lead(col, n, DEFAULT) 用于统计窗口内往下第n行值

第一个参数为列名

第二个参数为往下第n行(可选,默认为1)

第三个参数为默认值(当往下第 n 行为 NULL 时候,取默认值,如不指定,则为 NULL)

select

userid,

create_time,

url,

row_number() over(partition by userid order by create_time) as rn,

lead(create_time, 1, '1970-01-01 00:00:00') over(partition by userid order by create_time) as next_1_time,

lead(create_time, 2) over(partition by userid order by create_time) as next_2_time

from tb_page_access;

rn:按照 userid 分组,create_time 排序后的排名

next_1_time

第一行:向下一行,即第二行的 create_time,即 2019-04-10 10:00:02

依次类推

最后一行:先下一行,没有数据,即为默认值 1970-01-01 00:00:00

next_2_time

第一行:向下两行,即第三行的 create_time,即 2019-04-10 10:03:04

依次类推

最后一行:向下两行,没有数据也没有设置默认值,即为 NULL

3.3 first_value

- 取分组内排序后,截止到当前行,第一个值

select

userid,

create_time,

url,

row_number() over(partition by userid order by create_time) as rn,

first_value(url) over(partition by userid order by create_time) as first_url

from tb_page_access;

查询结果

PS:理解,截止到当前行的含义,指的指,在分组排序后,从第一行到当前行之间的第一个值

3.4 last_value

- 取分组内排序后,截止到当前行,最后一个值

select

userid,

create_time,

url,

row_number() over(partition by userid order by create_time) as rn,

last_value(url) over(partition by userid order by create_time) as last_url

from tb_page_access;

查询结果

PS:理解,截止到当前行的含义,指的是,在分组排序后,从第一行到当前行之间中最后一个值

4 分析函数(OLAP)

grouping sets、grouping__id、cube、rollup

这几个分析函数通常用于OLAP中,不能累加,而且需要根据不同维度上钻和下钻的指标统计,比如,分小时、天、月的UV数

通常需要对各个维度进行交叉分析,如果只有GROUP BY子句,那我们可以写出按各个维度或层次进行GROUP BY的查询语句,然后再通过UNION子句把结果集拼凑起来,但是这样的查询语句显得冗长、笨拙。为了解决HQL冗长的问题。hive中提供了这几个函数来解决

创建表、加载数据

''' 数据

2019-03,2019-03-10,127.0.0.1

2019-03,2019-03-10,192.168.1.1

2019-03,2019-03-12,61.135.169.121

2019-04,2019-04-12,203.208.41.47

2019-04,2019-04-13,39.96.252.213

2019-04,2019-04-13,121.40.179.176

2019-04,2019-04-16,139.196.135.171

2019-03,2019-03-10,119.167.188.226

2019-03,2019-03-10,59.111.181.38:

2019-04,2019-04-12,192.168.1.1

2019-04,2019-04-13,203.208.41.47

2019-04,2019-04-15,121.40.179.176

2019-04,2019-04-15,39.96.252.213

2019-04,2019-04-16,59.111.181.38

'''

-- 创建表

create table tb_uv (

month string,

day string,

ip string

) row format delimited

fields terminated by ',';

-- 加载数据

load data local inpath '/Users/harvey/data/tb_uv.txt' overwrite into table tb_uv;

4.1 grouping sets

在一个GROUP BY查询中,根据不同的维度组合进行聚合,等价于将不同维度的GROUP BY结果集进行UNION ALL

select

month,

day,

count(distinct ip) as uv,

grouping__id

from tb_uv

group by month, day

grouping sets (month, day)

order by grouping__id;

-- sql 等价于

select month, NULL, count(distinct ip) as uv, 1 as grouping__id from tb_uv group by month

union all

select NULL, day, count(distinct ip) as uv, 2 as grouping__id from tb_uv group by day;

''' 查询结果

month day uv grouping__id

2019-04 NULL 6 1

2019-03 NULL 5 1

NULL 2019-04-16 2 2

NULL 2019-04-15 2 2

NULL 2019-04-13 3 2

NULL 2019-04-12 2 2

NULL 2019-03-12 1 2

NULL 2019-03-10 4 2

'''

select

month,

day,

count(distinct ip) as uv,

grouping__id

from tb_uv

group by month, day

grouping sets (month, day, (month, day))

order by grouping__id asc;

-- 等价于

select month, null, count(distinct ip) as uv, 1 as grouping__id from tb_uv group by month

union all

select null, day, count(distinct ip) as uv, 2 as grouping__id from tb_uv group by day

union all

select month, day, count(distinct ip) as uv, 3 as grouping__id from tb_uv group by month,day order by grouping__id asc;

''' 查询结果

month day uv grouping__id

2019-04 NULL 6 1

2019-03 NULL 5 1

NULL 2019-04-16 2 2

NULL 2019-04-15 2 2

NULL 2019-04-13 3 2

NULL 2019-04-12 2 2

NULL 2019-03-12 1 2

NULL 2019-03-10 4 2

2019-04 2019-04-16 2 3

2019-04 2019-04-15 2 3

2019-04 2019-04-13 3 3

2019-04 2019-04-12 2 3

2019-03 2019-03-12 1 3

2019-03 2019-03-10 4 3

'''

4.2 cube

根据GROUP BY的维度的所有组合进行聚合

select

month,

day,

count(distinct ip) as uv,

grouping__id

from tb_uv

group by month, day

with cube

order by grouping__id asc;

-- 等价于

select null,null,count(distinct ip) as uv,0 as grouping__id from tb_uv

union all

select month,null,count(distinct ip) as uv,1 as grouping__id from tb_uv group by month

union all

select null,day,count(distinct ip) as uv,2 as grouping__id from tb_uv group by day

union all

select month,day,count(distinct ip) as uv,3 as grouping__id from tb_uv group by month,day order by grouping__id asc;

''' 查询结果

month day uv grouping__id

2019-04 2019-04-16 2 0

2019-04 2019-04-15 2 0

2019-04 2019-04-13 3 0

2019-04 2019-04-12 2 0

2019-03 2019-03-12 1 0

2019-03 2019-03-10 4 0

2019-03 NULL 5 1

2019-04 NULL 6 1

NULL 2019-04-15 2 2

NULL 2019-04-13 3 2

NULL 2019-04-12 2 2

NULL 2019-03-12 1 2

NULL 2019-03-10 4 2

NULL 2019-04-16 2 2

NULL NULL 10 3

'''

4.3 rollup

rollup可以实现从右到做递减多级的统计,显示统计某一层次结构的聚合。

select month, day, count(distinct ip) as uv, grouping__id

from tb_uv

group by month, day

with rollup

order by grouping__id asc;

-- 等价于

select month, day, count(distinct ip) as uv, grouping__id

from tb_uv

group by month, day

grouping sets((month, day), month, ())

order by grouping__id asc;

-- 等价于

select month, day, count(distinct ip) as uv, 0 as grouping__id from tb_uv group by month, day

union all

select month, NULL, count(distinct ip) as uv, 1 as grouping__id from tb_uv group by month

union all

select NULL, NULL, count(distinct ip) as uv, 3 as grouping__id from tb_uv

order by grouping__id asc;

''' 查询结果

month day uv grouping__id

2019-03 2019-03-10 4 0

2019-03 2019-03-12 1 0

2019-04 2019-04-12 2 0

2019-04 2019-04-13 3 0

2019-04 2019-04-15 2 0

2019-04 2019-04-16 2 0

2019-03 NULL 5 1

2019-04 NULL 6 1

NULL NULL 10 3

'''

PS:这几个函数,理解起来相对较难,需要结合业务场景多去使用和研究。