HDFS Client Source Code

Contents

- DFSClient

- Constructor

- Files and directories

- Reads and input streams

The -text command ultimately ends up in the getInputStream method of the Display class:

protected void processPath(PathData item) throws IOException {

if (item.stat.isDirectory()) {

throw new PathIsDirectoryException(item.toString());

}

item.fs.setVerifyChecksum(verifyChecksum);

printToStdout(getInputStream(item)); // copy the input stream to stdout

}

...

// The input stream is obtained as follows

protected InputStream getInputStream(PathData item) throws IOException {

FSDataInputStream i = (FSDataInputStream)super.getInputStream(item);

// Handle 0 and 1-byte files

short leadBytes;

try {

leadBytes = i.readShort();

} catch (EOFException e) {

i.seek(0);

return i;

}

// Check type of stream first

switch(leadBytes) {

case 0x1f8b: { // RFC 1952

// Must be gzip

i.seek(0);

return new GZIPInputStream(i);

}

case 0x5345: { // 'S' 'E'

// Might be a SequenceFile

if (i.readByte() == 'Q') {

i.close();

return new TextRecordInputStream(item.stat);

}

}

default: {

// Check the type of compression instead, depending on Codec class's

// own detection methods, based on the provided path.

CompressionCodecFactory cf = new CompressionCodecFactory(getConf());

CompressionCodec codec = cf.getCodec(item.path);

if (codec != null) {

i.seek(0);

return codec.createInputStream(i);

}

break;

}

case 0x4f62: { // 'O' 'b'

if (i.readByte() == 'j') {

i.close();

return new AvroFileInputStream(item.stat);

}

break;

}

}

// File is non-compressed, or not a file container we know.

i.seek(0);

return i;

}
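The leading-byte check can be tried in isolation. The following is a minimal sketch, not Hadoop code: LeadBytesSniffer and its labels are hypothetical names, and the codec-by-file-extension fallback of the real method is left out, so anything unrecognized is simply reported as "unknown".

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical helper (not part of Hadoop): classifies data by its first bytes,
// mirroring the magic numbers used in the switch statement.
class LeadBytesSniffer {
  static String sniff(byte[] data) {
    DataInputStream in = new DataInputStream(new ByteArrayInputStream(data));
    try {
      // readShort is big-endian: the first byte becomes the high byte.
      short leadBytes = in.readShort();
      switch (leadBytes) {
        case 0x1f8b: // gzip magic number (RFC 1952)
          return "gzip";
        case 0x5345: // 'S' 'E', possibly followed by 'Q'
          return in.readByte() == 'Q' ? "sequencefile" : "unknown";
        case 0x4f62: // 'O' 'b', possibly followed by 'j'
          return in.readByte() == 'j' ? "avro" : "unknown";
        default:
          return "unknown";
      }
    } catch (EOFException e) {
      // Fewer bytes than needed, e.g. a 0- or 1-byte file.
      return "unknown";
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  public static void main(String[] args) {
    System.out.println(sniff(new byte[] {0x1f, (byte) 0x8b, 0x08}));
    System.out.println(sniff(new byte[] {'S', 'E', 'Q', '6'}));
    System.out.println(sniff(new byte[] {'x'}));
  }
}
```

In the real method the stream is rewound with seek(0) before it is handed to a decoder, because readShort has already consumed the first two bytes.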

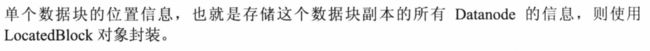

The super.getInputStream call inside Display.getInputStream opens the file through the file system, which eventually reaches DFSClient.open:

protected InputStream getInputStream(PathData item) throws IOException {

return item.fs.open(item.path);

}

Here fs is a DistributedFileSystem, whose dfs field is a DFSClient.

(Important) Next, step into the open method of DistributedFileSystem:

public FSDataInputStream open(Path f, final int bufferSize)

throws IOException {

statistics.incrementReadOps(1);

Path absF = fixRelativePart(f);

return new FileSystemLinkResolver<FSDataInputStream>() {

@Override

public FSDataInputStream doCall(final Path p)

throws IOException, UnresolvedLinkException {

final DFSInputStream dfsis =

dfs.open(getPathName(p), bufferSize, verifyChecksum);

return dfs.createWrappedInputStream(dfsis);

}

@Override

public FSDataInputStream next(final FileSystem fs, final Path p)

throws IOException {

return fs.open(p, bufferSize);

}

}.resolve(this, absF);

}

The anonymous FileSystemLinkResolver subclass overrides two methods, doCall and next.


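doCall obtains the stream object by calling dfs.open(). This dfs is the DFSClient held by the file system, and it in turn holds a ClientProtocol proxy that can communicate with the NameNode.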
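The division of labor between doCall and next can be illustrated with a toy model. This is a simplified sketch under stated assumptions, not Hadoop's FileSystemLinkResolver: LinkResolverSketch, UnresolvedLink, the "fs:path" link format, and the hop limit of 32 are all made up for illustration. The idea it shows is that doCall tries the operation on the current file system, and next takes over when a symlink points into a different file system.

```java
import java.util.Map;

// Toy model of the doCall/next pattern; every name here is hypothetical.
class LinkResolverSketch {
  // Signals that the path is a symlink; target has the form "fsName:path".
  static class UnresolvedLink extends Exception {
    final String target;
    UnresolvedLink(String target) {
      super(target);
      this.target = target;
    }
  }

  abstract static class Resolver<T> {
    // Attempt the operation on the current file system.
    abstract T doCall(String path) throws UnresolvedLink;

    // Continue the operation on another file system.
    abstract T next(String otherFs, String path);

    T resolve(String thisFs, String path) {
      String p = path;
      for (int hops = 0; hops < 32; hops++) {
        try {
          return doCall(p);
        } catch (UnresolvedLink e) {
          String[] parts = e.target.split(":", 2);
          if (!parts[0].equals(thisFs)) {
            return next(parts[0], parts[1]);
          }
          p = parts[1]; // same file system: retry with the link target
        }
      }
      throw new IllegalStateException("Too many symlink hops for " + path);
    }
  }

  // Models open(): links maps a symlink path to its "fsName:path" target.
  static String open(String thisFs, String path, Map<String, String> links) {
    return new Resolver<String>() {
      @Override
      String doCall(String p) throws UnresolvedLink {
        String target = links.get(p);
        if (target != null) {
          throw new UnresolvedLink(target);
        }
        return "opened " + thisFs + ":" + p;
      }

      @Override
      String next(String otherFs, String p) {
        return "delegated to " + otherFs + ":" + p;
      }
    }.resolve(thisFs, path);
  }
}
```

For a path without symlinks only doCall runs, which is the common case in the read path traced here.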

DFSClient.open:

public DFSInputStream open(String src, int buffersize, boolean verifyChecksum)

throws IOException, UnresolvedLinkException {

checkOpen();

// Get block info from namenode

TraceScope scope = getPathTraceScope("newDFSInputStream", src);

try {

return new DFSInputStream(this, src, verifyChecksum);

} finally {

scope.close();

}

}

DFSClient.open builds an input stream for the file through the DFSInputStream constructor.

Let us look at what the constructor does:

DFSInputStream(DFSClient dfsClient, String src, boolean verifyChecksum

) throws IOException, UnresolvedLinkException {

this.dfsClient = dfsClient;

this.verifyChecksum = verifyChecksum;

this.src = src;

synchronized (infoLock) {

this.cachingStrategy = dfsClient.getDefaultReadCachingStrategy();

}

openInfo();

}

void openInfo() throws IOException, UnresolvedLinkException {

synchronized(infoLock) {

// Fetch the block information for the file

lastBlockBeingWrittenLength = fetchLocatedBlocksAndGetLastBlockLength();

// Initialize the retry counter

int retriesForLastBlockLength = dfsClient.getConf().retryTimesForGetLastBlockLength;

// Retry while the length of the last block cannot be obtained

while (retriesForLastBlockLength > 0) {

// Getting last block length as -1 is a special case. When cluster

// restarts, DNs may not report immediately. At this time partial block

// locations will not be available with NN for getting the length. Lets

// retry for 3 times to get the length.

if (lastBlockBeingWrittenLength == -1) {

DFSClient.LOG.warn("Last block locations not available. "

+ "Datanodes might not have reported blocks completely."

+ " Will retry for " + retriesForLastBlockLength + " times");

waitFor(dfsClient.getConf().retryIntervalForGetLastBlockLength);

lastBlockBeingWrittenLength = fetchLocatedBlocksAndGetLastBlockLength();

} else {

break;

}

retriesForLastBlockLength--;

}

if (retriesForLastBlockLength == 0) {

throw new IOException("Could not obtain the last block locations.");

}

}

}
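The control flow of this retry loop is easier to follow in a stand-alone model. The sketch below is an assumption-laden reduction, not the Hadoop method: LastBlockLengthRetry and replay are hypothetical, the sleep between attempts is omitted, the length is returned instead of stored in a field, and an unchecked exception replaces IOException.

```java
import java.util.function.LongSupplier;

// Hypothetical model of the retry loop in openInfo().
class LastBlockLengthRetry {
  static long openInfo(LongSupplier fetch, int retries) {
    long length = fetch.getAsLong();
    while (retries > 0) {
      if (length == -1) {
        // The excerpt waits for a configured interval here before refetching.
        length = fetch.getAsLong();
      } else {
        break;
      }
      retries--;
    }
    if (retries == 0) {
      throw new IllegalStateException("Could not obtain the last block locations.");
    }
    return length;
  }

  // Test helper: returns the given values one after another.
  static LongSupplier replay(long... values) {
    int[] next = {0};
    return () -> values[next[0]++];
  }

  public static void main(String[] args) {
    // The first fetch fails with -1, the first retry succeeds.
    System.out.println(openInfo(replay(-1, 512), 3));
  }
}
```

Tracing the loop as it is written in the excerpt shows one subtlety: the counter also reaches 0 when the last permitted retry succeeds, so that case ends in the same exception as a complete failure.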

As shown above, openInfo calls fetchLocatedBlocksAndGetLastBlockLength to obtain the location information of the file's blocks. Its implementation follows; the inline comments mark the individual steps:

private long fetchLocatedBlocksAndGetLastBlockLength() throws IOException {

// Fetch the block locations of the file through ClientProtocol

final LocatedBlocks newInfo = dfsClient.getLocatedBlocks(src, 0);

if (DFSClient.LOG.isDebugEnabled()) {

DFSClient.LOG.debug("newInfo = " + newInfo);

}

if (newInfo == null) {

throw new IOException("Cannot open filename " + src);

}

// Compare the cached locatedBlocks field with the newly fetched locations

if (locatedBlocks != null) {

Iterator<LocatedBlock> oldIter = locatedBlocks.getLocatedBlocks().iterator();

Iterator<LocatedBlock> newIter = newInfo.getLocatedBlocks().iterator();

while (oldIter.hasNext() && newIter.hasNext()) {

// Throw an exception if the blocks do not match

if (! oldIter.next().getBlock().equals(newIter.next().getBlock())) {

throw new IOException("Blocklist for " + src + " has changed!");

}

}

}

// Update the locatedBlocks field

locatedBlocks = newInfo;

long lastBlockBeingWrittenLength = 0;

if (!locatedBlocks.isLastBlockComplete()) {

final LocatedBlock last = locatedBlocks.getLastLocatedBlock();

if (last != null) {

if (last.getLocations().length == 0) {

if (last.getBlockSize() == 0) {

// if the length is zero, then no data has been written to

// datanode. So no need to wait for the locations.

// If the last block's length is 0, no update is needed; return 0 directly

return 0;

}

return -1;

}

// Ask a DataNode for the block's length through ClientDatanodeProtocol

final long len = readBlockLength(last);

// Update the length of the last block stored in the locatedBlocks field

last.getBlock().setNumBytes(len);

lastBlockBeingWrittenLength = len;

}

}

return lastBlockBeingWrittenLength;

}
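The branch that handles the last block decides which value the method returns, and a return value of -1 is what makes openInfo retry. A compact sketch of that decision follows; LastBlockLengthDecision and its parameter names are hypothetical, and the case of a missing last block (which also yields 0) is left out.

```java
// Hypothetical model of the last-block branch; not Hadoop code.
class LastBlockLengthDecision {
  static final long RETRY_LATER = -1;

  static long decide(boolean lastBlockComplete, int locationCount,
                     long sizeKnownToNameNode, long lengthFromDataNode) {
    if (lastBlockComplete) {
      return 0; // nothing is being written, no extra length
    }
    if (locationCount == 0) {
      // No DataNode has reported the block yet.
      // Size 0 means nothing was written, so there is nothing to wait for.
      return sizeKnownToNameNode == 0 ? 0 : RETRY_LATER;
    }
    // Otherwise the visible length comes from a DataNode.
    return lengthFromDataNode;
  }

  public static void main(String[] args) {
    System.out.println(decide(false, 0, 1024, 0));  // locations missing: retry later
    System.out.println(decide(false, 3, 1024, 700)); // length reported by a DataNode
  }
}
```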

This method calls the following two methods:

- dfsClient.getLocatedBlocks: talks to the NameNode through ClientProtocol

- readBlockLength: talks to a DataNode through ClientDatanodeProtocol

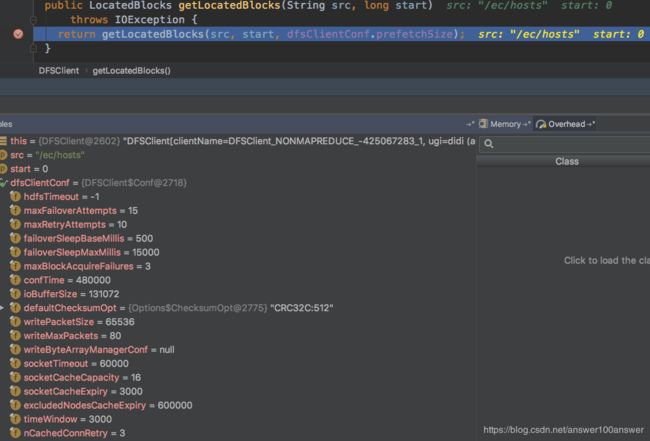

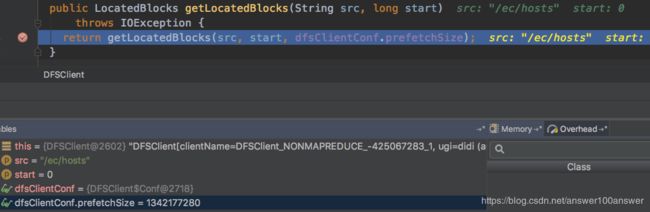

(1) dfsClient.getLocatedBlocks

public LocatedBlocks getLocatedBlocks(String src, long start)

throws IOException {

return getLocatedBlocks(src, start, dfsClientConf.prefetchSize);

}

public LocatedBlocks getLocatedBlocks(String src, long start, long length)

throws IOException {

TraceScope scope = getPathTraceScope("getBlockLocations", src);

try {

return callGetBlockLocations(namenode, src, start, length);

} finally {

scope.close();

}

}

static LocatedBlocks callGetBlockLocations(ClientProtocol namenode,

String src, long start, long length)

throws IOException {

try {

return namenode.getBlockLocations(src, start, length);

} catch(RemoteException re) {

throw re.unwrapRemoteException(AccessControlException.class,

FileNotFoundException.class,

UnresolvedPathException.class);

}

}
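The unwrapRemoteException call in the catch block turns a generic RPC error back into a specific local exception type when the remote class name matches one of the listed classes. The following sketch models that idea with a toy class; ToyRemoteException is hypothetical and only approximates the behavior of Hadoop's RemoteException.

```java
import java.io.FileNotFoundException;
import java.io.IOException;

// Toy model of exception unwrapping; names are illustrative only.
class RemoteExceptionSketch {
  // Carries the remote exception's class name and message as plain strings.
  static class ToyRemoteException extends IOException {
    final String className;

    ToyRemoteException(String className, String message) {
      super(message);
      this.className = className;
    }

    // Returns an instance of the matching lookup type, or this if none matches.
    IOException unwrap(Class<?>... lookupTypes) {
      for (Class<?> type : lookupTypes) {
        if (!type.getName().equals(className)) {
          continue;
        }
        try {
          return type.asSubclass(IOException.class)
              .getConstructor(String.class)
              .newInstance(getMessage());
        } catch (ReflectiveOperationException | ClassCastException e) {
          return this; // cannot rebuild the exception locally
        }
      }
      return this;
    }
  }

  public static void main(String[] args) {
    IOException e = new ToyRemoteException("java.io.FileNotFoundException", "/missing")
        .unwrap(FileNotFoundException.class);
    System.out.println(e.getClass().getName() + ": " + e.getMessage());
  }
}
```

With this pattern, callers can catch FileNotFoundException or AccessControlException directly instead of inspecting a wrapped error.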

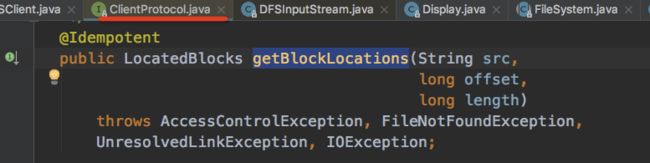

This leads into the getBlockLocations method of ClientProtocol.

(2) readBlockLength

DFSClient

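Operations on the directory tree, such as mkdirs and delete, are handled by invoking the corresponding NameNode methods over RPC. Compared with the input and output streams, this part is somewhat simpler.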

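Constructor

The constructor has two main tasks:

- Read the configuration and initialize the member variables

- Establish the IPC connection to the NameNode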

/**

* Create a new DFSClient connected to the given nameNodeUri or rpcNamenode.

* If HA is enabled and a positive value is set for

* {@link DFSConfigKeys#DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_KEY} in the

* configuration, the DFSClient will use {@link LossyRetryInvocationHandler}

* as its RetryInvocationHandler. Otherwise one of nameNodeUri or rpcNamenode

* must be null.

*/

@VisibleForTesting

public DFSClient(URI nameNodeUri, ClientProtocol rpcNamenode,

Configuration conf, FileSystem.Statistics stats)

throws IOException {

SpanReceiverHost.get(conf, DFSConfigKeys.DFS_CLIENT_HTRACE_PREFIX);

traceSampler = new SamplerBuilder(TraceUtils.

wrapHadoopConf(DFSConfigKeys.DFS_CLIENT_HTRACE_PREFIX, conf)).build();

// Copy only the required DFSClient configuration

this.dfsClientConf = new Conf(conf);

if (this.dfsClientConf.useLegacyBlockReaderLocal) {

LOG.debug("Using legacy short-circuit local reads.");

}

this.conf = conf;

this.stats = stats;

this.socketFactory = NetUtils.getSocketFactory(conf, ClientProtocol.class);

this.dtpReplaceDatanodeOnFailure = ReplaceDatanodeOnFailure.get(conf);

this.ugi = UserGroupInformation.getCurrentUser();

this.authority = nameNodeUri == null? "null": nameNodeUri.getAuthority();

this.clientName = "DFSClient_" + dfsClientConf.taskId + "_" +

DFSUtil.getRandom().nextInt() + "_" + Thread.currentThread().getId();

int numResponseToDrop = conf.getInt(

DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_KEY,

DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_DEFAULT);

NameNodeProxies.ProxyAndInfo<ClientProtocol> proxyInfo = null;

AtomicBoolean nnFallbackToSimpleAuth = new AtomicBoolean(false);

if (numResponseToDrop > 0) {

// This case is used for testing.

LOG.warn(DFSConfigKeys.DFS_CLIENT_TEST_DROP_NAMENODE_RESPONSE_NUM_KEY

+ " is set to " + numResponseToDrop

+ ", this hacked client will proactively drop responses");

proxyInfo = NameNodeProxies.createProxyWithLossyRetryHandler(conf,

nameNodeUri, ClientProtocol.class, numResponseToDrop,

nnFallbackToSimpleAuth);

}

if (proxyInfo != null) {

this.dtService = proxyInfo.getDelegationTokenService();

this.namenode = proxyInfo.getProxy();

} else if (rpcNamenode != null) {

// This case is used for testing.

Preconditions.checkArgument(nameNodeUri == null);

this.namenode = rpcNamenode;

dtService = null;

} else {

Preconditions.checkArgument(nameNodeUri != null,

"null URI");

proxyInfo = NameNodeProxies.createProxy(conf, nameNodeUri,

ClientProtocol.class, nnFallbackToSimpleAuth);

this.dtService = proxyInfo.getDelegationTokenService();

this.namenode = proxyInfo.getProxy();

}

String localInterfaces[] =

conf.getTrimmedStrings(DFSConfigKeys.DFS_CLIENT_LOCAL_INTERFACES);

localInterfaceAddrs = getLocalInterfaceAddrs(localInterfaces);

if (LOG.isDebugEnabled() && 0 != localInterfaces.length) {

LOG.debug("Using local interfaces [" +

Joiner.on(',').join(localInterfaces)+ "] with addresses [" +

Joiner.on(',').join(localInterfaceAddrs) + "]");

}

Boolean readDropBehind = (conf.get(DFS_CLIENT_CACHE_DROP_BEHIND_READS) == null) ?

null : conf.getBoolean(DFS_CLIENT_CACHE_DROP_BEHIND_READS, false);

Long readahead = (conf.get(DFS_CLIENT_CACHE_READAHEAD) == null) ?

null : conf.getLong(DFS_CLIENT_CACHE_READAHEAD, 0);

Boolean writeDropBehind = (conf.get(DFS_CLIENT_CACHE_DROP_BEHIND_WRITES) == null) ?

null : conf.getBoolean(DFS_CLIENT_CACHE_DROP_BEHIND_WRITES, false);

this.defaultReadCachingStrategy =

new CachingStrategy(readDropBehind, readahead);

this.defaultWriteCachingStrategy =

new CachingStrategy(writeDropBehind, readahead);

this.clientContext = ClientContext.get(

conf.get(DFS_CLIENT_CONTEXT, DFS_CLIENT_CONTEXT_DEFAULT),

dfsClientConf);

this.hedgedReadThresholdMillis = conf.getLong(

DFSConfigKeys.DFS_DFSCLIENT_HEDGED_READ_THRESHOLD_MILLIS,

DFSConfigKeys.DEFAULT_DFSCLIENT_HEDGED_READ_THRESHOLD_MILLIS);

int numThreads = conf.getInt(

DFSConfigKeys.DFS_DFSCLIENT_HEDGED_READ_THREADPOOL_SIZE,

DFSConfigKeys.DEFAULT_DFSCLIENT_HEDGED_READ_THREADPOOL_SIZE);

if (numThreads > 0) {

this.initThreadsNumForHedgedReads(numThreads);

}

this.saslClient = new SaslDataTransferClient(

conf, DataTransferSaslUtil.getSaslPropertiesResolver(conf),

TrustedChannelResolver.getInstance(conf), nnFallbackToSimpleAuth);

}

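The constructor loads the configuration, sets up the network-related parameters, and establishes the IPC connection to the NameNode. The connection is set up in this part: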

if (proxyInfo != null) {

this.dtService = proxyInfo.getDelegationTokenService();

this.namenode = proxyInfo.getProxy();

} else if (rpcNamenode != null) {

// This case is used for testing.

Preconditions.checkArgument(nameNodeUri == null);

this.namenode = rpcNamenode;

dtService = null;

} else {

Preconditions.checkArgument(nameNodeUri != null,

"null URI");

proxyInfo = NameNodeProxies.createProxy(conf, nameNodeUri,

ClientProtocol.class, nnFallbackToSimpleAuth);

this.dtService = proxyInfo.getDelegationTokenService();

this.namenode = proxyInfo.getProxy();

}
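The three ways the constructor can end up with a NameNode proxy can be summarized in a small model. This is a sketch with hypothetical names (NamenodeProxySelection, plain strings in place of URI and ClientProtocol); it only mirrors the branching and the argument checks, not the proxy creation itself.

```java
// Hypothetical model of the proxy selection in the DFSClient constructor.
class NamenodeProxySelection {
  static String select(int numResponseToDrop, String nameNodeUri, String rpcNamenode) {
    if (numResponseToDrop > 0) {
      // Test-only path: a proxy that deliberately drops responses.
      return "lossy proxy for " + nameNodeUri;
    }
    if (rpcNamenode != null) {
      // Test-only path: the caller injects a ready-made proxy.
      if (nameNodeUri != null) {
        throw new IllegalArgumentException("nameNodeUri must be null");
      }
      return "injected " + rpcNamenode;
    }
    // Normal path: create a proxy from the NameNode URI.
    if (nameNodeUri == null) {
      throw new IllegalArgumentException("null URI");
    }
    return "proxy for " + nameNodeUri;
  }

  public static void main(String[] args) {
    System.out.println(select(0, "hdfs://nn:8020", null));
  }
}
```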

Once the DFSClient constructor returns successfully, the object can be used to perform operations on HDFS.

DFSClient.close shuts the client down.

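Files and directories

These operations do not need to talk to DataNodes.

DFSClient.java also contains the typical directory-tree operations, such as mkdirs(), delete(), getFileInfo(), and setOwner(). For example: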

void checkOpen() throws IOException {

if (!clientRunning) {

IOException result = new IOException("Filesystem closed");

throw result;

}

}

// DFSClient.delete calls the NameNode's delete directly over RPC

public boolean delete(String src, boolean recursive) throws IOException {

checkOpen();

TraceScope scope = getPathTraceScope("delete", src);

try {

return namenode.delete(src, recursive);

} catch(RemoteException re) {

throw re.unwrapRemoteException(AccessControlException.class,

FileNotFoundException.class,

SafeModeException.class,

UnresolvedPathException.class,

SnapshotAccessControlException.class);

} finally {

scope.close();

}

}

...

// DFSClient.getFileInfo likewise calls the NameNode's getFileInfo directly

public HdfsFileStatus getFileInfo(String src) throws IOException {

checkOpen();

TraceScope scope = getPathTraceScope("getFileInfo", src);

try {

return namenode.getFileInfo(src);

} catch(RemoteException re) {

throw re.unwrapRemoteException(AccessControlException.class,

FileNotFoundException.class,

UnresolvedPathException.class);

} finally {

scope.close();

}

}

mkdirs first computes the masked permission and then makes the RPC call in the same way:

// mkdirs in DFSClient

public boolean mkdirs(String src, FsPermission permission,

boolean createParent) throws IOException {

if (permission == null) {

permission = FsPermission.getDefault();

}

FsPermission masked = permission.applyUMask(dfsClientConf.uMask);

return primitiveMkdir(src, masked, createParent);

}

...

public boolean primitiveMkdir(String src, FsPermission absPermission,

boolean createParent)

throws IOException {

checkOpen();

if (absPermission == null) {

absPermission =

FsPermission.getDefault().applyUMask(dfsClientConf.uMask);

}

if(LOG.isDebugEnabled()) {

LOG.debug(src + ": masked=" + absPermission);

}

TraceScope scope = Trace.startSpan("mkdir", traceSampler);

try {

return namenode.mkdirs(src, absPermission, createParent);

} catch(RemoteException re) {

throw re.unwrapRemoteException(AccessControlException.class,

InvalidPathException.class,

FileAlreadyExistsException.class,

FileNotFoundException.class,

ParentNotDirectoryException.class,

SafeModeException.class,

NSQuotaExceededException.class,

DSQuotaExceededException.class,

UnresolvedPathException.class,

SnapshotAccessControlException.class);

} finally {

scope.close();

}

}
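The masked permission passed to the NameNode is the requested permission with the umask bits cleared. As a rough illustration with plain integers (UMaskSketch is a hypothetical stand-in and does not use FsPermission), the computation looks like this:

```java
// Hypothetical integer model of applying a umask to a permission.
class UMaskSketch {
  // Clears every permission bit that is set in the umask.
  static int applyUMask(int permission, int umask) {
    return permission & ~umask & 0777;
  }

  // Formats a mode as three octal digits, e.g. 493 -> "755".
  static String toOctal(int mode) {
    return String.format("%03o", mode);
  }

  public static void main(String[] args) {
    System.out.println(toOctal(applyUMask(0777, 022)));
    System.out.println(toOctal(applyUMask(0666, 022)));
  }
}
```

For example, a requested mode of 0777 with a umask of 022 results in 0755, so the group and other write bits are removed before the directory is created.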

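Summary:

- The DFSClient methods that operate on the directory tree and on files first call checkOpen to verify the client's state and then invoke the corresponding NameNode method over RPC.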
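The guard-then-delegate shape described in the summary can be reduced to a few lines. The following is a minimal sketch with made-up types (ToyNamenode and ToyClient are hypothetical, and tracing and exception unwrapping are omitted); it only shows that every operation checks the running flag before forwarding the call.

```java
import java.io.IOException;

// Toy model of the checkOpen-then-RPC pattern; names are illustrative.
class GuardedClientSketch {
  // Stand-in for the RPC interface to the NameNode.
  interface ToyNamenode {
    boolean delete(String src, boolean recursive) throws IOException;
  }

  static class ToyClient implements AutoCloseable {
    private volatile boolean clientRunning = true;
    private final ToyNamenode namenode;

    ToyClient(ToyNamenode namenode) {
      this.namenode = namenode;
    }

    void checkOpen() throws IOException {
      if (!clientRunning) {
        throw new IOException("Filesystem closed");
      }
    }

    boolean delete(String src, boolean recursive) throws IOException {
      checkOpen();                            // 1. verify the client state
      return namenode.delete(src, recursive); // 2. forward to the NameNode
    }

    @Override
    public void close() {
      clientRunning = false;
    }
  }

  public static void main(String[] args) throws IOException {
    ToyClient client = new ToyClient((src, recursive) -> true);
    System.out.println(client.delete("/tmp/demo", false));
    client.close();
  }
}
```

After close() every further call fails fast in checkOpen, without contacting the NameNode.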

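Reads and input streams

Input and output streams are the most complex part of DFSClient: they must communicate with the NameNode and also access DataNodes. Of the two, the input stream is the simpler one.

For reading data, the NameNode provides two remote methods: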

- getBlockLocations()

- reportBadBlocks()