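- Scrapy crawler login with undetected_chromedriver keeps failing

叨叨爱码字

scrapy crawler

Scrapy is an excellent crawling framework, but it does not natively support undetected_chromedriver. undetected_chromedriver is a Python library that can be used to get around websites' detection of Selenium WebDriver, but it is not part of Scrapy. If you want to use undetected_chromedriver in Scrapy, you have to write your own middleware for it. This may require some extra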

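- Python crawler --- saving scraped data to MongoDB

他是只猫

Learning Python crawling and practice python mongodb database

A Python Scrapy crawler blog post; this article mainly adds how to save the scraped data. For the Scrapy crawling workflow, see this post: https://blog.csdn.net/suwuzs/article/details/118091474 Below is the code written in the pipelines.py file. 1. Processing the items: the MyspiderPipeline class processes the scraped data and abbreviates longer values. import pymon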
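The excerpt says MyspiderPipeline shortens long values before they are stored. Here is a minimal sketch of that step. It assumes dict-like items and a hypothetical 50-character limit. The class name `TruncateLongFieldsPipeline` is made up, and the article's pymongo storage code is not shown. Scrapy calls `process_item(item, spider)` on each enabled pipeline and passes the returned item to the next one.

```python
class TruncateLongFieldsPipeline:
    """Item pipeline sketch: shorten long string fields before storage.

    MAX_LEN is an assumed cut-off; tune it for your data.
    """

    MAX_LEN = 50

    def process_item(self, item, spider):
        for key, value in list(item.items()):
            if isinstance(value, str) and len(value) > self.MAX_LEN:
                # Keep the first MAX_LEN characters and mark the cut with "..."
                item[key] = value[: self.MAX_LEN] + "..."
        # Returning the item hands it to the next pipeline (e.g. a MongoDB writer)
        return item
```

To enable it, add something like `ITEM_PIPELINES = {"myspider.pipelines.TruncateLongFieldsPipeline": 300}` to settings.py (the module path is an assumption about the project layout). Lower numbers run first, so a MongoDB pipeline registered with a higher number would receive the shortened item.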

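- Solutions to Scrapy crawler timeout problems

杨胜增

scrapy crawler

Solutions to Scrapy crawler timeout problems. When developing web crawlers with Scrapy you run into all kinds of problems, and timeouts are among the most common. A timeout keeps the crawler from fetching data normally and hurts its efficiency and stability. This article explains in detail what causes Scrapy timeouts and how to solve them. Problem description: when running a Scrapy crawler, you may see an error message like this: twisted.internet.error.TimeoutError: User timeout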
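The excerpt stops before the fixes. A common starting point is to tune the download-timeout and retry settings in settings.py. This is a sketch, not the article's exact solution, and the numbers are assumptions to adjust per site:

```python
# settings.py (sketch): values are assumptions, tune them per target site.

# Give up on a single download after 30 s instead of Scrapy's default 180 s.
DOWNLOAD_TIMEOUT = 30

# Retry failed requests; timeouts are among the errors RetryMiddleware retries.
RETRY_ENABLED = True
RETRY_TIMES = 3  # extra attempts per request, on top of the first try

# Fewer parallel requests and a small delay reduce server-side throttling,
# a frequent cause of timeouts.
CONCURRENT_REQUESTS = 8
DOWNLOAD_DELAY = 0.5
```

A single slow endpoint can get its own limit with `scrapy.Request(url, meta={"download_timeout": 60})`, which avoids raising the global value for every request.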

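- 4. Writing your first Scrapy spider

杨胜增

scrapy crawler c++

4. Writing your first Scrapy spider. In this article we start writing a simple Scrapy spider to help you understand how to scrape data from a website. Using a practical example, we show how to create a Scrapy spider, get information from the target page, and save it locally. 4.1 The basic parts of a Scrapy spider. A Scrapy spider is simple in structure and usually contains these key parts: name: the spider's name, used to identify it at run time. start_urls: the list of starting URLs; the spider starts from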

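- 11. Monitoring and log management for Scrapy crawlers: ensuring stable operation

杨胜增

scrapy crawler

11. Monitoring and log management for Scrapy crawlers: ensuring stable operation. In large-scale crawler deployments, it becomes crucial to monitor the crawler's running state efficiently and to find and fix potential problems promptly. Scrapy provides flexible logging and can also integrate with external tools for real-time monitoring and alerting. This article explores how to use Scrapy's built-in logging to track a crawler's status and debug problems, and how to integrate external monitoring tools to improve stability and maintainability. 11.1 Scrapy's built-in logging Sc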
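Scrapy logs through Python's standard `logging` module, and a spider's `self.logger` writes to a logger named after the spider. The sketch below shows one way to hook monitoring into that: a custom handler counts error records and raises an alert once a threshold is reached. It assumes a spider named `books` and a made-up threshold, and the `print` call stands in for a real notification channel such as mail or a webhook.

```python
import logging


class ErrorCountingHandler(logging.Handler):
    """Counts records at ERROR or above and fires an alert at a threshold."""

    def __init__(self, threshold=5):
        super().__init__(level=logging.ERROR)  # ignore anything below ERROR
        self.threshold = threshold  # hypothetical alert threshold
        self.count = 0

    def emit(self, record):
        self.count += 1
        if self.count == self.threshold:
            # Placeholder for a real alert (mail, webhook, ...)
            print(f"ALERT: {self.count} errors logged by {record.name}")


# A spider's self.logger uses the logger named after spider.name ("books" is assumed).
logger = logging.getLogger("books")
handler = ErrorCountingHandler(threshold=2)
logger.addHandler(handler)
```

An external monitor could also poll `handler.count` periodically instead of waiting for the threshold alert.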

- Crawlers: advanced Scrapy - CrawlSpider, Rule

吃猫的鱼python

crawler python data mining scrapy

This article is suitable for anyone interested in the topic. Contents: Adding CrawlSpider to Scrapy; Creating the project; Extractors and rules (Rule); Scrapy crawler in practice; Analyzing the website; Code: 1. settings 2. starts 3. items 4. the important lyw_spider 5. pipelines. Adding Cra

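- Scraping WeChat mini-program data with Python, scraping mini-program data with Python

2301_81900439

front end

Hello everyone, here I answer the following questions: scraping WeChat mini-program data with Python, scraping mini-program data with Python. Let's take a look! Python crawler series: a hands-on WeChat mini-program project that scrapes mini-program data with the Scrapy framework. First you need to install a packet-capture tool; Charles is recommended here, and I may publish an example of how to use it later when I have time. One of the most important steps is analyzing the APIs: work out what each endpoint does, connect them into a chain of calls, and then use the Spider's callback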

- Crawling Douban with Python Scrapy_Python crawler framework Scrapy: hands-on Douban project

weixin_39745724

Scrapy's official description is "An open source and collaborative framework for extracting the data you need from websites. In a fast, simple, yet extensible way." Environment setup: this project uses the following environment and tools: python3 scrapy mongodb py

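- Job analysis website

MA木易YA

Analyzes and displays the data previously collected from Lagou (拉勾网). Overview: 1. The project uses the Django framework for the site architecture and combines the crawler, ECharts charts, wordcloud, etc. to analyze and display job information. 2. The data comes from Lagou, collected with the Scrapy crawler framework, for learning purposes only. 3. The pages are designed with Amaze UI | HTML5 cross-screen front-end framework. The user part is the same as in the earlier blog and book websites: it uses Django's built-in authentication together with Ajax and email for login and registration, and the code for this part can be mov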

- Scraping book website information with a Scrapy crawler (Part 2)

无情Array

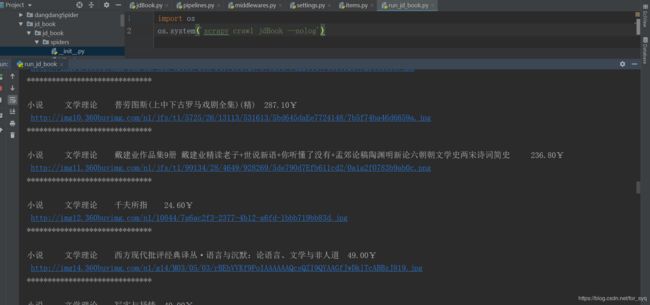

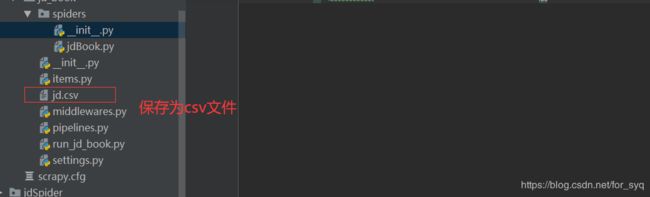

Python language Scrapy crawler python

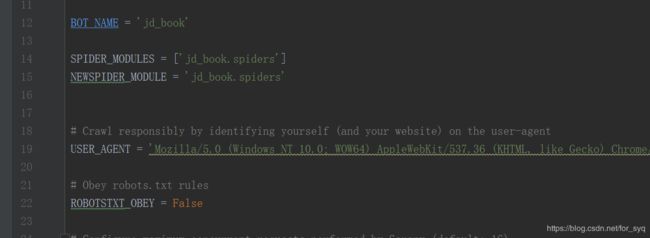

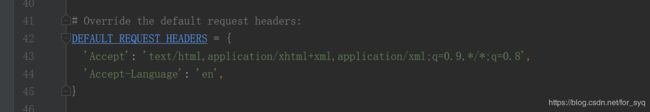

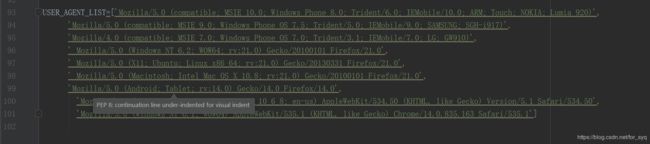

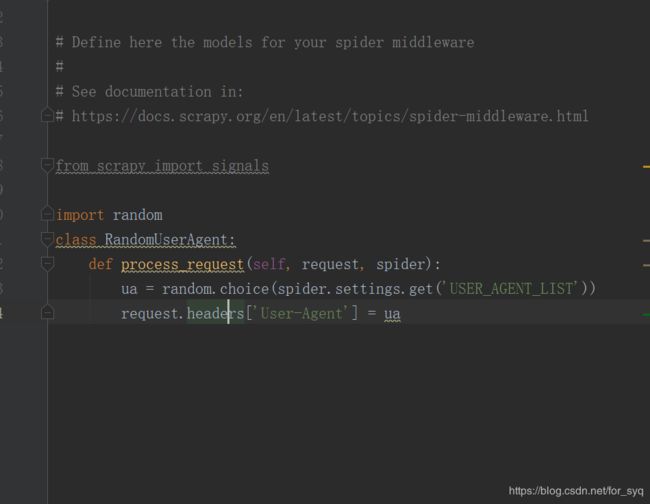

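In the previous part we saw how to find the relevant information in a page's source code; next comes the crawling itself: 1. First create a Scrapy project named toscrape_book, then create the Spider file and the Spider class as follows: the whole Scrapy project lives in the pycodes folder on drive D, and a spider file named books is created in the spider folder inside it. 2. Before implementing the Spider, first define the Item class that wraps the book information, in toscra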

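- Python campus public-opinion analysis system: visualization, sentiment analysis, naive Bayes classification, crawler, big data, graduation project (source code) ✅

vx_biyesheji0001

graduation project biyesheji0001 biyesheji0002 python classification crawler graduation project Bayesian algorithm public-opinion analysis sentiment analysis

Graduation projects: a roundup of 2023-2024 computer science graduation project topics (worth bookmarking). Graduation projects: the latest and most complete 2023-2024 computer science topic recommendations. If you are interested, bookmark this first, and like and follow so you don't lose it. For any questions about topic selection, projects or thesis writing, feel free to leave me a message; I hope to help everyone graduate smoothly. 1. Project introduction. Tech stack: Python, Django framework, database, ECharts visualization, Scrapy crawler technology, HTML, naive Bayes classification algorithm (sentiment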

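- Python crawler series (1) ----> A detailed explanation of re regular expressions (with demo code)

万物都可def

python crawler python crawler mysql

A detailed explanation of re regular expressions. Contents: A detailed explanation of re regular expressions; Preface; 4. re regular expressions (1) re matching modes (2) using re.search (3) using re.findall() (4) using re.sub(); Conclusion. Preface: Hello everyone, today I'm starting the Python crawler series. I will cover several ways of parsing data one after another, such as re regular expressions, BeautifulSoup, XPath and lxml, as well as Selenium automation and the Scrapy crawler framework. I will also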
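The four topics in that table of contents can be tried on a short made-up string. `re.search` returns the first match, `re.findall` returns all matches, `re.sub` replaces them, and flags such as `re.IGNORECASE` change the matching mode:

```python
import re

text = "Price: 12 yuan, Stock: 340, Sold: 7"

# re.search returns a Match object for the first match (or None)
first = re.search(r"\d+", text)
print(first.group())  # 12

# re.findall returns every non-overlapping match as a list of strings
numbers = re.findall(r"\d+", text)
print(numbers)  # ['12', '340', '7']

# re.sub replaces every match; here each number becomes "N"
masked = re.sub(r"\d+", "N", text)
print(masked)  # Price: N yuan, Stock: N, Sold: N

# A flag changes the matching mode, e.g. case-insensitive matching
print(re.findall(r"stock", text, flags=re.IGNORECASE))  # ['Stock']
```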

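- Big-data graduation project: Python new-energy vehicle data analysis and visualization system, Django framework, Vue framework, Scrapy crawler, ECharts visualization, Dongchedi (懂车帝) (source code) ✅

源码之家

biyesheji0001 biyesheji0002 graduation project python big data graduation project new energy new-energy vehicles crawler Dongchedi

About the author: ✌ 100k+ followers across platforms, formerly a software engineer at a major internet company, founded a studio that brings together master's and PhD graduates. We have focused on computer science graduation projects for six years; choosing us means choosing peace of mind and a safe graduation ✌ If you are interested, bookmark this first, and like and follow so you don't lose it ✌ Graduation projects: 1000 computer science graduation projects for 2023-2024 (worth bookmarking) Graduation projects: the latest and most complete 2023-2024 computer science graduation project topics 1. Project introduction. Tech stack: Python, Django framework, MySQL data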

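- Signature of method 'XXXX.parse()' does not match signature of the base method in class 'Spider'

不当王多鱼不改名

scrapy python

"Signature of method 'XXXX.parse()' does not match signature of the base method in class 'Spider'" is a small issue with the Scrapy framework in which parse gets highlighted in the editor. Problem description: when using the skeleton files that Scrapy generates by default, I got Signature of method 'XXXX.parse()' does not match signatur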
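In recent Scrapy versions the base method is declared as `parse(self, response, **kwargs)`, so PyCharm flags an override that omits `**kwargs`. The sketch below shows a matching override. It uses a stand-in `BaseSpider` class (hypothetical, so the example runs without Scrapy installed); in a real project the spider would subclass `scrapy.Spider` directly.

```python
class BaseSpider:
    """Stand-in for scrapy.Spider, whose parse is declared as parse(self, response, **kwargs)."""

    def parse(self, response, **kwargs):
        raise NotImplementedError


class BooksSpider(BaseSpider):
    name = "books"  # hypothetical spider name

    # Keeping **kwargs makes the override match the base signature,
    # which is what clears the PyCharm inspection warning.
    def parse(self, response, **kwargs):
        # Placeholder body: a real spider would yield items or follow-up requests.
        return {"response": response, "extra": kwargs}
```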

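- Step by step: scraping food-forum data with the Scrapy framework and storing it in a database

傻啦嘿哟

All about Python oracle database

Contents: 1. Introduction 2. About Scrapy 3. Environment setup 4. Creating a Scrapy project 5. Creating a Spider 6. Data extraction 7. Data storage 8. Running the crawler 9. Data analysis and visualization; Summary. 1. Introduction: as the internet spreads, the amount of information online keeps growing. Food forums are platforms that gather a great deal of food-industry information and user comments. To obtain this valuable information, we use the Scrapy framework to crawl food-forum data and store it in a database. In this article, through detailed steps and code, we will guide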

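- Choosing and using Python crawler frameworks: several common, efficient frameworks recommended

小文没烦恼

python programming language regular expressions crawler network

Contents: Preface 1. The Scrapy framework 1. Installing Scrapy 2. Scrapy example code 3. Running a Scrapy crawler 2. The BeautifulSoup library 1. Installing BeautifulSoup 2. BeautifulSoup example code 3. Running the BeautifulSoup code 3. The Requests library 1. Installing Requests 2. Requests example code 3. Running the Requests code; Summary. Preface: with the explosive growth of online data, crawlers have become a way of obtaining and processing data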

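- Using a Scrapy crawler to extract news data

一勺菠萝丶

scrapy crawler

Scrapy is a powerful crawling framework widely used to extract structured data from websites. The code below is an example of a Scrapy spider that extracts news data from a news website and groups it. Use case: in news analysis and content aggregation, collecting and organizing news data is a common need. For example, if we want to give users news updates grouped by date, or analyze news trends over a specific period, this code is a good fit. Page screenshot; structure screenshot; code with comments and explanation: # The Scrapy spider's parse method, used to process the response and extract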
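The article's spider code is not part of this excerpt. The sketch below covers only the grouping step. It assumes each scraped item is a dict with `date` (ISO `YYYY-MM-DD`) and `title` fields, and `group_news_by_date` is a hypothetical name:

```python
from collections import defaultdict


def group_news_by_date(items):
    """Group news dicts by their 'date' field, keeping page order within each day."""
    grouped = defaultdict(list)
    for item in items:
        grouped[item["date"]].append(item["title"])
    # ISO dates sort correctly as strings; reverse=True puts the newest day first.
    return dict(sorted(grouped.items(), reverse=True))
```

Inside a spider, `parse` would build the item dicts from selectors and hand the list to this function, or the grouping could run in a pipeline after the crawl.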

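- [Repost] A SQLite database created in PyCharm does not show its table structure

carebon

I'm new to Python. I had reached the part about storing Scrapy crawler data in a database and was following a video course online when I ran into this problem: after creating the database file, I dragged the .sqlite file into the Database panel, and the table structure was not displayed. After some research I found the solution. I'm a Python beginner, this is just how I solved one problem I ran into while learning, and I hope it helps. Original link: h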

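- Computer science graduation project: Python-based car data collection, analysis and visualization system + crawler + Django framework

q_3375686806

graduation project biyesheji0002 biyesheji0001 python course project cars crawler django

[Graduation projects] The latest and most complete 2023-2024 computer science graduation project topics. If you are interested, bookmark this first; for any questions about topic selection, projects or thesis writing, feel free to leave me a message; I hope to help more people. Project description 1. Introduction: this car information website is a comprehensive car information display and query system built on several technologies and frameworks. It uses the Python Django framework and Scrapy crawler technology to fetch and process data, MySQL for data storage and management, and Vue3,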

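- Scrapy crawler summary

Cool_Pepsi

big data crawler

Contents: 1. Scrapy 1. Overview 2. Workflow 3. Commands for creating a crawler 2. Selenium 1. Overview 2. Python + Selenium WebDriver 2.1 Basic usage 2.2 Pros and cons 2.3 Launching a normal browser bound to a port 2.4 Combining Scrapy with Selenium 3. Multithreading 1. Lock-based producer-consumer pattern 2. Condition-based producer-consumer pattern 3. Queue, a thread-safe queue 4. Downloading Budejie (百思不得姐) jokes with multiple threads 5. Multithreading in Scrapy 4. Distrib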

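- 【头歌】 Data analysis and practice - python - web crawlers - Scrapy basics - web page data parsing - requests crawler - JSON basics

くらんゆうき

【头歌】 data analysis and practice answers, data analysis, python, crawler

【头歌】 Data analysis and practice - python - web crawlers - Scrapy basics - web page data parsing - requests crawler - JSON basics. First steps with Pandas. Level 1: scraping a web page's table; Level 2: scraping a specified cell of the table; Level 3: saving cell contents to a list and sorting it; Level 4: scraping the content of div tags; Level 5: scraping multiple div tags on one page; Level 6: scraping multiple div tags across multiple pages. Scrapy basics: Level 1: installing Scrapy and creating a project; Level 2: Scrap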

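- Deploying a Scrapy crawler on CentOS 7 (including scrapy_splash) 2019-03-10

_好孩子

1. Set up the Python environment; see "Installing python3 (CentOS)" for details. 2. Install Docker: yum install -y docker 3. Configure a domestic registry mirror: go to the Docker configuration directory (/etc/docker/ by default) and edit daemon.json there: vim /etc/docker/daemon.json Write the following: {"registry-mirrors":["https://kfwkfulq.mirr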

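- A Python-based car information scraping and visual analysis system

沐知全栈开发

python programming language

Introduction: this car information website is a comprehensive car information display and query system built on several technologies and frameworks. It uses the Python Django framework and Scrapy crawler technology to fetch and process data, MySQL for data storage and management, and front-end technologies such as Vue3, Element-Plus, ECharts and Pinia for rich data visualization and user interaction. The system mainly contains the following modules: Scrapy crawler: uses the Scrapy framework to scrape "Dongchedi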

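- Scrapy crawler in practice

氏族归来

crawler scrapy crawler

Scrapy crawler in practice. Contents: Introduction to Scrapy; Main features; Example code; Installing Scrapy and creating a project; Running a single script; Code example; Configuring items and settings; Spider script; Code walkthrough; XPath basic syntax: path expressions, examples, wildcards and multiple paths, functions, examples; Batch running; Appendix 1: persisting to a database; Appendix 2: starting a database locally. Introduction to Scrapy: Scrapy is a powerful open-source web crawling framework for extracting data from websites. Known for its extensibility and flexibility, it is widely used in data mining, information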

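- Fixing a Scrapy crawler that will not start from the command line

hyk今天写算法了吗

#Python crawler scrapy crawler Python

Preface: I have recently been preparing my graduation project and wanted to use the Scrapy architecture for crawling. I picked a sample I had written before, but to my surprise the usual launch command failed with this error: The term 'scrapy' is not recognized as the name of a cmdlet, function, script file, or operable program. Check the spelling of the name, or if a path was included, verify that the path is correct and try again. At line:1 char:1 Solution: after a lot of searching, I found that running python -m scrapy crawl <spider name> in the Scrapy project's working directory starts it normally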
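Running Scrapy as a module works because `python -m scrapy` only needs the package to be importable. The bare `scrapy` command additionally needs Python's Scripts (or bin) directory on PATH. The sketch below launches a crawl the same way from Python. It assumes it runs from the project directory containing scrapy.cfg, and `build_crawl_command`, `run_crawl` and the spider name `books` are hypothetical:

```python
import subprocess
import sys


def build_crawl_command(spider_name):
    """Build 'python -m scrapy crawl <name>' for the current interpreter.

    sys.executable points at the Python that has Scrapy installed,
    so a missing 'scrapy' entry on PATH no longer matters.
    """
    return [sys.executable, "-m", "scrapy", "crawl", spider_name]


def run_crawl(spider_name, project_dir="."):
    # Must run inside the Scrapy project directory (where scrapy.cfg lives).
    return subprocess.run(build_crawl_command(spider_name), cwd=project_dir, check=True)
```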

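- Born for crawling --- Redis extension part 2 <Pub/Sub publish/subscribe>

大河之J天上来

redis advanced redis java database

Preface: influenza A kept me in bed for the past few days, so I didn't update on time... While you study, please take care of your health too; this flu is brutal!! Continuing from the previous article: Born for crawling --- Redis extension part 1 <pipeline transfer efficiency> - CSDN blog. Why discuss publish/subscribe at all? Because Redis's publish/subscribe module can be combined with Scrapy crawlers to implement distributed crawling and data processing. Distributed message queue: Scrapy can use Redis's publish/subscribe module as a distributed message queue,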

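- Big-data graduation project: news sentiment analysis system, public-opinion analysis, NLP, machine learning, crawler, naive Bayes algorithm (with source code + thesis) ✅

vx_biyesheji0001

biyesheji0002 graduation project biyesheji0001 big data course project natural language processing python machine learning graduation project crawler

Graduation projects: a roundup of 2023-2024 computer science graduation project topics (worth bookmarking). Graduation projects: the latest and most complete 2023-2024 computer science topic recommendations. If you are interested, bookmark this first, and like and follow so you don't lose it. For any questions about topic selection, projects or thesis writing, feel free to leave me a message; I hope to help everyone graduate smoothly. 1. Project introduction. Tech stack: Python, Django framework, Vue framework, Scrapy crawler framework, jieba word segmentation, NLP algorithms, crawling, machine learning, naive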

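- Scrapy crawler hands-on tutorial

罗政

python crawler

1. Overview: today we use Scrapy to crawl the Dianying Tiantang (电影天堂) website (http://www.dytt8.net/) and store the films in MySQL. Here is my result screenshot: 2. Python libraries to install: 1. scrapy 2. BeautifulSoup 3. MySQLdb. Please look up how to install these yourselves! 3. Crawling steps: 1. Create the tb_movie table to store the movie data; I collected fairly detailed fields, so pick the ones you need. CREATE TABLE `tb_movie` (`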

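- Big-data graduation project: rental recommendation system, Python, rental big data, crawler + visualization dashboard, computer science graduation project (with source code + documentation) ✅

vx_biyesheji0001

biyesheji0001 biyesheji0002 graduation project big data course project python graduation project crawler recommendation system data visualization

Graduation projects: a roundup of 2023-2024 computer science graduation project topics (worth bookmarking). Graduation projects: the latest and most complete 2023-2024 computer science topic recommendations. If you are interested, bookmark this first, and like and follow so you don't lose it. For any questions about topic selection, projects or thesis writing, feel free to leave me a message; I hope to help everyone graduate smoothly. 1. Project introduction. Tech stack: rental big-data analysis and visualization platform, graduation project, Python crawler, recommendation system, Django framework, Vue front-end framework, Scrapy crawler, Beike rentals (贝壳租房) website, rent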

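- Using CentOS as a proxy server for a Scrapy crawler

YxYYxY

Using CentOS as a proxy server for a Scrapy crawler. My earlier article, "Scrapy-redis distributed crawler + quick Docker deployment", mainly covered distribution and Docker, but during normal crawling the crawler still got its IP banned...... so I had to set up proxies. The IP was banned only after 70 million of the 200 million records had been crawled, so I think the site noticed the abnormal requests (about 400 per second, all from the same IP), which would look abnormal to anyone. Sure enough,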
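On the Scrapy side, a proxy is usually attached per request through `request.meta["proxy"]`, which Scrapy's built-in HttpProxyMiddleware honors. The sketch below is a downloader middleware that rotates requests over several proxies. The addresses are hypothetical placeholders for the CentOS proxy server(s), and the class name is made up.

```python
import itertools


class RotatingProxyMiddleware:
    """Downloader-middleware sketch: assign proxies to requests in round-robin order."""

    # Hypothetical addresses; replace with the CentOS proxy host(s) and port(s).
    PROXIES = [
        "http://10.0.0.2:3128",
        "http://10.0.0.3:3128",
    ]

    def __init__(self):
        self._cycle = itertools.cycle(self.PROXIES)

    def process_request(self, request, spider):
        request.meta["proxy"] = next(self._cycle)
        return None  # None lets Scrapy continue through the remaining middlewares
```

Register it in settings.py with a number below HttpProxyMiddleware's default of 750, for example `DOWNLOADER_MIDDLEWARES = {"myproject.middlewares.RotatingProxyMiddleware": 350}` (module path assumed). That way the meta key is set before the proxy middleware reads it. Spreading requests over several IPs does not replace throttling; around 400 requests per second would still stand out.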

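- ASM series part 5: parsing and generating classes with the Tree API

lijingyao8206

ASM bytecode dynamic generation ClassNode TreeAPI

The earlier Core API articles basically covered the main APIs and features of the ASM Core package; parsing and generating metadata will not be repeated here. This article starts on the other main part of ASM: the Tree API. The source code for this part corresponds to asm-tree version 5.0.4.

Before the introduction, one thing to know: the interfaces of the Tree project can basically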

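- Linked-list tree: an applied example of a composite data structure

bardo

data structures tree structure table design linked list menu sorting

As we know, in database design the quality of the table structure directly affects the complexity of the program. This article therefore discusses combining an unlimited-depth category (directory) tree with a linked list in table design. What a tree or a linked list is will not be covered here; if you are interested, see the relevant textbooks.

Requirements overview:

A common requirement is to read a tree structure stored in the database in a defined order, for example multi-level menus, organizational structures or product categories. More specifically, we may want a particular second-level menu to be first at its level, even though it was the last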

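- Why use bitwise operations instead of modulo?

chenchao051

bitwise operations hashing assembly

When looking up a key in a hash table, you often see & used in place of %. Let's first look at two pieces of code.

The indexFor method of HashMap in JDK 6: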

```java
/**
 * Returns index for hash code h.
 */
static int indexFor(int h, int length) {
    return h & (length-1);
}
```
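The mask works because when `length` is a power of two, `length - 1` is a run of low one-bits. So `h & (length - 1)` keeps exactly the remainder of dividing by `length`, using a single AND instead of a division. Below is a Python check of that identity. The function name `index_for` is ours, mirroring the Java method.

```python
def index_for(h, length):
    """Same expression as JDK 6 HashMap.indexFor: h & (length - 1).

    Only correct when length is a power of two, which HashMap keeps true
    for its table size.
    """
    return h & (length - 1)


# With a power-of-two length, the mask gives the same bucket as % for h >= 0.
for h in (0, 7, 16, 123456, 2**31 - 1):
    assert index_for(h, 16) == h % 16

# In Java, -5 % 16 evaluates to -5 (not a usable index), while -5 & 15 is 11.
# Python's % already returns 11 here, so the difference only bites in Java.
assert index_for(-5, 16) == 11

# With a length that is not a power of two the shortcut breaks: 4 & 11 == 0, but 4 % 12 == 4.
assert index_for(4, 12) != 4 % 12
```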

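- What I've been up to lately

麦田的设计者

life reflections plans 软考 (software qualification exam) thoughts

Today is April 27, 2015

Sorting out my recent thoughts and the tasks I need to finish

1. Recently I have been practicing for Subject 2 at driving school, four days a week for three weeks. Whatever you do, you really have to put your heart into it and look for a sensible way to get it done. For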

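- How to remove the last character of a string in PHP

IT独行者

PHP string

Today, while developing a PHP project, I needed to remove the last character of a string. The original string is 1,2,3,4,5,6, and after removing the final "," the result is 1,2,3,4,5,6. The code is as follows: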

```php
$str = "1,2,3,4,5,6,";
$newstr = substr($str,0,strlen($str)-1);
echo $newstr;
```
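PHP also offers `substr($str, 0, -1)` and `rtrim($str, ",")` for the same job. For comparison, the Python equivalents are a slice or `str.rstrip`; the variable names are illustrative:

```python
s = "1,2,3,4,5,6,"

# Same as substr($str, 0, strlen($str) - 1): drop the last character unconditionally.
without_last = s[:-1]

# Safer when the trailing comma may be missing: strip only trailing commas.
stripped = s.rstrip(",")

print(without_last)  # 1,2,3,4,5,6
```

The slice always removes one character, even if it is not a comma; `rstrip(",")` (like PHP's `rtrim`) removes only commas, and all of them if there are several.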

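- Installing Hadoop in standalone mode on Linux

_wy_

linux hadoop

1. Install the JDK

The JDK should preferably be version 1.6 or later. Run java -version to check the current Java version. If the command is not found or the version is too old, install a newer JDK. Then, using the JDK's actual install location on your machine, append the following lines to the end of /etc/profile: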

export JAVA_HOME=/usr/java/jdk1.7.0_25

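- Advanced Java ---- a simple way to handle distributed transactions

无量

multi-system interaction distributed transactions

Each method is an atomic operation:

A system that provides services to third parties must provide both the execution method and a corresponding rollback method

System A calls systems B, C and D to complete a distributed transaction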

```java
// ========= execution starts =========
A.aa();
try {
    B.bb();
} catch(Exception e) {
    A.rollbackAa();
}
try {
    C.cc();
} catch(Excep
```
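This is a form of compensation: when a later call fails, each step that already succeeded is undone through its rollback method. Below is a generic Python sketch of the pattern. It assumes each step is given as an (action, rollback) pair of callables and undoes every completed step, newest first. `run_with_compensation` is a hypothetical name. The approach gives no isolation between concurrent transactions, and a rollback can itself fail.

```python
def run_with_compensation(steps):
    """Run (action, rollback) pairs in order; on failure, undo finished steps in reverse."""
    done = []  # rollbacks of the steps that have completed so far
    for action, rollback in steps:
        try:
            action()
        except Exception:
            # Compensate: undo everything that already succeeded, newest first.
            for undo in reversed(done):
                undo()
            raise  # report the original failure to the caller
        done.append(rollback)
```

Usage: with steps A, B and C where C fails, the rollbacks of B and then A run before the exception propagates. This matches the excerpt's rule that every service exposes both an execution method and a rollback method.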

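- 安墨 mobile advertising: mobile DSPs have built up strength and will steer the future of the advertising industry

矮蛋蛋

hadoop internet

"Whoever masters strong DSP technology will steer the future of the advertising industry." In 2014 the hottest topic in mobile advertising was undoubtedly the mobile DSP. Everyone in the field was talking about it and saw the mobile DSP as the industry's breakthrough point. For a while, mobile ad networks sprang up one after another, competing to launch their own mobile DSP products.

So what exactly is a mobile DSP?

A DSP (Demand-Side Platform) is a platform for the demand side: it serves advertisers' various placement needs and truly achieves precisely targeted adver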

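- MyEclipse settings

alafqq

IP

Over a project's complete life cycle, maintenance often costs several times as much as development. A project's maintainability and reusability are therefore key measures of its quality, and comments are an indispensable part of maintainability.

Steps for importing the comment template

Installation:

Open Eclipse/MyEclipse

Select Window-->Preferences-->Java-->Code-->Code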

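- Java arrays

百合不是茶

java arrays

Declaring, creating and initializing Java arrays; Java supports C-language

Every element in an array has a unique index

Defining a one-dimensional array, declaration: int[] a = new int[3]; int[3] declares that the array holds three numbers

int[] a holds three numbers, with indexes starting at 0; you can traverse the numbers in the array with a for loop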

- Reading form data with JavaScript

bijian1013

JavaScript

Form data can be read in JavaScript in the following three ways:

1. By the form's ID attribute: var a = document.getElementById("id");

2. By the form's name attribute: var b = document.getElementsByName("name");

3. Directly by the form's name: var c = form.content.

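- Exploring JUnit 4 extensions: using Theory

bijian1013

java JUnit Theory

The Theory mechanism

1. Why introduce the Theory mechanism

Test-driven development (TDD) is increasingly popular in software development today. Why is TDD so popular? Because it really has many advantages: it lets developers specify and express the intended behavior of their code through simple examples.

Advantages of TDD: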


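- [Spring Data Mongo 1] Working with MongoDB through Spring Mongo Template

bit1129

template

What is Spring Data Mongo

The Spring Data MongoDB project wraps the Java client API for accessing MongoDB, much as Spring wraps Hibernate and JDBC with HibernateTemplate and JdbcTemplate. Its main capabilities include

1. Managing client connections to MongoDB

2. Document-object mapping via annotations: @Document(collectio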

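- [Kafka 8] Kafka configuration information stored in ZooKeeper

bit1129

zookeeper

Questions:

1. Which Kafka information is recorded in ZooKeeper?

2. Where is the offset of each partition consumed by a consumer group stored?

3. Where is the information about which broker holds each partition of a topic stored?

4. Does the producer have anything to do with ZooKeeper at all? No, it doesn't!!!

//consumers, config, brokers, cont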

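- Four types of Java OOM memory errors, with causes and solutions

ronin47

java OOM memory error

The four types of OOM errors:

1. StackOverflowError: usually caused by recursive functions (infinite recursion, or recursion that goes too deep). -Xss 128k is usually enough.

2. OutOfMemoryError: PermGen space: usually caused by too many dynamically generated classes, for example when a web server automatically redeploys. -Xmx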

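- Java: reversing a linked list, recursive and non-recursive implementations

bylijinnan

java

Update 2012-04-22:

Reverse a subset of the nodes in a linked list, where those nodes are k apart:

0->1->2->3->4->5->6->7->8->9

k=2

8->1->6->3->4->5->2->7->0->9

Note that the positions of 1, 3, 5, 7 and 9 do not change.

Solution:

Split the list into two parts:

a. 0->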
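The split-reverse-merge idea can be sketched in Python for the k = 2 case of the example. Nodes at even positions (0, 2, 4, ...) go into one list and the rest into another. The even list is then reversed, and the two lists are interleaved again. The names `Node`, `build`, `to_list`, `reverse` and `reverse_every_other` are hypothetical, and general k is not handled here.

```python
class Node:
    def __init__(self, val, next=None):
        self.val = val
        self.next = next


def build(values):
    """Build a singly linked list from a Python list."""
    head = None
    for v in reversed(values):
        head = Node(v, head)
    return head


def to_list(head):
    out = []
    while head:
        out.append(head.val)
        head = head.next
    return out


def reverse(head):
    """Iterative in-place reversal of a singly linked list."""
    prev = None
    while head:
        nxt = head.next
        head.next = prev
        prev, head = head, nxt
    return prev


def reverse_every_other(head):
    """k = 2 case: reverse the nodes at positions 0, 2, 4, ...; odd positions stay put."""
    even_dummy, odd_dummy = Node(None), Node(None)
    even_tail, odd_tail = even_dummy, odd_dummy
    index = 0
    while head:  # step a: split into two lists by position parity
        nxt = head.next
        head.next = None  # detach so each part ends cleanly
        if index % 2 == 0:
            even_tail.next = head
            even_tail = head
        else:
            odd_tail.next = head
            odd_tail = head
        head = nxt
        index += 1

    evens = reverse(even_dummy.next)  # step b: reverse the even-position list
    odds = odd_dummy.next

    # Step c: interleave a reversed even node, then an original odd node, and so on.
    dummy = Node(None)
    tail = dummy
    while evens or odds:
        if evens:
            tail.next = evens
            tail = evens
            evens = evens.next
        if odds:
            tail.next = odds
            tail = odds
            odds = odds.next
    return dummy.next
```

For the list 0 to 9 this produces 8->1->6->3->4->5->2->7->0->9, matching the expected result above.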

- Studying the Netty source: DelimiterBasedFrameDecoder

bylijinnan

java netty

The DelimiterBasedFrameDecoder API documentation gives an example:

Suppose the received ChannelBuffer is:

```
+--------------+
| ABC\nDEF\r\n |
+--------------+
```

After DelimiterBasedFrameDecoder(Delimiters.lineDelimiter()), you get:

```
+-----+----
```
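Netty's documentation says this decoder picks, among the configured delimiters, the one that produces the shortest frame; `Delimiters.lineDelimiter()` supplies `\r\n` and `\n`. The small Python sketch below mimics that framing rule, so `ABC\nDEF\r\n` becomes the frames `ABC` and `DEF`. It is not Netty's code, and the function name is hypothetical.

```python
def split_frames(buffer, delimiters=(b"\r\n", b"\n")):
    """Split a byte buffer into frames and strip the delimiter from each one.

    When several delimiters occur, the one giving the shortest frame wins,
    so a CRLF ending is not left with a stray CR. Returns (frames, leftover),
    where leftover is an incomplete trailing frame kept for the next read.
    """
    frames = []
    while True:
        best = None  # (frame_length, delimiter_length) of the shortest frame found
        for delim in delimiters:
            index = buffer.find(delim)
            if index >= 0 and (best is None or index < best[0]):
                best = (index, len(delim))
        if best is None:
            return frames, buffer
        length, delim_len = best
        frames.append(buffer[:length])
        buffer = buffer[length + delim_len:]
```

A real decoder keeps the leftover bytes and prepends them to the next chunk read from the socket; the sketch only returns them to the caller.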

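- Some Linux commands: detecting CC attacks, per-interface IP statistics, etc.

hotsunshine

linux

Linux commands for detecting CC attacks, explained

December 23, 2011 / Security

Count the connections on port 80 (grep matches "80" anywhere in the line, so addresses such as 10.80.x.x are counted too)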

netstat -nat|grep -i '80'|wc -l

Sort the connected IPs by number of connections

netstat -ntu | awk '{print $5}' | cut -d: -f1 | sort | uniq -c | sort -n

Check TCP connection states

n
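The IP-sorting command above chains awk, cut, sort and uniq. The same counting can be sketched in Python on captured netstat output. The function name and sample lines are hypothetical. Unlike the shell pipeline, which also counts words from netstat's header rows, the sketch only counts lines starting with tcp or udp.

```python
from collections import Counter


def count_connections_by_ip(netstat_lines):
    """Count connections per remote IP, like
    netstat -ntu | awk '{print $5}' | cut -d: -f1 | sort | uniq -c | sort -n

    Returns (count, ip) pairs in ascending order of count.
    """
    counts = Counter()
    for line in netstat_lines:
        fields = line.split()
        # Keep only connection rows; header rows are skipped here.
        if len(fields) < 5 or not fields[0].startswith(("tcp", "udp")):
            continue
        # Column 5 is the foreign address "ip:port"; keep the part before ':'.
        counts[fields[4].split(":")[0]] += 1
    return sorted((n, ip) for ip, n in counts.items())
```

The lines could come from `subprocess.run(["netstat", "-ntu"], capture_output=True, text=True).stdout.splitlines()`. As with `cut -d: -f1`, IPv6 addresses are not handled.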

- Getting the SessionFactory in Spring

ctrain

sessionFactory

```java
String sql = "select sysdate from dual";
WebApplicationContext wac = ContextLoader.getCurrentWebApplicationContext();
String[] names = wac.getBeanDefinitionNames();
for(int i=0; i<
```

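- Several ways to export data from Hive

daizj

hive data export

Several ways to export data from Hive

1. Copy the files

If the data files are already in the format the user needs, simply copy the files or the directory.

hadoop fs -cp source_path target_path

2. Export to the local file system

--You cannot use insert into local directory to export data; it raises an error

--You can only use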

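- The beauty of programming

dcj3sjt126com

programming PHP refactoring

In my own PHP programming experience, recursive calls are often used together with static variables. For the meaning of static variables, see the PHP manual. I hope the code below helps you understand recursion and static variables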

```php
header("Content-type: text/plain");

function static_function () {
    static $i = 0;
    if ($i++ < 1
```

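- Saving the username and password on Android

dcj3sjt126com

android

Reposted from: http://www.2cto.com/kf/201401/272336.html

Whether we are developing a project or using someone else's, there is almost always a user login feature. For a better user experience, we usually add a "remember me" feature so that the next time the user opens the app they don't have to re-enter their username and password. Here I use three ways to store the username and password

1. Storing them in a plain .txt file

2. Storing them in a .properties file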

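- Oracle review notes: synonyms

eksliang

Oracle synonym Oracle synonym

If you repost this, please credit the source: http://eksliang.iteye.com/blog/2098861

1. What is a synonym

A synonym is an alias for an existing schema object.

First, a conceptual point: what is a schema? Creating a user creates a corresponding schema. A schema is the collective name for the database objects a user creates. Schema objects include tables, views, indexes, synonyms, sequences, proc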

- An Ajax example

gongmeitao

Ajax jsp

The database is SQL Server 2005

Project name: Ajax_Demo

1. The com.demo.conn package

```java
package com.demo.conn;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

// Class for obtaining a database connection
public class DBConnec
```

- The difference between Request.RawUrl and Request.Url in ASP.NET

hvt

.net Web C# asp.net hovertree

If the requested address is http://h.keleyi.com/guestbook/addmessage.aspx?key=hovertree%3C&n=myslider#zonemenu then the value of Request.Url.ToString() is: http://h.keleyi.com/guestbook/addmessage.aspx?key=hovertree<&

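- SVG tutorial (7): SVG examples, SVG reference

天梯梦

svg

SVG examples: online examples

The examples below embed SVG code directly in HTML.

Google Chrome, Firefox, Internet Explorer 9 and Safari all support this.

Note: the examples below will not run in Opera. Even though Opera supports SVG, it does not support SVG used directly in HTML code. SVG examples

Basic SVG shapes

A circle

Rectangle

Opaque rectangle

A rectangle with opacity 2

A rounded rect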

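- Transaction management

luyulong

java spring programming transactions

Transaction management

Benefits of Spring transactions

Provides a consistent programming model across different transaction APIs

Supports declarative transaction management

Provides a programmatic transaction management API that is simpler and easier to use than most transaction APIs

Integrates with Spring's various data access abstractions

TransactionDefinition

Defines the transaction strategy

int getIsolationLevel(): returns the isolation level of the current transaction

READ_COMMITTED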

- Basic data structures and algorithms 11: Red-black binary search tree

sunwinner

Algorithm Red-black

The insertion algorithm for 2-3 trees just described is not difficult to understand; now, we will see that it is also not difficult to implement. We will consider a simple representation known

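- Synchronizing time on CentOS

stunizhengjia

linux cluster time synchronization

Once you run a cluster, time synchronization becomes essential. Below is how to synchronize time, as I found it. In CentOS 5 there is no longer a distinction between client and server: once NTP is configured, the machine also provides NTP service. 1) Make sure the ntp package is installed: # yum install ntp 2) Configure the time source (the defaults are fine, no changes needed) # vi /etc/ntp.conf server pool.ntp.o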

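- ITeye September technical book trial-reading contest: winners announced

ITeye administrator

ITeye

The technical book trial-reading contest that ITeye ran with Broadview (博文视点) in September has successfully concluded; many thanks to all users for their interest and participation. Review of the September trial-reading contest: http://webmaster.iteye.com/blog/2118112 The excellence award winners and their entries are listed below (there were many excellent articles but only a limited number of places; not winning does not mean an entry was not excellent):

《NFC: Arduino, Andro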