OpenCV图像特征提取与检测C++(五)特征描述子--Brute-Force匹配、FLANN特征匹配、平面对象识别、AKAZE局部特征检测与匹配、BRISK特征检测与匹配、ORB特征提检测与匹配

特征描述子

即图像中每个像素位置的描述,通过此描述去匹配另一张图像是否含有相同特征。

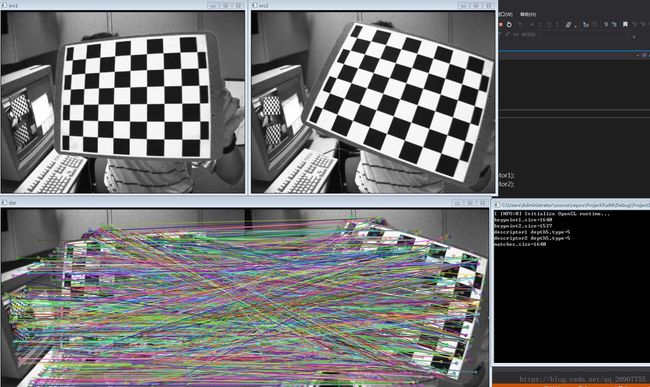

暴力匹配:Brute-Force

图像匹配本质上是特征匹配。因为我们总可以将图像表示成多个特征向量的组成,因此如果两

幅图片具有相同的特征向量越多,则可以认为两幅图片的相似程度越高。而特征向量的相似程度通常是用它们之间的欧氏距离来衡量,欧式距离越小,则可以认为越相似。

代码:

#include

#include

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

int main() {

Mat src1, src2;

src1 = imread("C:/Users/Administrator/Desktop/pic/04.jpg");

src2 = imread("C:/Users/Administrator/Desktop/pic/03.jpg");

imshow("src1", src1);

imshow("src2", src2);

int minHessian = 400;

Ptr detector = SURF::create(minHessian);

vectorcout << "descriptor2 depth" << descriptor2.depth() << ",type=" << descriptor2.type() << endl;

BFMatcher matcher(NORM_L2); //Brute - Force 匹配,参数表示匹配的方式,默认NORM_L2(欧几里得) ,NORM_L1(绝对值的和)

vector FLANN特征匹配

算法速度特别快

特征匹配记录下目标图像与待匹配图像的特征点(KeyPoint),并根据特征点集合构造特征量(descriptor),对这个特征量进行比较、筛选,最终得到一个匹配点的映射集合。我们也可以根据这个集合的大小来衡量两幅图片的匹配程度。

代码:

#include

#include

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

int main() {

Mat src1, src2;

src1 = imread("C:/Users/Administrator/Desktop/pic/test3.jpg");

src2 = imread("C:/Users/Administrator/Desktop/pic/test4.jpg");

imshow("src1", src1);

imshow("src2", src2);

int minHessian = 300;

Ptr detector = SURF::create(minHessian);// 也可以用 SIFT 特征

vectorcout << "matches[" << i << "].trainIdx" << matches[i].trainIdx << ","<cout << "matches[" << i << "].distanIdx" << matches[i].distance<< ","<double dist = matches[i].distance;

if (dist > maxDist) {

maxDist = dist;

}

if (dist < minDist) {

minDist = dist;

}

}

cout << "maxdistance=" << maxDist << endl;

cout << "mindistance=" << minDist << endl;

vector 平面对象识别

对象形变与位置变换、

API:

// 发现两个平面的透视变换,生成变换矩阵。即根据srcPoints的顶点数

//据与dstPoints的顶点数据,返回有srcPoints变换到dstPoints的变换矩阵

Mat findHomography(

InputArray srcPoints, // srcPoints 与 dstPoints 应该是同尺寸

InputArray dstPoints,

int method = 0, // 发现变换矩阵的算法 RANSAC

double ransacReprojThreshold = 3,

OutputArray mask=noArray(),

const int maxIters = 2000,

const double confidence = 0.995

);

void perspectiveTransform( // 透视变换

InputArray src,

OutputArray dst,

InputArray m // 变换矩阵

);代码:

#include

#include

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

int main()

{

Mat img1 = imread("C:/Users/Administrator/Desktop/pic/test3.jpg",0);

Mat img2 = imread("C:/Users/Administrator/Desktop/pic/test4.jpg",0);

imshow("object image", img1);

imshow("object in scene", img2);

// surf featurs extraction

int minHessian = 500;

Ptr detector = SURF::create(minHessian); // 也可以用 SIFT 特征

vector AKAZE局部特征检测与匹配

局部特征相关算法在过去二十年期间风靡一时,其中代表的有SIFT、SURF算法等(广泛应用于目标检测、识别、匹配定位中),这两种算法是用金字塔策略构建高斯尺度空间(SURF算法采用框滤波来近似高斯函数)。不论SIFT还是SURF算法在构造尺度空间时候存在一个重要的缺点:高斯模糊不保留对象边界信息并且在所有尺度上平滑到相同程度的细节与噪声,影响定位的准确性和独特性。

针对高斯核函数构建尺度空间的缺陷,有学者提出了非线性滤波构建尺度空间:双边滤波、非线性扩散滤波方式。非线性滤波策略构建尺度空间主要能够局部自适应进行滤除小细节同时保留目标的边界使其尺度空间保留更多的特征信息。例如:BFSIFT采取双边滤波与双向匹配方式改善SIFT算法在SAR图像上匹配性能低下的问题(主要由于SAR图像斑点噪声严重),但是付出更高的计算复杂度。AKAZE作者之前提出的KAZE算法采取非线性扩散滤波相比于SIFT与SURF算法提高了可重复性和独特性。但是KAZE算法缺点在于计算密集,通过AOS数值逼近的策略来求解非线性扩散方程,虽然AOS求解稳定并且可并行化,但是需要求解大型线性方程组,在移动端实时性要求难以满足。

与SIFT、SURF算法相比,AKAZE算法更快同时与ORB、BRISK算法相比,可重复性与鲁棒性提升很大。

KAZE是日语音译过来的

步骤:

- AOS 构造尺度空间(非线性方式构造,得到的keypoints也就更准确) 尺度不变性

- Hessian矩阵特征点检测

- 方向指定,基于一阶微分图像 旋转不变性

- 描述子生成 归一化处理,光照不变性

- AKAZE局部特征检测与匹配 A表示Accelerated(加速的)

与SIFT、 SUFR比较:

更加稳定

非线性尺度空间

AKAZE速度更加快

代码:(特征检测)

#include

#include

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

int main() {

Mat src = imread("C:/Users/Administrator/Desktop/pic/test3.jpg");

imshow("src", src);

Ptr kaze = KAZE::create();// KAZE局部特征检测类

vectorakeze = AKAZE::create();// AKAZE局部特征检测类

vector 代码:(特征匹配)

#include

#include

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

int main()

{

Mat img1 = imread("C:/Users/Administrator/Desktop/pic/test3.jpg", 0);

Mat img2 = imread("C:/Users/Administrator/Desktop/pic/test4.jpg", 0);

imshow("box image", img1);

imshow("scene image", img2);

// extract akaze features

Ptr detector = AKAZE::create(); // AKAZE局部特征检测类

vector BRISK特征提取:一种二进制特征描述算子

具有较好的旋转不变性,尺度不变性,鲁棒性等.

在图像配准应用中,速度比较:ORB>FREAK>BRISK>SURF>SIFT,在对有较大模糊的图像配准时,BRISK算法在其中表现最为出色。

Brisk(Binary Robust Invariant Scalable Keypoints)特征相比于SURF SIFT 有些步骤是相同的:

- 构建尺度空间

- 特征点检测

- FAST9-16寻找特征 连续 9-16 个点小于或大于当前值,就把其当作特征点的候选者

- 特征点定位

- 关键点描述子

代码:

#include

#include

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

int main() {

Mat src1 = imread("C:/Users/Administrator/Desktop/pic/test3.jpg", 0);

Mat src2 = imread("C:/Users/Administrator/Desktop/pic/test4.jpg", 0);

Ptr detector = BRISK::create();

vectordetec = BRISK::create();

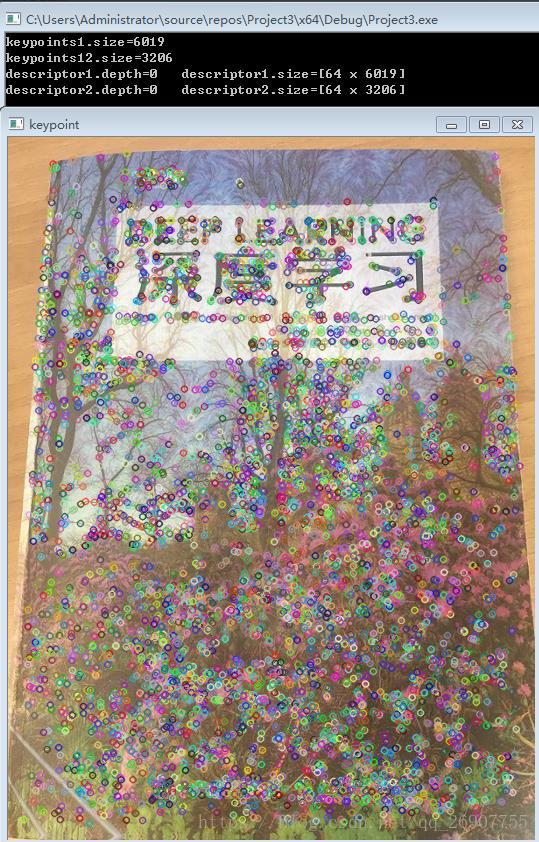

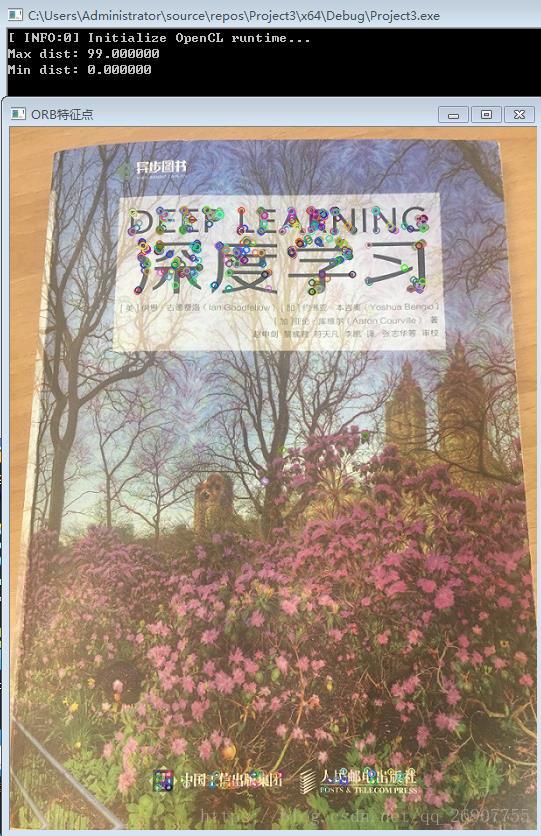

vector ORB特征提检测与匹配

ORB(Oriented FAST and Rotated BRIEF)是一种快速特征点提取和描述的算法。这个算法是由Ethan Rublee, Vincent Rabaud, Kurt Konolige以及Gary R.Bradski在2011年一篇名为“ORB:An Efficient Alternative to SIFTor SURF”的文章中提出。ORB算法分为两部分,分别是特征点提取和特征点描述。特征提取是由FAST(Features from Accelerated Segment Test)算法发展来的,特征点描述是根据BRIEF(Binary Robust IndependentElementary Features)特征描述算法改进的。ORB特征是将FAST特征点的检测方法与BRIEF特征描述子结合起来,并在它们原来的基础上做了改进与优化。据说,ORB算法的速度是sift的100倍,是surf的10倍。

ORB是一种快速的特征提取和匹配的算法。它的速度非常快,但是相应的算法的质量较差。和sift相比,ORB使用二进制串作为特征描述,这就造成了高的误匹配率。

代码:

#include

#include

using namespace cv;

using namespace std;

using namespace cv::xfeatures2d;

int main()

{

//读取要匹配的两张图像

Mat img_1 = imread("C:/Users/Administrator/Desktop/pic/test3.jpg");

Mat img_2 = imread("C:/Users/Administrator/Desktop/pic/test4.jpg");

//初始化

//首先创建两个关键点数组,用于存放两张图像的关键点,数组元素是KeyPoint类型

vector类型的orb,用于接收ORB类中create()函数的返回值

Ptr orb = ORB::create();

//第一步:检测Oriented FAST角点位置.

//detect是Feature2D中的方法,orb是子类指针,可以调用

//看一下detect()方法的原型参数:需要检测的图像,关键点数组,第三个参数为默认值

/*

CV_WRAP virtual void detect( InputArray image,

CV_OUT std::vector& keypoints,

InputArray mask=noArray() );

*/

orb->detect(img_1, keypoints_1);

orb->detect(img_2, keypoints_2);

//第二步:根据角点位置计算BRIEF描述子

orb->compute(img_1, keypoints_1, descriptors_1);

orb->compute(img_2, keypoints_2, descriptors_2);

//定义输出检测特征点的图片。

Mat outimg1;

//注意看,这里并没有用到描述子,描述子的作用是用于后面的关键点筛选。

drawKeypoints(img_1, keypoints_1, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

imshow("ORB特征点", outimg1);

//第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离

//创建一个匹配点数组,用于承接匹配出的DMatch,其实叫match_points_array更为贴切。matches类型为数组,元素类型为DMatch

vectorif (dist>max_dist) max_dist = dist;

}

printf("Max dist: %f\n", max_dist);

printf("Min dist: %f\n", min_dist);

//第五步:根据最小距离,对匹配点进行筛选,

//当描述自之间的距离大于两倍的min_dist,即认为匹配有误,舍弃掉。

//但是有时最小距离非常小,比如趋近于0了,所以这样就会导致min_dist到2*min_dist之间没有几个匹配。

// 所以,在2*min_dist小于30的时候,就取30当上限值,小于30即可,不用2*min_dist这个值了

vector