【ML从入门到入土系列09】HMM

文章目录

- 1 理论

- 2 代码

- 3 参考

1 理论

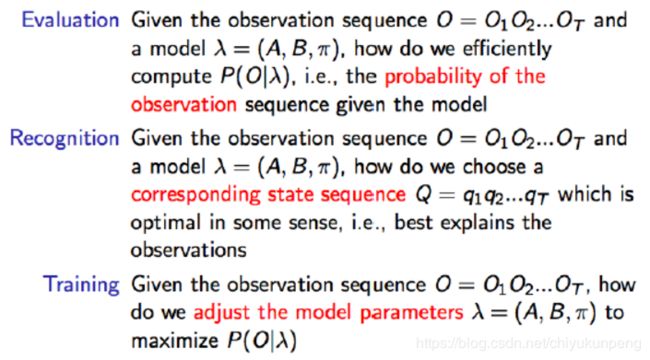

HMM解决的三大问题如下,即概率计算,学习以及预测问题。分别采用前向/后向算法,Viterbi算法,Baum-Welch算法进行求解。

2 代码

class HiddenMarkov:

# 前向算法

def forward(self, Q, V, A, B, O, PI):

N = len(Q) #可能存在的状态数量

M = len(O) # 观测序列的大小

alphas = np.zeros((N, M)) # alpha值

T = M

for t in range(T): # 遍历每一时刻,算出alpha值

indexOfO = V.index(O[t]) # 找出序列对应的索引

for i in range(N):

if t == 0: # 计算初值

alphas[i][t] = PI[t][i] * B[i][indexOfO]

print(

'alpha1(%d)=p%db%db(o1)=%f' % (i, i, i, alphas[i][t]))

else:

alphas[i][t] = np.dot(

[alpha[t - 1] for alpha in alphas],

[a[i] for a in A]) * B[i][indexOfO]

print('alpha%d(%d)=[sigma alpha%d(i)ai%d]b%d(o%d)=%f' %

(t, i, t - 1, i, i, t, alphas[i][t]))

P = np.sum([alpha[M - 1] for alpha in alphas])

# 后向算法

def backward(self, Q, V, A, B, O, PI):

N = len(Q) # 可能存在的状态数量

M = len(O) # 观测序列的大小

betas = np.ones((N, M)) # beta

for i in range(N):

print('beta%d(%d)=1' % (M, i))

for t in range(M - 2, -1, -1):

indexOfO = V.index(O[t + 1]) # 找出序列对应的索引

for i in range(N):

betas[i][t] = np.dot(

np.multiply(A[i], [b[indexOfO] for b in B]),

[beta[t + 1] for beta in betas])

realT = t + 1

realI = i + 1

print(

'beta%d(%d)=[sigma a%djbj(o%d)]beta%d(j)=(' %

(realT, realI, realI, realT + 1, realT + 1),

end='')

for j in range(N):

print(

"%.2f*%.2f*%.2f+" % (A[i][j], B[j][indexOfO],

betas[j][t + 1]),

end='')

print("0)=%.3f" % betas[i][t])

indexOfO = V.index(O[0])

P = np.dot(

np.multiply(PI, [b[indexOfO] for b in B]),

[beta[0] for beta in betas])

print("P(O|lambda)=", end="")

for i in range(N):

print(

"%.1f*%.1f*%.5f+" % (PI[0][i], B[i][indexOfO], betas[i][0]),

end="")

print("0=%f" % P)

# viterbi算法

def viterbi(self, Q, V, A, B, O, PI):

N = len(Q) #可能存在的状态数量

M = len(O) # 观测序列的大小

deltas = np.zeros((N, M))

psis = np.zeros((N, M))

I = np.zeros((1, M))

for t in range(M):

realT = t + 1

indexOfO = V.index(O[t]) # 找出序列对应的索引

for i in range(N):

realI = i + 1

if t == 0:

deltas[i][t] = PI[0][i] * B[i][indexOfO]

psis[i][t] = 0

print('delta1(%d)=pi%d * b%d(o1)=%.2f * %.2f=%.2f' %

(realI, realI, realI, PI[0][i], B[i][indexOfO],

deltas[i][t]))

print('psis1(%d)=0' % (realI))

else:

deltas[i][t] = np.max(

np.multiply([delta[t - 1] for delta in deltas],

[a[i] for a in A])) * B[i][indexOfO]

print(

'delta%d(%d)=max[delta%d(j)aj%d]b%d(o%d)=%.2f*%.2f=%.5f'

% (realT, realI, realT - 1, realI, realI, realT,

np.max(

np.multiply([delta[t - 1] for delta in deltas],

[a[i] for a in A])), B[i][indexOfO],

deltas[i][t]))

psis[i][t] = np.argmax(

np.multiply(

[delta[t - 1] for delta in deltas],

[a[i]

for a in A])) + 1 #由于其返回的是索引,因此应+1才能和正常的下标值相符合

print('psis%d(%d)=argmax[delta%d(j)aj%d]=%d' %

(realT, realI, realT - 1, realI, psis[i][t]))

print(deltas)

print(psis)

I[0][M - 1] = np.argmax([delta[M - 1] for delta in deltas

]) + 1

print('i%d=argmax[deltaT(i)]=%d' % (M, I[0][M - 1]))

for t in range(M - 2, -1, -1):

I[0][t] = psis[int(I[0][t + 1]) - 1][t + 1]

print('i%d=psis%d(i%d)=%d' % (t + 1, t + 2, t + 2, I[0][t]))

print("状态序列I:", I)

3 参考

理论:周志华《机器学习》,李航《统计学习方法》

代码:https://github.com/fengdu78/lihang-code