Message Passing with Spring Cloud Stream and Kafka

(1) Why messaging matters when building microservice applications

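Before answering that question, one concept needs introducing.

Event-driven architecture (EDA): an architecture in which components communicate about events through asynchronous messages. It is also known as message-driven architecture (MDA).

An EDA-based approach lets developers build highly decoupled systems that react to changes without being tightly coupled to specific libraries or services. Combined with microservices, EDA lets developers add new functionality to an application quickly, simply by having a service listen to the stream of events (messages) that the application emits.

In other words, a system built on EDA is both highly decoupled and easy to extend.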
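To make the decoupling concrete, here is a minimal sketch in plain Java. It assumes a hypothetical in-memory `EventBusSketch` class (not a Spring or Kafka API) and delivers events synchronously, whereas a real EDA system would pass them asynchronously through a message broker. The listener names `billing` and `audit` are invented for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical in-memory event bus, for illustration only (not a Spring or Kafka API).
class EventBusSketch {
    private final List<Consumer<String>> listeners = new ArrayList<>();

    // A service registers interest in events without knowing who publishes them.
    void subscribe(Consumer<String> listener) {
        listeners.add(listener);
    }

    // The publisher emits an event without knowing who is listening.
    void publish(String event) {
        for (Consumer<String> listener : listeners) {
            listener.accept(event);
        }
    }

    // Publishes one event to two independent listeners and returns what they recorded.
    static List<String> demo() {
        EventBusSketch bus = new EventBusSketch();
        List<String> log = new ArrayList<>();
        bus.subscribe(e -> log.add("billing saw " + e));
        // New functionality is added by subscribing another listener;
        // the publishing call below does not change.
        bus.subscribe(e -> log.add("audit saw " + e));
        bus.publish("order-created");
        return log;
    }
}
```

Calling `EventBusSketch.demo()` returns two entries, one per listener, even though the publisher never references either of them.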

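(2) Introduction to Spring Cloud Stream

1. Spring Cloud Stream is an annotation-driven framework that lets developers build message publishers and consumers in Spring applications.

2. Through Spring Cloud Stream, Spring Cloud integrates messaging into Spring-based microservices.

3. Spring Cloud Stream can work with multiple messaging platforms (including Apache Kafka and RabbitMQ), while platform-specific implementation details are kept out of the application code. Publishing and consuming messages in the application is done through platform-neutral Spring interfaces.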
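The idea of keeping platform details out of application code can be sketched in plain Java. Every name below (`BinderSketch`, `OutputChannel`, `FakeKafkaChannel`, `FakeRabbitChannel`) is hypothetical and stands in for the real Spring Cloud Stream types; the two "channels" only record what was sent and do not talk to any broker.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical names throughout; these are not Spring Cloud Stream types.
class BinderSketch {
    // Platform-neutral contract that application code depends on.
    interface OutputChannel {
        void send(String payload);
    }

    // Stand-in for a Kafka-backed implementation.
    static class FakeKafkaChannel implements OutputChannel {
        final List<String> sent = new ArrayList<>();

        @Override
        public void send(String payload) {
            sent.add("kafka:" + payload);
        }
    }

    // Stand-in for a RabbitMQ-backed implementation.
    static class FakeRabbitChannel implements OutputChannel {
        final List<String> sent = new ArrayList<>();

        @Override
        public void send(String payload) {
            sent.add("rabbit:" + payload);
        }
    }

    // Application code: identical no matter which platform is plugged in.
    static void publishGreeting(OutputChannel channel) {
        channel.send("hello");
    }
}
```

`publishGreeting` compiles against the interface only, so swapping one implementation for the other requires no change to it. In the real framework that swap is made through dependencies and configuration.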

(3) Installing ZooKeeper and Kafka

ZooKeeper installation and getting started: https://blog.csdn.net/douya2016/article/details/102983218

Kafka installation and tutorial: https://www.cnblogs.com/qingyunzong/p/9004509.html#_label3_1

(4) A Spring Cloud Stream + Kafka example

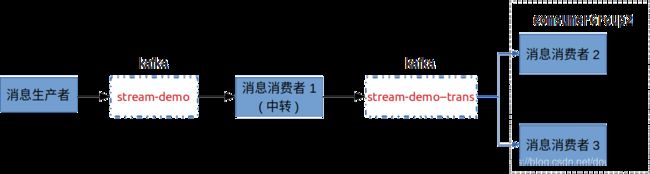

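The message producer produces messages and publishes them to Kafka. Message consumer 1 consumes those messages from Kafka and acts as a relay: it republishes each message to Kafka, where it is then consumed by the message consumers in the comsumerGroup2 group.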
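The flow just described can be modelled without Kafka at all. The sketch below is an assumption-laden simplification: two in-memory queues play the role of the topics `stream-demo` and `stream-demo-trans` used later in this example, and the hypothetical class `RelayPipelineSketch` runs all three stages in one thread.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

// In-memory stand-in for the two Kafka topics; illustration only.
class RelayPipelineSketch {
    static List<String> run(List<String> produced) {
        Queue<String> streamDemo = new ArrayDeque<>();      // plays the role of topic "stream-demo"
        Queue<String> streamDemoTrans = new ArrayDeque<>(); // plays the role of topic "stream-demo-trans"

        // Stage 1: the producer publishes to the first topic.
        streamDemo.addAll(produced);

        // Stage 2: consumer 1 relays every message to the second topic.
        while (!streamDemo.isEmpty()) {
            streamDemoTrans.add(streamDemo.poll());
        }

        // Stage 3: a consumer in the downstream group reads the relayed messages.
        List<String> consumed = new ArrayList<>();
        while (!streamDemoTrans.isEmpty()) {
            consumed.add(streamDemoTrans.poll());
        }
        return consumed;
    }
}
```

Messages come out of the last stage in the order they were produced, which mirrors what a single-partition topic would give. Real deployments run the three stages as separate processes.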

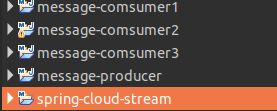

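The project structure is as follows, where:

message-producer: the message producer, responsible for publishing messages to Kafka

message-comsumer1: message consumer 1, responsible for relaying messages

message-comsumer2: message consumer 2

message-comsumer3: message consumer 3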

Spring Cloud Stream and Kafka dependency:

groupId: org.springframework.cloud

artifactId: spring-cloud-stream-binder-kafka

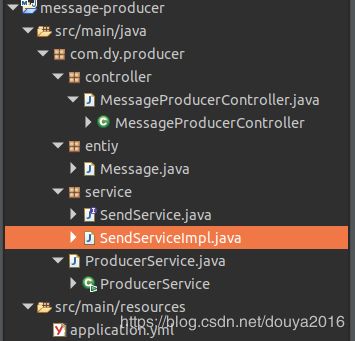

1. The message-producer project

application.yml

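    server:
      port: 8750
    spring:
      application:
        name: producer
      cloud:
        stream:
          bindings:
            output: # channel name; this is Stream's default channel name, and a custom name can be used instead
              destination: stream-demo # name of the message queue (Kafka topic) to write to
              content-type: application/json # type of message being sent or received
          kafka: # use Kafka as the message bus for the service
            binder:
              zkNodes: localhost:2181 # network location of ZooKeeper; comma-separated for a cluster
              brokers: localhost:9092 # network location of Kafka
              auto-create-topics: true

Startup class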

    package com.dy.producer;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    /**
     * Entry point of the producer application. The @EnableBinding annotation,
     * which tells Spring Cloud Stream to bind the application to the message
     * broker, is declared on SendServiceImpl.
     *
     * @author dy
     */
    @SpringBootApplication
    public class ProducerService {

        public static void main(String[] args) {
            SpringApplication.run(ProducerService.class, args);
        }
    }

Controller MessageProducerController

    package com.dy.producer.controller;

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.PostMapping;
    import org.springframework.web.bind.annotation.RequestBody;
    import org.springframework.web.bind.annotation.RestController;

    import com.dy.producer.entiy.Message;
    import com.dy.producer.service.SendService;

    @RestController
    public class MessageProducerController {

        @Autowired
        private SendService sendService;

        // Accepts a JSON message in the request body and hands it to the send service
        @PostMapping("/sendMsg")
        public void send(@RequestBody Message message) {
            sendService.sendMsg(message);
        }
    }

Service class SendServiceImpl, which communicates with the message broker through the channel defined on the default Source interface

    package com.dy.producer.service;

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.cloud.stream.annotation.EnableBinding;
    import org.springframework.cloud.stream.messaging.Source;
    import org.springframework.messaging.support.MessageBuilder;
    import org.springframework.stereotype.Service;

    import com.dy.producer.entiy.Message;

    @Service
    @EnableBinding(Source.class)
    public class SendServiceImpl implements SendService {

        @Autowired
        private Source source;

        // Wraps the payload in a Spring message and sends it on the output channel
        @Override
        public void sendMsg(Message msg) {
            source.output().send(MessageBuilder.withPayload(msg).build());
        }
    }

Entity class Message

    package com.dy.producer.entiy;

    public class Message {

        private String sender;
        private String reciver;
        private String messgaeName;
        private String messageContent;

        // No-argument constructor so that JSON deserialization can instantiate the class
        public Message() {
        }

        public Message(String sender, String reciver, String messgaeName, String messageContent) {
            this.sender = sender;
            this.reciver = reciver;
            this.messgaeName = messgaeName;
            this.messageContent = messageContent;
        }

        public String getSender() {
            return sender;
        }

        public void setSender(String sender) {
            this.sender = sender;
        }

        public String getReciver() {
            return reciver;
        }

        public void setReciver(String reciver) {
            this.reciver = reciver;
        }

        public String getMessgaeName() {
            return messgaeName;
        }

        public void setMessgaeName(String messgaeName) {
            this.messgaeName = messgaeName;
        }

        public String getMessageContent() {
            return messageContent;
        }

        public void setMessageContent(String messageContent) {
            this.messageContent = messageContent;
        }

        @Override
        public String toString() {
            return sender + "-" + reciver + "-" + messgaeName + "-" + messageContent;
        }
    }

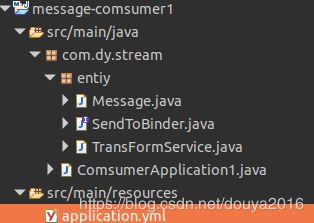

2. message-comsumer1: message relay

application.yml

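    server:
      port: 9751
    spring:
      application:
        name: comsumer1
      cloud:
        stream:
          bindings:
            input: # channel name; this is Stream's default channel name, and a custom name can be used instead; receives the messages produced by the message producer
              destination: stream-demo # name of the message queue (Kafka topic) to read from
              # group: comsumerGroup1 # ensures each message is processed by only one instance in the group
            output:
              destination: stream-demo-trans # topic that messages are forwarded to
          kafka: # use Kafka as the message bus for the service
            binder:
              zkNodes: localhost:2181 # network location of ZooKeeper; comma-separated for a cluster
              brokers: localhost:9092 # network location of Kafka
              auto-create-topics: true

Startup class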

    package com.dy.stream;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class ComsumerApplication1 {

        public static void main(String[] args) {
            SpringApplication.run(ComsumerApplication1.class, args);
        }
    }

SendToBinder: message broker interface

    package com.dy.stream.entiy;

    import org.springframework.cloud.stream.annotation.Input;
    import org.springframework.cloud.stream.annotation.Output;
    import org.springframework.messaging.MessageChannel;
    import org.springframework.messaging.SubscribableChannel;

    public interface SendToBinder {

        // The channel name must match the "output" binding in application.yml
        // and the @SendTo("output") target in TransFormService
        @Output("output")
        MessageChannel output();

        @Input("input")
        SubscribableChannel input();
    }

TransFormService: binds the custom SendToBinder interface, listens on input, logs each message to confirm that the relay received it, and then forwards the message

    package com.dy.stream.entiy;

    import org.springframework.cloud.stream.annotation.EnableBinding;
    import org.springframework.cloud.stream.annotation.StreamListener;
    import org.springframework.messaging.handler.annotation.SendTo;

    /**
     * Binds the custom SendToBinder interface, listens on the input channel,
     * logs each message to confirm that the relay received it, and forwards it.
     *
     * @author dy
     */
    @EnableBinding(SendToBinder.class)
    public class TransFormService {

        @StreamListener("input")
        // @SendTo publishes the method's return value to the output channel
        @SendTo("output")
        public Object transform(Message payload) {
            System.out.println("Message relayed: " + payload.toString());
            return payload;
        }
    }

3. message-comsumer2

application.yml

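    server:
      port: 9752
    spring:
      application:
        name: comsumer2
      cloud:
        stream:
          bindings:
            input: # channel name; this is Stream's default channel name, and a custom name can be used instead
              destination: stream-demo-trans # name of the message queue (Kafka topic) to read from
              # content-type: text/plain # type of message being sent or received
              group: comsumerGroup2 # ensures each message is processed by only one instance in the group
          kafka: # use Kafka as the message bus for the service
            binder:
              zkNodes: localhost:2181 # network location of ZooKeeper; comma-separated for a cluster
              brokers: localhost:9092 # network location of Kafka
              auto-create-topics: true

Startup class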

    package com.dy.stream;

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class ComsumerApplication2 {

        public static void main(String[] args) {
            SpringApplication.run(ComsumerApplication2.class, args);
        }
    }

RecieveService: uses Spring's Sink interface to listen for messages arriving on the default input channel:

    package com.dy.stream.entiy;

    import org.springframework.cloud.stream.annotation.EnableBinding;
    import org.springframework.cloud.stream.annotation.StreamListener;
    import org.springframework.cloud.stream.messaging.Sink;

    @EnableBinding(Sink.class)
    public class RecieveService {

        // Spring Cloud Stream invokes this method each time a message arrives on the input channel
        @StreamListener(Sink.INPUT)
        public void recieve(Message payload) {
            System.out.println(payload.toString());
        }
    }

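4. message-comsumer3

Same as message-comsumer2.

Start each of the projects above and send a message.

The modules produce the following output:

message-producer:

message-comsumer1: message relay

message-comsumer2: message consumer 2

message-comsumer3: message consumer 3

The results show that message-producer publishes messages to Kafka and that message-comsumer1 receives them from Kafka and relays them. Because message-comsumer2 and message-comsumer3 belong to the same group, only one of them receives each relayed message from Kafka.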
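The group behaviour observed above can be approximated with a small model. This is a simplified sketch, not how Kafka is implemented: real Kafka assigns topic partitions to group members, so with a single partition one member would receive every message, whereas the hypothetical `ConsumerGroupSketch` below rotates between members per message. The group `otherGroup` and member `auditor` are invented to show that a second group still receives its own copy.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified model of consumer-group delivery; illustration only.
class ConsumerGroupSketch {
    // Group name -> members of that group, in join order.
    private final Map<String, List<String>> groups = new LinkedHashMap<>();
    // Group name -> number of messages delivered to that group so far.
    private final Map<String, Integer> delivered = new LinkedHashMap<>();

    void join(String group, String member) {
        groups.computeIfAbsent(group, g -> new ArrayList<>()).add(member);
        delivered.putIfAbsent(group, 0);
    }

    // Delivers the message to exactly one member of every group
    // and returns a description of who received it.
    List<String> deliver(String message) {
        List<String> receivers = new ArrayList<>();
        for (Map.Entry<String, List<String>> entry : groups.entrySet()) {
            List<String> members = entry.getValue();
            int count = delivered.get(entry.getKey());
            String chosen = members.get(count % members.size());
            receivers.add(chosen + " got " + message);
            delivered.put(entry.getKey(), count + 1);
        }
        return receivers;
    }
}
```

With message-comsumer2 and message-comsumer3 both joined to comsumerGroup2, each call to `deliver` reaches only one of them, while a member of a different group receives every message.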

Project download:

https://github.com/xdouya/Spring-Cloud-Stream-kafka/tree/master