How to load a TensorFlow model and test it

利用tensorflow搭建模型并保存时,保存模型的方法为

saver = tf.train.Saver()

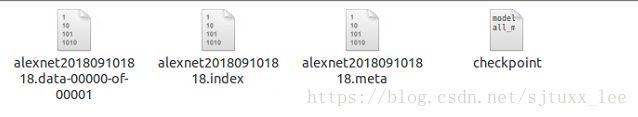

saver.save(sess, model_path + model_name)这样会在model_path路径下得到3个名为model_name的文件和一个checkpoint文件,例如,model_name=alexnet201809101818,则会得到如下四个文件

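The .data-00000-of-00001 and .index files together hold the values of all saved variables: weights, biases, and optimizer state such as Adam's moment estimates. The .data file stores the values, and the .index file records where each variable is stored in it.

The .meta file stores the graph structure.

The checkpoint file is a plain-text file that records the path of the most recent checkpoint and the list of the other retained checkpoints.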
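To make the role of the checkpoint file concrete, here is a minimal, stdlib-only sketch that reads its text: the file is a text-format protobuf with one model_checkpoint_path entry for the newest checkpoint and one all_model_checkpoint_paths entry per retained checkpoint. The parser and the checkpoint_files helper are hypothetical illustrations (not TensorFlow's own code, and they do not handle escaped quotes); in real code tf.train.latest_checkpoint(model_path), used later in this post, does this lookup for you.

```python
import os
import re

# A line of the checkpoint file looks like:  model_checkpoint_path: "alexnet201809101818"
_LINE = re.compile(r'^(\w+):\s*"(.*)"$')


def read_checkpoint_state(text, model_dir):
    """Parse the text of a `checkpoint` file (illustrative, not TensorFlow's parser).

    Returns (latest_prefix, all_prefixes); relative paths are joined to model_dir.
    """
    latest, all_prefixes = None, []
    for line in text.splitlines():
        match = _LINE.match(line.strip())
        if match is None:
            continue
        key, value = match.groups()
        path = value if os.path.isabs(value) else os.path.join(model_dir, value)
        if key == 'model_checkpoint_path':
            latest = path
        elif key == 'all_model_checkpoint_paths':
            all_prefixes.append(path)
    return latest, all_prefixes


def checkpoint_files(prefix):
    """Files saver.save() writes for one prefix, assuming a single data shard."""
    return [prefix + '.meta', prefix + '.index', prefix + '.data-00000-of-00001']


if __name__ == '__main__':
    model_dir = '/opt/Data/lixiang/alexnet/model'
    text = ('model_checkpoint_path: "alexnet201809101818"\n'
            'all_model_checkpoint_paths: "alexnet201809101818"\n')
    latest, _ = read_checkpoint_state(text, model_dir)
    print(latest)
    print(checkpoint_files(latest))
```

The prefix returned here (without any extension) is exactly the kind of value that saver.restore() expects as its second argument.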

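Loading a model means loading two things: the graph structure and the variable values. The graph can either be rebuilt by hand or loaded directly from the .meta file.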

Rebuilding the network manually

The network is the same AlexNet as in https://blog.csdn.net/sjtuxx_lee/article/details/82594265 and is rebuilt with the same code.
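When rebuilding by hand, every shape must match the saved one exactly. For instance, the flatten length 6*6*256 hard-coded in alexnet() (and in the shape of wd1) follows from a 224×224 input. A small sketch that retraces it, assuming TensorFlow's documented output-size rules (SAME: ceil(n / stride); VALID: ceil((n - k + 1) / stride)); the helper names are hypothetical:

```python
import math


def out_size(size, kernel, stride, padding):
    """Spatial output size of a TF conv/pool layer under SAME or VALID padding."""
    if padding == 'SAME':
        return math.ceil(size / stride)
    if padding == 'VALID':
        return math.ceil((size - kernel + 1) / stride)
    raise ValueError('unknown padding: %s' % padding)


# (name, kernel, stride, padding) of each spatial layer in alexnet():
# conv() uses padding='SAME', max_pooling() uses padding='VALID'.
ALEXNET_LAYERS = [
    ('conv1', 11, 4, 'SAME'),
    ('pool1', 3, 2, 'VALID'),
    ('conv2', 5, 1, 'SAME'),
    ('pool2', 3, 2, 'VALID'),
    ('conv3', 3, 1, 'SAME'),
    ('conv4', 3, 1, 'SAME'),
    ('conv5', 3, 1, 'SAME'),
    ('pool3', 3, 2, 'VALID'),
]


def trace_sizes(image_size=224):
    """Return [(layer name, height/width after that layer), ...]."""
    sizes = []
    size = image_size
    for name, kernel, stride, padding in ALEXNET_LAYERS:
        size = out_size(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes


if __name__ == '__main__':
    for name, size in trace_sizes(224):
        print(name, size)  # conv1 56, pool1 27, conv2 27, pool2 13, ..., pool3 6
    last = trace_sizes(224)[-1][1]
    print('flatten length:', last * last * 256)  # 6 * 6 * 256 = 9216
```

These are the same sizes print_layer() reports while the graph is built. For a different image_size, rerun trace_sizes(); if the final size is not 6, the reshape in alexnet() and the shape of wd1 no longer fit.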

```python
import tensorflow as tf
import numpy as np
from keras.datasets import mnist
from keras.utils import to_categorical
import os
import cv2
from tqdm import tqdm
import random

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

log_dir = '/opt/Data/lixiang/alexnet/log'
model_path = '/opt/Data/lixiang/alexnet/model'

n_output = 10
lr = 0.00001
dropout_rate = 0.75  # keep probability used during training
epochs = 20
test_step = 10
batch_size = 32
image_size = 224


def load_mnist(image_size):
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    # resize the 28x28 grayscale digits and replicate them to 3 channels
    train_image = [cv2.cvtColor(cv2.resize(img, (image_size, image_size)), cv2.COLOR_GRAY2BGR) for img in x_train]
    test_image = [cv2.cvtColor(cv2.resize(img, (image_size, image_size)), cv2.COLOR_GRAY2BGR) for img in x_test]
    train_image = np.asarray(train_image)
    test_image = np.asarray(test_image)
    train_label = to_categorical(y_train)
    test_label = to_categorical(y_test)
    print('finish loading data!')
    return train_image, train_label, test_image, test_label


def get_batch(image, label, batch_size, now_batch, total_batch):
    if now_batch < total_batch:
        image_batch = image[now_batch*batch_size:(now_batch+1)*batch_size]
        label_batch = label[now_batch*batch_size:(now_batch+1)*batch_size]
    else:
        # remaining samples after the last full batch
        image_batch = image[now_batch*batch_size:]
        label_batch = label[now_batch*batch_size:]
    # image_batch = tf.convert_to_tensor(image_batch)
    # label_batch = tf.convert_to_tensor(label_batch)
    return image_batch, label_batch


def shuffle_set(train_image, train_label, test_image, test_label):
    # range() cannot be shuffled in place in Python 3, so convert it to a list first
    train_row = list(range(len(train_label)))
    random.shuffle(train_row)
    train_image = train_image[train_row]
    train_label = train_label[train_row]
    test_row = list(range(len(test_label)))
    random.shuffle(test_row)
    test_image = test_image[test_row]
    test_label = test_label[test_row]
    return train_image, train_label, test_image, test_label


def print_layer(layer):
    print(layer.op.name + ':' + str(layer.get_shape().as_list()))


# define layers
def conv(x, kernel, strides, b):
    return tf.nn.relu(tf.nn.bias_add(tf.nn.conv2d(x, kernel, strides, padding='SAME'), b))


def max_pooling(x, kernel, strides):
    return tf.nn.max_pool(x, kernel, strides, padding='VALID')


def fc(x, w, b):
    return tf.nn.relu(tf.add(tf.matmul(x, w), b))


# define variables
# d1, d2, d3 are not used by alexnet(); they come from the training code and are kept so the
# rebuilt graph has the same variables. Duplicate names get a suffix automatically (e.g. weights_fc1_1).
weights = {
    'wc1': tf.Variable(tf.random_normal([11, 11, 3, 96], dtype=tf.float32, stddev=0.1), name='weights1'),
    'wc2': tf.Variable(tf.random_normal([5, 5, 96, 256], dtype=tf.float32, stddev=0.1), name='weights2'),
    'wc3': tf.Variable(tf.random_normal([3, 3, 256, 384], dtype=tf.float32, stddev=0.1), name='weights3'),
    'wc4': tf.Variable(tf.random_normal([3, 3, 384, 384], dtype=tf.float32, stddev=0.1), name='weights4'),
    'wc5': tf.Variable(tf.random_normal([3, 3, 384, 256], dtype=tf.float32, stddev=0.1), name='weights5'),
    'wd1': tf.Variable(tf.random_normal([6*6*256, 4096], dtype=tf.float32, stddev=0.1), name='weights_fc1'),
    'wd2': tf.Variable(tf.random_normal([4096, 1000], dtype=tf.float32, stddev=0.1), name='weights_fc2'),
    'wd3': tf.Variable(tf.random_normal([1000, n_output], dtype=tf.float32, stddev=0.1), name='weights_fc3'),
    'd1': tf.Variable(tf.random_normal([28*28*3, 1000], dtype=tf.float32, stddev=0.1), name='weights_fc1'),
    'd2': tf.Variable(tf.random_normal([1000, 1000], dtype=tf.float32, stddev=0.1), name='weights_fc2'),
    'd3': tf.Variable(tf.random_normal([1000, n_output], dtype=tf.float32, stddev=0.1), name='weights_fc3'),
}

bias = {
    'bc1': tf.Variable(tf.random_normal([96]), name='bias1'),
    'bc2': tf.Variable(tf.random_normal([256]), name='bias2'),
    'bc3': tf.Variable(tf.random_normal([384]), name='bias3'),
    'bc4': tf.Variable(tf.random_normal([384]), name='bias4'),
    'bc5': tf.Variable(tf.random_normal([256]), name='bias5'),
    'bd1': tf.Variable(tf.random_normal([4096]), name='bias_fc1'),
    'bd2': tf.Variable(tf.random_normal([1000]), name='bias_fc2'),
    'bd3': tf.Variable(tf.random_normal([n_output]), name='bias_fc3'),
    'd1': tf.Variable(tf.random_normal([1000]), name='bias_fc1'),
    'd2': tf.Variable(tf.random_normal([1000]), name='bias_fc2'),
    'd3': tf.Variable(tf.random_normal([n_output]), name='bias_fc3'),
}

strides = {
    'sc1': [1, 4, 4, 1],
    'sc2': [1, 1, 1, 1],
    'sc3': [1, 1, 1, 1],
    'sc4': [1, 1, 1, 1],
    'sc5': [1, 1, 1, 1],
    'sp1': [1, 2, 2, 1],
    'sp2': [1, 2, 2, 1],
    'sp3': [1, 2, 2, 1],
}

pooling_size = {
    'kp1': [1, 3, 3, 1],
    'kp2': [1, 3, 3, 1],
    'kp3': [1, 3, 3, 1],
}


# build model
def alexnet(inputs, weights, bias, strides, pooling_size, keep_prob):
    with tf.name_scope('conv1'):
        conv1 = conv(inputs, weights['wc1'], strides['sc1'], bias['bc1'])
        print_layer(conv1)
    with tf.name_scope('pool1'):
        pool1 = max_pooling(conv1, pooling_size['kp1'], strides['sp1'])
        print_layer(pool1)
    with tf.name_scope('conv2'):
        conv2 = conv(pool1, weights['wc2'], strides['sc2'], bias['bc2'])
        print_layer(conv2)
    with tf.name_scope('pool2'):
        pool2 = max_pooling(conv2, pooling_size['kp2'], strides['sp2'])
        print_layer(pool2)
    with tf.name_scope('conv3'):
        conv3 = conv(pool2, weights['wc3'], strides['sc3'], bias['bc3'])
        print_layer(conv3)
    with tf.name_scope('conv4'):
        conv4 = conv(conv3, weights['wc4'], strides['sc4'], bias['bc4'])
        print_layer(conv4)
    with tf.name_scope('conv5'):
        conv5 = conv(conv4, weights['wc5'], strides['sc5'], bias['bc5'])
        print_layer(conv5)
    with tf.name_scope('pool3'):
        pool3 = max_pooling(conv5, pooling_size['kp3'], strides['sp3'])
        print_layer(pool3)
    flatten = tf.reshape(pool3, [-1, 6*6*256])
    with tf.name_scope('fc1'):
        fc1 = fc(flatten, weights['wd1'], bias['bd1'])
        fc1_drop = tf.nn.dropout(fc1, keep_prob)
        print_layer(fc1_drop)
    with tf.name_scope('fc2'):
        fc2 = fc(fc1_drop, weights['wd2'], bias['bd2'])
        fc2_drop = tf.nn.dropout(fc2, keep_prob)
        print_layer(fc2_drop)
    with tf.name_scope('fc3'):
        outputs = tf.matmul(fc2_drop, weights['wd3']) + bias['bd3']
        print_layer(outputs)
    return outputs


x = tf.placeholder(tf.float32, [None, image_size, image_size, 3])
y = tf.placeholder(tf.float32, [None, n_output])
keep_prob = tf.placeholder(tf.float32)

pred = alexnet(x, weights, bias, strides, pooling_size, keep_prob)
loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=pred))
tf.summary.scalar('loss', loss)
# not run during testing; building it recreates the Adam variables that are also in the checkpoint
train_step = tf.train.AdamOptimizer(learning_rate=lr).minimize(loss)
correct = tf.equal(tf.argmax(y, 1), tf.argmax(pred, 1))
accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))
tf.summary.scalar('accuracy', accuracy)

init = tf.global_variables_initializer()
merge = tf.summary.merge_all()
saver = tf.train.Saver()
```

Note that because only one graph is built here, all ops and variables are added to the default graph automatically; nothing needs to be configured. Once the graph is built, create a new session and restore the variable values into it:

train_image, train_label, test_image, test_label = load_mnist(image_size)

with tf.Session() as sess:

sess.run(init)

test_total_batch = int(len(test_label)/batch_size)

ckpt = tf.train.latest_checkpoint(model_path)# 找到存储变量值的位置

saver.restore(sess, ckpt)# 加载到当前环境中

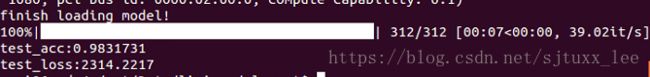

print('finish loading model!')

test_writer = tf.summary.FileWriter(log_dir + '/restore')

test_accuracy_list = []

test_loss_list = []

# test

for j in tqdm(range(test_total_batch)):

x_test_batch, y_test_batch = get_batch(test_image, test_label, batch_size, j, test_total_batch)

summary,test_accuracy,test_loss = sess.run([merge, accuracy,loss], feed_dict = {x:x_test_batch, y:y_test_batch, keep_prob:dropout_rate})

test_accuracy_list.append(test_accuracy)

test_loss_list.append(test_loss)

test_writer.add_summary(summary,j)

print('test_acc:'+ str(np.mean(test_accuracy_list)))

print('test_loss:'+ str(np.mean(test_loss_list)))加载.meta文件构建图

The graph structure was already saved together with the model, so there is no need to rebuild it; importing the saved .meta file is enough.

import tensorflow as tf

import numpy as np

from keras.datasets import mnist

from keras.utils import to_categorical

import os

import cv2

from tqdm import tqdm

os.environ['CUDA_VISIBLE_DEVICES']='0'

log_dir = '/opt/Data/lixiang/alexnet/log'

model_path = '/opt/Data/lixiang/alexnet/model'

batch_size = 32

image_size = 224

def load_mnist(image_size):

(x_train,y_train),(x_test,y_test) = mnist.load_data()

train_image = [cv2.cvtColor(cv2.resize(img,(image_size,image_size)),cv2.COLOR_GRAY2BGR) for img in x_train]

test_image = [cv2.cvtColor(cv2.resize(img,(image_size,image_size)),cv2.COLOR_GRAY2BGR) for img in x_test]

train_image = np.asarray(train_image)

test_image = np.asarray(test_image)

train_label = to_categorical(y_train)

test_label = to_categorical(y_test)

print('finish loading data!')

return train_image, train_label, test_image, test_label

def get_batch(image, label, batch_size, now_batch, total_batch):

if now_batch < total_batch:

image_batch = image[now_batch*batch_size:(now_batch+1)*batch_size]

label_batch = label[now_batch*batch_size:(now_batch+1)*batch_size]

else:

image_batch = image[now_batch*batch_size:]

label_batch = label[now_batch*batch_size:]

return image_batch, label_batch

saver = tf.train.import_meta_graph(model_path + '/alexnet201809101818.meta')# 加载图结构

gragh = tf.get_default_graph()# 获取当前图,为了后续训练时恢复变量

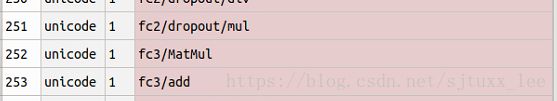

tensor_name_list = [tensor.name for tensor in gragh.as_graph_def().node]# 得到当前图中所有变量的名称

x = gragh.get_tensor_by_name('Placeholder:0')# 获取输入变量(占位符,由于保存时未定义名称,tf自动赋名称“Placeholder”)

y = gragh.get_tensor_by_name('Placeholder_1:0')# 获取输出变量

keep_prob = gragh.get_tensor_by_name('Placeholder_2:0')# 获取dropout的保留参数

pred = gragh.get_tensor_by_name('fc3/add:0')# 获取网络输出值

# 定义评价指标

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels = y, logits = pred))

correct = tf.equal(tf.argmax(y,1), tf.argmax(pred,1))

accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))在这段代码中,需要关注的有以下几个点

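1. After the graph is loaded, intermediate tensors can only be retrieved by name, so tensor_name_list = [tensor.name for tensor in gragh.as_graph_def().node] is used to get the list of node names in the graph.

2. When testing with the loaded model, you cannot define new input/output placeholders: they would not be connected to the imported network, so no data could flow through them. Instead, retrieve the placeholders from the loaded graph with gragh.get_tensor_by_name(). It is therefore a good idea to give the placeholders explicit names when saving the model, so they are easy to find on restore. The tensor name must include the output index, as in Placeholder:0; the ":0" is required.

3. The network output must also be retrieved with gragh.get_tensor_by_name(). If you do not know its name, look it up in tensor_name_list, again appending ":0".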
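The naming rules in the list above can be sketched in plain Python: a tensor name is the producing op's name plus ":" and the output index, and tensor_name_list holds only op names. The helpers below and the node list are hypothetical (the list is an illustrative excerpt, not printed from the real graph), but Placeholder, Placeholder_1, Placeholder_2 and fc3/add are the names this post uses.

```python
def tensor_name(op_name, output_index=0):
    """Build a tensor name '<op name>:<output index>' (single-output ops use 0)."""
    return '%s:%d' % (op_name, output_index)


def find_tensors(node_names, prefix):
    """Return tensor names for the nodes whose name starts with prefix.

    node_names is what tensor_name_list holds: op names without ':0'.
    """
    return [tensor_name(n) for n in node_names if n.startswith(prefix)]


# Hypothetical excerpt of tensor_name_list for the AlexNet graph;
# print the real list to see every node.
example_nodes = [
    'Placeholder', 'Placeholder_1', 'Placeholder_2',
    'conv1/Conv2D', 'conv1/BiasAdd', 'conv1/Relu',
    'fc3/MatMul', 'fc3/add',
]

if __name__ == '__main__':
    print(find_tensors(example_nodes, 'Placeholder'))  # ['Placeholder:0', 'Placeholder_1:0', 'Placeholder_2:0']
    print(find_tensors(example_nodes, 'fc3/'))         # ['fc3/MatMul:0', 'fc3/add:0']
```

Passing a name when saving (tf.placeholder accepts a name argument, e.g. name='input_x') would make the lookup a predictable 'input_x:0' instead of depending on the auto-generated Placeholder_N order.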

The names of intermediate layers can be found in tensor_name_list as well.

Once the model is restored, it can be tested:

```python
train_image, train_label, test_image, test_label = load_mnist(image_size)

with tf.Session() as sess:
    saver.restore(sess, tf.train.latest_checkpoint(model_path))  # load the variable values
    print('finish loading model!')
    # test
    test_total_batch = int(len(test_label)/batch_size)
    test_accuracy_list = []
    test_loss_list = []
    for j in tqdm(range(test_total_batch)):
        x_test_batch, y_test_batch = get_batch(test_image, test_label, batch_size, j, test_total_batch)
        # keep_prob = 1.0 disables dropout at test time (dropout_rate is not defined in this script)
        test_accuracy, test_loss = sess.run([accuracy, loss],
                                            feed_dict={x: x_test_batch, y: y_test_batch, keep_prob: 1.0})
        test_accuracy_list.append(test_accuracy)
        test_loss_list.append(test_loss)
    print('test_acc:' + str(np.mean(test_accuracy_list)))
    print('test_loss:' + str(np.mean(test_loss_list)))
```
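Both test loops run int(len(test_label)/batch_size) batches, so with the 10000 MNIST test images and batch_size = 32 they evaluate 312 batches (9984 images); the last 16 images are skipped and the else branch of get_batch is never reached. A minimal sketch of an alternative, assuming every test image should be scored: iterate ceil(N / batch_size) batches and weight each batch's metric by its size, because a plain np.mean over per-batch values would over-weight the short final batch. iter_batches and weighted_mean are hypothetical helpers, not part of the original code.

```python
import math

import numpy as np


def iter_batches(images, labels, batch_size):
    """Yield consecutive (images, labels) batches, including a smaller last batch."""
    n_batches = math.ceil(len(labels) / batch_size)
    for i in range(n_batches):
        batch = slice(i * batch_size, (i + 1) * batch_size)
        yield images[batch], labels[batch]


def weighted_mean(values, counts):
    """Mean of per-batch metrics, weighted by the number of samples in each batch."""
    values = np.asarray(values, dtype=np.float64)
    counts = np.asarray(counts, dtype=np.float64)
    return float((values * counts).sum() / counts.sum())


if __name__ == '__main__':
    images = np.zeros((10000, 1), dtype=np.uint8)   # stand-in for the 10000 MNIST test images
    labels = np.zeros((10000, 10), dtype=np.float32)
    sizes = [len(y) for _, y in iter_batches(images, labels, 32)]
    print(len(sizes), sizes[-1], sum(sizes))        # 313 16 10000
    print(int(len(labels) / 32) * 32)               # images covered by the original loop: 9984
```

Inside the session one would append len(y_test_batch) to a list next to each test_accuracy and report weighted_mean(test_accuracy_list, batch_sizes). With equal-size batches this reduces to np.mean, which is why the original loop gives the exact accuracy over the 9984 images it does evaluate.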