Scraping Review Data from Taobao

Step 1: Getting the basic product information

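Open several Taobao product pages and compare their URLs to find what they have in common. The pattern turns out to be:

http://item.taobao.com/item.htm?id=<product id>

(This holds for most products, but not all of them.)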
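The pattern can be captured in a few lines of Java. This is only a minimal sketch: the class name `ItemUrl` and the method `forId` are made up for illustration, and the check that an id consists solely of digits is an assumption based on the ids that appear in this article.

```java
public class ItemUrl {
    // Base URL taken from the pattern described above.
    private static final String BASE_URL = "http://item.taobao.com/item.htm?id=";

    /**
     * Builds the product page URL for a product id.
     * Assumption: ids are purely numeric, as in the examples in this article.
     */
    public static String forId(String id) {
        if (id == null || !id.matches("\\d+")) {
            throw new IllegalArgumentException("expected a numeric product id: " + id);
        }
        return BASE_URL + id;
    }

    public static void main(String[] args) {
        System.out.println(forId("44490418808"));
    }
}
```

Rejecting odd ids early keeps a malformed id from turning into a confusing HTTP failure later on.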

Step 2: Observing how the browser fetches a product's review data

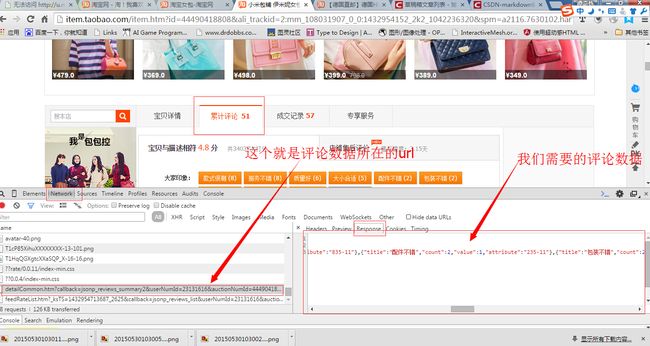

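The browser can clearly display a product's reviews, so we can use the Chrome developer tools to watch which URLs are requested while we browse them, and work out which request actually returns the review data. That request is the key. The screenshot above shows this analysis.

It tells us how the review data arrives. What remains is to work out where the review data URL itself comes from, and that is the problem we solve next.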
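The review requests in this article carry a `callback=jsonp_reviews_list` parameter, which suggests the response is JSONP: a JSON document wrapped in a function call. Below is a minimal sketch of stripping that wrapper before handing the text to a JSON parser. It assumes the body looks like `jsonp_reviews_list({...})`; the class name `Jsonp` and the method `unwrap` are hypothetical and not part of the article's code.

```java
public class Jsonp {
    /**
     * Returns the JSON payload inside a JSONP response such as callback({...}).
     * Text that already starts with '{' or '[' is treated as plain JSON and returned trimmed.
     */
    public static String unwrap(String body) {
        String s = body.trim();
        if (s.startsWith("{") || s.startsWith("[")) {
            return s; // already plain JSON
        }
        int open = s.indexOf('(');
        int close = s.lastIndexOf(')');
        if (open < 0 || close < open) {
            return s; // no recognizable wrapper, leave the text alone
        }
        return s.substring(open + 1, close).trim();
    }

    public static void main(String[] args) {
        System.out.println(unwrap("jsonp_reviews_list({\"total\":1})"));
    }
}
```

Taking the first `(` and the last `)` keeps any parentheses inside the review text intact.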

Step 3: Where the review data URL comes from

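Take a URL like this one:

http://rate.taobao.com/detailCommon.htm?callback=jsonp_reviews_summary2&userNumId=23131616&auctionNumId=44490418808&siteID=4&ua=

It cannot have come out of nowhere. Its only possible source is the product page, which must contain either the whole URL or its individual parts, so the next move is to read the product page's source. There is a lot of source and the URL is hard to spot by eye, but searching for the keyword rate.taobao.com/detailCommon.htm finds it.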
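To see which parts of such a URL belong to the product and which are fixed, it can help to split the query string into its parameters. The sketch below does that with the standard library only. The class name `QueryParams` is made up for illustration, and values are not URL-decoded, which is enough for the plain parameters seen here.

```java
import java.net.URI;
import java.util.LinkedHashMap;
import java.util.Map;

public class QueryParams {
    /** Splits a URL's query string into name/value pairs, in order, without URL-decoding. */
    public static Map<String, String> parse(String url) {
        Map<String, String> params = new LinkedHashMap<>();
        String query = URI.create(url).getRawQuery();
        if (query == null) {
            return params; // the URL has no query string
        }
        for (String pair : query.split("&")) {
            if (pair.isEmpty()) {
                continue; // skip empty segments such as a leading '&'
            }
            int eq = pair.indexOf('=');
            String name = eq < 0 ? pair : pair.substring(0, eq);
            String value = eq < 0 ? "" : pair.substring(eq + 1);
            params.put(name, value);
        }
        return params;
    }

    public static void main(String[] args) {
        String url = "http://rate.taobao.com/detailCommon.htm?callback=jsonp_reviews_summary2"
                + "&userNumId=23131616&auctionNumId=44490418808&siteID=4&ua=";
        parse(url).forEach((name, value) -> System.out.println(name + " = " + value));
    }
}
```

For the URL above, `userNumId` and `auctionNumId` are the parameters whose values also appear in the product page's source.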

This is the place in the page source where the Taobao review URLs can be read:

<div id="reviews"
    data-reviewApi="//rate.taobao.com/detail_rate.htm?userNumId=23131616&auctionNumId=44490418808&showContent=1&currentPage=1&ismore=0&siteID=4"
    data-reviewCountApi=""
    data-listApi="//rate.taobao.com/feedRateList.htm?userNumId=23131616&auctionNumId=44490418808&siteID=4"
    data-commonApi="//rate.taobao.com/detailCommon.htm?userNumId=23131616&auctionNumId=44490418808&siteID=4"
    data-usefulApi="//rate.taobao.com/vote_useful.htm?userNumId=23131616&auctionNumId=44490418808">
</div>

Next comes the implementation.

First we need to imitate a browser visiting a Taobao product page, which is a job for HttpClient:

private static List<CommentInfo> grabData(String id) {
    String goodUrl = baseUrl + id;
    log("Analyzing page data for product " + id + ": " + goodUrl);
    String html = null;
    // Open the product's HTML page
    try {
        html = Client.sendGet(goodUrl);
    } catch (IOException e) {
        e.printStackTrace();
    }
    // Give up if the product page could not be opened
    if (html == null) {
        log("Failed to open product page: " + goodUrl);
        return null;
    }
    // Parse the product page and extract the review data URL from it
    String url = analysis(html);
    // String temp="http://rate.taobao.com/feedRateList.htm?_ksTS=1430057796067_1323&callback=jsonp_reviews_list&userNumId=1753146414&auctionNumId=39232136537&siteID=1&currentPageNum=1&rateType=&orderType=sort_weight&showContent=1&attribute=&ua=";
    if (url == null) {
        log("Failed to extract the review data URL, stopping");
        return null;
    }
    // Fetch the product's review data
    List<CommentInfo> list = analysisComments(url);
    return list;

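}

Having fetched the product page, we now need the review data URL it contains. The key is to find the value of this attribute:

data-listApi="//rate.taobao.com/feedRateList.htm?userNumId=23131616&auctionNumId=44490418808&siteID=4"

That value is the review data URL we are after, and this is where JSoup comes in. From here on everything is straightforward. The full code is listed after this method, as a main class and a network access class; the libraries needed are mainly httpclient, jsoup, fastjson, and gson.

/**
 * Gets the review data URL from a product page's HTML.
 * @param html the product page's HTML
 * @return the review data URL, or null if it cannot be found
 */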

public static String analysis(String html) {
    Document doc = Jsoup.parse(html);
    Element e = doc.getElementById("reviews");
    if (e != null) {
        System.out.println(e.html());
        String url = e.attr("data-listapi");
        System.out.println(url);
        return url;
    }
    return null;
}
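The value read from `data-listApi` is protocol-relative (it starts with `//`) and carries no paging parameters, so it cannot be requested as-is. The sketch below shows one way to turn it into a full URL for a given page. It rests on assumptions: the extra parameters mirror the `urlCondition` constant of the main class, `http:` is chosen as the scheme because `baseUrl` uses it, and the class name `ReviewUrl` and method `forPage` are hypothetical.

```java
public class ReviewUrl {
    // Extra query parameters, mirroring the urlCondition constant of the main class.
    // The page number is appended directly after "currentPageNum=".
    private static final String URL_CONDITION =
            "&callback=jsonp_reviews_list&rateType=&orderType=sort_weight"
            + "&showContent=1&attribute=&ua=&currentPageNum=";

    /**
     * Builds the request URL for one page of reviews.
     * @param listApi the data-listApi attribute value, possibly starting with "//"
     * @param page    the page number to request
     */
    public static String forPage(String listApi, int page) {
        // Assumption: plain http is acceptable, as in the article's baseUrl.
        String base = listApi.startsWith("//") ? "http:" + listApi : listApi;
        return base + URL_CONDITION + page;
    }

    public static void main(String[] args) {
        String listApi = "//rate.taobao.com/feedRateList.htm"
                + "?userNumId=23131616&auctionNumId=44490418808&siteID=4";
        System.out.println(forPage(listApi, 1));
    }
}
```

Calling `forPage` with increasing page numbers yields one URL per page of reviews.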

/**
 * author: tanqidong
 * create Time: 2015-04-26, 8:53:03 PM
 * description:
 * fileName: DataObtainer.java
 */

package com.computer.test;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.http.client.ClientProtocolException;

import org.jsoup.Jsoup;

import org.jsoup.nodes.Document;

import org.jsoup.nodes.Element;

import com.alibaba.fastjson.JSONArray;

import com.alibaba.fastjson.JSONObject;

import com.computer.app.CommentInfoApp;

import com.computer.entity.CommentInfo;

import com.computer.net.Client;

import com.google.gson.Gson;

/**

* @author

*

*/

public class DataObtainer {

    /**
     * Base URL of a product page
     */
    private static final String baseUrl = "http://item.taobao.com/item.htm?id=";

    /**
     * Extra parameters sent with a review query
     */
    private static final String urlCondition = "&callback=jsonp_reviews_list&rateType=&orderType=sort_weight&showContent=1&attribute=&ua=&currentPageNum=";

    /**
     * Tag used for log output
     */
    private static final String defaultTag = "DataObtainer";

    /**
     * Default number of reviews to fetch
     */
    private static final int defaultCommentCount = 100;

    /**
     * @param args
     * @throws IOException
     * @throws ClientProtocolException
     */
    public static void main(String[] args) throws ClientProtocolException, IOException {
        // Change the product id here to test
        String id = "44413860299";
        // Fetch the product's review data

        List<CommentInfo> list = grabData(id);
        // ... (the rest of the main class is cut off in the source)

package com.computer.net;

import java.io.ByteArrayOutputStream;

import java.io.File;

import java.io.IOException;

import java.io.InputStream;

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

import java.util.Set;

import org.apache.http.HttpEntity;

import org.apache.http.HttpResponse;

import org.apache.http.NameValuePair;

import org.apache.http.client.ClientProtocolException;

import org.apache.http.client.CookieStore;

import org.apache.http.client.HttpClient;

import org.apache.http.client.entity.UrlEncodedFormEntity;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.client.methods.HttpPost;

import org.apache.http.client.params.ClientPNames;

import org.apache.http.client.params.CookiePolicy;

import org.apache.http.client.protocol.ClientContext;

import org.apache.http.cookie.Cookie;

import org.apache.http.entity.BufferedHttpEntity;

import org.apache.http.entity.mime.MultipartEntityBuilder;

import org.apache.http.impl.client.BasicCookieStore;

import org.apache.http.impl.client.DefaultHttpClient;

import org.apache.http.message.BasicNameValuePair;

import org.apache.http.protocol.BasicHttpContext;

import org.apache.http.protocol.HttpContext;

public class Client {

    public static HttpClient httpClient = new DefaultHttpClient();
    private static Cookie mCookie = null;
    private static CookieStore mCookieStore = null;

    public static HttpClient getHttpClient() {
        // Only runs if httpClient has been reset to null; the field is initialized above
        if (httpClient == null) {
            httpClient = new DefaultHttpClient();
            httpClient.getParams().setParameter(ClientPNames.COOKIE_POLICY, CookiePolicy.BEST_MATCH);
            // Present a desktop browser User-Agent; CoreProtocolPNames.USER_AGENT is the parameter name HttpClient reads
            httpClient.getParams().setParameter(org.apache.http.params.CoreProtocolPNames.USER_AGENT, "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/7.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E; Shuame)");
            /*
            // Proxy credentials
            // (placeholder values; replace them with your own)
            httpClient.getCredentialsProvider().setCredentials(new AuthScope("10.60.8.20", 8080),
                    new UsernamePasswordCredentials("username", "password"));
            // Target site, port, and protocol
            HttpHost targetHost = new HttpHost("www.google.com", 443, "https");
            // Proxy settings
            HttpHost proxy = new HttpHost("10.60.8.20", 8080);
            httpClient.getParams().setParameter(ConnRoutePNames.DEFAULT_PROXY, proxy);
            // Target path
            HttpGet httpget = new HttpGet("/adsense/login/zh_CN/?");
            System.out.println("Target: " + targetHost);
            System.out.println("Request: " + httpget.getRequestLine());
            System.out.println("Proxy: " + proxy);
            */
        }
        return httpClient;
    }

    public static byte[] sendGet_byte(String url) {
        getHttpClient();
        byte[] data = null;
        // A fresh client is created for every request, so settings made in getHttpClient() do not apply to it
        httpClient = new DefaultHttpClient();
        HttpGet httpGet = new HttpGet(url);
        InputStream is = null;
        // Share one cookie store across requests through the request context
        HttpContext httpContext = new BasicHttpContext();
        if (mCookieStore == null) {
            mCookieStore = new BasicCookieStore();
        }
        httpContext.setAttribute(ClientContext.COOKIE_STORE, mCookieStore);
        HttpResponse httpResponse;
        try {
            httpResponse = httpClient.execute(httpGet, httpContext);
            HttpEntity httpEntity = httpResponse.getEntity();
            if (httpEntity != null) {
                is = httpEntity.getContent();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        // Read the whole response body into a byte array
        if (is != null) {
            data = readStream(is);
        }
        return data;
    }

    public static String sendGet(String url) throws ClientProtocolException, IOException {
        getHttpClient();
        String result = null;
        // A fresh client is created for every request, so settings made in getHttpClient() do not apply to it
        httpClient = new DefaultHttpClient();
        HttpGet get = new HttpGet(url);
        InputStream in = null;
        // Share one cookie store across requests through the request context
        HttpContext context = new BasicHttpContext();
        if (mCookieStore == null)
            mCookieStore = new BasicCookieStore();
        context.setAttribute(ClientContext.COOKIE_STORE, mCookieStore);
        try {
            // long t1=System.currentTimeMillis();
            HttpResponse response = httpClient.execute(get, context);
            // System.out.println(System.currentTimeMillis()-t1);

            // ... (the rest of this method is cut off in the source)
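`Client.sendGet_byte` calls a `readStream` helper whose body does not appear in the listing. A helper of that kind usually drains the stream into a `ByteArrayOutputStream`, which the `Client` imports hint at. The following is a guess at its shape, not the author's code: the class name `StreamReader` is hypothetical, and wrapping failures in `UncheckedIOException` is a choice made here so that the call site needs no extra `try` block.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

public class StreamReader {
    /** Reads the stream to its end, closes it, and returns everything that was read. */
    public static byte[] readStream(InputStream in) {
        try (InputStream input = in;
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            byte[] buffer = new byte[4096];
            int n;
            // read() returns -1 once the end of the stream is reached
            while ((n = input.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
            return out.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] data = readStream(
                new ByteArrayInputStream("hello".getBytes(StandardCharsets.UTF_8)));
        System.out.println(data.length + " bytes read");
    }
}
```

Closing the stream after reading it fully also releases the underlying HTTP connection when the stream comes from an HttpClient response entity.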