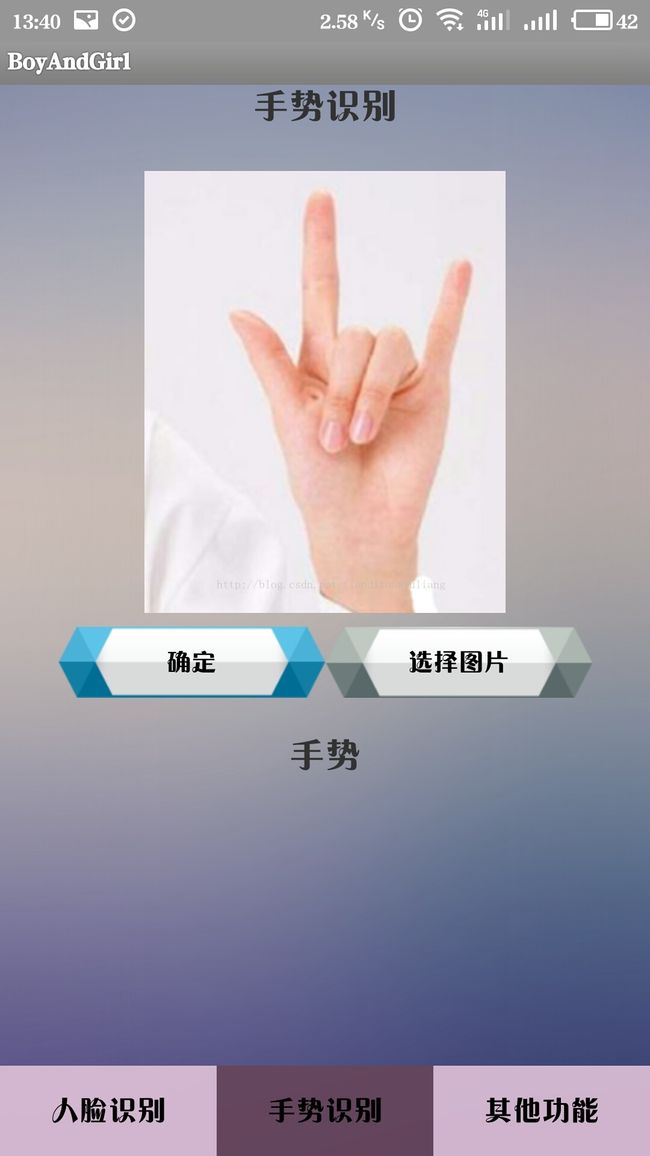

Using the Face++ API: Gesture Recognition

Article URL: http://blog.csdn.net/tiandixuanwuliang/article/details/78089775

This article shows how to implement gesture recognition with the Face++ API.

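The gesture recognition code is largely the same as the face recognition code, so please read this article together with "Using the Face++ API: Face Recognition".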

1. GestureFragment.java (core logic)

package com.wllfengshu.boyandgirl;

import java.io.IOException;

import java.util.HashMap;

import java.util.Map;

import org.json.JSONException;

import org.json.JSONObject;

import android.annotation.SuppressLint;

import android.app.Fragment;

import android.content.Intent;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.graphics.Paint;

import android.os.Bundle;

import android.os.Handler;

import android.os.Message;

import android.view.LayoutInflater;

import android.view.View;

import android.view.View.OnClickListener;

import android.view.ViewGroup;

import android.widget.Button;

import android.widget.ImageView;

import android.widget.TextView;

import android.widget.Toast;

import com.wllfengshu.util.Constant;

import com.wllfengshu.util.DrawUtil;

import com.wllfengshu.util.GifView;

import com.wllfengshu.util.HttpUtils;

import com.wllfengshu.util.ImageUtil;

@SuppressLint({ "NewApi", "HandlerLeak" })

public class GestureFragment extends Fragment implements OnClickListener {

private ImageView iv_gesture;

private Button ib_gesture_enter;

private Button ib_gesture_choice;

private TextView tv_gesture;

private Bitmap scalingPhoto;// scaled bitmap of the selected photo

private String gesture;

private Paint paint;// paint used for drawing

private View view;

private GifView gif;

@Override

public View onCreateView(LayoutInflater inflater, ViewGroup container,

Bundle savedInstanceState) {

view = inflater.inflate(R.layout.fragment_gesture, container, false);

iv_gesture = (ImageView) view.findViewById(R.id.iv_gesture);

ib_gesture_enter = (Button) view.findViewById(R.id.ib_gesture_enter);

ib_gesture_choice = (Button) view.findViewById(R.id.ib_gesture_choice);

tv_gesture = (TextView) view.findViewById(R.id.tv_gesture);

gif = (GifView) view.findViewById(R.id.gif);

ib_gesture_enter.setOnClickListener(this);

ib_gesture_choice.setOnClickListener(this);

paint = new Paint();

scalingPhoto = BitmapFactory.decodeResource(this.getResources(),

R.drawable.defualtg);

return view;

}

@SuppressLint("HandlerLeak")

private Handler handler = new Handler() {

@Override

public void handleMessage(Message msg) {

String str = (String) msg.obj;

System.out.println("********gesture:" + str);

if (str.equals("403") || str.equals("400") || str.equals("413")

|| str.equals("500")) {

Toast.makeText(getActivity(), "Please Try Again",

Toast.LENGTH_SHORT).show();

} else {

try {

JSONObject resultJSON = new JSONObject(str);

gesture = DrawUtil.GesturePrepareBitmap(resultJSON,

scalingPhoto, paint, iv_gesture);

System.out.println("------------gesture" + gesture);

tv_gesture.setText("Gesture: " + gesture);

} catch (JSONException e) {

e.printStackTrace();

}

}

gif.setVisibility(View.GONE);// stop the loading GIF

};

};

@Override

public void onActivityResult(int requestCode, int resultCode, Intent data) {

if (requestCode == 1) {

if (data != null) {

String photoPath = ImageUtil.getPhotoPath(getActivity(), data);

scalingPhoto = ImageUtil.getScalingPhoto(photoPath);

iv_gesture.setImageBitmap(scalingPhoto);

}

}

super.onActivityResult(requestCode, resultCode, data);

}

@Override

public void onClick(View v) {

switch (v.getId()) {

case R.id.ib_gesture_choice:

Intent intent = new Intent();

intent.setAction(Intent.ACTION_PICK);

intent.setType("image/*");

startActivityForResult(intent, 1);

break;

case R.id.ib_gesture_enter:

gif.setVisibility(View.VISIBLE);

gif.setMovieResource(R.raw.red);

String base64ImageEncode = ImageUtil

.getBase64ImageEncode(scalingPhoto);

System.out.println(base64ImageEncode);

final Map map = new HashMap();

map.put("api_key", Constant.API_KEY);

map.put("api_secret", Constant.API_SECRET);

map.put("image_base64", base64ImageEncode);

new Thread(new Runnable() {

@Override

public void run() {

try {

String result = HttpUtils.post(Constant.URL_GESTURE,

map);

Message message = new Message();

message.obj = result;

handler.sendMessage(message);

} catch (IOException e) {

e.printStackTrace();

}

}

}).start();

break;

}

}

}
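
The listing above hands the parameter map to HttpUtils.post, whose source is not shown in this article. As a rough illustration of what such a helper might do with the map, the sketch below turns the three parameters into a form-encoded request body. The class name FormBodySketch, the method encodeForm and the sample values are all hypothetical, and it assumes the endpoint accepts a form-encoded body; the author's HttpUtils may well build the request differently (for example as multipart).

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-in for part of HttpUtils.post: builds the POST body only.
class FormBodySketch {

    // Joins the parameters as key=value pairs separated by '&'.
    // Keys and values are percent-encoded so that Base64 characters
    // such as '+', '/' and '=' are not misread by the server.
    public static String encodeForm(Map<String, String> params) {
        StringBuilder body = new StringBuilder();
        try {
            for (Map.Entry<String, String> entry : params.entrySet()) {
                if (body.length() > 0) {
                    body.append('&');
                }
                body.append(URLEncoder.encode(entry.getKey(), "UTF-8"));
                body.append('=');
                body.append(URLEncoder.encode(entry.getValue(), "UTF-8"));
            }
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is always available on the JVM, so this should not happen.
            throw new IllegalStateException(e);
        }
        return body.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps insertion order, which makes the output predictable.
        Map<String, String> params = new LinkedHashMap<String, String>();
        params.put("api_key", "demo-key");        // placeholder, not a real key
        params.put("api_secret", "demo-secret");  // placeholder, not a real secret
        params.put("image_base64", "aGk+/w==");   // tiny placeholder payload
        System.out.println(encodeForm(params));
    }
}
```

In the Base64 value, '+', '/' and '=' come out as %2B, %2F and %3D. Skipping this encoding step is a common reason for an image being rejected by a server even though the Base64 string itself is valid.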

2. Parsing the gesture response:

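// Method of DrawUtil. Besides Bitmap, Paint, ImageView, JSONObject, JSONException and Map,

// it needs android.graphics.Canvas, android.graphics.Color and org.json.JSONArray imported.

public static String GesturePrepareBitmap(JSONObject jsObject,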

Bitmap scalingPhoto, Paint paint, ImageView imageView)

throws JSONException {

String gestureEN = "";

Bitmap bitmapPre = Bitmap.createBitmap(scalingPhoto.getWidth(),

scalingPhoto.getHeight(), scalingPhoto.getConfig());

Canvas canvas = new Canvas(bitmapPre);

canvas.drawBitmap(scalingPhoto, 0, 0, null);

final JSONArray jsonArray = jsObject.getJSONArray("hands");

int num = jsonArray.length();

for (int i = 0; i < num; i++) {

JSONObject hands = jsonArray.getJSONObject(i);

JSONObject postion = hands.getJSONObject("hand_rectangle");

JSONObject gesture = hands.getJSONObject("gesture");

Map mapGesture = ImageUtil.GetMapGesture(gesture);

String gestureUS = ImageUtil.getGestureUS(mapGesture);

gestureEN = ImageUtil.getGestureENS(gestureUS);

System.out.println("-- " + gestureEN);

float y = (float) postion.getDouble("top");

float x = (float) postion.getDouble("left");

float w = (float) postion.getDouble("width");

float h = (float) postion.getDouble("height");

System.out.println("x:" + x + " y:" + y + " w:" + w + " h" + h);

System.out.println(bitmapPre.getWidth() + " "

+ bitmapPre.getHeight());

System.out.println("x:" + x + " y:" + y + " w:" + w + " h" + h);

paint.setColor(Color.RED);

paint.setStrokeWidth(5);

canvas.drawLine(x, y, x + w, y, paint);

canvas.drawLine(x, y + h, x + w, y + h, paint);

canvas.drawLine(x, y, x, y + h, paint);

canvas.drawLine(x + w, y, x + w, y + h, paint);

scalingPhoto = bitmapPre;

imageView.setImageBitmap(scalingPhoto);

}

return gestureEN;

}
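
The method above relies on ImageUtil.GetMapGesture and ImageUtil.getGestureUS, which are not reproduced in this article. A plausible reading is that the first turns the "gesture" object into a map from gesture label to confidence score and the second returns the label with the highest score. The sketch below shows only that selection step in plain Java. The class GesturePicker, the method bestGesture and the sample labels and scores are assumptions made for illustration, not the author's code.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-in for the "pick the most likely gesture" step.
class GesturePicker {

    // Returns the label with the highest score,
    // or "unknown" when the map is empty.
    public static String bestGesture(Map<String, Double> scores) {
        String best = "unknown";
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, Double> entry : scores.entrySet()) {
            if (entry.getValue() > bestScore) {
                bestScore = entry.getValue();
                best = entry.getKey();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Illustrative scores only; a real response carries one score per label.
        Map<String, Double> scores = new LinkedHashMap<String, Double>();
        scores.put("fist", 0.1);
        scores.put("victory", 0.8);
        scores.put("ok", 0.3);
        System.out.println(bestGesture(scores)); // prints victory
    }
}
```

When two labels tie, this version keeps the first one it meets, because the comparison is strict. A threshold could be added so that low-confidence results are reported as "unknown" instead of a weak guess.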

This article uses several other helper functions that are not pasted here for reasons of length; please download the complete sample code:

Download: http://download.csdn.net/download/tiandixuanwuliang/9984027