Hadoop installation:

First, download hadoop-2.7.7.tar.gz. A mirror link is given below:

https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/

Next, get hadooponwindows-master.zip from the network drive.

The link is:

https://pan.baidu.com/s/1VdG6PBnYKM91ia0hlhIeHg

After extracting hadoop-2.7.7.tar.gz, replace the bin and etc directories of hadoop-2.7.7 with the bin and etc directories from hadooponwindows-master.

Note on installing Hadoop 2.7.7:

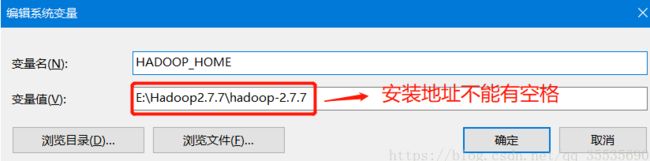

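When installing the Hadoop 2.7.7 download, avoid any path that contains spaces, such as Program Files. Otherwise Hadoop's configuration files will fail to locate the JDK. (Reportedly, quoting the path in the configuration file fixes this, but I could not get that to work.)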
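The space restriction is easy to check before unpacking anything. The following is a minimal sketch, not part of the original setup; the function name `has_space_in_path` and the two sample paths are illustrative assumptions.

```python
def has_space_in_path(path: str) -> bool:
    """Return True if the path contains any whitespace character."""
    return any(ch.isspace() for ch in path)


if __name__ == "__main__":
    # Sample locations only; substitute the directory you plan to use.
    for candidate in (r"E:\Hadoop2.7.7\hadoop-2.7.7",
                      r"C:\Program Files\hadoop-2.7.7"):
        verdict = "avoid" if has_space_in_path(candidate) else "ok"
        print(f"{candidate}: {verdict}")
```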

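Set the HADOOP_HOME environment variable.

Add %HADOOP_HOME%\bin to Path. (On Windows 10, or whenever the list-style editor shown below is used, no semicolon is needed; otherwise separate the entry with a ;.)

----------------------------------------------------------- Configuration files ----------------------------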
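One way to confirm that both variables took effect is to inspect the environment from a freshly opened prompt. This is a rough sketch under assumptions: the helper name `check_hadoop_env` is hypothetical, and it only compares normalized strings, so an unexpanded `%HADOOP_HOME%\bin` entry would be reported as missing.

```python
import ntpath


def check_hadoop_env(env):
    """Return a list of problems found in an environment mapping (empty if none)."""
    home = env.get("HADOOP_HOME")
    if not home:
        return ["HADOOP_HOME is not set"]

    def norm(p):
        # Normalize case and separators so E:/x/bin and e:\X\bin\ compare equal.
        return ntpath.normcase(ntpath.normpath(p))

    expected = norm(ntpath.join(home, "bin"))
    # Windows shows the variable as "Path"; os.environ reports it as "PATH".
    raw_path = env.get("Path") or env.get("PATH") or ""
    entries = {norm(p) for p in raw_path.split(";") if p.strip()}
    if expected not in entries:
        return [f"{expected} is not on Path"]
    return []
```

Calling `check_hadoop_env(dict(os.environ))` after `import os` checks the live environment; an empty list means both settings look consistent.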

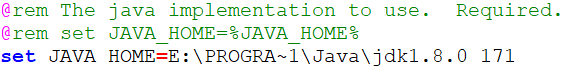

Open E:\Hadoop2.7.7\hadoop-2.7.7\etc\hadoop\hadoop-env.cmd in an editor.

Change the set JAVA_HOME line so that it points to the JDK location. Note that PROGRA~1 stands for Program Files:

set JAVA_HOME=E:\PROGRA~1\Java\jdk1.8.0_171

Open hadoop-2.7.7/etc/hadoop/hdfs-site.xml.

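Set the replication factor, and point the NameNode and DataNode directories at folders under the Hadoop directory:

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>/E:/Hadoop2.7.7/hadoop-2.7.7/data/namenode</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>/E:/Hadoop2.7.7/hadoop-2.7.7/data/datanode</value>
      </property>
    </configuration>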
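If you prefer to generate the file rather than edit it by hand, the three properties above can be written out programmatically. This is only a sketch: `render_hadoop_config` and `HDFS_SITE` are names made up for illustration, and the values are the ones used in this walkthrough.

```python
import xml.etree.ElementTree as ET
from xml.dom import minidom


def render_hadoop_config(properties):
    """Render a {name: value} mapping as a Hadoop-style <configuration> document."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    # minidom is used only to pretty-print the result.
    return minidom.parseString(ET.tostring(root)).toprettyxml(indent="  ")


# Values taken from this walkthrough; adjust the paths to your own layout.
HDFS_SITE = {
    "dfs.replication": 1,
    "dfs.namenode.name.dir": "/E:/Hadoop2.7.7/hadoop-2.7.7/data/namenode",
    "dfs.datanode.data.dir": "/E:/Hadoop2.7.7/hadoop-2.7.7/data/datanode",
}

if __name__ == "__main__":
    print(render_hadoop_config(HDFS_SITE))
```

The printed text can be saved as hdfs-site.xml; the script deliberately does not write the file itself, so nothing is overwritten by accident.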

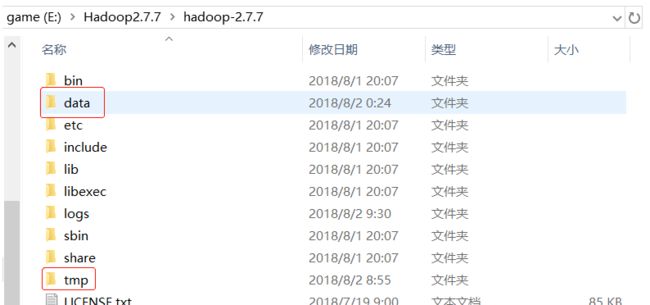

Create a tmp folder under E:\Hadoop-2.7.7.

Under E:/Hadoop2.7.7/hadoop-2.7.7/, create a data folder with namenode and datanode subfolders.
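The folders can be created in Explorer, or with a few lines of script. A minimal sketch follows, assuming the data/namenode and data/datanode layout used in hdfs-site.xml; the function name `create_hdfs_dirs` is hypothetical.

```python
from pathlib import Path


def create_hdfs_dirs(hadoop_home):
    """Create data/namenode and data/datanode under hadoop_home; return their paths."""
    created = []
    for sub in ("namenode", "datanode"):
        target = Path(hadoop_home) / "data" / sub
        # parents=True also creates the intermediate data folder;
        # exist_ok=True makes the call safe to repeat.
        target.mkdir(parents=True, exist_ok=True)
        created.append(target)
    return created
```

For the layout in this walkthrough the call would be `create_hdfs_dirs("E:/Hadoop2.7.7/hadoop-2.7.7")`.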

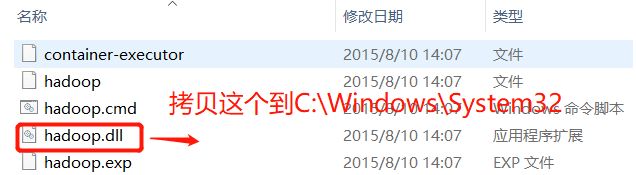

You also need to copy hadoop.dll to C:\Windows\System32.

Otherwise, MapReduce tests fail with an error on Windows.

Open a Command Prompt as administrator.

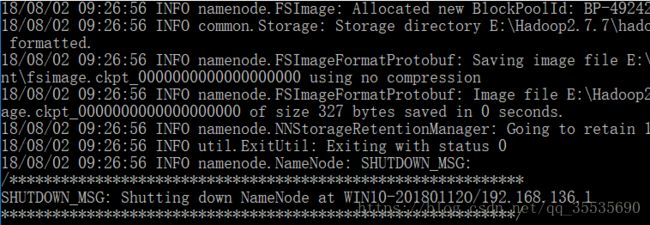

Run hdfs namenode -format. If the output contains "successfully", the format succeeded.

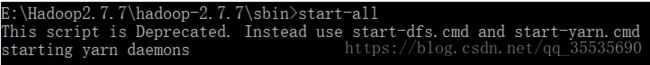

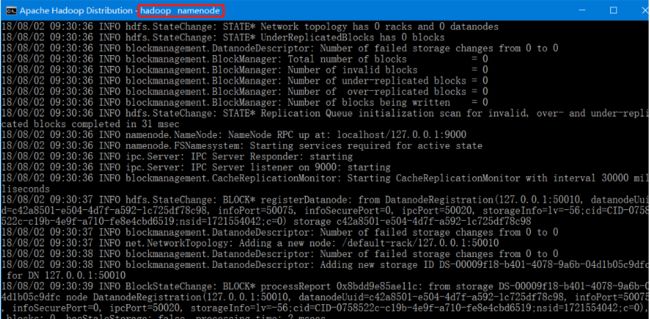

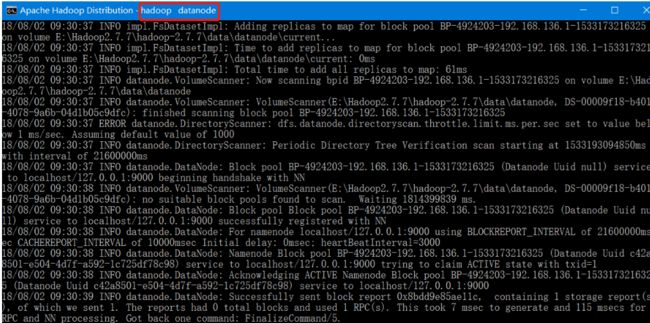

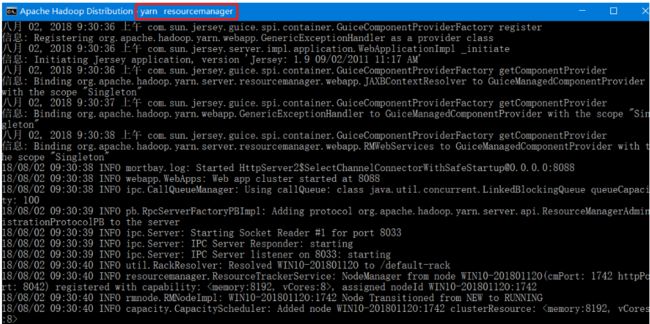

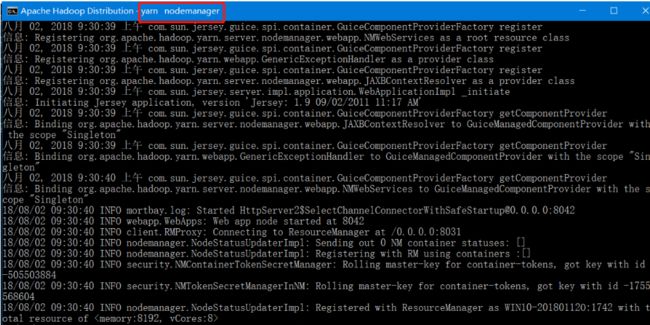

Change to the hadoop-2.7.7\sbin directory and run start-all to start the Hadoop cluster. To shut it down, run stop-all.

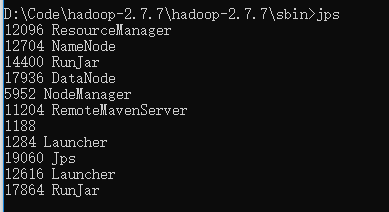

Run jps to list all running daemons.
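To check the jps listing without reading it by eye, its output can be compared against the daemons you expect. This is a sketch under assumptions: the expected set below (NameNode, DataNode, ResourceManager, NodeManager) is what a single-node start-all typically launches and may differ on your setup, and `missing_daemons` is a hypothetical helper name.

```python
# Assumed daemon set for a single-node start-all; adjust if yours differs.
EXPECTED_DAEMONS = ("NameNode", "DataNode", "ResourceManager", "NodeManager")


def missing_daemons(jps_output, expected=EXPECTED_DAEMONS):
    """Return the expected daemon names that do not appear in jps output."""
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        # Each jps line has the form "<pid> <main class name>".
        if len(parts) >= 2:
            running.add(parts[1])
    return sorted(set(expected) - running)
```

Paste the text printed by jps into `missing_daemons(...)`; an empty list means every expected daemon is up.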

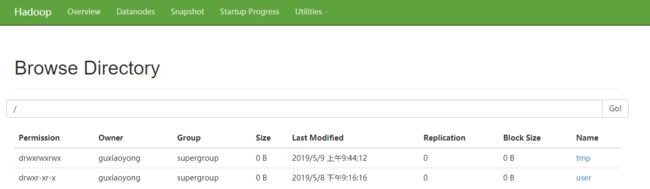

Open http://localhost:50070 to reach the Hadoop web UI.

---------------------------------------------------------------------

Once Hadoop is running, create the following HDFS directories:

D:\Code\hadoop-2.7.7\hadoop-2.7.7\sbin>hdfs dfs -mkdir /user

D:\Code\hadoop-2.7.7\hadoop-2.7.7\sbin>hdfs dfs -mkdir /user/hive

D:\Code\hadoop-2.7.7\hadoop-2.7.7\sbin>hdfs dfs -mkdir /user/hive/warehouse

D:\Code\hadoop-2.7.7\hadoop-2.7.7\sbin>hdfs dfs -mkdir /tmp

D:\Code\hadoop-2.7.7\hadoop-2.7.7\sbin>hdfs dfs -mkdir /tmp/hive

D:\Code\hadoop-2.7.7\hadoop-2.7.7\sbin>hadoop fs -chmod -R 777 /tmp
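The same six commands can be scripted so they are easy to repeat after reformatting the NameNode. The sketch below is an illustration only: `build_commands` and `run_commands` are hypothetical names, and it assumes the hdfs and hadoop launchers are reachable through Path.

```python
import shutil
import subprocess

HDFS_DIRS = ("/user", "/user/hive", "/user/hive/warehouse", "/tmp", "/tmp/hive")


def build_commands(dirs=HDFS_DIRS):
    """Return the mkdir commands followed by the chmod on /tmp, as argument lists."""
    commands = [["hdfs", "dfs", "-mkdir", d] for d in dirs]
    commands.append(["hadoop", "fs", "-chmod", "-R", "777", "/tmp"])
    return commands


def run_commands(commands):
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        # shutil.which honours PATHEXT on Windows, so "hdfs" can resolve to hdfs.cmd.
        exe = shutil.which(cmd[0])
        if exe is None:
            raise FileNotFoundError(f"{cmd[0]} not found on Path")
        subprocess.run([exe, *cmd[1:]], check=True)
```

`run_commands(build_commands())` executes them against the running cluster. Parent directories are listed before their children because plain -mkdir does not create missing parents.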

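Hive installation:

1. Install Hadoop.

2. Download mysql-connector-java-5.1.26-bin.jar (or another version of the jar) from Maven and place it in the lib folder of the Hive directory.

3. Set the Hive environment variable: HIVE_HOME=F:\hadoop\apache-hive-2.1.1-bin

4. Configure Hive.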

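Hive's configuration files live in $HIVE_HOME/conf, which contains four default configuration templates:

hive-default.xml.template: default template

hive-env.sh.template: default hive-env.sh configuration

hive-exec-log4j.properties.template: default exec logging configuration

hive-log4j.properties.template: default logging configuration

Hive runs without any changes to these files. By default, however, the metastore metadata is kept in a Derby database, which most people are not familiar with. We therefore switch to MySQL for the metadata and also change where data and logs are stored, which means setting up our own configuration. The steps follow.

(1) Create the configuration files by copying each template: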

$HIVE_HOME/conf/hive-default.xml.template -> $HIVE_HOME/conf/hive-site.xml

$HIVE_HOME/conf/hive-env.sh.template -> $HIVE_HOME/conf/hive-env.sh

$HIVE_HOME/conf/hive-exec-log4j.properties.template -> $HIVE_HOME/conf/hive-exec-log4j.properties

$HIVE_HOME/conf/hive-log4j.properties.template -> $HIVE_HOME/conf/hive-log4j.properties

(2) Edit hive-env.sh

export HADOOP_HOME=F:\hadoop\hadoop-2.7.2

export HIVE_CONF_DIR=F:\hadoop\apache-hive-2.1.1-bin\conf

export HIVE_AUX_JARS_PATH=F:\hadoop\apache-hive-2.1.1-bin\lib

(3) Edit hive-site.xml

    <configuration>
      <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/user/hive/warehouse</value>
        <description>location of default database for the warehouse</description>
      </property>
      <property>
        <name>hive.exec.scratchdir</name>
        <value>/tmp/hive</value>
        <description>HDFS root scratch dir for Hive jobs which gets created with write all (733) permission. For each connecting user, an HDFS scratch dir: ${hive.exec.scratchdir}/&lt;username&gt; is created, with ${hive.scratch.dir.permission}.</description>
      </property>
      <property>
        <name>hive.exec.local.scratchdir</name>
        <value>F:/hadoop/apache-hive-2.1.1-bin/hive/iotmp</value>
        <description>Local scratch space for Hive jobs</description>
      </property>
      <property>
        <name>hive.downloaded.resources.dir</name>
        <value>F:/hadoop/apache-hive-2.1.1-bin/hive/iotmp</value>
        <description>Temporary local directory for added resources in the remote file system.</description>
      </property>
      <property>
        <name>hive.querylog.location</name>
        <value>F:/hadoop/apache-hive-2.1.1-bin/hive/iotmp</value>
        <description>Location of Hive run time structured log file</description>
      </property>
      <property>
        <name>hive.server2.logging.operation.log.location</name>
        <value>F:/hadoop/apache-hive-2.1.1-bin/hive/iotmp/operation_logs</value>
        <description>Top level directory where operation logs are stored if logging functionality is enabled</description>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://localhost:3306/hive?characterEncoding=UTF-8</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.jdbc.Driver</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>root</value>
      </property>
      <property>
        <name>datanucleus.autoCreateSchema</name>
        <value>true</value>
      </property>
      <property>
        <name>datanucleus.autoCreateTables</name>
        <value>true</value>
      </property>
      <property>
        <name>datanucleus.autoCreateColumns</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.metastore.schema.verification</name>
        <value>false</value>
        <description>
          Enforce metastore schema version consistency.
          True: Verify that version information stored in metastore matches with one from Hive jars. Also disable automatic
                schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures
                proper metastore schema migration. (Default)
          False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.
        </description>
      </property>
    </configuration>
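Because hive-site.xml starts as a copy of a very large template, it is easy to miss one of the edits. The following sketch reads the file's properties and reports which of a chosen set are absent or empty. The names `read_properties`, `missing_keys` and `REQUIRED_KEYS` are hypothetical, and the key list is just the subset this walkthrough changes, not an official requirement.

```python
import xml.etree.ElementTree as ET

# Subset of the properties edited in this walkthrough (an assumption, not a Hive rule).
REQUIRED_KEYS = (
    "hive.metastore.warehouse.dir",
    "hive.exec.scratchdir",
    "javax.jdo.option.ConnectionURL",
    "javax.jdo.option.ConnectionDriverName",
    "javax.jdo.option.ConnectionUserName",
    "javax.jdo.option.ConnectionPassword",
)


def read_properties(xml_text):
    """Parse a Hadoop/Hive style configuration document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            props[name] = (prop.findtext("value") or "").strip()
    return props


def missing_keys(xml_text, required=REQUIRED_KEYS):
    """Return the required property names that are absent or have an empty value."""
    props = read_properties(xml_text)
    return [key for key in required if not props.get(key)]
```

Reading the file as bytes, for example `missing_keys(Path(r"F:\hadoop\apache-hive-2.1.1-bin\conf\hive-site.xml").read_bytes())` after `from pathlib import Path`, lets the XML parser handle the declared encoding itself.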

Note: the HDFS directories must be created on Hadoop beforehand.

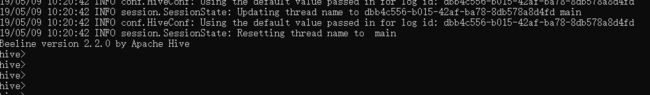

Start the metastore service: hive --service metastore

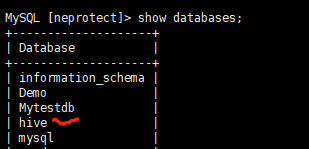

The corresponding hive database is generated in MySQL.

Start Hive: hive

-------------------------------------------------------------- Creating a table and a query example

Create a table in Hive:

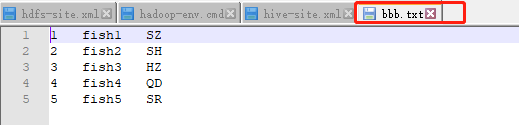

CREATE TABLE testB (

id INT,

name string,

area string

) PARTITIONED BY (create_time string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' STORED AS TEXTFILE;
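A data file for this table holds one row per line with id, name and area separated by tabs; the partition column create_time is not stored in the file, since it is supplied when the data is loaded. The sketch below formats such rows. The function name `format_rows` and the sample values in the usage note are invented for illustration and do not reflect the actual contents of bbb.txt.

```python
def format_rows(rows):
    """Format (id, name, area) tuples as tab-delimited text, one row per line."""
    lines = []
    for row_id, name, area in rows:
        for field in (name, area):
            # A tab or newline inside a field would shift or split columns.
            if "\t" in field or "\n" in field:
                raise ValueError(f"field contains a delimiter: {field!r}")
        lines.append(f"{int(row_id)}\t{name}\t{area}")
    return "\n".join(lines) + "\n"
```

For example, `format_rows([(1, "alice", "north"), (2, "bob", "south")])` produces two lines that can be saved to a text file and uploaded.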

Upload a local file to HDFS:

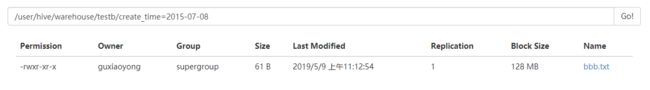

Run the following against HDFS: D:\Code\hadoop-2.7.7\hadoop-2.7.7\sbin>hdfs dfs -put D:\Code\hadoop-2.7.7\gxy\bbb.txt /user/hive/warehouse

Load the data from HDFS into Hive:

LOAD DATA INPATH '/user/hive/warehouse/bbb.txt' INTO TABLE testb PARTITION(create_time='2015-07-08');

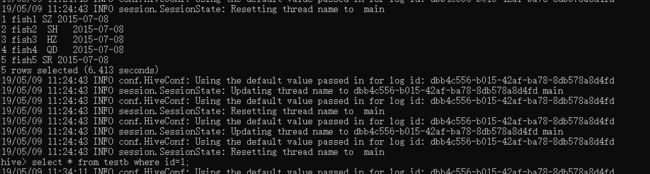

Run a SELECT query:

select * from testb;