Forward and Backpropagation in Convolutional Neural Networks (CNN) (Part 1)

- Convolution vs. correlation

- Forward and backward propagation of the convolution operation

Original article: Forward And Backpropagation in Convolutional Neural Network, https://medium.com/@2017csm1006/forward-and-backpropagation-in-convolutional-neural-network-4dfa96d7b37e. The original is blocked in mainland China, so this translation is posted here for readers' reference.

The following walks through how backpropagation works for the convolution operation in a CNN.

Convolution vs. Correlation

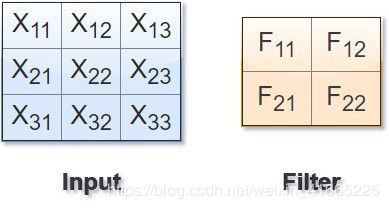

Consider the input and the kernel (also called a filter) of a convolutional layer. For simplicity, let $channel = 1$, the input matrix $X$ be 3×3, the kernel $F$ be 2×2, and $padding = 0$, $stride = 1$. The forward pass is a convolution.
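With these settings, the output size follows from the usual output-size formula for a convolutional layer, stated here for reference ($H$ is the height, with $p$ the padding and $s$ the stride; the width works the same way):

$$H_O = \frac{H_X - H_F + 2p}{s} + 1 = \frac{3 - 2 + 2 \cdot 0}{1} + 1 = 2$$

So the output $O$ is a 2×2 matrix.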

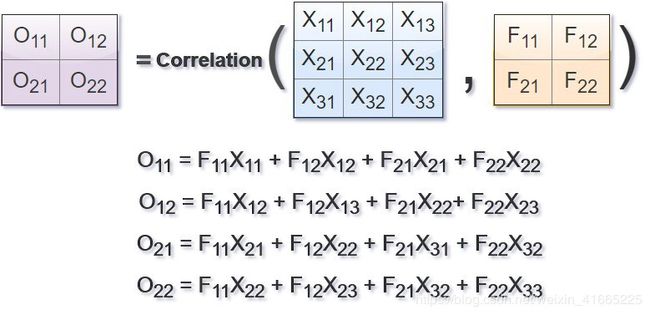

Correlating the filter matrix $F$ with the input matrix $X$ gives the output matrix $O$, as shown below:
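Written out element by element, correlation slides $F$ over $X$ without flipping it and sums the element-wise products at each position:

$$\begin{aligned}
O_{11} &= X_{11}F_{11} + X_{12}F_{12} + X_{21}F_{21} + X_{22}F_{22} \\
O_{12} &= X_{12}F_{11} + X_{13}F_{12} + X_{22}F_{21} + X_{23}F_{22} \\
O_{21} &= X_{21}F_{11} + X_{22}F_{12} + X_{31}F_{21} + X_{32}F_{22} \\
O_{22} &= X_{22}F_{11} + X_{23}F_{12} + X_{32}F_{21} + X_{33}F_{22}
\end{aligned}$$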

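Convolving the input with the kernel is equivalent to rotating the kernel by 180 degrees (a horizontal flip followed by a vertical flip) and then correlating it with the input matrix: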
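For the 2×2 kernel, the 180-degree rotation and the first entry of the convolution are shown below, writing $X * F$ for convolution (notation assumed here):

$$\operatorname{rot180}(F) = \begin{pmatrix} F_{22} & F_{21} \\ F_{12} & F_{11} \end{pmatrix}, \qquad (X * F)_{11} = X_{11}F_{22} + X_{12}F_{21} + X_{21}F_{12} + X_{22}F_{11}$$

The other three entries follow the same pattern at the shifted window positions.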

So convolution and correlation are the same computation, except that convolution correlates the input with the rotated kernel.
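A minimal NumPy sketch that checks this numerically, assuming stride 1 and no padding. The helpers `correlate2d_valid` and `convolve2d_valid` are hypothetical names. The convolution is written directly from its definition, where the kernel index runs backwards, and is then compared with a correlation against the 180-degree-rotated kernel:

```python
import numpy as np

def correlate2d_valid(x, k):
    """Valid-mode 2-D cross-correlation (stride 1, no padding): slide k over x without flipping."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def convolve2d_valid(x, k):
    """Valid-mode 2-D convolution from the definition, with the kernel index running backwards:
    out[i, j] = sum_{m,n} x[i+m, j+n] * k[kh-1-m, kw-1-n]."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            for m in range(kh):
                for n in range(kw):
                    out[i, j] += x[i + m, j + n] * k[kh - 1 - m, kw - 1 - n]
    return out

X = np.arange(1.0, 10.0).reshape(3, 3)  # 3x3 input: [[1,2,3],[4,5,6],[7,8,9]]
F = np.array([[1.0, 2.0], [3.0, 4.0]])  # 2x2 kernel

conv = convolve2d_valid(X, F)                     # [[23, 33], [53, 63]]
corr_rot = correlate2d_valid(X, np.rot90(F, 2))   # rot90 twice = 180-degree rotation
print(np.allclose(conv, corr_rot))  # True
```

Correlating with the unrotated kernel instead gives `[[37, 47], [67, 77]]`, so the rotation matters whenever the kernel is not symmetric.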

Forward and Backward Propagation of the Convolution Operation

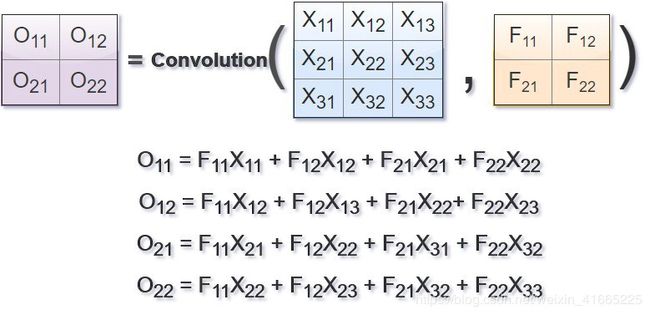

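Note: to simplify deriving the gradient equations for the kernel values and the input values, we treat convolution and correlation as the same operation. This is purely a convenience for the derivation.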

The convolution operation can therefore be represented as follows:

For convenience, $F$ is not rotated by 180 degrees here, so convolution and correlation coincide.

This can be visualized as follows.

Now we want the gradient of the error $E$ with respect to the kernel $F$, which requires solving the following equations:

$$\frac{\partial E}{\partial F_{11}}=\frac{\partial E}{\partial O_{11}}\cdot\frac{\partial O_{11}}{\partial F_{11}}+\frac{\partial E}{\partial O_{12}}\cdot\frac{\partial O_{12}}{\partial F_{11}}+\frac{\partial E}{\partial O_{21}}\cdot\frac{\partial O_{21}}{\partial F_{11}}+\frac{\partial E}{\partial O_{22}}\cdot\frac{\partial O_{22}}{\partial F_{11}}$$

$$\frac{\partial E}{\partial F_{12}}=\frac{\partial E}{\partial O_{11}}\cdot\frac{\partial O_{11}}{\partial F_{12}}+\frac{\partial E}{\partial O_{12}}\cdot\frac{\partial O_{12}}{\partial F_{12}}+\frac{\partial E}{\partial O_{21}}\cdot\frac{\partial O_{21}}{\partial F_{12}}+\frac{\partial E}{\partial O_{22}}\cdot\frac{\partial O_{22}}{\partial F_{12}}$$

$$\frac{\partial E}{\partial F_{21}}=\frac{\partial E}{\partial O_{11}}\cdot\frac{\partial O_{11}}{\partial F_{21}}+\frac{\partial E}{\partial O_{12}}\cdot\frac{\partial O_{12}}{\partial F_{21}}+\frac{\partial E}{\partial O_{21}}\cdot\frac{\partial O_{21}}{\partial F_{21}}+\frac{\partial E}{\partial O_{22}}\cdot\frac{\partial O_{22}}{\partial F_{21}}$$

$$\frac{\partial E}{\partial F_{22}}=\frac{\partial E}{\partial O_{11}}\cdot\frac{\partial O_{11}}{\partial F_{22}}+\frac{\partial E}{\partial O_{12}}\cdot\frac{\partial O_{12}}{\partial F_{22}}+\frac{\partial E}{\partial O_{21}}\cdot\frac{\partial O_{21}}{\partial F_{22}}+\frac{\partial E}{\partial O_{22}}\cdot\frac{\partial O_{22}}{\partial F_{22}}$$

Because each $O_{ij}$ is a sum of products of input and kernel elements, the partial derivative $\partial O_{ij}/\partial F_{kl}$ is simply the input element that multiplies $F_{kl}$ in $O_{ij}$. The equations therefore become:

$$\frac{\partial E}{\partial F_{11}}=\frac{\partial E}{\partial O_{11}}\cdot X_{11}+\frac{\partial E}{\partial O_{12}}\cdot X_{12}+\frac{\partial E}{\partial O_{21}}\cdot X_{21}+\frac{\partial E}{\partial O_{22}}\cdot X_{22}$$

$$\frac{\partial E}{\partial F_{12}}=\frac{\partial E}{\partial O_{11}}\cdot X_{12}+\frac{\partial E}{\partial O_{12}}\cdot X_{13}+\frac{\partial E}{\partial O_{21}}\cdot X_{22}+\frac{\partial E}{\partial O_{22}}\cdot X_{23}$$

$$\frac{\partial E}{\partial F_{21}}=\frac{\partial E}{\partial O_{11}}\cdot X_{21}+\frac{\partial E}{\partial O_{12}}\cdot X_{22}+\frac{\partial E}{\partial O_{21}}\cdot X_{31}+\frac{\partial E}{\partial O_{22}}\cdot X_{32}$$

$$\frac{\partial E}{\partial F_{22}}=\frac{\partial E}{\partial O_{11}}\cdot X_{22}+\frac{\partial E}{\partial O_{12}}\cdot X_{23}+\frac{\partial E}{\partial O_{21}}\cdot X_{32}+\frac{\partial E}{\partial O_{22}}\cdot X_{33}$$
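The four equations share one pattern. For the stride-1, no-padding setting of this example, it can be summarized as:

$$\frac{\partial E}{\partial F_{kl}} = \sum_{i=1}^{2}\sum_{j=1}^{2} \frac{\partial E}{\partial O_{ij}}\, X_{i+k-1,\,j+l-1}, \qquad k, l \in \{1, 2\}$$

For example, $k = 1, l = 2$ picks out $X_{12}, X_{13}, X_{22}, X_{23}$, which matches the equation for $\partial E/\partial F_{12}$.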

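Looking closely, these equations can be written as a convolution (a correlation, under the convention above) of the input $X$ with the output gradient $\partial E/\partial O$: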
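A minimal sketch to check this, assuming a hypothetical loss $E = \sum_{ij} O_{ij} G_{ij}$ so that $\partial E/\partial O = G$ is known exactly. The helper `correlate2d_valid` is a hypothetical name. The code computes $\partial E/\partial F$ as a single valid correlation of $X$ with $\partial E/\partial O$ and compares the result with central finite differences:

```python
import numpy as np

def correlate2d_valid(x, k):
    """Valid-mode 2-D cross-correlation (stride 1, no padding)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    return np.array([[np.sum(x[i:i + kh, j:j + kw] * k) for j in range(ow)]
                     for i in range(oh)])

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 3))
F = rng.standard_normal((2, 2))
G = rng.standard_normal((2, 2))  # plays the role of dE/dO

def loss(F):
    # Hypothetical loss E = sum(O * G), chosen so that dE/dO is exactly G.
    return np.sum(correlate2d_valid(X, F) * G)

# Analytic gradient: the four chain-rule equations collapse into one correlation.
dF = correlate2d_valid(X, G)

# Central finite differences as an independent check.
eps = 1e-6
dF_num = np.zeros_like(F)
for k in range(2):
    for l in range(2):
        Fp, Fm = F.copy(), F.copy()
        Fp[k, l] += eps
        Fm[k, l] -= eps
        dF_num[k, l] = (loss(Fp) - loss(Fm)) / (2 * eps)

print(np.allclose(dF, dF_num, atol=1e-6))  # True
```

The result has the same 2×2 shape as $F$, as a weight gradient must.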

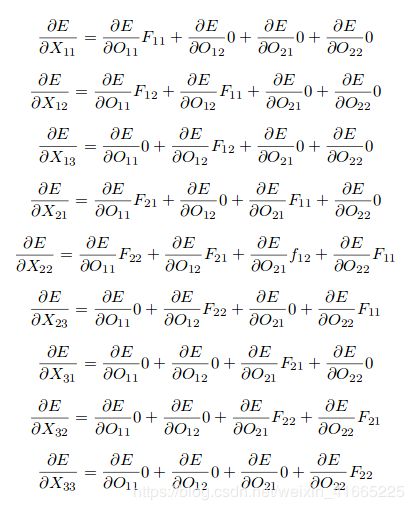

Similarly, we can obtain the gradient of the error $E$ with respect to the input matrix $X$:
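Applying the chain rule element by element, each $X_{mn}$ receives a contribution from every $O_{ij}$ whose 2×2 window covers it. Writing $\delta_{ij}$ as shorthand for $\partial E/\partial O_{ij}$ (notation introduced here):

$$\begin{aligned}
\frac{\partial E}{\partial X_{11}} &= \delta_{11}F_{11} \\
\frac{\partial E}{\partial X_{12}} &= \delta_{11}F_{12} + \delta_{12}F_{11} \\
\frac{\partial E}{\partial X_{13}} &= \delta_{12}F_{12} \\
\frac{\partial E}{\partial X_{21}} &= \delta_{11}F_{21} + \delta_{21}F_{11} \\
\frac{\partial E}{\partial X_{22}} &= \delta_{11}F_{22} + \delta_{12}F_{21} + \delta_{21}F_{12} + \delta_{22}F_{11} \\
\frac{\partial E}{\partial X_{23}} &= \delta_{12}F_{22} + \delta_{22}F_{12} \\
\frac{\partial E}{\partial X_{31}} &= \delta_{21}F_{21} \\
\frac{\partial E}{\partial X_{32}} &= \delta_{21}F_{22} + \delta_{22}F_{21} \\
\frac{\partial E}{\partial X_{33}} &= \delta_{22}F_{22}
\end{aligned}$$

The corners lie in one window and get one term, while the center $X_{22}$ lies in all four windows and gets four.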

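To obtain the input gradient $\partial E/\partial X$, we rotate the kernel $F$ by 180 degrees and compute the full convolution of the output gradient $\partial E/\partial O$ with the rotated kernel, as shown below. "Full" means $\partial E/\partial O$ is zero-padded by one less than the kernel size on each side, so here the result is 3×3, the same size as $X$.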
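A minimal sketch of this step under the same assumptions as before: stride 1, the hypothetical loss $E = \sum_{ij} O_{ij} G_{ij}$, and hypothetical helper names `correlate2d_valid` and `input_grad`. It zero-pads $\partial E/\partial O$, correlates it with the 180-degree-rotated kernel, and checks the result against finite differences:

```python
import numpy as np

def correlate2d_valid(x, k):
    """Valid-mode 2-D cross-correlation (stride 1, no padding)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    return np.array([[np.sum(x[i:i + kh, j:j + kw] * k) for j in range(ow)]
                     for i in range(oh)])

def input_grad(dO, F):
    """dE/dX as a 'full' operation: zero-pad dO by (kernel size - 1) on every side,
    then slide the 180-degree-rotated kernel over it."""
    kh, kw = F.shape
    padded = np.pad(dO, ((kh - 1, kh - 1), (kw - 1, kw - 1)))  # constant zero padding
    return correlate2d_valid(padded, np.rot90(F, 2))

rng = np.random.default_rng(1)
X = rng.standard_normal((3, 3))
F = rng.standard_normal((2, 2))
G = rng.standard_normal((2, 2))  # plays the role of dE/dO

def loss(X):
    # Hypothetical loss E = sum(O * G), so dE/dO = G.
    return np.sum(correlate2d_valid(X, F) * G)

dX = input_grad(G, F)  # 4x4 padded grid, 2x2 kernel -> 3x3, same shape as X

# Central finite differences as an independent check.
eps = 1e-6
dX_num = np.zeros_like(X)
for m in range(3):
    for n in range(3):
        Xp, Xm = X.copy(), X.copy()
        Xp[m, n] += eps
        Xm[m, n] -= eps
        dX_num[m, n] = (loss(Xp) - loss(Xm)) / (2 * eps)

print(np.allclose(dX, dX_num, atol=1e-6))  # True
```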

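Convolution operations are therefore all that is needed to implement both the forward and the backward pass of a convolutional layer.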

Gradients through pooling and ReLU layers can be computed with the chain rule.
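The article does not say which kind of pooling it means. The sketch below assumes ReLU and 2×2 max pooling with stride 2 (non-overlapping windows), and the function names are hypothetical. ReLU passes the upstream gradient only where its input was positive. Max pooling routes each window's gradient to the position that held the maximum:

```python
import numpy as np

def relu_backward(dY, Z):
    """Y = max(0, Z): the local derivative is 1 where Z > 0 and 0 elsewhere,
    so the chain rule just masks the upstream gradient dY."""
    return dY * (Z > 0)

def maxpool2x2_backward(dY, Z):
    """2x2 max pooling, stride 2 (assumes even height and width).
    Each window's gradient goes only to the position of its maximum;
    np.argmax breaks ties by taking the first max in row-major order."""
    dZ = np.zeros_like(Z, dtype=float)
    for i in range(dY.shape[0]):
        for j in range(dY.shape[1]):
            window = Z[2 * i:2 * i + 2, 2 * j:2 * j + 2]
            m, n = np.unravel_index(np.argmax(window), window.shape)
            dZ[2 * i + m, 2 * j + n] = dY[i, j]
    return dZ

Z = np.array([[ 1.0, -2.0,  3.0,  0.5],
              [ 4.0,  0.0, -1.0,  2.0],
              [-3.0,  5.0,  6.0, -4.0],
              [ 2.0,  1.0,  0.0,  7.0]])
dY = np.array([[10.0, 20.0], [30.0, 40.0]])  # upstream gradient for the pooled 2x2 output

dZ_pool = maxpool2x2_backward(dY, Z)
# 10 lands at Z[1, 0], 20 at Z[0, 2], 30 at Z[2, 1], 40 at Z[3, 3]; zeros elsewhere
dZ_relu = relu_backward(np.ones_like(Z), Z)  # 1.0 where Z > 0, else 0.0
```

In a full network these backward functions are applied in reverse layer order, and their output becomes the $\partial E/\partial O$ that feeds the convolution gradients described above.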