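Training SSD on Your Own Data: Detailed Procedure and Troubleshooting Summary

Environment

Ubuntu 16.04 / GPU 1080 Ti / CUDA 8

1. Download the code

Repository: https://github.com/balancap/SSD-Tensorflow

2. Verify the pretrained model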

cd checkpoints/

unzip ssd_300_vgg.ckpt.zip

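Copy the code from ssd_notebook.ipynb into a new script, demo_test.py. The logic is simple; adapt the image paths and similar settings to your own setup.

The code is as follows: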

# coding: utf-8
import os
import math
import random
import time
import sys

import numpy as np
import tensorflow as tf
import cv2
#import matplotlib.pyplot as plt
import matplotlib.image as mpimg

slim = tf.contrib.slim

# Make the repository root importable (the script is run from a subfolder).
sys.path.append('../')
from nets import ssd_vgg_300, ssd_common, np_methods
from preprocessing import ssd_vgg_preprocessing
#import visualization

# TensorFlow session: grow memory when needed. TF, DO NOT USE ALL MY GPU MEMORY!!!
os.environ["CUDA_VISIBLE_DEVICES"] = '0'
gpu_memory_fraction = 0.45
gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=gpu_memory_fraction)
config = tf.ConfigProto(log_device_placement=False, gpu_options=gpu_options)
isess = tf.InteractiveSession(config=config)

# Input placeholder.
net_shape = (300, 300)
data_format = 'NHWC'
img_input = tf.placeholder(tf.uint8, shape=(None, None, 3))

# Evaluation pre-processing: resize to SSD net shape.
image_pre, labels_pre, bboxes_pre, bbox_img = ssd_vgg_preprocessing.preprocess_for_eval(
    img_input, None, None, net_shape, data_format, resize=ssd_vgg_preprocessing.Resize.WARP_RESIZE)
image_4d = tf.expand_dims(image_pre, 0)

# Define the SSD model.
# (The reuse check is carried over from the notebook, where the cell may be re-run.)
reuse = True if 'ssd_net' in locals() else None
ssd_net = ssd_vgg_300.SSDNet()
with slim.arg_scope(ssd_net.arg_scope(data_format=data_format)):
    predictions, localisations, _, _ = ssd_net.net(image_4d, is_training=False, reuse=reuse)

# Restore SSD model.
ckpt_filename = '../checkpoints/ssd_300_vgg.ckpt'
# ckpt_filename = '../checkpoints/VGG_VOC0712_SSD_300x300_ft_iter_120000.ckpt'
isess.run(tf.global_variables_initializer())
saver = tf.train.Saver()
saver.restore(isess, ckpt_filename)

# SSD default anchor boxes.
ssd_anchors = ssd_net.anchors(net_shape)

# Main image processing routine.
def process_image(img, select_threshold=0.5, nms_threshold=.45, net_shape=(300, 300)):
    # Run SSD network.
    rimg, rpredictions, rlocalisations, rbbox_img = isess.run(
        [image_4d, predictions, localisations, bbox_img],
        feed_dict={img_input: img})

    # Get classes and bboxes from the net outputs.
    rclasses, rscores, rbboxes = np_methods.ssd_bboxes_select(
        rpredictions, rlocalisations, ssd_anchors,
        select_threshold=select_threshold, img_shape=net_shape, num_classes=21, decode=True)

    rbboxes = np_methods.bboxes_clip(rbbox_img, rbboxes)
    rclasses, rscores, rbboxes = np_methods.bboxes_sort(rclasses, rscores, rbboxes, top_k=400)
    rclasses, rscores, rbboxes = np_methods.bboxes_nms(rclasses, rscores, rbboxes, nms_threshold=nms_threshold)
    # Resize bboxes to original image shape. Note: useless for Resize.WARP!
    rbboxes = np_methods.bboxes_resize(rbbox_img, rbboxes)
    return rclasses, rscores, rbboxes

# Test on some demo image and visualize output.
# Folder with the test images, and folder for the annotated results
# (the output folder must already exist).
path = '../demo/'
paths = '../demo_save/'
image_names = sorted(os.listdir(path))

# Run over every image in the folder 10 times to measure the average time per image.
t1 = time.time()
for t in range(10):
    for image1 in image_names:
        pathi = path + image1
        # Copy into a fresh array so OpenCV can draw on it (imread may return a read-only array).
        img = np.array(mpimg.imread(pathi))
        #img = cv2.imread(path + 'person.jpg')
        height, width = img.shape[:2]
        rclasses, rscores, rbboxes = process_image(img)

        # Each box is (ymin, xmin, ymax, xmax), normalized to [0, 1].
        obj_cnt, _ = rbboxes.shape
        for i in range(obj_cnt):
            p1, p2, p3, p4 = rbboxes[i, :]
            left = int(width * p2)
            top = int(height * p1)
            right = int(width * p4)
            bottom = int(height * p3)
            cv2.rectangle(img, (left, top), (right, bottom), (0, 255, 0), 3)
        # mpimg loads RGB; OpenCV writes BGR, so reverse the channel order.
        cv2.imwrite(paths + image1, img[:, :, ::-1])
t2 = time.time()
print('Avg time=', (t2 - t1) / 10 / len(image_names))

# visualization.bboxes_draw_on_img(img, rclasses, rscores, rbboxes, visualization.colors_plasma)
#visualization.plt_bboxes(img, rclasses, rscores, rbboxes)
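The drawing loop in the script treats each returned box as a normalized (ymin, xmin, ymax, xmax) row and scales it by the image size. A minimal sketch of that conversion as a standalone helper is shown here; the name `to_pixel_box` and the clipping step are illustrative additions, not part of the repository:

```python
def to_pixel_box(bbox, height, width):
    """Convert a normalized (ymin, xmin, ymax, xmax) box
    into pixel coordinates (left, top, right, bottom)."""
    ymin, xmin, ymax, xmax = bbox

    def clip(v):
        # Keep the value inside [0, 1] so the result stays inside the image
        # (an extra safety step that the demo script does not perform).
        return min(max(float(v), 0.0), 1.0)

    left = int(width * clip(xmin))
    top = int(height * clip(ymin))
    right = int(width * clip(xmax))
    bottom = int(height * clip(ymax))
    return left, top, right, bottom
```

The returned tuple can be passed to `cv2.rectangle` as `(left, top)` and `(right, bottom)`, in the same way as the loop in the script.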

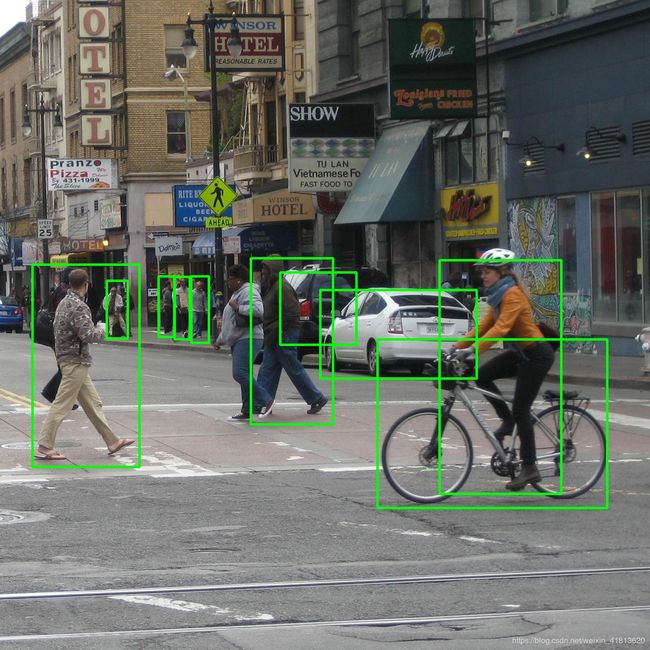

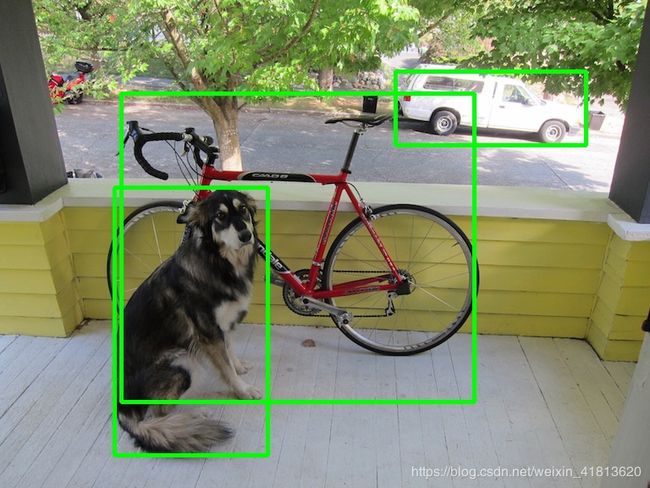

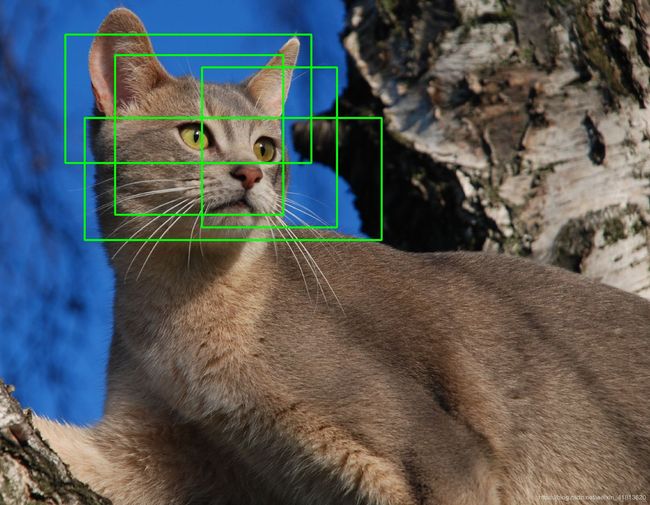

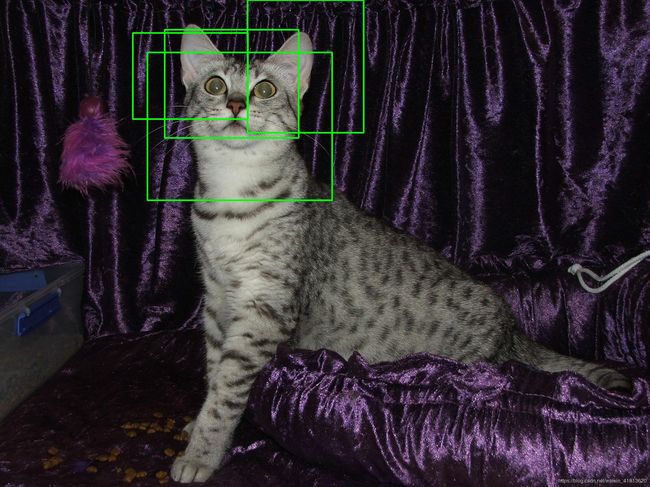

效果如下:

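3. Prepare the training dataset

3.1 For this part, see: https://blog.csdn.net/hitzijiyingcai/article/details/81636455

The VOCdevkit folder can be placed under the scripts directory.

Arrange the training dataset in the folder structure below, and generate the four txt list files for training, testing, and validation.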

VOCdevkit
    VOC2007
        Annotations
        ImageSets
            Layout
            Main
            Segmentation
        JPEGImages

Annotations中是所有的xml文件

JPEGImages中是所有的训练图片

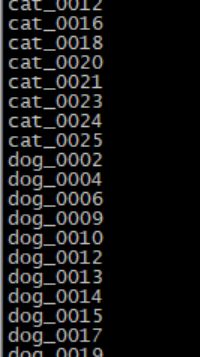

Main中是4个txt文件,其中test.txt是测试集,train.txt是训练集,val.txt是验证集,trainval.txt是训练和验证集。

4个文件中内容如下,只保留文件名称,没有后缀。
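One possible way to generate the four list files is sketched below. It assumes every image has an xml file of the same base name in Annotations; the function name `write_voc_splits`, the split ratios, and the fixed random seed are hypothetical choices for illustration, not values from the referenced article:

```python
import os
import random


def write_voc_splits(annotations_dir, main_dir,
                     trainval_ratio=0.9, train_ratio=0.8, seed=0):
    """Write trainval.txt, train.txt, val.txt and test.txt into main_dir.

    Each file lists base names only (no extension), one per line.
    """
    ids = sorted(os.path.splitext(f)[0]
                 for f in os.listdir(annotations_dir) if f.endswith('.xml'))
    random.Random(seed).shuffle(ids)  # reproducible shuffle

    n_trainval = int(len(ids) * trainval_ratio)
    n_train = int(n_trainval * train_ratio)
    splits = {
        'trainval': ids[:n_trainval],
        'train': ids[:n_train],
        'val': ids[n_train:n_trainval],
        'test': ids[n_trainval:],
    }

    if not os.path.isdir(main_dir):
        os.makedirs(main_dir)
    for split_name, names in splits.items():
        with open(os.path.join(main_dir, split_name + '.txt'), 'w') as f:
            for name in sorted(names):
                f.write(name + '\n')
    return splits
```

A call such as `write_voc_splits('VOCdevkit/VOC2007/Annotations', 'VOCdevkit/VOC2007/ImageSets/Main')` would fill the Main folder; train and val together always make up trainval, and test is kept separate.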

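3.2

Once the data is prepared in VOC format, it has to be converted to TFRecord format. Follow these steps:

(1) Modify the class information in datasets/pascalvoc_common.py

Change the training classes to your own:

VOC_LABELS = {
    'none': (0, 'Background'),
    'cat': (1, 'Animal'),
    'dog': (2, 'Animal'),
}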

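(2) Modify datasets/pascalvoc_to_tfrecords.py:

Line 67 defines how many images each tfrecords file contains:

SAMPLES_PER_FILES = 600

On line 82, change the image extension to match your own data; on line 83, the file must be opened in 'rb' mode so that the image is read as raw bytes:

filename = directory + DIRECTORY_IMAGES + name + '.jpg'
image_data = tf.gfile.FastGFile(filename, 'rb').read()

(3) Run tf_convert_data.py to generate the tfrecords

The --output_dir path must be created beforehand: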

python tf_convert_data.py --dataset_name=pascalvoc --dataset_dir=./VOCdevkit/VOC2007/ --output_name=voc_2007_train --output_dir=./train_data/peg2
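Two small checks can be made before running the conversion: that the output folder exists, and roughly how many tfrecord files to expect. The sketch below assumes the converter fills each file with up to SAMPLES_PER_FILES images before starting the next one; the helper names and the image count of 1500 are hypothetical:

```python
import math
import os


def prepare_output_dir(output_dir):
    """Create the conversion output folder if it does not exist yet."""
    if not os.path.isdir(output_dir):
        os.makedirs(output_dir)
    return output_dir


def expected_shard_count(num_images, samples_per_file=600):
    """Number of tfrecord files when each holds at most samples_per_file images."""
    return int(math.ceil(num_images / float(samples_per_file)))


if __name__ == '__main__':
    prepare_output_dir('./train_data/peg2')
    # Hypothetical dataset of 1500 images with SAMPLES_PER_FILES = 600.
    print(expected_shard_count(1500))
```

If the number of files produced differs noticeably from this estimate, it is worth checking whether some images or annotations were skipped.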

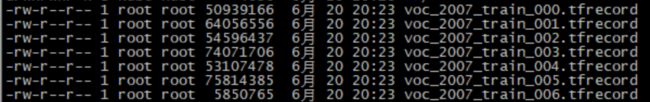

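Intermediate output:

List of the generated tfrecord files:

4. Training

4.1 Modify the relevant files

(1) train_ssd_network.py

The following parameters can also be passed on the command line: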

tf.app.flags.DEFINE_integer(
    'save_summaries_secs', 600,  # interval for saving summaries
    'The frequency with which summaries are saved, in seconds.')
tf.app.flags.DEFINE_integer(
    'save_interval_secs', 1800,  # interval for saving the model
    'The frequency with which the model is saved, in seconds.')
tf.app.flags.DEFINE_integer(  # number of classes
    'num_classes', 3, 'Number of classes to use in the dataset.')
tf.app.flags.DEFINE_integer(
    'batch_size', 64, 'The number of samples in each batch.')
tf.app.flags.DEFINE_integer('max_number_of_steps', 50000,  # number of iterations
                            'The maximum number of training steps.')

(2) eval_ssd_network.py

tf.app.flags.DEFINE_integer(
    'num_classes', 3, 'Number of classes to use in the dataset.')

(3) nets/ssd_vgg_300.py

default_params = SSDParams(
    img_shape=(300, 300),
    num_classes=3,  # number of classes
    no_annotation_label=3,  # number of classes
    # (remaining fields unchanged)

(4) datasets/pascalvoc_2007.py

TRAIN_STATISTICS = {
    'none': (0, 0),
    'cat': (952, 952),  # presumably (number of images, number of objects)
    'dog': (2033, 2033),
    'total': (2985, 2985),
}
TEST_STATISTICS = {
    'none': (0, 0),
    'cat': (116, 116),
    'dog': (253, 253),
    'total': (369, 369),
}
SPLITS_TO_SIZES = {
    'train': 300,
    'test': 300,
}
NUM_CLASSES = 2

(5) caffe_to_tensorflow.py

'num_classes', 3, 'Number of classes in the dataset.')
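The settings above use two different counts: 3 for the num_classes values and 2 for NUM_CLASSES in pascalvoc_2007.py. Judging from the values in this article, the first includes the background class and the second does not. A small sketch that derives both from the label table, so that they stay consistent (the helper name `class_counts` is hypothetical):

```python
# Label table from step 3.2 (1): name -> (class id, category).
VOC_LABELS = {
    'none': (0, 'Background'),
    'cat': (1, 'Animal'),
    'dog': (2, 'Animal'),
}


def class_counts(labels):
    """Return (count including background, count excluding background)."""
    ids = sorted(class_id for class_id, _ in labels.values())
    # Class ids are expected to be contiguous and start at 0 (background).
    if ids != list(range(len(labels))):
        raise ValueError('class ids must be 0..N-1 without gaps: %r' % ids)
    with_background = len(labels)
    without_background = sum(1 for name in labels if name != 'none')
    return with_background, without_background


if __name__ == '__main__':
    num_classes, NUM_CLASSES = class_counts(VOC_LABELS)
    print(num_classes, NUM_CLASSES)
```

For the two-class cat/dog example this gives 3 and 2, matching the values set by hand in the files above.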

4.2 Load the pretrained VGG16 model

Link: https://pan.baidu.com/s/1diWbdJdjVbB3AWN99406nA Password: ge3x

Unzip it and place it in the checkpoints directory.

4.3 Start training

python ./train_ssd_network.py \
    --train_dir=./train_model/ \
    --dataset_dir=./train_data/peg2/ \
    --dataset_name=pascalvoc_2007 \
    --dataset_split_name=train \
    --model_name=ssd_300_vgg \
    --checkpoint_path=./checkpoints/vgg_16.ckpt \
    --checkpoint_model_scope=vgg_16 \
    --checkpoint_exclude_scopes=ssd_300_vgg/conv6,ssd_300_vgg/conv7,ssd_300_vgg/block8,ssd_300_vgg/block9,ssd_300_vgg/block10,ssd_300_vgg/block11,ssd_300_vgg/block4_box,ssd_300_vgg/block7_box,ssd_300_vgg/block8_box,ssd_300_vgg/block9_box,ssd_300_vgg/block10_box,ssd_300_vgg/block11_box \
    --trainable_scopes=ssd_300_vgg/conv6,ssd_300_vgg/conv7,ssd_300_vgg/block8,ssd_300_vgg/block9,ssd_300_vgg/block10,ssd_300_vgg/block11,ssd_300_vgg/block4_box,ssd_300_vgg/block7_box,ssd_300_vgg/block8_box,ssd_300_vgg/block9_box,ssd_300_vgg/block10_box,ssd_300_vgg/block11_box \
    --save_summaries_secs=60 \
    --save_interval_secs=1200 \
    --weight_decay=0.0005 \
    --optimizer=adam \
    --learning_rate=0.001 \
    --batch_size=32 \
    --learning_rate_decay_factor=0.94 \
    --gpu_memory_fraction=0.90

Notes on the parameters:

--train_dir: where the trained model files are stored
--dataset_dir: where the data is stored
--model_name: name of the model to load
--checkpoint_path: the pretrained model
--save_interval_secs=1200: save the model every 1200 s
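The long scope lists in the command are easy to mistype by hand. A minimal sketch that builds the same comma-separated string from a list of layer names (the names `NEW_LAYERS` and `scope_list` are illustrative, not part of the repository):

```python
# Layers that are excluded from the VGG16 checkpoint and trained from scratch,
# taken from the command in this section.
NEW_LAYERS = [
    'conv6', 'conv7', 'block8', 'block9', 'block10', 'block11',
    'block4_box', 'block7_box', 'block8_box',
    'block9_box', 'block10_box', 'block11_box',
]


def scope_list(model_scope, layers):
    """Join layer names into the 'scope/layer,scope/layer,...' form used by the flags."""
    return ','.join('%s/%s' % (model_scope, layer) for layer in layers)


if __name__ == '__main__':
    scopes = scope_list('ssd_300_vgg', NEW_LAYERS)
    print('--checkpoint_exclude_scopes=' + scopes)
    print('--trainable_scopes=' + scopes)
```

The same string is used for both the excluded scopes and the trainable scopes in the command.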

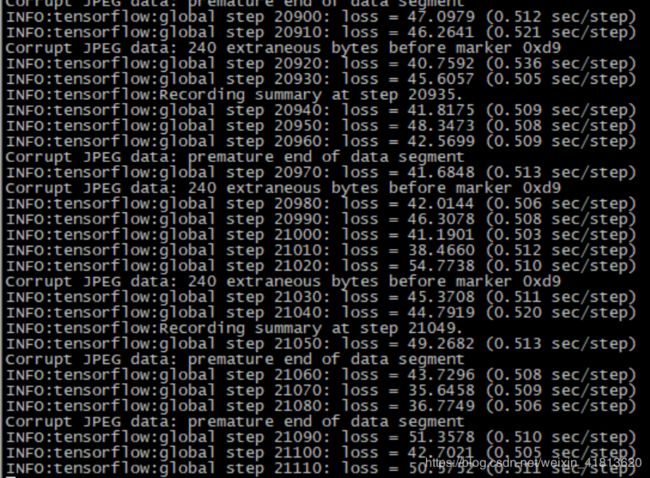

Intermediate output:

Results after 10343 training steps:

5. Problems

5.1 Error when loading the model

InvalidArgumentError (see above for traceback): Assign requires shapes of both tensors to match. lhs shape= [3,3,256,12] rhs shape= [3,3,256,84]

[[Node: save/Assign_5 = Assign[T=DT_FLOAT, _class=["loc:@ssd_300_vgg/block10_box/conv_cls/weights"], use_locking=true, validate_shape=true, _device="/job:localhost/replica:0/task:0/device:GPU:0"](ssd_300_vgg/block10_box/conv_cls/weights, save/RestoreV2_5/_11)]]

Cause: some of the files listed in 4.1 were not modified, so loading the trained model raises this error.
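The two shapes in the message can be read as class counts. Assuming the classification layer outputs num_anchors × num_classes channels (the usual SSD head layout), 84 = 4 × 21 corresponds to the 21 classes of the original VOC setup and 12 = 4 × 3 to the 3 classes used here. The value of 4 anchors is inferred from these numbers rather than quoted from the repository, and the helper name is hypothetical:

```python
def anchors_from_shape(weight_shape, num_classes):
    """Infer the anchors per location from a conv_cls weight shape.

    Assumes out_channels = num_anchors * num_classes.
    """
    out_channels = weight_shape[-1]
    if out_channels % num_classes != 0:
        raise ValueError('%d channels do not divide into %d classes'
                         % (out_channels, num_classes))
    return out_channels // num_classes


if __name__ == '__main__':
    # Shapes copied from the error message above.
    print(anchors_from_shape([3, 3, 256, 12], 3))   # graph built with 3 classes
    print(anchors_from_shape([3, 3, 256, 84], 21))  # weights saved with 21 classes
```

Both shapes give the same anchor count, which suggests that only the class count differs between the graph and the saved weights. This is consistent with a num_classes setting from 4.1 being left at its original value in one of the files.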

References:

https://blog.csdn.net/duanyajun987/article/details/81564081

https://github.com/balancap/SSD-Tensorflow/issues/88

https://github.com/balancap/SSD-Tensorflow/issues/69