Spark 2.3.0: Querying HBase Data through Phoenix 4.7

0. Environment

| Software | Version |
| --- | --- |
| JDK | 1.8 |
| Scala | 2.11.8 |
| Spark | 2.3.0 |
| Phoenix | 4.7.0 |
| HBase | 1.1.2 |

1. Sample data

The table and rows to query, created in Phoenix:

```sql
CREATE TABLE TABLE1 (ID BIGINT NOT NULL PRIMARY KEY, COL1 VARCHAR);

UPSERT INTO TABLE1 (ID, COL1) VALUES (1, 'test_row_1');
UPSERT INTO TABLE1 (ID, COL1) VALUES (2, 'test_row_2');
```
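If the Phoenix shell (sqlline) is not at hand, the same sample rows can also be written from Scala over plain JDBC. The sketch below assumes the Phoenix client jar is on the classpath and reuses the ZooKeeper quorum from the code in section 2; the object name `SeedTable1` is hypothetical, and `IF NOT EXISTS` is added so the script can be re-run. Note the explicit commit: Phoenix connections have auto-commit turned off by default, so UPSERTs are buffered on the client until `commit()` is called.

```scala
import java.sql.{Connection, DriverManager}

// Hypothetical helper: seeds the sample table through the Phoenix JDBC driver.
object SeedTable1 {

  // The statements from section 1, without trailing semicolons (one statement per JDBC call).
  val statements: Seq[String] = Seq(
    "CREATE TABLE IF NOT EXISTS TABLE1 (ID BIGINT NOT NULL PRIMARY KEY, COL1 VARCHAR)",
    "UPSERT INTO TABLE1 (ID, COL1) VALUES (1, 'test_row_1')",
    "UPSERT INTO TABLE1 (ID, COL1) VALUES (2, 'test_row_2')"
  )

  /** Executes every statement on `conn`, then commits the buffered UPSERTs. */
  def seed(conn: Connection): Unit = {
    val stmt = conn.createStatement()
    try statements.foreach(sql => stmt.execute(sql))
    finally stmt.close()
    // Phoenix defaults to auto-commit off, so nothing reaches HBase until commit().
    if (!conn.getAutoCommit) conn.commit()
  }

  def main(args: Array[String]): Unit = {
    // Assumes the Phoenix client jar is on the classpath and the quorum is reachable.
    val conn = DriverManager.getConnection("jdbc:phoenix:hadoop002,hadoop004,hadoop003")
    try seed(conn)
    finally conn.close()
  }
}
```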

2. Code:

```scala
package com.zl

import java.util.Properties

import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

/**
 * Reads rows of the Phoenix table TABLE1 through Spark's generic JDBC data source.
 */
object App {

  private val DB_PHOENIX_DRIVER = "org.apache.phoenix.jdbc.PhoenixDriver"
  // The part after "jdbc:phoenix:" is the ZooKeeper quorum of the HBase cluster
  private val DB_PHOENIX_URL = "jdbc:phoenix:hadoop002,hadoop004,hadoop003"
  private val DB_PHOENIX_USER = ""
  private val DB_PHOENIX_PASS = ""
  private val DB_PHOENIX_FETCHSIZE = "10000"

  /**
   * SQL query used to load the data. Spark places this string in the FROM clause
   * of the queries it generates, so the subquery is written in parentheses with an alias.
   */
  private val SQL_QUERY = " ( SELECT ID , COL1 FROM TABLE1 limit 10 ) events "

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf()
      .setAppName("phoenix-test")
      .setMaster("local[*]")

    // getOrCreate() also creates the underlying SparkContext
    val sparkSession = SparkSession.builder().config(conf).getOrCreate()

    // JDBC connection properties
    val connProp = new Properties
    connProp.put("driver", DB_PHOENIX_DRIVER)
    connProp.put("user", DB_PHOENIX_USER)
    connProp.put("password", DB_PHOENIX_PASS)
    connProp.put("fetchsize", DB_PHOENIX_FETCHSIZE)

    val rows = sparkSession.read.jdbc(DB_PHOENIX_URL, SQL_QUERY, connProp).javaRDD

    // JavaRDD.collect() returns a java.util.List, whose toString prints the rows readably
    println(rows.collect())

    sparkSession.stop()
  }
}
```
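The connection settings above are hard-coded. The string after `jdbc:phoenix:` is the ZooKeeper quorum of the HBase cluster; Phoenix also accepts the ZooKeeper port and the HBase root znode as further colon-separated parts, which matters when a cluster does not use the HBase defaults (port 2181 and znode `/hbase`; HDP clusters, for instance, commonly use `/hbase-unsecure`). Below is a minimal sketch of helpers that build the URL, the connection properties and the aliased subquery passed as the `table` argument; the object and method names are hypothetical.

```scala
import java.util.Properties

// Hypothetical helpers mirroring the constants used in App.
object PhoenixJdbc {

  val Driver = "org.apache.phoenix.jdbc.PhoenixDriver"

  /** jdbc:phoenix:<zk host,...>:<zk port>:<HBase root znode>; defaults are HBase's own defaults. */
  def url(zkHosts: Seq[String], zkPort: Int = 2181, znode: String = "/hbase"): String = {
    require(zkHosts.nonEmpty, "at least one ZooKeeper host is required")
    s"jdbc:phoenix:${zkHosts.mkString(",")}:$zkPort:$znode"
  }

  /** The same four keys App sets; Spark reads `driver` and `fetchsize`, the driver gets user/password. */
  def connectionProperties(user: String = "", password: String = "", fetchSize: Int = 10000): Properties = {
    val props = new Properties()
    props.setProperty("driver", Driver)
    props.setProperty("user", user)
    props.setProperty("password", password)
    props.setProperty("fetchsize", fetchSize.toString)
    props
  }

  /** Spark puts the `table` argument into a FROM clause, so a query is parenthesized and aliased. */
  def subquery(sql: String, alias: String): String = s"($sql) $alias"
}
```

With these, the read in `main` could be written as `sparkSession.read.jdbc(PhoenixJdbc.url(Seq("hadoop002", "hadoop004", "hadoop003")), PhoenixJdbc.subquery("SELECT ID, COL1 FROM TABLE1 LIMIT 10", "events"), PhoenixJdbc.connectionProperties())`. Spelling out port and znode makes the URL independent of whatever `hbase-site.xml` happens to be on the client classpath.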

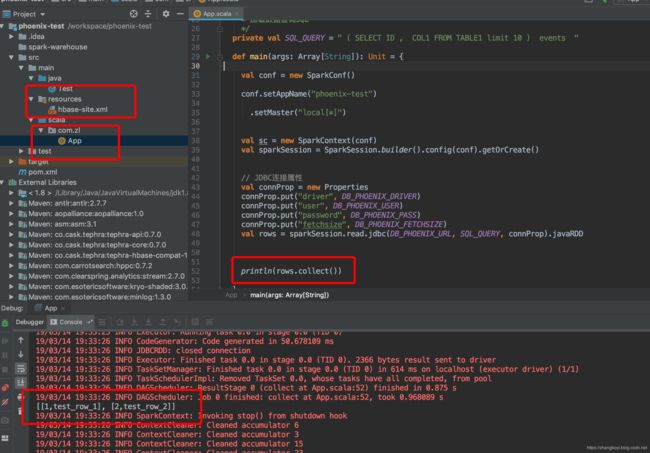

3. Project structure and run result

4. pom.xml of the new project:

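```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <parent>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-parent_2.11</artifactId>
    <version>2.3.2</version>
    <relativePath>../pom.xml</relativePath>
  </parent>

  <artifactId>spark-core_2.11</artifactId>
  <properties>
    <sbt.project.name>core</sbt.project.name>
  </properties>
  <packaging>jar</packaging>
  <name>Spark Project Core</name>
  <url>http://spark.apache.org/</url>

  <dependencies>
    <dependency>
      <groupId>org.apache.avro</groupId>
      <artifactId>avro</artifactId>
    </dependency>
    <dependency>
      <groupId>org.apache.avro</groupId>
      <artifactId>avro-mapred</artifactId>
      <classifier>${avro.mapred.classifier}</classifier>
    </dependency>
    <dependency>
      <groupId>com.google.guava</groupId>
      <artifactId>guava</artifactId>
    </dependency>
    <dependency>
      <groupId>com.twitter</groupId>
      <artifactId>chill_${scala.binary.version}</artifactId>
    </dependency>
    <dependency>
      <groupId>com.twitter</groupId>
      <artifactId>chill-java</artifactId>
    </dependency>
    <dependency>
      <groupId>org.apache.xbean</groupId>
      <artifactId>xbean-asm5-shaded</artifactId>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-launcher_${scala.binary.version}</artifactId>
      <version>${project.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-kvstore_${scala.binary.version}</artifactId>
      <version>${project.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-network-common_${scala.binary.version}</artifactId>
      <version>${project.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-network-shuffle_${scala.binary.version}</artifactId>
      <version>${project.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-unsafe_${scala.binary.version}</artifactId>
      <version>${project.version}</version>
    </dependency>
    <dependency>
      <groupId>net.java.dev.jets3t</groupId>
      <artifactId>jets3t</artifactId>
    </dependency>
    <dependency>
      <groupId>org.apache.curator</groupId>
      <artifactId>curator-recipes</artifactId>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-plus</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-security</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-util</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-server</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-http</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-continuation</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-servlet</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-proxy</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-client</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-servlets</artifactId>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>javax.servlet</groupId>
      <artifactId>javax.servlet-api</artifactId>
      <version>${javaxservlet.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.commons</groupId>
      <artifactId>commons-lang3</artifactId>
    </dependency>
    <dependency>
      <groupId>org.apache.commons</groupId>
      <artifactId>commons-math3</artifactId>
    </dependency>
    <dependency>
      <groupId>com.google.code.findbugs</groupId>
      <artifactId>jsr305</artifactId>
    </dependency>
    <dependency>
      <groupId>org.slf4j</groupId>
      <artifactId>slf4j-api</artifactId>
    </dependency>
    <dependency>
      <groupId>org.slf4j</groupId>
      <artifactId>jul-to-slf4j</artifactId>
    </dependency>
    <dependency>
      <groupId>org.slf4j</groupId>
      <artifactId>jcl-over-slf4j</artifactId>
    </dependency>
    <dependency>
      <groupId>log4j</groupId>
      <artifactId>log4j</artifactId>
    </dependency>
    <dependency>
      <groupId>org.slf4j</groupId>
      <artifactId>slf4j-log4j12</artifactId>
    </dependency>
    <dependency>
      <groupId>com.ning</groupId>
      <artifactId>compress-lzf</artifactId>
    </dependency>
    <dependency>
      <groupId>org.xerial.snappy</groupId>
      <artifactId>snappy-java</artifactId>
    </dependency>
    <dependency>
      <groupId>org.lz4</groupId>
      <artifactId>lz4-java</artifactId>
    </dependency>
    <dependency>
      <groupId>com.github.luben</groupId>
      <artifactId>zstd-jni</artifactId>
    </dependency>
    <dependency>
      <groupId>org.roaringbitmap</groupId>
      <artifactId>RoaringBitmap</artifactId>
    </dependency>
    <dependency>
      <groupId>commons-net</groupId>
      <artifactId>commons-net</artifactId>
    </dependency>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
    </dependency>
    <dependency>
      <groupId>org.json4s</groupId>
      <artifactId>json4s-jackson_${scala.binary.version}</artifactId>
    </dependency>
    <dependency>
      <groupId>org.glassfish.jersey.core</groupId>
      <artifactId>jersey-client</artifactId>
    </dependency>
    <dependency>
      <groupId>org.glassfish.jersey.core</groupId>
      <artifactId>jersey-common</artifactId>
    </dependency>
    <dependency>
      <groupId>org.glassfish.jersey.core</groupId>
      <artifactId>jersey-server</artifactId>
    </dependency>
    <dependency>
      <groupId>org.glassfish.jersey.containers</groupId>
      <artifactId>jersey-container-servlet</artifactId>
    </dependency>
    <dependency>
      <groupId>org.glassfish.jersey.containers</groupId>
      <artifactId>jersey-container-servlet-core</artifactId>
    </dependency>
    <dependency>
      <groupId>io.netty</groupId>
      <artifactId>netty-all</artifactId>
    </dependency>
    <dependency>
      <groupId>io.netty</groupId>
      <artifactId>netty</artifactId>
    </dependency>
    <dependency>
      <groupId>com.clearspring.analytics</groupId>
      <artifactId>stream</artifactId>
    </dependency>
    <dependency>
      <groupId>io.dropwizard.metrics</groupId>
      <artifactId>metrics-core</artifactId>
    </dependency>
    <dependency>
      <groupId>io.dropwizard.metrics</groupId>
      <artifactId>metrics-jvm</artifactId>
    </dependency>
    <dependency>
      <groupId>io.dropwizard.metrics</groupId>
      <artifactId>metrics-json</artifactId>
    </dependency>
    <dependency>
      <groupId>io.dropwizard.metrics</groupId>
      <artifactId>metrics-graphite</artifactId>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.core</groupId>
      <artifactId>jackson-databind</artifactId>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.module</groupId>
      <artifactId>jackson-module-scala_${scala.binary.version}</artifactId>
    </dependency>
    <dependency>
      <groupId>org.apache.derby</groupId>
      <artifactId>derby</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.ivy</groupId>
      <artifactId>ivy</artifactId>
    </dependency>
    <dependency>
      <groupId>oro</groupId>
      <artifactId>oro</artifactId>
      <version>${oro.version}</version>
    </dependency>
    <dependency>
      <groupId>org.seleniumhq.selenium</groupId>
      <artifactId>selenium-java</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.seleniumhq.selenium</groupId>
      <artifactId>selenium-htmlunit-driver</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>xml-apis</groupId>
      <artifactId>xml-apis</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.hamcrest</groupId>
      <artifactId>hamcrest-core</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.hamcrest</groupId>
      <artifactId>hamcrest-library</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.mockito</groupId>
      <artifactId>mockito-core</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.scalacheck</groupId>
      <artifactId>scalacheck_${scala.binary.version}</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.curator</groupId>
      <artifactId>curator-test</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>net.razorvine</groupId>
      <artifactId>pyrolite</artifactId>
      <version>4.13</version>
      <exclusions>
        <exclusion>
          <groupId>net.razorvine</groupId>
          <artifactId>serpent</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>net.sf.py4j</groupId>
      <artifactId>py4j</artifactId>
      <version>0.10.7</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-tags_${scala.binary.version}</artifactId>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-launcher_${scala.binary.version}</artifactId>
      <version>${project.version}</version>
      <classifier>tests</classifier>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-tags_${scala.binary.version}</artifactId>
      <type>test-jar</type>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.commons</groupId>
      <artifactId>commons-crypto</artifactId>
    </dependency>
    <dependency>
      <groupId>${hive.group}</groupId>
      <artifactId>hive-exec</artifactId>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>${hive.group}</groupId>
      <artifactId>hive-metastore</artifactId>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.thrift</groupId>
      <artifactId>libthrift</artifactId>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.thrift</groupId>
      <artifactId>libfb303</artifactId>
      <scope>provided</scope>
    </dependency>
  </dependencies>

  <build>
    <outputDirectory>target/scala-${scala.binary.version}/classes</outputDirectory>
    <testOutputDirectory>target/scala-${scala.binary.version}/test-classes</testOutputDirectory>
    <resources>
      <resource>
        <directory>${project.basedir}/src/main/resources</directory>
      </resource>
      <resource>
        <directory>${project.build.directory}/extra-resources</directory>
        <filtering>true</filtering>
      </resource>
    </resources>
    <plugins>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-antrun-plugin</artifactId>
        <executions>
          <execution>
            <phase>generate-resources</phase>
            <goals>
              <goal>run</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-dependency-plugin</artifactId>
        <executions>
          <execution>
            <id>copy-dependencies</id>
            <phase>package</phase>
            <goals>
              <goal>copy-dependencies</goal>
            </goals>
            <configuration>
              <outputDirectory>${project.build.directory}</outputDirectory>
              <overWriteReleases>false</overWriteReleases>
              <overWriteSnapshots>false</overWriteSnapshots>
              <overWriteIfNewer>true</overWriteIfNewer>
              <useSubDirectoryPerType>true</useSubDirectoryPerType>
              <includeArtifactIds>guava,jetty-io,jetty-servlet,jetty-servlets,jetty-continuation,jetty-http,jetty-plus,jetty-util,jetty-server,jetty-security,jetty-proxy,jetty-client</includeArtifactIds>
              <silent>true</silent>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>

  <profiles>
    <profile>
      <id>Windows</id>
      <activation>
        <os>
          <family>Windows</family>
        </os>
      </activation>
      <properties>
        <script.extension>.bat</script.extension>
      </properties>
    </profile>
    <profile>
      <id>unix</id>
      <activation>
        <os>
          <family>unix</family>
        </os>
      </activation>
      <properties>
        <script.extension>.sh</script.extension>
      </properties>
    </profile>
    <profile>
      <id>sparkr</id>
      <build>
        <plugins>
          <plugin>
            <groupId>org.codehaus.mojo</groupId>
            <artifactId>exec-maven-plugin</artifactId>
            <executions>
              <execution>
                <id>sparkr-pkg</id>
                <phase>compile</phase>
                <goals>
                  <goal>exec</goal>
                </goals>
              </execution>
            </executions>
            <configuration>
              <executable>${project.basedir}${file.separator}..${file.separator}R${file.separator}install-dev${script.extension}</executable>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </profile>
  </profiles>
</project>
```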
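The POM listing above carries the coordinates of Spark's own `spark-core_2.11` module and contains no Phoenix artifact, yet `App` loads `org.apache.phoenix.jdbc.PhoenixDriver` at run time. If the Phoenix client jar is missing from the classpath, the JDBC read typically fails with a `ClassNotFoundException` for that class. The Phoenix 4.7.0 builds for HBase 1.1 are published under versions such as `4.7.0-HBase-1.1` (for example `org.apache.phoenix:phoenix-core`); check the exact coordinate against your distribution. A quick classpath check, as a minimal sketch (the object name `DriverCheck` is hypothetical):

```scala
import scala.util.Try

// Hypothetical diagnostic: reports whether a JDBC driver class can be loaded.
object DriverCheck {

  /** True if `className` is loadable by this object's class loader (the class is not initialized). */
  def isOnClasspath(className: String): Boolean =
    Try(Class.forName(className, false, getClass.getClassLoader)).isSuccess

  def main(args: Array[String]): Unit = {
    val phoenixDriver = "org.apache.phoenix.jdbc.PhoenixDriver"
    if (isOnClasspath(phoenixDriver)) println(s"$phoenixDriver found")
    else println(s"$phoenixDriver missing: add the Phoenix client jar to the build")
  }
}
```

Running it with the same classpath as `App` separates dependency problems from connectivity problems (ZooKeeper quorum, znode) before Spark is involved.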