10 Software Tools You Should Know

Posted by Jason Sachs on May 20 2012 under Tools | Software Development

Unless you're designing small analog electronic circuits, it's pretty hard these days to get things done in embedded systems design without the help of computers. I thought I'd share a list of software tools that help me get my job done. Most of these are free or inexpensive. Most of them are also for working with software. If you never have to design, read, or edit any software, then you're one of a few people that won't benefit from reading this.

Disclaimer: the "best" software tools are usually a matter of opinion. You may not agree with my opinion, so just take it for what it's worth.

1. Revision control systems -- whether you work in a team of 100, or you're just by yourself, if you're working on a design, you should be using revision control software. This is software that lets you manage different versions of documents, whether they're schematics or source code. Text documents like source code or configuration files especially lend themselves to revision control, because it's easy to view differences between versions and merge changes from one version to another, especially if there's more than one person working on a group of documents.

A collection of documents in a revision control system is called a repository. Where the repository is located depends, in a way, on what kind of revision control system you're using -- there are two basic categories of revision control systems: centralized and distributed. Centralized version control involves a server that contains the repository. When you reach a convenient point in time, you commit your changes to the repository. A distributed version control system (DVCS) doesn't need a centralized server: each person working on the repository has their own local cache (including the entire history) and it's possible to transfer new commits from one repository to another, either by "pushing" from the source system or "pulling" from the destination system.

The three most prevalent revision control systems in the open-source community in 2012 are Subversion (SVN), Mercurial (hg), and Git. Subversion is centralized, but Mercurial and Git are distributed.

I use Mercurial for my personal software development. Setting up a repository is as easy as going into the root of the directory where you're working and typing hg init. You then add the files you like with hg add and then commit with hg commit. It takes me only a few minutes and all of a sudden I have the ability to go back to an earlier version of a file. I do this especially with server configuration files on my home PC (for the Apache webserver, for instance) -- if I make a change in one file and it causes a bug, I can just roll back to an earlier version of the file.

If you're the only one working on a project, it's ridiculously easy to manage a revision control system -- just commit every now and then, when you reach a good stopping point. If you're working with others, there are ways to reconcile the conflicts caused when one of you makes a change and another makes a different change; this is called "merging" and for text documents it's usually pretty easy as long as you do it frequently; for binary documents it's next to impossible and the appropriate way to handle that is to place a lock on a document in the repository that essentially declares that you're working on a particular file and no one else should.

All three of these systems (svn, hg, and git) were started as command-line tools, but all have a variety of different GUI front ends, including the Tortoise series of UIs that is a lightweight add-on to the system file explorer in your operating system (e.g. Windows Explorer or the Mac OSX Finder). They are a little bit less easy to use than non-free version control systems; we've used SurroundSCM at work, and while I dislike the back-end behavior somewhat, I've found the Surround UI much easier to use to reconcile differences between branches.

It's also worth noting that there are many online hosting systems for repositories, including bitbucket.org, SourceForge, Google Code, and GitHub; all of these offer free hosting for public repositories; bitbucket gives you free private repository hosting for your own personal projects having up to 5 users. I would definitely recommend using a DVCS like Mercurial or Git, because it means you can transfer your project from one hosting site to another, and even if the site goes down temporarily you still have your local copy of the repository you can work with.

2. File comparison tools -- Whether you're merging source code into a revision control system, or you're just trying to see what the difference is between one file and another, you'll need file comparison software. There are command-line tools like "diff", and there are GUI file comparison programs. I like the program called Beyond Compare -- it's not free, but it's inexpensive and very easy to use. It also allows you to compare two directories, and lets you do a 3-way file compare. There are Windows and Linux versions of Beyond Compare, and an OSX version is in the works but not available yet. Other tools out there are SourceGear DiffMerge (free, cross-platform = Win/OSX/Linux), Compare It! (inexpensive, Windows only), DeltaWalker (moderate cost, OSX) and UltraCompare (moderate cost, cross-platform).
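For a quick scripted comparison, a text diff can be sketched with Python's standard difflib module. This is only an illustration: the function name, file names, and sample contents are made up, and a GUI tool is still far more comfortable for merging.

```python
import difflib

def unified_diff_text(old_text, new_text, old_name="old.txt", new_name="new.txt"):
    """Return a unified diff (similar to `diff -u`) between two strings."""
    old_lines = old_text.splitlines(keepends=True)
    new_lines = new_text.splitlines(keepends=True)
    return "".join(difflib.unified_diff(old_lines, new_lines,
                                        fromfile=old_name, tofile=new_name))

# Hypothetical before/after versions of a small source file.
before = "int gain = 3;\nint offset = 0;\n"
after = "int gain = 4;\nint offset = 0;\n"
print(unified_diff_text(before, after))
```

Lines starting with "-" were removed and lines starting with "+" were added; identical inputs produce an empty string.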

3. Editors -- working with text files can be easy or difficult depending on the software you're using. The heavyweight editors are the integrated development environments (IDEs) like Eclipse or NetBeans or Visual Studio. These are great if you're working with specific software tools for programming a particular processor. But sometimes you just need to edit a file quickly. The basic editors that come with the operating system (like Notepad in Windows, or TextEdit on the Mac) are lightweight but don't have many features. I like to have a middleweight editor available: some good free editors in this category are Notepad++ for Windows and TextWrangler for the Mac; I've also used the non-free UltraEdit which is a little more powerful and available for Win/OSX/Linux. SlickEdit is a professional-level editor that is a few hundred dollars and probably overkill for most tasks, but some of my coworkers swear by it. (hopefully they don't swear at it!)

It wouldn't be fair to mention editors without mentioning GNU emacs -- this is a free open-source editor that originated when most operating systems were terminal-based, and most of its commands are activated with obscure keyboard sequences, if you like that sort of thing, which I don't. It is a very powerful editor that is customizable via Lisp programming. You can do just about anything in emacs if it can be displayed in a terminal.

Two major features in editors that I look for are the following:

- Large file support. If you have very large text files (multimegabyte), make sure you get an editor that can open a file without having to load all of it in memory at once. This is not true of Notepad, but it is true of Notepad++ and UltraEdit.

- "Find in files" functionality -- a typical source tree spans more than one file, and often you forget where you put something. (Well, I forget where I put things, at least!) Having the ability to search for a word or a pattern in multiple files is invaluable. The Unix utility "grep" allows you to do this at the command-line, but find-in-files features in editors allow you to double-click on the search results and jump to the particular lines within the editor.
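To make the "find in files" idea concrete, here is a minimal sketch of a grep-like search in Python. The function name and the default extensions (.c and .h) are assumptions chosen for illustration; an editor's built-in search will be faster and lets you jump straight to the match.

```python
import os
import re

def find_in_files(root, pattern, extensions=(".c", ".h")):
    """Yield (path, line_number, line) for every line under `root` matching the regex."""
    regex = re.compile(pattern)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            if not name.endswith(extensions):
                continue  # skip files that are not source code
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="replace") as f:
                for lineno, line in enumerate(f, start=1):
                    if regex.search(line):
                        yield path, lineno, line.rstrip("\n")
```

Calling list(find_in_files("src", r"\bmain\b")) would return each hit as a (path, line number, text) tuple, where "src" stands in for your own source directory.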

You may also need to edit binary files in a hex editor -- this lets you view and edit the character codes of nonprintable characters. Both Notepad++ and UltraEdit have hex edit modes. If you're on the Mac, try using the standalone Hex Fiend -- it's pretty good.
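If you only need to look at the bytes rather than edit them, the view a hex editor gives you can be approximated in a few lines of Python. This is a rough sketch with a made-up function name, not a replacement for a real hex editor.

```python
def hex_dump(data, width=16):
    """Format bytes as rows of: offset, hex codes, printable ASCII ('.' for the rest)."""
    rows = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        hex_part = " ".join(f"{b:02x}" for b in chunk)
        text_part = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        # Pad the hex column so the ASCII column lines up on a short last row.
        rows.append(f"{offset:08x}  {hex_part:<{width * 3 - 1}}  {text_part}")
    return "\n".join(rows)

print(hex_dump(b"Hello\x00\x01\xffworld"))
```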

There are also specialized editors for XML files -- in the free category, there's XML Notepad and the firstobject XML editor "foxe"; the user interface to foxe is a bit clunky, but it does very very well with large files.

4. Build tools -- if you're starting to write software in an IDE, you can just click "Build" and the program will compile your source code for you. Beware of this practice. It means you're letting the IDE worry about the details, which is fine for small systems where you're learning something, but there are lots of options to change the way your software is compiled, and in an IDE these are buried in menus, and not easily transferred from one project to another. If you're lucky, you can identify the files used to store the build settings, so that you can check them into your revision control system, because otherwise you're not storing your complete project source.

Professional software engineers -- again, this is my opinion -- should be using a defined build tool. The standard plain-vanilla solution (I just typed "pain-vanilla" by mistake and was very tempted to keep it that way) is a program called make which was introduced in the 1970s and is unfortunately still used. Makefiles consist of a bunch of lines that look like this:

foo.obj: foo.c
	cc -o $@ $<

These are "rules" that tell make how to build your software by turning source files (e.g. "foo.c" above) into target files (e.g. "foo.obj") by executing a program. ("cc" in this case.) They also dictate dependencies: suppose file F3 depends on running command X to create file F3 from file F2, and file F2 depends on running command Y to create file F2 from file F1 -- a build tool will be able to infer the dependency graph, so that if you change file F1, it can figure out that it has to rebuild files F2 and F3 accordingly. This may seem trivial, but in anything but a tiny software project, it's really important. I have been working on a relatively simple software project that has a few dozen source files, and it takes 5 minutes to compile all the files. Having a proper build tool that captures the dependencies correctly will allow you to perform an incremental build and only compile the ones that are necessary based on the changes you've made.
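The F1/F2/F3 example can be sketched in Python. This is a simplified illustration of the idea, not how make is implemented: real build tools compare timestamps or content hashes, while this sketch assumes you already know which files changed. The function name and the rules dictionary are made up.

```python
def targets_to_rebuild(rules, changed):
    """Return the set of targets that are out of date after `changed` files are edited.

    `rules` maps each target to the list of files it is built from.
    """
    stale = set()
    frontier = set(changed)
    while frontier:
        # A target is stale if any of its sources changed or was just rebuilt.
        newly_stale = {target for target, sources in rules.items()
                       if target not in stale and frontier.intersection(sources)}
        stale |= newly_stale
        frontier = newly_stale
    return stale

rules = {"F2": ["F1"], "F3": ["F2"], "G2": ["G1"]}
print(sorted(targets_to_rebuild(rules, ["F1"])))  # prints ['F2', 'F3']
```

Changing F1 marks F2 and then F3 as stale, while the unrelated target G2 is left alone; that is the essence of an incremental build.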

There are many shortcomings of make; among other things the syntax of makefiles is rather cryptic, and it's difficult to handle special cases where you need to do special things at compile-time without writing separate scripts to do them.

For Java programs, the standard tools are ant and maven; these make things easier, and can be used to build other systems besides Java software, but they are not often used outside the Java world.

There are plenty of other build tools as well. In the last few years I have started to shift my preference from tools with a declarative syntax (e.g. makefiles or ant scripts) to tools with a more general-purpose syntax. The idea here is that for most simple tasks, you can describe these very briefly, but if you want to do something complicated, you have the full power of a general-purpose programming language. (For example, if you wanted to use a command-line option "-On" in a particular build task where "n" was a number equal to the size of a file modulo 7, you could write a function to do that automatically.) These include rake (based around Ruby) and gradle (based around Groovy) and scons and waf (both based around Python). I've used scons for about 3 years because I know Python, and a coworker recommended scons to me. It works well for some things and is extremely flexible, but for other things it's really hard to change the default behavior of scons to do what you really want. Lately I've just started using waf and I find it's much simpler to do what I need, so I'd recommend you look at waf if you have a new project, though I wish it had a less cryptic name.
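The contrived "-On" example is only a couple of lines in a general-purpose language. The sketch below is plain Python and deliberately does not use the scons or waf APIs; the function name is made up, and how you would hook it into a particular build tool is left open.

```python
import os

def optimization_flag(path):
    """Return "-On", where n is the size of the file at `path` in bytes, modulo 7."""
    return f"-O{os.path.getsize(path) % 7}"
```

For a 10-byte file this returns "-O3". Expressing the same thing in a purely declarative makefile usually means calling out to a separate script.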

5. Scripting tools -- Sometimes you need to put together a quick piece of software to do something. It's often hard to do this in C or C++ because you have to spend energy writing code to parse strings or read files or whatever, and then you have to compile the C/C++ program into one executable per computer platform. Ugh.

The better solution is to use a scripting language. These are usually interpreted computer languages, with versions of the interpreter available for most operating systems, so if you write a script, you should be able to use it on multiple operating systems.

Examples of modern scripting languages are Python, Ruby, and Groovy. (I prefer Python.) Earlier languages include awk and Perl. I have avoided using Perl for a couple of reasons: it has a very strange and cryptic syntax, with different prefixes for different types ($foo is a single variable, but @foo is an array), and encourages use of "pseudovariables" (<> and $_) with side effects that depend implicitly on other actions that have been executed in the program. These encourage bad programming style: making short cryptic scripts that can do something clever in a few lines of punctuation is not easily read or maintained by others. I am embarrassed to admit that 10 years ago I used to use awk a lot, because it was simpler than perl; awk is an ancient text processing scripting language that had its place, but in some ways it's just as bad as Perl in encouraging bad programming practices.

Whatever your preference, I would strongly recommend using a language that you can test in a debugger. My experience in awk was awful, and when debugging programs I'd have to put in some print statements to figure out what was going on. I am very happy with Python because there's a great plugin for Eclipse called pydev, where you can set breakpoints and single-step through your scripts.

I also use a program called JSDB from time to time. JSDB is a standalone JavaScript shell built using the SpiderMonkey JavaScript engine found in the Firefox web browser, which also includes a number of utility classes for accessing files, databases, network streams, serial ports, etc. It doesn't have an integrated debugger (although there's an odd debugger that has kind of an instant webserver so you can point your web browser to a port on your computer and it will allow you to debug your program), and it's a little quirky sometimes, but it's much more lightweight than Python and for some things I find I can get going much more quickly.

All of the scripting languages I've mentioned in this section are free.

6. Numerical analysis tools -- Unless you are doing something really simple like an internet-enabled traffic light, chances are you're doing some kind of math in an embedded system project. Maybe you want to graph data, or fit a curve to data, or solve an equation, or design a low-pass filter, or explore how something in your system varies when you change a parameter. Numerical analysis tools will help you with these tasks, and include programs like MATLAB, Mathematica, and MathCAD. None of these are free, and a fully outfitted version of MATLAB with all the toolboxes can run you into thousands of dollars, but they provide a lot of functionality. MATLAB specializes in data analysis, and Mathematica specializes in symbolic algebra. MathCAD lies somewhere in between, with a what-you-see-is-what-you-get approach: every operation used to derive the output you see in a MathCAD worksheet is visible in that worksheet, so it's very transparent, and if you print out the worksheet someone can see all the steps needed to duplicate your efforts. Compare this to an Excel spreadsheet for example, where what you see are the results of an operation; to look at the steps needed to perform that operation you have to look in each of the cells and see if there is a formula there.

MATLAB also has a few free software "clones": SciLab, Octave, and PyLab all offer some of the base functionality MATLAB offers, with syntax that is either identical or very similar, but none are as polished and professional as MATLAB. PyLab's extra selling point is that it's implemented using Python and offers scientific and graphing libraries for Python, so if you know Python you can leverage that knowledge in doing scientific computation.
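As a small taste of this kind of work in Python, here is a sketch of fitting a straight line to data by least squares. It uses only the standard library so that it runs anywhere; in practice you would more likely reach for a scientific library such as NumPy (for example numpy.polyfit). The function name and sample data are made up.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept; returns (slope, intercept)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical measurements that lie exactly on y = 2x + 1.
slope, intercept = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(slope, intercept)  # prints 2.0 1.0
```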

I have a love/hate relationship with MATLAB. On the one hand, it's a beautiful piece of software that lets you do numerical analysis and visualization, with additional toolboxes for just about everything under the sun (signal processing, filter design, control loop simulation, etc.). On the other hand, it's not cheap, and the folks at MathWorks that sell MATLAB seem to blithely pretend that its cost isn't of concern as they show off their latest features.

My last word on numerical analysis tools is a plea and a short rant:

Don't use Excel for numerical analysis!

When I started work as an electrical engineer in 1996, MATLAB was unfamiliar to me, so when I had to graph data, I used what I knew: Microsoft Excel. Excel is spreadsheet software aimed primarily at business applications, but it has the ability to read text-delimited files (like comma-separated value = CSV files) and let you graph the results, with some control over the way the plots are rendered, so it will work for graphing data. But if you do anything more than basic plots, you'll find it gets really difficult and frustrating really quickly. If you have one set of data to graph, maybe it's not that bad, and you can get it to look the way you want interactively. If you have a bunch of data files you want to graph in the same way, it's a real hassle and you'll end up doing the same things over and over again by hand. Sure, you can write macros in Visual Basic, which is what I did when I wanted to put more than one plot on a page and have the axes line up, but then you'll find that the object model available to you is weird and quirky and may not let you do what you want, and you start to think Bad Thoughts about certain people in Redmond, Washington. If you get to the point in Excel where you're writing macros, stop and rethink your situation. For all the time you invest in getting Excel to do what you want, you can spend that time instead in learning other software tools where it is much easier to graph and analyze data for scientific purposes rather than business purposes. Remember, the folks at Microsoft are trying to make software that sales and marketing people can use to solve their problems, and as engineers, we get whatever features happen to get tossed in for us.
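To illustrate the "same steps over and over" problem: once the processing is a script, applying it to a whole batch of data files is a loop rather than an afternoon of clicking. The sketch below assumes CSV files with a header row and a numeric column; the function names and the column name in the usage note are hypothetical.

```python
import csv

def summarize_column(path, column):
    """Return (min, max, mean) of a numeric column in a CSV file with a header row."""
    with open(path, newline="") as f:
        values = [float(row[column]) for row in csv.DictReader(f)]
    if not values:
        raise ValueError(f"no data rows in {path}")
    return min(values), max(values), sum(values) / len(values)

def summarize_files(paths, column):
    """Apply the same summary to every file, so no step is repeated by hand."""
    return {path: summarize_column(path, column) for path in paths}
```

A call like summarize_files(["run1.csv", "run2.csv"], "voltage") processes every file identically, and the same pattern extends to plotting each file with a graphing library.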

7. Documentation utilities -- often you will need to communicate your ideas to others, and there are many different types of software to help do this. Yes, there are the non-free Microsoft programs like Visio for flowcharts/graphs, Word for documents, and PowerPoint for presentations. Sometimes you want something free or more specialized, though, for particular kinds of documents.

- Graph visualization -- we're not talking about the x-y chart here, but rather something to visualize networks of nodes and edges. I've used graphviz (command-line based) and yEd (interactive) and would recommend both.

- Sequence diagrams -- these let you visualize use cases where a sequence of events causes software components to interact. Quick Sequence Diagram Editor is fairly basic but can help draw these diagrams.

- "Typesetting" tools (non-WYSIWYG software for producing documentation) -- docutils and sphinx both take reStructuredText (a sort of wiki-style markup language) and produce Python-style documentation; the effort needed to produce code documentation is fairly low, and although both are intended for Python, it's not hard to use them for any documentation. I gave up on TeX and LaTeX a while ago, but that's a personal quirk of mine, and both are used heavily in the scientific community, so you'll find a lot of support out there. There's also DocBook. Or you can give in to WYSIWYG software. Beware.

- "Self-documenting" code tools: Doxygen is one of the most common of these, and turns comments in your code into documentation; Javadoc is a Java-specific documentation tool.

8. Terminal and communications software: When you need to communicate over serial ports, it's time to use terminal software. Before web browsers, this was a lot more common. Which software is good? Friends don't let friends use the version of HyperTerminal bundled with Windows -- it's not very robust -- and Microsoft finally stopped including it with Windows when they released Vista. My favorites are PuTTYtel and TeraTerm Pro.

Other communications software handles FTP and its secure variants, SFTP and SCP. The hands-down winner in my book is Cyberduck -- it's really easy to use, and while it was originally Mac-only, it's now available for both OSX and Windows. (besides, how can you not like a program that has a rubber duckie for a logo?)

9. Software quality assurance (QA) tools: If you write software and you think you write bug-free software, you are fooling yourself. There are a lot of software tools out there which can help you find bugs before they find you. Okay, here's where I have to admit I'm really just a beginner, and am not very familiar with what's out there. (Shame on me!) But I do know enough to suggest some places to look. Some of the major categories here are the following:

- Static analysis tools. This is software that parses your software, either through the raw source code or the compiled object files. The most well-known example is lint for C. Lint is like the English teacher you had in high school who spilled red ink all over your papers, and who complained when you used "who" instead of "whom", or if you ended sentences with a preposition. Do you need to follow all these rules rigorously in order to communicate effectively in English? No, but doing so will help you prevent errors. It's the same thing in C -- writing code that avoids certain poor patterns will help you prevent errors, and lint helps you find those. Java has FindBugs and there are similar programs available for other computer languages. Also in this category is software that measures code complexity -- good software design generally keeps function size small; if you find you're writing functions that have more than 20-30 lines of code, chances are you can split them up into smaller chunks that are more easily designed and debugged.

- Unit testing and code coverage. It's generally recognized that testing large software packages is extremely difficult, and it's much easier to test smaller software modules using "unit tests" which are written to try various input patterns on a single module of code. Unit testing in embedded systems can be difficult; one approach is to cross-compile software on a PC and hope that any bugs in your software can be detected in the PC-compiled version. It's also helpful when you can write automated test scripts, so that when you make a change to your software, you can run the test scripts and check whether you introduced any new bugs. For code coverage, some of my coworkers in the medical industry use Cantata++, which isn't cheap, but then again these are medical devices.

- Code "beautifiers" -- if you and your coworkers all write using the same code style, then you can catch stupid syntax bugs more easily. Normally we have our own style, but there's software out there which can do auto-indent, and convert tabs to spaces and whatnot. One that I've looked at is called Uncrustify, along with a GUI tool called UniversalIndentGUI.
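To show what a unit test looks like in its smallest form, here is a sketch using Python's standard unittest module. The clamp function is a made-up stand-in for a small module of your own; for embedded C code the same idea applies with a C test framework and a PC cross-compile.

```python
import unittest

def clamp(value, low, high):
    """Limit `value` to the range [low, high]."""
    return max(low, min(value, high))

class ClampTest(unittest.TestCase):
    def test_inside_range_is_unchanged(self):
        self.assertEqual(clamp(5, 0, 10), 5)

    def test_below_range_returns_low(self):
        self.assertEqual(clamp(-3, 0, 10), 0)

    def test_above_range_returns_high(self):
        self.assertEqual(clamp(42, 0, 10), 10)

def run_tests():
    """Run the tests above and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling run_tests() after every change gives a quick pass/fail answer, which is what makes automated test scripts worth the setup effort.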

10. Basic command-line utilities

Many of the following programs are UNIX command-line utilities from the days when there were no graphical user interfaces, and things had to be done by hand. Unless there's some revolutionary improvement in GUIs, there will always be a lot more you can do through the command-line, just because there are more combinations of things you can do via scripts. If you're running OSX or Linux, you already have these; if you're running Windows you can download binary versions that will run on your OS at http://unxutils.sourceforge.net/ or http://sourceforge.net/projects/unxutils/

- less -- this is probably the one I use most; it lets you display the contents of a file page by page. You can also search for text content, or if you are viewing a log file that is being updated continuously, you can type F and it will continuously show you the latest lines being appended.

- grep -- lets you search a group of files for a regular expression.

- touch -- This updates the latest-modified-time of a file to the current date/time, or if a file does not exist, it creates an empty file.

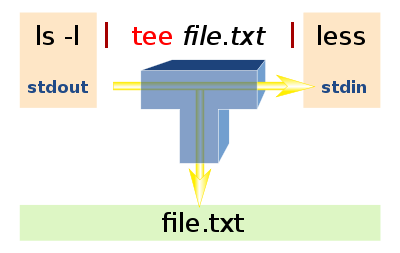

- tee -- piping a command through tee (e.g. someprog | tee logfile) lets you copy the output of that command to a file while also printing the output to the terminal.

- which -- If you run a program foo.exe at the terminal and you want to know where that program is located, just type

    which foo.exe

- head and tail -- These let you print the first or last N lines of a file.

- uniq -- filters output to remove identical successive lines.

- du -- prints disk usage (total space taken) of a directory.

- wc -- counts lines, words, and characters of a file. (I use it when writing a Letter to the Editor so I can keep my word count under the maximum a newspaper allows.)

- cp, rm, mv, ls, cat -- these all have DOS equivalents (copy, delete, rename, dir, type), but the UNIX equivalents are sometimes expected by scripts so I have these installed in my path.

- md5sum -- useful for verifying file integrity by calculating the MD5 hash of a file.

- gzip and gunzip -- these compress and uncompress individual files; the .gz format is less common than .zip but you will find it occasionally, especially in webpages that are transmitted in compressed form.

- wget and curl -- these are programs that allow you to download web pages via HTTP outside your browser, for example if you want to download a known webpage directly to a file, or "screen-scrape" the contents of a page. They aren't standard UNIX programs; wget is from the GNU project, and curl is its own special thing. I like curl better in most cases as it lets you do more.
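If one of these utilities is missing on a machine, a scripting language can often stand in. As one example, here is a minimal Python sketch of what md5sum computes, using the standard hashlib module; the function name and chunk size are arbitrary choices.

```python
import hashlib

def md5_of_file(path, chunk_size=65536):
    """Return the MD5 hex digest of a file, read in chunks so large files need not fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Comparing the returned string against a published checksum tells you whether a download arrived intact.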

Well, that's all for this list. It was difficult paring down my list to 10; a few other programs like 7zip, CPU-Z, and System Explorer would have made the cut, but I wanted to stick to a list of ten.

Happy computing!

Chinese translation: http://www.csdn.net/article/2013-05-09/2815204-10-Software-Tools-You-Should-Know