Deep Residual Shrinkage Networks


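Author: 友成

Link: https://zhuanlan.zhihu.com/p/85238942

Source: Zhihu

Copyright belongs to the author. For commercial reprints, please contact the author for permission; for non-commercial reprints, please cite the source.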

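Deep Residual Shrinkage Networks (DRSN) are an improved form of Deep Residual Networks. As the name suggests, a deep residual shrinkage network is a network that applies "shrinkage" to the "residual path" of a "deep residual network". Its design reflects one idea: during feature learning, removing redundant information is also very important.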

1. Deep Residual Network Basics

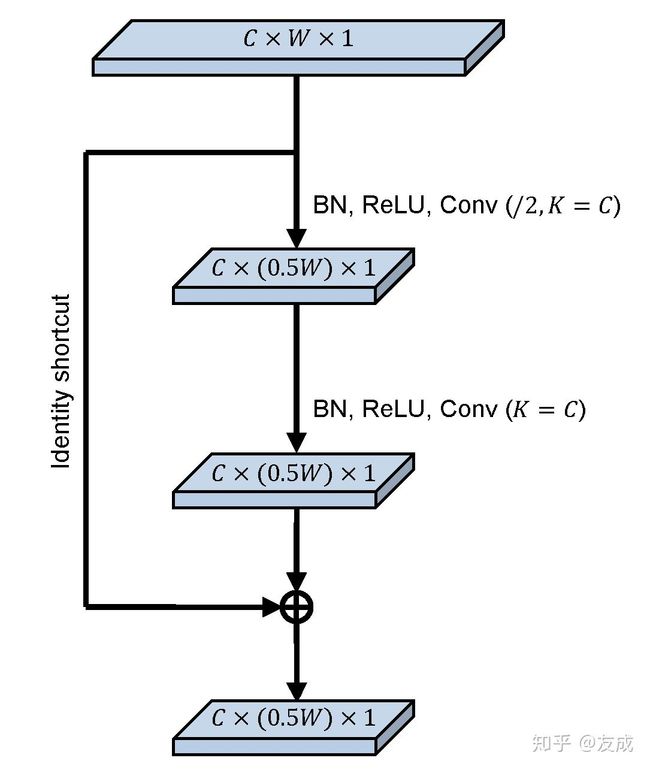

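The Residual Building Unit (RBU) is the basic component of a deep residual network. As shown in the figure, each cuboid represents a feature map with C channels, width W, and height 1. A residual building unit can contain two Batch Normalization (BN) layers, two Rectified Linear Unit (ReLU) activation functions, two convolutional layers, and an identity shortcut. The identity shortcut is the core contribution of deep residual networks and greatly reduces the difficulty of training deep neural networks. K denotes the number of convolutional kernels in a convolutional layer; in this figure K equals the number of channels C of the input feature map, so the output feature map has the same size as the input feature map.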
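To make the data flow of a residual building unit concrete, here is a minimal NumPy sketch of its forward pass for feature maps of height 1, stored as (batch N, channels C, width W). It rests on simplifying assumptions: batch normalization uses batch statistics without the learnable scale and shift, the convolutions have kernel size 3, zero "same" padding and no bias, and `batch_norm`, `conv1d_same` and `residual_unit` are hypothetical helper names, not functions from the programs at the end of this article.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    # x: (N, C, W). Normalize each channel with batch statistics (training mode);
    # the learnable scale and shift are omitted for brevity.
    mean = x.mean(axis=(0, 2), keepdims=True)
    var = x.var(axis=(0, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def relu(x):
    return np.maximum(x, 0.0)

def conv1d_same(x, w, stride=1):
    # x: (N, C_in, W); w: (K, C_in, 3). Zero-pad one position on each side.
    n, _, width = x.shape
    xp = np.pad(x, ((0, 0), (0, 0), (1, 1)))
    out_w = (width + stride - 1) // stride  # ceil(W / stride)
    out = np.empty((n, w.shape[0], out_w))
    for j in range(out_w):
        window = xp[:, :, j * stride : j * stride + 3]   # (N, C_in, 3)
        out[:, :, j] = np.einsum("ncs,kcs->nk", window, w)
    return out

def residual_unit(x, w1, w2):
    # BN -> ReLU -> conv -> BN -> ReLU -> conv on the residual path,
    # then add the identity shortcut (K == C, stride 1, so shapes match).
    r = conv1d_same(relu(batch_norm(x)), w1)
    r = conv1d_same(relu(batch_norm(r)), w2)
    return r + x
```

With all-zero kernels the residual path outputs zeros and the unit reduces to its identity shortcut, so a unit can fall back to passing its input through unchanged.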

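In a residual building unit, the width of the output feature map can change. For example, in the figure below, setting the stride of the convolutional kernels to 2 (denoted "/2") halves the width of the output feature map to 0.5W.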

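The number of channels of the output feature map can also change. For example, in the figure below, if the number of kernels in the convolutional layer is set to 2C, the output feature map has 2C channels, i.e., the channel count doubles.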
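When a unit halves the width and doubles the channels, the identity shortcut no longer has the same shape as the residual path, so it must be adapted before the addition. The Keras and TFLearn programs at the end of this article do this with 1×1 average pooling of stride 2 followed by zero padding of the channel axis. The NumPy sketch below mirrors that idea for height-1 feature maps stored as (N, C, W); `adapt_shortcut` and `same_output_width` are hypothetical names used only for illustration.

```python
import numpy as np

def same_output_width(width, stride):
    # A 'same'-padded convolution with stride s produces ceil(W / s) positions.
    return -(-width // stride)

def adapt_shortcut(x, stride, out_channels):
    # x: (N, C, W). Average pooling with a 1x1 window and stride s simply keeps
    # every s-th position, so the shortcut width becomes ceil(W / s).
    x = x[:, :, ::stride]
    extra = out_channels - x.shape[1]
    # Zero-pad the channel axis; when extra is odd, the additional channel goes last.
    return np.pad(x, ((0, 0), (extra // 2, extra - extra // 2), (0, 0)))
```

For W = 8 and C = 4, `adapt_shortcut(x, 2, 8)` returns shape (N, 8, 4), the same shape a stride-2 convolution with 2C = 8 kernels produces, so the two paths can be added element-wise.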

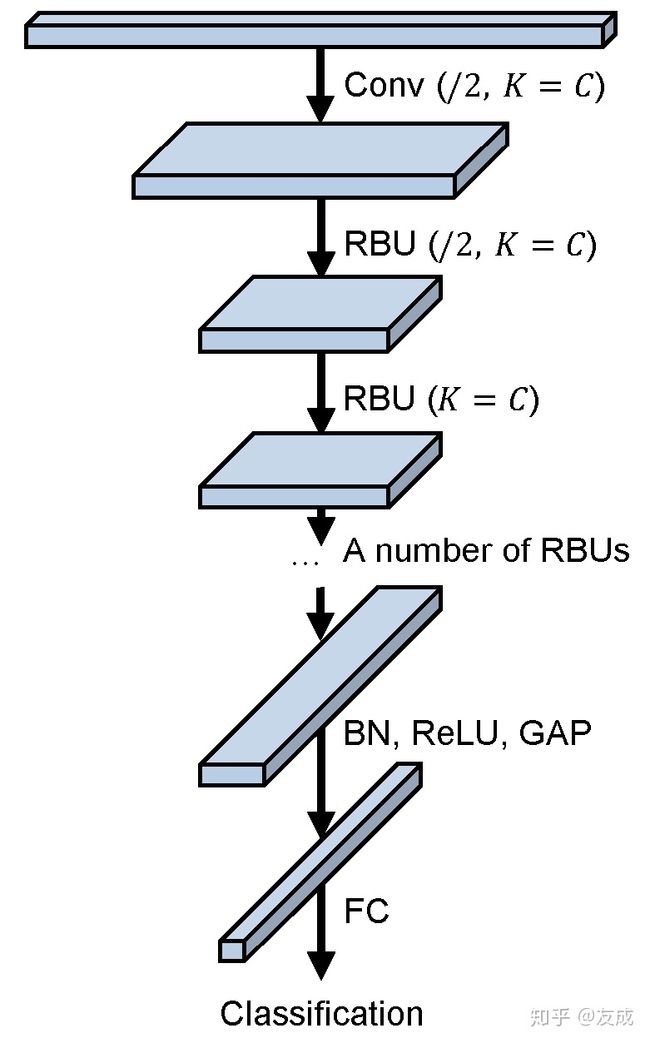

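The overall structure of a deep residual network is shown in the figure below. From front to back, it consists of a convolutional layer, a number of residual building units, a batch normalization layer, a ReLU activation, a global average pooling layer, and a fully connected output layer. The main body of the network is made up of many residual building units. When the network is trained with backpropagation, the loss can propagate backward not only layer by layer through the convolutional layers but also, more directly, through the identity shortcuts, which makes it easier to train a better model.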
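The claim about easier backpropagation can be made precise with the standard argument from He et al.'s follow-up work on identity mappings. It assumes every shortcut is a pure identity (no pooling or padding) and that nothing is applied after the addition, which matches the pre-activation unit described above. Writing $\mathcal{F}$ for the residual path and $\mathcal{E}$ for the loss:

$$
x_{l+1} = x_l + \mathcal{F}(x_l)
\quad\Longrightarrow\quad
x_L = x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i)
$$

$$
\frac{\partial \mathcal{E}}{\partial x_l}
= \frac{\partial \mathcal{E}}{\partial x_L}\,\frac{\partial x_L}{\partial x_l}
= \frac{\partial \mathcal{E}}{\partial x_L}\left(1 + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1}\mathcal{F}(x_i)\right)
$$

The constant term 1 carries the gradient from unit $L$ straight back to unit $l$, so the gradient does not vanish just because the derivatives along the residual paths are small.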

2. Deep Residual Shrinkage Networks

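Deep residual shrinkage networks target signals that contain "noise". They insert soft thresholding into the residual building unit as a "shrinkage layer" and propose a method for setting the thresholds adaptively. Here, "noise" can be understood broadly as "feature information unrelated to the current task".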

2.1 Soft Thresholding

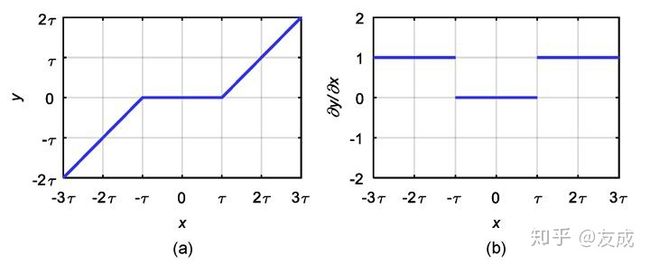

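First, the concept of soft thresholding. Soft thresholding is a function that shrinks its input toward zero and is often used in signal denoising algorithms. It is defined as:

$$
y=\begin{cases}
x-\tau, & x>\tau\\
0, & -\tau\le x\le\tau\\
x+\tau, & x<-\tau
\end{cases}
$$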

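Here x is the input feature, y the output feature, and τ the threshold. The threshold must be positive and must not be too large: if it exceeds the absolute value of every input feature, the output y is identically zero and soft thresholding becomes meaningless. The derivative of the soft thresholding function is:

$$
\frac{\partial y}{\partial x}=\begin{cases}
1, & x>\tau\\
0, & -\tau\le x\le\tau\\
1, & x<-\tau
\end{cases}
$$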

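As can be seen, the derivative of soft thresholding is either 0 or 1. ReLU has the same property: a gradient passing through is either blocked or passed on unchanged, never scaled up or down, so soft thresholding likewise helps prevent vanishing and exploding gradients. Plotting the two formulas above gives the figure below: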
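Both formulas translate directly into vectorized NumPy. This is a minimal sketch; `soft_threshold` and `soft_threshold_grad` are illustrative names, and at the kinks |x| = τ the derivative is taken as 0, treating -τ ≤ x ≤ τ as the zero region.

```python
import numpy as np

def soft_threshold(x, tau):
    # y = x - tau for x > tau, 0 for |x| <= tau, x + tau for x < -tau
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def soft_threshold_grad(x, tau):
    # dy/dx = 1 where |x| > tau and 0 where |x| <= tau
    return (np.abs(x) > tau).astype(float)
```

The `sign(x) * max(|x| - tau, 0)` form is the same expression both programs at the end of this article use for their shrinkage step.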

2.2 Network Architecture

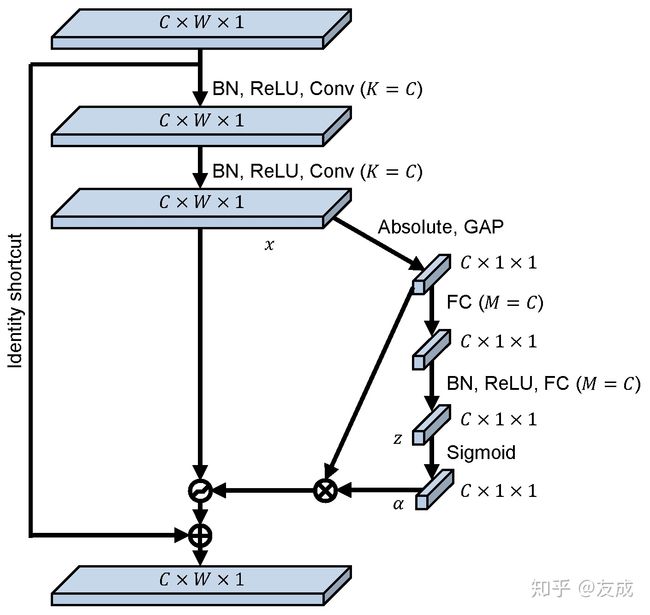

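This part first introduces an improved residual building unit. Compare Figure 1 and Figure 6: relative to Figure 1, the residual unit in Figure 6 contains an additional small subnetwork whose role is to set the threshold adaptively. Looking closely at this subnetwork, the threshold it produces is (the average of the absolute values of the feature map) × (a coefficient α). Because α is the output of a sigmoid function, it lies between 0 and 1. This makes the threshold positive and also keeps it from being too large: since α < 1, the threshold is smaller than the mean absolute value of the features, which can never exceed the largest absolute value, so soft thresholding never sets all outputs to zero.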
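The threshold rule of this channel-shared subnetwork can be sketched in NumPy for a single sample with feature map shape (H, W, C). This is illustrative only: the weights are placeholders that would normally be learned, the batch normalization inside the subnetwork is omitted, the hidden width is assumed to be C, and `channel_shared_threshold` is a hypothetical helper.

```python
import numpy as np

def channel_shared_threshold(x, w1, b1, w2, b2):
    # x: (H, W, C) feature map of one sample.
    abs_mean_c = np.abs(x).mean(axis=(0, 1))         # global average of |x| per channel, (C,)
    hidden = np.maximum(abs_mean_c @ w1 + b1, 0.0)   # FC + ReLU, (C,) @ (C, C) -> (C,)
    logit = hidden @ w2 + b2                         # FC to a single unit, (C,) @ (C,) -> scalar
    alpha = 1.0 / (1.0 + np.exp(-logit))             # sigmoid keeps alpha in (0, 1)
    return alpha * abs_mean_c.mean()                 # tau = alpha * mean(|x|), one value for all channels
```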

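Replacing the basic residual building units in Figure 4 with the improved unit RSBU-CS (residual shrinkage building unit with channel-shared thresholds) in Figure 6 yields the first variant, the deep residual shrinkage network with channel-shared thresholds (DRSN-CS), shown in the figure below: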

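Next comes another improved residual building unit. Compared with Figure 6, the threshold obtained by the unit in Figure 8 is not a single value but a vector: each channel of the feature map has its own shrinkage threshold.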
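The channel-wise variant changes only the last fully connected layer (C outputs instead of one) and multiplies each α_c by that channel's own mean of |x|. The NumPy sketch below assumes placeholder weights and no batch normalization in the subnetwork; `channel_wise_shrink` is a hypothetical name, and the hidden width D is a free choice (the Keras program at the end of this article uses C units, the TFLearn one C/4).

```python
import numpy as np

def channel_wise_shrink(x, w1, b1, w2, b2):
    # x: (H, W, C) feature map of one sample; returns (shrunk map, thresholds).
    abs_mean = np.abs(x).mean(axis=(0, 1))               # (C,)
    hidden = np.maximum(abs_mean @ w1 + b1, 0.0)          # FC + ReLU, (C,) -> (D,)
    alpha = 1.0 / (1.0 + np.exp(-(hidden @ w2 + b2)))     # FC + sigmoid, (D,) -> (C,)
    tau = alpha * abs_mean                                # one threshold per channel
    # Broadcasting (H, W, C) against (C,) applies tau[c] to channel c only.
    y = np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)
    return y, tau
```

If one channel has amplitude around 0.1 and another around 10, a single shared τ sized for the large channel could wipe out the small one entirely; per-channel thresholds avoid that.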

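Similarly, replacing the basic residual building units in Figure 4 with the improved unit RSBU-CW (residual shrinkage building unit with channel-wise thresholds) in Figure 8 yields the second variant, the deep residual shrinkage network with channel-wise thresholds (DRSN-CW), shown in the figure below: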

3. Experimental Validation

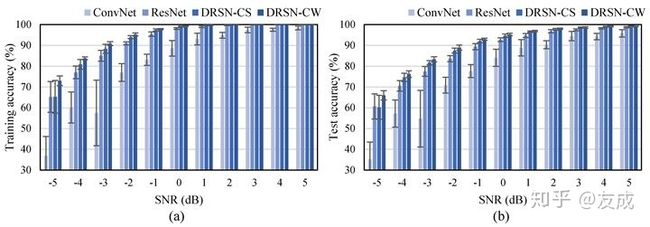

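The paper collected vibration signals under 8 different health conditions to validate the classification performance of deep residual shrinkage networks. To show how well they suppress noise, Gaussian noise, Laplacian noise, and pink noise were added to the vibration signals at different levels, with signal-to-noise ratios (SNR) from -5 dB to 5 dB, and the results were compared with a convolutional neural network (ConvNet) and a deep residual network (ResNet).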
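The article does not say exactly how the noise was generated. Assuming the usual definition SNR = 10·log10(P_signal / P_noise) in dB, with power measured as the mean square, noise at a target SNR can be added as in the NumPy sketch below. `add_noise_at_snr` is a hypothetical helper, and pink noise, which requires spectral shaping, is left out.

```python
import numpy as np

def add_noise_at_snr(signal, snr_db, kind="gaussian", rng=None):
    # SNR_dB = 10 * log10(P_signal / P_noise), with power taken as the mean square,
    # so the required noise power is P_noise = P_signal / 10 ** (SNR_dB / 10).
    rng = np.random.default_rng() if rng is None else rng
    p_signal = np.mean(signal ** 2)
    p_noise = p_signal / 10 ** (snr_db / 10)
    if kind == "gaussian":
        noise = rng.normal(0.0, np.sqrt(p_noise), size=signal.shape)
    elif kind == "laplace":
        # A Laplace(0, b) variable has variance 2 * b**2, so b = sqrt(P_noise / 2).
        noise = rng.laplace(0.0, np.sqrt(p_noise / 2), size=signal.shape)
    else:
        raise ValueError("unsupported noise kind: " + kind)
    return signal + noise
```

At -5 dB the noise power is about 3.16 times the signal power (10^0.5 ≈ 3.162), which shows how harsh the low end of the tested range is.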

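The figure below shows the results under different levels of Gaussian noise:

Next are the results under different levels of Laplacian noise:

Finally, the results under different levels of pink noise: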

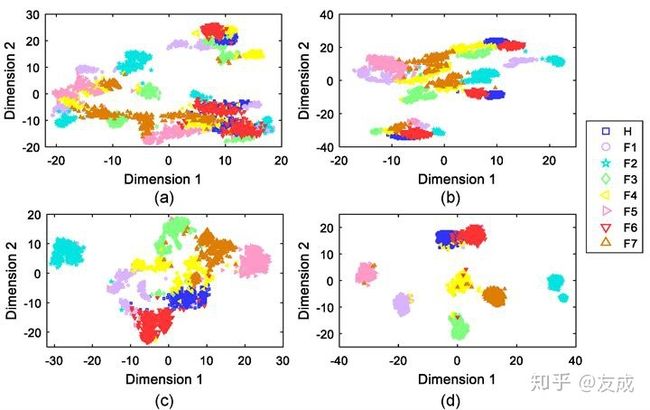

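Reducing the high-level features of a set of test samples to a two-dimensional plane and drawing them as a scatter plot gives the figure below:

Plotting the cross-entropy loss during training against the number of iterations gives the figure below: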

[Reprint] Original URL:

http://www.360doc.com/content/19/1002/20/66569649_864509261.shtml

M. Zhao, S. Zhong, X. Fu, B. Tang, M. Pecht, Deep Residual Shrinkage Networks for Fault Diagnosis, IEEE Transactions on Industrial Informatics, 2019, DOI: 10.1109/TII.2019.2943898

https://ieeexplore.ieee.org/document/8850096/

Keras program

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Sat Dec 28 23:24:05 2019
Implemented using TensorFlow 1.0.1 and Keras 2.2.1

M. Zhao, S. Zhong, X. Fu, et al., Deep Residual Shrinkage Networks for Fault Diagnosis,
IEEE Transactions on Industrial Informatics, 2019, DOI: 10.1109/TII.2019.2943898

@author: super_9527
"""
from __future__ import print_function
import keras
import numpy as np
from keras.datasets import mnist
from keras.layers import Dense, Conv2D, BatchNormalization, Activation
from keras.layers import AveragePooling2D, Input, GlobalAveragePooling2D, Lambda
from keras.optimizers import Adam
from keras.regularizers import l2
from keras import backend as K
from keras.models import Model

# The learning phase is not fixed globally: fit() runs BatchNormalization in
# training mode and evaluate() runs it in inference mode automatically.

# Input image dimensions
img_rows, img_cols = 28, 28

# The data, split between train and test sets
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Note: the shrinkage block below assumes channels_last (the Keras default)
if K.image_data_format() == 'channels_first':
    x_train = x_train.reshape(x_train.shape[0], 1, img_rows, img_cols)
    x_test = x_test.reshape(x_test.shape[0], 1, img_rows, img_cols)
    input_shape = (1, img_rows, img_cols)
else:
    x_train = x_train.reshape(x_train.shape[0], img_rows, img_cols, 1)
    x_test = x_test.reshape(x_test.shape[0], img_rows, img_cols, 1)
    input_shape = (img_rows, img_cols, 1)

# Noised data: scale pixels to [0, 1], then add uniform noise in [0, 0.5)
x_train = x_train.astype('float32') / 255. + 0.5 * np.random.random(x_train.shape)
x_test = x_test.astype('float32') / 255. + 0.5 * np.random.random(x_test.shape)
print('x_train shape:', x_train.shape)
print(x_train.shape[0], 'train samples')
print(x_test.shape[0], 'test samples')

# Convert class vectors to binary class matrices
y_train = keras.utils.to_categorical(y_train, 10)
y_test = keras.utils.to_categorical(y_test, 10)

def abs_backend(inputs):
    return K.abs(inputs)

def expand_dim_backend(inputs):
    # (batch, C) -> (batch, 1, 1, C) so the scales broadcast over height and width
    return K.expand_dims(K.expand_dims(inputs, 1), 1)

def sign_backend(inputs):
    return K.sign(inputs)

def pad_backend(inputs, in_channels, out_channels):
    # Zero-pad the channel axis symmetrically (assumes out_channels - in_channels is even)
    pad_dim = (out_channels - in_channels) // 2
    inputs = K.expand_dims(inputs, -1)
    inputs = K.spatial_3d_padding(inputs, ((0, 0), (0, 0), (pad_dim, pad_dim)), 'channels_last')
    return K.squeeze(inputs, -1)

# Residual shrinkage block with channel-wise thresholds
def residual_shrinkage_block(incoming, nb_blocks, out_channels, downsample=False,
                             downsample_strides=2):
    residual = incoming
    in_channels = incoming.get_shape().as_list()[-1]

    for i in range(nb_blocks):
        identity = residual
        if not downsample:
            downsample_strides = 1

        residual = BatchNormalization()(residual)
        residual = Activation('relu')(residual)
        residual = Conv2D(out_channels, 3, strides=(downsample_strides, downsample_strides),
                          padding='same', kernel_initializer='he_normal',
                          kernel_regularizer=l2(1e-4))(residual)
        residual = BatchNormalization()(residual)
        residual = Activation('relu')(residual)
        residual = Conv2D(out_channels, 3, padding='same', kernel_initializer='he_normal',
                          kernel_regularizer=l2(1e-4))(residual)

        # Calculate global means of |x| per channel
        residual_abs = Lambda(abs_backend)(residual)
        abs_mean = GlobalAveragePooling2D()(residual_abs)

        # Calculate scaling coefficients alpha in (0, 1)
        scales = Dense(out_channels, activation=None, kernel_initializer='he_normal',
                       kernel_regularizer=l2(1e-4))(abs_mean)
        scales = BatchNormalization()(scales)
        scales = Activation('relu')(scales)
        scales = Dense(out_channels, activation='sigmoid', kernel_regularizer=l2(1e-4))(scales)
        scales = Lambda(expand_dim_backend)(scales)

        # Calculate thresholds: tau = alpha * mean(|x|), one per channel
        thres = keras.layers.multiply([abs_mean, scales])

        # Soft thresholding: sign(x) * max(|x| - tau, 0)
        sub = keras.layers.subtract([residual_abs, thres])
        zeros = keras.layers.subtract([sub, sub])
        n_sub = keras.layers.maximum([sub, zeros])
        residual = keras.layers.multiply([Lambda(sign_backend)(residual), n_sub])

        # Downsampling (it is important to use a pool size of (1, 1))
        if downsample_strides > 1:
            identity = AveragePooling2D(pool_size=(1, 1),
                                        strides=(downsample_strides, downsample_strides))(identity)

        # Zero padding to match channels (it is important to use zero padding rather than 1x1 convolution)
        if in_channels != out_channels:
            identity = Lambda(pad_backend, arguments={'in_channels': in_channels,
                                                      'out_channels': out_channels})(identity)
            # Later blocks in this loop already have out_channels channels
            in_channels = out_channels

        residual = keras.layers.add([residual, identity])

    return residual

# Define and train a model
inputs = Input(shape=input_shape)
net = Conv2D(8, 3, padding='same', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(inputs)
net = residual_shrinkage_block(net, 1, 8, downsample=True)
net = BatchNormalization()(net)
net = Activation('relu')(net)
net = GlobalAveragePooling2D()(net)
outputs = Dense(10, activation='softmax', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(net)
model = Model(inputs=inputs, outputs=outputs)
model.compile(loss='categorical_crossentropy', optimizer=Adam(), metrics=['accuracy'])
model.fit(x_train, y_train, batch_size=100, epochs=5, verbose=1, validation_data=(x_test, y_test))

# Get results (evaluate() uses the BatchNormalization moving averages)
DRSN_train_score = model.evaluate(x_train, y_train, batch_size=100, verbose=0)
print('Train loss:', DRSN_train_score[0])
print('Train accuracy:', DRSN_train_score[1])
DRSN_test_score = model.evaluate(x_test, y_test, batch_size=100, verbose=0)
print('Test loss:', DRSN_test_score[0])
print('Test accuracy:', DRSN_test_score[1])
```

TFLearn program

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Mon Dec 23 21:23:09 2019
Implemented using TensorFlow 1.0 and TFLearn 0.3.2

M. Zhao, S. Zhong, X. Fu, B. Tang, M. Pecht, Deep Residual Shrinkage Networks for Fault Diagnosis,
IEEE Transactions on Industrial Informatics, 2019, DOI: 10.1109/TII.2019.2943898

@author: super_9527
"""
from __future__ import division, print_function, absolute_import

import tflearn
import numpy as np
import tensorflow as tf
from tflearn.layers.conv import conv_2d

# Data loading
from tflearn.datasets import cifar10
(X, Y), (testX, testY) = cifar10.load_data()

# Add noise (uniform in [0, 0.1))
X = X + np.random.random(X.shape) * 0.1
testX = testX + np.random.random(testX.shape) * 0.1

# Transform labels to one-hot format
Y = tflearn.data_utils.to_categorical(Y, 10)
testY = tflearn.data_utils.to_categorical(testY, 10)

def residual_shrinkage_block(incoming, nb_blocks, out_channels, downsample=False,
                             downsample_strides=2, activation='relu', batch_norm=True,
                             bias=True, weights_init='variance_scaling',
                             bias_init='zeros', regularizer='L2', weight_decay=0.0001,
                             trainable=True, restore=True, reuse=False, scope=None,
                             name="ResidualBlock"):
    # Residual shrinkage blocks with channel-wise thresholds
    residual = incoming
    in_channels = incoming.get_shape().as_list()[-1]

    # Variable scope fix for older TF
    try:
        vscope = tf.variable_scope(scope, default_name=name, values=[incoming],
                                   reuse=reuse)
    except Exception:
        vscope = tf.variable_op_scope([incoming], scope, name, reuse=reuse)

    with vscope as scope:
        name = scope.name  # TODO
        for i in range(nb_blocks):
            identity = residual
            if not downsample:
                downsample_strides = 1

            if batch_norm:
                residual = tflearn.batch_normalization(residual)
            residual = tflearn.activation(residual, activation)
            residual = conv_2d(residual, out_channels, 3,
                               downsample_strides, 'same', 'linear',
                               bias, weights_init, bias_init,
                               regularizer, weight_decay, trainable,
                               restore)

            if batch_norm:
                residual = tflearn.batch_normalization(residual)
            residual = tflearn.activation(residual, activation)
            residual = conv_2d(residual, out_channels, 3, 1, 'same',
                               'linear', bias, weights_init,
                               bias_init, regularizer, weight_decay,
                               trainable, restore)

            # Get thresholds and apply thresholding
            abs_mean = tf.reduce_mean(tf.reduce_mean(tf.abs(residual), axis=2, keep_dims=True), axis=1, keep_dims=True)
            scales = tflearn.fully_connected(abs_mean, out_channels//4, activation='linear', regularizer='L2', weight_decay=0.0001, weights_init='variance_scaling')
            scales = tflearn.batch_normalization(scales)
            scales = tflearn.activation(scales, 'relu')
            scales = tflearn.fully_connected(scales, out_channels, activation='linear', regularizer='L2', weight_decay=0.0001, weights_init='variance_scaling')
            scales = tf.expand_dims(tf.expand_dims(scales, axis=1), axis=1)
            thres = tf.multiply(abs_mean, tflearn.activations.sigmoid(scales))

            # Soft thresholding
            residual = tf.multiply(tf.sign(residual), tf.maximum(tf.abs(residual) - thres, 0))

            # Downsampling
            if downsample_strides > 1:
                identity = tflearn.avg_pool_2d(identity, 1,
                                               downsample_strides)

            # Projection to new dimension
            if in_channels != out_channels:
                if (out_channels - in_channels) % 2 == 0:
                    ch = (out_channels - in_channels)//2
                    identity = tf.pad(identity,
                                      [[0, 0], [0, 0], [0, 0], [ch, ch]])
                else:
                    ch = (out_channels - in_channels)//2
                    identity = tf.pad(identity,
                                      [[0, 0], [0, 0], [0, 0], [ch, ch+1]])
                in_channels = out_channels

            residual = residual + identity

    return residual

# Real-time data preprocessing
img_prep = tflearn.ImagePreprocessing()
img_prep.add_featurewise_zero_center(per_channel=True)

# Real-time data augmentation
img_aug = tflearn.ImageAugmentation()
img_aug.add_random_flip_leftright()
img_aug.add_random_crop([32, 32], padding=4)

# Build a Deep Residual Shrinkage Network with 3 blocks
net = tflearn.input_data(shape=[None, 32, 32, 3],
                         data_preprocessing=img_prep,
                         data_augmentation=img_aug)
net = tflearn.conv_2d(net, 16, 3, regularizer='L2', weight_decay=0.0001)
net = residual_shrinkage_block(net, 1, 16)
net = residual_shrinkage_block(net, 1, 32, downsample=True)
net = residual_shrinkage_block(net, 1, 32, downsample=True)
net = tflearn.batch_normalization(net)
net = tflearn.activation(net, 'relu')
net = tflearn.global_avg_pool(net)

# Regression
net = tflearn.fully_connected(net, 10, activation='softmax')
mom = tflearn.Momentum(0.1, lr_decay=0.1, decay_step=20000, staircase=True)
net = tflearn.regression(net, optimizer=mom, loss='categorical_crossentropy')

# Training
model = tflearn.DNN(net, checkpoint_path='model_cifar10',
                    max_checkpoints=10, tensorboard_verbose=0,
                    clip_gradients=0.)
model.fit(X, Y, n_epoch=100, snapshot_epoch=False, snapshot_step=500,
          show_metric=True, batch_size=100, shuffle=True, run_id='model_cifar10')

training_acc = model.evaluate(X, Y)[0]
validation_acc = model.evaluate(testX, testY)[0]
```